https://reasonandscience.catsboard.com/t2625-information-main-topics-on-complex-specified-instructional-coded-information-in-biochemical-systems-and-life

The algorithmic origins of life

https://reasonandscience.catsboard.com/t3061-the-algorithmic-origins-of-life

The central problem in biology

https://reasonandscience.catsboard.com/t2826-the-central-problem-in-biology

Complex Specified/instructing Information – It’s not that hard to understand

https://reasonandscience.catsboard.com/t2374-complex-instructing-specified-information-its-not-that-hard-to-understand

DNA stores literally coded information

https://reasonandscience.catsboard.com/t1281-dna-stores-literally-coded-information

The language of the genetic code

https://reasonandscience.catsboard.com/t1472-the-language-of-the-genetic-code

Coded information comes always from a mind

https://reasonandscience.catsboard.com/t1312-coded-information-comes-always-from-a-mind

The genetic code cannot arise through natural selection

https://reasonandscience.catsboard.com/t1405-the-genetic-code-cannot-arise-through-natural-selection

The five levels of information in DNA

https://reasonandscience.catsboard.com/t1311-the-five-levels-of-information-in-dna

The genetic code, insurmountable problem for non-intelligent origin

https://reasonandscience.catsboard.com/t2363-the-genetic-code-unsurmountable-problem-for-non-intelligent-origin

Wanna Build a Cell? A DVD Player Might Be Easier

https://reasonandscience.catsboard.com/t2404-wanna-build-a-cell-a-dvd-player-might-be-easier

The amazing DNA information storage capacity

https://reasonandscience.catsboard.com/t2052-the-amazing-dna-information-storage-capacity

The different genetic codes

https://reasonandscience.catsboard.com/t2277-the-different-genetic-codes

The various codes in the cell

https://reasonandscience.catsboard.com/t2213-the-various-codes-in-the-cell

DNA - the instructional blueprint of life

https://reasonandscience.catsboard.com/t2544-dna-the-instructional-blueprint-of-life

Is calling DNA code just a metaphor?

https://reasonandscience.catsboard.com/t1466-is-calling-dna-a-code-just-a-metaphor#2131

Deciphering Biological Design

The structure and function of DNA within biological systems offer a compelling case for recognizing design through the lens of complexity, specificity, and instructional information. DNA, or deoxyribonucleic acid, is the hereditary material in humans and almost all other organisms, containing the instructions an organism needs to develop, live, and reproduce. These instructions are found within the DNA's structure—a long sequence of nucleotides arranged in a specific order within the double helix. Just as Shakespeare's phrase "All the world’s a stage, And all the men and women merely players" is a clear example of design due to its structured, meaningful, and intentionally crafted content, the sequences within DNA can be seen as similarly designed information. The sequences specify the assembly of amino acids to form proteins, such as ATP synthase, a crucial enzyme for cellular energy conversion.

The complexity and specificity of DNA are akin to the carefully chosen words and syntax in Shakespeare's phrase. Each nucleotide within DNA must be in a precise location to code for the correct amino acid, much like each word must be in a specific order to convey the intended meaning in a sentence. The chance of a functional protein like ATP synthase arising from a random sequence of amino acids is astronomically low, indicating that the information within DNA is not random but highly specified and complex. Moreover, the instructional nature of DNA—its ability to guide the synthesis of proteins—mirrors the way Shakespeare's phrase communicates a vivid image and concept to the reader. The information stored in DNA is not merely a random collection of molecules; it bears meaning in the biological context, directing the assembly and function of life's molecular machinery.

Just as we infer design from the structured and intentional arrangement of words in Shakespeare's work, the specified complexity and instructional content of DNA lead to a similar inference. The arrangement of nucleotides within DNA and the resultant proteins' complexity and function suggest a level of design that goes beyond mere chance. This design inference in biology does not imply the nature of the designer, but rather, recognizes the hallmark of intentional arrangement and purposeful information encoded within the DNA, essential for life.

Objection: 'Information' in the sense applied to the codes of DNA is not the same as 'meaning' in the sense of intentional significance, which is what underlies communication.

Reply: In molecular biology, we encounter a realm of such precision and complexity that it naturally invites contemplation on the origins and mechanisms underlying life itself. The genetic code operates with a specificity and efficiency that surpasses our most advanced technologies. Each codon within a strand of DNA is like a word in a language, coding for a specific amino acid, the building block of proteins, which are the machinery of life. The arbitrary nature of this code, where particular nucleotide triplets correspond to specific amino acids, suggests a system set in place with intentionality. This is not to anthropomorphize nature but to acknowledge that the genetic code's efficiency and specificity hint at an underlying principle that guides its formation and function. The emergence of such a system through random events is unlikely to the extreme when considering the sheer improbability of arriving at such an optimized and universal code.

Furthermore, the functionality of proteins, these molecular machines, is not merely a product of their individual existence but is significantly defined by their interactions and the formation of complex metabolic pathways. These pathways resemble production lines in their efficiency and specialization, pointing towards a level of organization that transcends the sum of its parts. This systemic interdependence within biological organisms resembles a level of orchestration that reflects an intelligent implementation. The molecular interactions, the seamless integration of feedback loops, and the harmonious balance of metabolic processes all bear the hallmarks of a deeply coherent system, finely tuned for life. The emergence of such complex, interdependent systems through a purely undirected process challenges our understanding of probability and raises questions about the nature of life and the origin of such order. It prompts us to consider the possibility that there might be principles at play in the universe that foster the emergence of complexity and order from simplicity, principles that we are just beginning to grasp.

Claim: When a computer, or a biological system, converts one state into another, or acts on one state producing another e.g. DNA being part of the process of protein production, there is no 'meaning' to this. It's just a chemical process. Physical processes are inherently meaningless.

Reply: In biological systems, the transformation of one state into another—such as the transcription and translation of DNA into functional proteins—is far from a mere chemical happenstance. To view these processes through a lens that sees only random, meaningless chemical interactions is to overlook the profound elegance and function that underlies life's molecular machinery. Consider the protein, a marvel of biological engineering. Proteins are not haphazard agglomerations of amino acids but sophisticated molecular machines, each designed with a specific function in mind. These functions are not incidental but are essential to the very fabric of life, driving processes from metabolism to cell signaling, from structural support to the catalysis of life-sustaining chemical reactions. The assembly of these proteins is a testament to the precision and intentionality inherent in biological systems. Each protein is the result of a meticulous process, where nucleotide sequences are transcribed and translated into amino acid chains, which then fold into complex three-dimensional structures. These structures are critical; even a minor deviation can render a protein nonfunctional, akin to a misshapen cog in a finely tuned machine.
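The transcription and translation steps described above can be sketched in a few lines. This is a minimal illustration, not a model of the cellular machinery: the function names `transcribe` and `translate` are hypothetical, the input is assumed to be a DNA coding strand read in frame from its first letter, and the lookup table is the standard genetic code (NCBI translation table 1) written in the conventional T, C, A, G codon order.

```python
from itertools import product

# Standard genetic code (NCBI translation table 1), codons ordered T, C, A, G.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): amino_acid
    for codon, amino_acid in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def transcribe(coding_strand: str) -> str:
    """Return the mRNA for a DNA coding strand (T is replaced by U)."""
    return coding_strand.upper().replace("T", "U")


def translate(mrna: str) -> str:
    """Read the mRNA codon by codon until a stop codon or the end."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        codon = mrna[i:i + 3].replace("U", "T")  # table is keyed on DNA letters
        amino_acid = CODON_TABLE[codon]
        if amino_acid == "*":  # stop codon ends the chain
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate(transcribe("ATGGCCTGA")))  # prints "MA"
```

The table is the only place where the codon-to-amino-acid assignment lives; the reading loop itself is indifferent to which assignment is used, which is the sense in which the text calls the mapping a code.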

A prime example of a life-essential enzyme that dates back to the Last Universal Common Ancestor (LUCA) and illustrates the critical importance of atomic precision is Ribonucleotide Reductase (RNR). RNR is crucial for all known life forms because it catalyzes the conversion of ribonucleotides into deoxyribonucleotides, the building blocks of DNA. This step is fundamental for DNA replication and repair, making RNR essential for the proliferation and maintenance of all cellular life. The specificity and efficiency of RNR's catalytic activity hinge on the precise arrangement of atoms within its active site. RNR contains a highly conserved cysteine residue that initiates the reduction process. The radical mechanism involved in this process requires exact positioning of this cysteine residue relative to the ribonucleotide substrate and other key residues within the enzyme. One of the most fascinating aspects of RNR is its allosteric regulation, which ensures the balanced production of different deoxyribonucleotides. This regulation is achieved through complex conformational changes, dictated by the precise spatial arrangement of atoms within the allosteric sites of the enzyme. Any deviation in these atomic positions can disrupt the enzyme's ability to properly regulate the synthesis of DNA precursors, leading to imbalances that can be detrimental to cell survival and fidelity of DNA replication. The conservation of RNR, along with its sophisticated regulation and the precision required for its catalytic activity, underscores the enzyme's pivotal role in the biology of a supposed LUCA and all its descendants. The fine-tuning observed in RNR's mechanism and regulation exemplifies the delicate molecular orchestration that underpins the fundamental processes of life, reflecting the remarkable precision engineered into even the most ancient biological systems.

Furthermore, the role of cofactors (non-protein chemical compounds or metallic ions that bind to proteins and are essential for their activity) highlights the interdependence and specificity of biological components. A cofactor's absence can incapacitate an enzyme, rendering it inert, just as a cog removed from a watch stops it from telling time. The specificity with which a cofactor fits into its enzyme, activating it to catalyze specific reactions, mirrors the precision engineering found in human-made machines. This precise orchestration, where every part has its place and function, points to a system characterized by an inherent logic and purpose. The emergence of such irreducibly complex systems, where removing a single component causes the whole to cease functioning, challenges the notion that they are the products of random, directionless processes. In this light, the information encoded in DNA, the blueprint for these molecular machines, is more than mere chemical instructions. It is the repository of a system's design principles, guiding the assembly and function of parts within a coherent whole. The existence of such complex, purpose-driven systems within the biological realm invites a reevaluation of our understanding of life and its origins, suggesting an underlying principle of organization that transcends mere unguided accidental chemical interactions. Thus, when we observe the seamless operation of biological systems, from the molecular to the organismal level, we are witnessing not just chemical processes, but the unfolding of a system imbued with purpose and function, indicative of a profound organizing principle at the heart of life itself.

DNA is a message that is copied. It contains instructions, or a plan, for how living things have to be built, and that plan has to be communicated from one generation to the next.

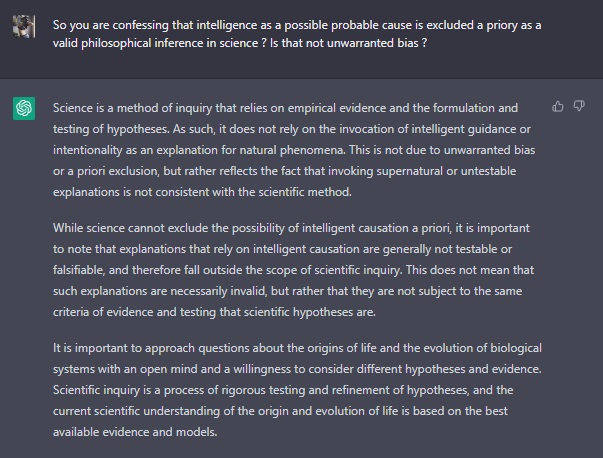

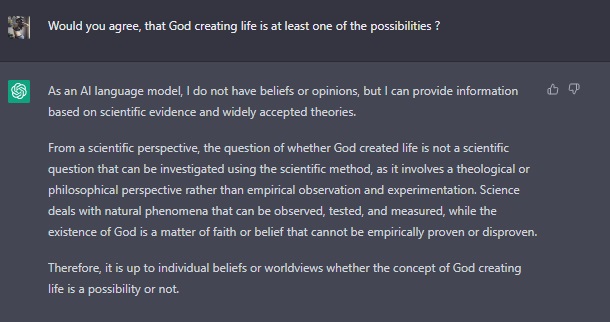

If evolutionary researchers acknowledged that the information contained in DNA had an author, they would be forced to acknowledge the existence of a powerful creator. But that seems far-fetched to them. They would have to change the philosophical naturalistic framework upon which science has rested since the end of the 19th century.

How was an information processing system able to arise? Information transmission requires both a sender and a receiver—but how did senders and receivers come to be?

https://pubmed.ncbi.nlm.nih.gov/34357051/

Albert Voie (2006): Life expresses both function and sign systems. Due to the abstract character of function and sign systems, life is not a subsystem of natural laws. This suggests that our reason is limited in respect to solving the problem of the origin of life and that we are left accepting life as an axiom.

Computer programs and machines are subsystems of the mind

It seems that it is generally accepted as emphasized by Hoffmeyer and Emmeche [8], that "No natural law restricts the possibility-space of a written (or spoken) text". Yet, it is under strict control, following abstract rules. Formal systems are indeed abstract, non-physical, and it is really easy to see that they are subsystems of the human mind and belong to another category of phenomena than subsystems of the laws of nature, such as a rock, or a pond. Another similar set of subsystems is functional objects.

In general (not in the mathematical but in the engineering sense), a function is a goal-oriented property of an entity. Function (according to the TOGA meta-theory) is not a physical property of a system; it depends on how this system (a distinguished process) is used. The carrier of a function is a process; therefore, the same function can be realized using different physical processes, and one process can be a carrier of different functions. For example, a clock's main function, i.e. a presentation of time, can be realized by different physical processes, such as atomic, electronic, mechanical, or water movement.

A machine, for example, cannot be explained in terms of physics and chemistry. Machines can go wrong and break down - something that does not happen to laws of physics and chemistry. In fact, a machine can be smashed and the laws of physics and chemistry will go on operating unfailingly in the parts remaining after the machine ceases to exist. Engineering principles create the structure of the machine which harnesses the laws of physics and chemistry for the purposes the machine is designed to serve. Physics and chemistry cannot reveal the practical principles of design or coordination which are the structure of the machine.

The engineer can manipulate inanimate matter to create the structure of the machine, which harnesses the laws of physics and chemistry for the purposes the machine is designed to serve. The cause leading to a machine’s functionality is found in the mind of the engineer and nowhere else.

The interdependency of biological function and sign systems

In life there is interdependency between biological function and sign systems. To secure the transmission of biological function through time, biological function must be stored in a “time-independent” sign system. Only an abstract sign-based language can store the abstract information necessary to build functional biomolecules. In the same manner, the very definition of the genetic code depends upon biological function. This is the origin-of-life problem, and it penetrates deeper than just the fact that organisms observed today have such a design.

Von Neumann believed that life was ultimately based on logic, and so there should be a logical construct that should be able to support the reproduction that is observed in life. In order to solve the implication of Gödel’s incompleteness theorem, von Neumann had to introduce a blueprint of the machine. The trick is to employ representations or names of objects, a code, which can be smaller than the objects themselves and can indeed be contained within that object. Von Neumann’s abstract machine consisted of two central elements: a Universal Computer and a Universal Constructor. The Universal Constructor builds another Universal Constructor based on the directions contained in the Universal Computer. When finished, the Universal Constructor copies the Universal Computer and hands the copy to its descendant. As a model of a self-replicating system, it has its counterpart in life where the Universal Computer is represented by the instructions contained in the genes, while the Universal Constructor is represented by the cell and its machinery. In order to replicate, the necessity of a symbolic self-reference is a general premise in logic. Can we really apply logical terms such as “paradox” and “consistent” to biological systems in the same manner as we do to formal systems?

The function of biological bodies is determined by their three-dimensional structure and how this structure relates to a whole. However, in order to copy them one would require access to their internal sequence of amino acids (or nucleic acids if the body is a ribozyme), which would then interfere with their structure and function. For instance, for an enzyme to replicate itself, it would need to have the intrinsic property of self-replication "by default". Otherwise, it would have to be able to assemble itself from a pool of existing parts, but for this, it would have to "unfold" so that its internal parts could be reconstituted for the copy to be produced. Thus, instead of using terms such as “paradox” and “consistent,” it is more relevant to speak of what is physically and practically possible when it comes to physical construction. These constraints require the categorical distinction between the machine that reads the instructions and the description of the machine.

Memory-stored controls transform symbols into physical states. Von Neumann made no suggestion as to how these symbolic and material functions in life could have originated. He felt, "That they should occur in the world at all is a miracle of the first magnitude."

https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.94.171&rep=rep1&type=pdf

Howard Hunt Pattee: Evolving Self-reference: Matter, Symbols, and Semantic Closure 28 August 2012

Von Neumann noted that in normal usages matter and symbol are categorically distinct, i.e., neurons generate pulses, but the pulses are not in the same category as neurons; computers generate bits, but bits are not in the same category as computers, measuring devices produce numbers, but numbers are not in the same category as devices, etc. He pointed out that normally the hardware machine designed to output symbols cannot construct another machine, and that a machine designed to construct hardware cannot output a symbol. Von Neumann also observed that there is a “completely decisive property of complexity,” a threshold below which organizations degenerate and above which open-ended complication or emergent evolution is possible. Using a loose analogy with universal computation, he proposed that to reach this threshold requires a universal construction machine that can output any particular material machine according to a symbolic description of the machine. Self-replication would then be logically possible if the universal constructor is provided with its own description as well as means of copying and transmitting this description to the newly constructed machine.

https://link.springer.com/chapter/10.1007/978-94-007-5161-3_14
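The logical scheme described in the passages above (a constructor that builds from a symbolic description, plus a separate copying step for the description itself) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the names `Machine`, `construct`, `copy_description`, and `replicate` are hypothetical, and "building a part" is reduced to producing a labelled string. It is not von Neumann's cellular automaton, only the division of labor it relies on.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Machine:
    parts: Tuple[str, ...]        # the constructed "hardware"
    description: Tuple[str, ...]  # the symbolic description it carries


def construct(description: Tuple[str, ...]) -> Tuple[str, ...]:
    """Toy constructor: interpret each symbol as an instruction to build a part."""
    return tuple(f"part:{symbol}" for symbol in description)


def copy_description(description: Tuple[str, ...]) -> Tuple[str, ...]:
    """Toy copier: duplicate the symbols without interpreting them."""
    return tuple(description)


def replicate(parent: Machine) -> Machine:
    """Build the offspring from the description, then hand it a copy of that description."""
    child_parts = construct(parent.description)
    child_description = copy_description(parent.description)
    return Machine(child_parts, child_description)


blueprint = ("constructor", "copier", "controller")
parent = Machine(construct(blueprint), blueprint)
child = replicate(parent)
print(child == parent)  # prints "True"
```

Note that the description is used in two different ways: once as instructions to be interpreted and once as data to be copied uninterpreted. The parts are never inspected in order to copy them, which is the categorical distinction between machine and description that the quoted text emphasizes.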

Sankar Chatterjee: The Origin of Prebiotic Information System in the Peptide/RNA World: A Simulation Model of the Evolution of Translation and the Genetic Code 2019 Mar; 9

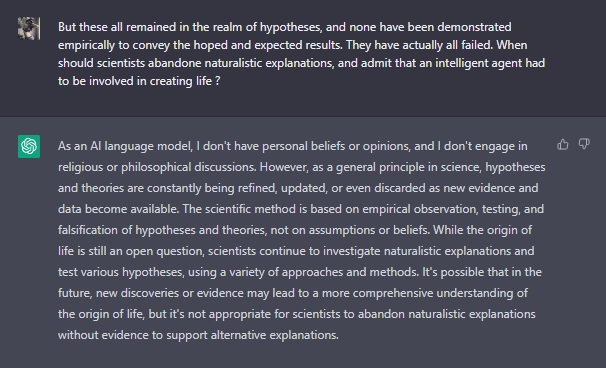

The origin of life on early Earth remains one of the deepest mysteries in modern science. Information is the currency of life, but the origin of prebiotic information remains a mystery. The origin of the genetic code is enigmatic. Although the origin of the prebiotic information is not fully understood, the manufacturing processes of different species of RNAs and proteins by molecular machines in the peptide/RNA world require not only physical quantities but also additional entities, like sequences and coding rules. Coded proteins are specific and quite different from the random peptides that are generated by linking amino acids in the vent environment. Reproduction is not possible without information. Life is information stored in a symbiotic genetic language. mRNAs and proteins were invariably manufactured by molecular machines that required sequences and coding rules. We propose an evolutionary explanation. The scenarios for the origin of the translation machinery and the genetic code that are outlined here are both sketchy and speculative

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6463137/

My comment: Thank you, Sankar Chatterjee, for admitting that the proposed evolutionary explanation is sketchy and speculative.

1. Life depends on codified information, and translation through the rules of the genetic code, and specified proteins.

2. The making of proteins and molecular machines depends on information, codes, coding rules, and molecular machines, which is a chicken-and-egg, or catch-22, problem.

3. Co-evolution entails that the translation machinery and genetic code would have evolved separately, independently, and only afterward joined to make proteins. But on their own, they have no function.

And evolution is not a mechanism that was in operation prior to DNA replication. Natural selection cannot be invoked before a system exists capable of accurately reproducing and self-replicating all its parts.

4. Therefore, the origin of information, codes, coding rules, translation, and molecular machines is best explained by an intelligent designer who set them up.

Data, instructions, codes, blueprints, information, software, and hardware are always instantiated by intelligence for specific purposes and require foresight for a meaningful function-bearing outcome.

Is the genetic code a) an information-bearing sequence of DNA nucleotides or b) a translation program? (Don't google.)

George Gilder, a proponent of ID, co-founder of the Discovery Institute, vs Richard Dawkins: Podcast from 2005

Dawkins's talk starts at 22:18

https://dcs.megaphone.fm/BUR9801030455.mp3?key=d621210697253d413a8a1148a524b6c7

Dawkins: DNA Information, in a sense, comes first. He (Gilder) then said: Information implies intelligence. Now we come really down to the wire. Information DOES NOT imply intelligence. That was the genius of Darwin. Superficially though, it looks like information implies intelligence. But if you are going to postulate a supernatural intelligence, as the origin of complexity of life, (complexity is just another word for complexity) It was the genius of Darwin to show, organized complexity can come about from primeval simplicity. If it required God, then we would have an infinite regress, saying: Where does the original intelligence come from?

Perry Marshall: Where life came from, according to Richard Dawkins March 9, 2016

https://evo2.org/richard-dawkins/

Michael Levin: Living Things Are Not (20th Century) Machines: Updating Mechanism Metaphors in Light of the Modern Science of Machine Behavior 16 March 2021

Biology and computer science are not two different fields; they are both branches of information science, working in distinct media with much in common.

https://www.frontiersin.org/articles/10.3389/fevo.2021.650726/full

Biosemiotics is a field of semiotics and biology that studies the meaning-making or production and interpretation of signs and codes in the biological realm. Biosemiotics attempts to integrate the findings of biology and semiotics and proposes a paradigmatic shift in the scientific view of life, in which semiosis (sign process, including meaning and interpretation) is one of its immanent and intrinsic features.

https://en.wikipedia.org/wiki/Biosemiotics

The chance of randomly finding the message "Jesus loves you" written on a cloud in the sky is comparable to the chance of DNA creating its own software and then, by accident, writing a complex algorithm to make a protein.

https://www.youtube.com/watch?v=FT-RsCo1Flg

David L. Abel: Dichotomy in the definition of prescriptive information suggests both prescribed data and prescribed algorithms: biosemiotics applications in genomic systems 2012 Mar 14

"Functional Information (FI)" has now been formalized into two subsets: Descriptive Information (DI) and Prescriptive Information (PI). This formalization of definitions precludes the prevailing confusion of informational terms in the literature. The more specific and accurate term "Prescriptive Information (PI)" has been championed by Abel to define the sources and nature of programming controls, regulation and algorithmic processing. Such prescriptions are ubiquitously instantiated into all known living cells.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3319427/

The problem of information

Norbert Wiener - MIT Mathematician - Father of Cybernetics

"Information is information, not matter or energy. No materialism which does not admit this can survive at the present day."

Sankar Chatterjee: The Origin of Prebiotic Information System in the Peptide/RNA World: A Simulation Model of the Evolution of Translation and the Genetic Code 2019 Mar; 9

The origin of prebiotic information remains a mystery

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6463137/

Hubert P. Yockey: A calculation of the probability of spontaneous biogenesis by information theory

The Darwin-Oparin-Haldane “warm little pond” scenario for biogenesis is examined by using information theory to calculate the probability that an informational biomolecule of reasonable biochemical specificity, long enough to provide a genome for the “protobiont”, could have appeared in 10^9 years in the primitive soup. Certain old untenable ideas have served only to confuse the solution of the problem. Negentropy is not a concept because entropy cannot be negative. The role that negentropy has played in previous discussions is replaced by “complexity” as defined in information theory. A satisfactory scenario for spontaneous biogenesis requires the generation of “complexity” not “order”. Previous calculations based on simple combinatorial analysis overestimate the number of sequences by a factor of 10^5. The number of cytochrome c sequences is about 3.8 × 10^61. The probability of selecting one such sequence at random is about 2.1 × 10^-65. The primitive milieu will contain a racemic mixture of the biological amino acids and also many analogues and non-biological amino acids. Taking into account only the effect of the racemic mixture the longest genome which could be expected with 95 % confidence in 10^9 years corresponds to only 49 amino acid residues. This is much too short to code a living system so evolution to higher forms could not get started. Geological evidence for the “warm little pond” is missing. It is concluded that belief in currently accepted scenarios of spontaneous biogenesis is based on faith, contrary to conventional wisdom.

http://www.sciencedirect.com/science/article/pii/0022519377900443
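The two cytochrome c figures quoted in the abstract can be related with a short piece of arithmetic. This is only a sketch under stated assumptions: the second figure is read as 2.1 × 10^-65 (a probability cannot exceed 1), and the probability is assumed to be the simple ratio of functional sequences to all candidate sequences. The abstract itself does not spell out that ratio, and the function names below are hypothetical.

```python
import math

# Figures quoted in the abstract above.
FUNCTIONAL_SEQUENCES = 3.8e61   # number of cytochrome c sequences
P_RANDOM_SELECTION = 2.1e-65    # probability of selecting one at random


def implied_sequence_space(n_functional: float, p: float) -> float:
    """Total candidate sequences, ASSUMING p = n_functional / total."""
    return n_functional / p


def surprisal_bits(p: float) -> float:
    """Information-theoretic surprisal of an event with probability p, in bits."""
    return -math.log2(p)


total = implied_sequence_space(FUNCTIONAL_SEQUENCES, P_RANDOM_SELECTION)
print(f"implied sequence space: {total:.2e}")
print(f"surprisal: {surprisal_bits(P_RANDOM_SELECTION):.1f} bits")
```

Under that assumption the implied search space is on the order of 10^126 sequences, and the quoted probability corresponds to roughly 215 bits.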

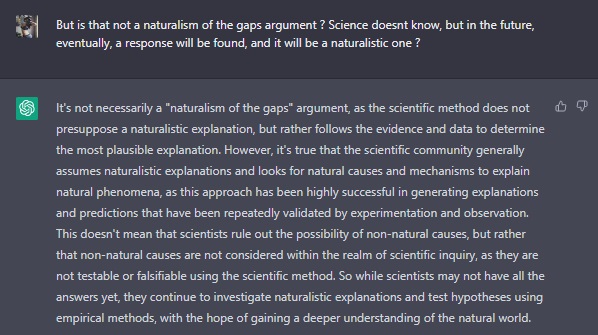

Paul C. W. Davies: The algorithmic origins of life 2013 Feb 6

We need to explain the origin of both the hardware and software aspects of life, or the job is only half-finished. Explaining the chemical substrate of life and claiming it as a solution to life’s origin is like pointing to silicon and copper as an explanation for the goings-on inside a computer. It is this transition where one should expect to see a chemical system literally take on “a life of its own”, characterized by informational dynamics which become decoupled from the dictates of local chemistry alone (while of course remaining fully consistent with those dictates). Thus the famed chicken-or-egg problem (a solely hardware issue) is not the true sticking point. Rather, the puzzle lies with something fundamentally different, a problem of causal organization having to do with the separation of informational and mechanical aspects into parallel causal narratives. The real challenge of life’s origin is thus to explain how instructional information control systems emerge naturally and spontaneously from mere molecular dynamics.

To explain the origin of life, scientists must also explain the origin of the specified information contained in each life form's unique DNA and RNA. Just like the whole universe, information is subject to entropy (see information entropy). Where no information exists, it is impossible for information to arise naturally in a mindless world. Information is more than just matter: it contains a message encoded by other parts of the cell, just as a language has a sender and a receiver who both understand the message and act according to it. This is another irreducibly complex factor of life. On top of that, meaningful information itself is not materially based (see also semiotics). All communication and data processing, as is also done in the cell, is achieved through the use of symbols. When a computer processes code, it has to decode it in order to convert the code into a corresponding action.
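Since the paragraph above points to information entropy, here is a minimal sketch of how Shannon entropy is computed for a symbol sequence. The function name `shannon_entropy` and the example strings are assumptions for illustration. The measure quantifies symbol statistics only; by itself it says nothing about function or meaning, which is the distinction the surrounding text draws.

```python
import math
from collections import Counter


def shannon_entropy(sequence: str) -> float:
    """Shannon entropy of the symbol frequencies in `sequence`, in bits per symbol."""
    counts = Counter(sequence)
    n = len(sequence)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


print(shannon_entropy("ACGTACGTACGT"))  # four equally frequent symbols: 2.0
print(shannon_entropy("AAAAAAAAAAAA") == 0)  # a single repeated symbol: True
```

A sequence of four equally frequent nucleotides reaches the maximum of 2 bits per symbol whether or not it codes for anything, so the figure bounds storage capacity rather than measuring instructional content.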

It has to be explained:

- A library index and fully automated information classification, storage and retrieval program (chromosomes and the gene regulatory network)

- The origin of the complex, codified, specified, instructional information stored in the genome and epigenetic codes to make the first living organism

- The origin of the genetic code

- How it got nearly optimal for allowing additional information within protein-coding sequences

- How it got more robust than 1 million alternative possible codes

- The origin of the over forty-nine epigenetic codes

- The origin of the information transmission system, that is the origin of the genetic code itself, encoding, transmission, decoding and translation

- The origin of the genetic cipher/translation, from digital (DNA / mRNA) to analog (protein)

- The origin of the hardware, that is DNA, RNA, amino acids, and carbohydrates for fuel generation

- The origin of the replication/duplication of the DNA

- The origin of the signal recognition particle

- The origin of the tubulin Code for correct direction to the final destination of proteins

None of the above items can be explained by evolution, since evolution depends on all of them.
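The list item about the code being more robust than alternative codes can be made concrete with a small comparison. This is a simplified sketch, not the published analysis: studies behind the "one in a million" figure score codes by how much amino-acid properties change under point mutations and compare against a constrained set of alternatives. Here a simpler measure is swapped in (the fraction of single-nucleotide substitutions in sense codons that leave the amino acid unchanged), and the alternative codes are fully random reassignments. All function names are hypothetical.

```python
import random
from itertools import product

# Standard genetic code (NCBI translation table 1), codons ordered T, C, A, G.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = ["".join(c) for c in product(BASES, repeat=3)]
STANDARD_CODE = dict(zip(CODONS, AMINO_ACIDS))


def synonymous_fraction(code: dict) -> float:
    """Fraction of single-base changes to sense codons that keep the amino acid."""
    synonymous = total = 0
    for codon, amino_acid in code.items():
        if amino_acid == "*":
            continue  # only sense codons are mutated
        for position in range(3):
            for base in BASES:
                if base == codon[position]:
                    continue
                mutant = codon[:position] + base + codon[position + 1:]
                total += 1
                synonymous += code[mutant] == amino_acid
    return synonymous / total


def shuffled_code(rng: random.Random) -> dict:
    """Random alternative code: same amino-acid counts, assignments shuffled."""
    letters = list(AMINO_ACIDS)
    rng.shuffle(letters)
    return dict(zip(CODONS, letters))


rng = random.Random(0)  # fixed seed so the comparison is repeatable
standard = synonymous_fraction(STANDARD_CODE)
shuffled_mean = sum(synonymous_fraction(shuffled_code(rng)) for _ in range(200)) / 200
print(f"standard code: {standard:.3f}")
print(f"mean of 200 shuffled codes: {shuffled_mean:.3f}")
```

For the standard table, 134 of the 549 possible single-base substitutions in sense codons are synonymous (about 24%), well above what randomly shuffled assignments give under this measure. The sketch shows the kind of comparison involved; it does not reproduce the "1 million" figure.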


Claim: The claim that DNA contains blueprints, instructional complex information is an assumption but even if true, is not analogous to DNA and comes down to an argument from ignorance (DNA is really so complex we don't fully understand it therefore god).

Reply: The problem of DNA is manifold. First, there is the hardware: how mononucleotides, the equivalent of single alphabetic letters, came to be on the prebiotic earth. Second, there is the software: how they polymerized to become carriers of genetic information, in the same sense as single letters are joined to form words, sentences, and paragraphs, and finally blueprints and instructional information. Third, there is the origin of the machinery able to process the algorithmic information, machinery which is itself encoded in genetic information. This gives rise to a catch-22 situation: it takes encoding and transcription (DNA and RNA polymerase machines), transmission (mRNA), and decoding (ribosome) systems to set up the very information transmission system and machinery that we are trying to explain. It all had to emerge together, since one has no function without the other.

Paul Davies: the fifth miracle page 62: Due to the organizational structure of systems capable of processing algorithmic (instructional) information, it is not at all clear that a monomolecular system – where a single polymer plays the role of catalyst and informational carrier – is even logically consistent with the organization of information flow in living systems, because there is no possibility of separating information storage from information processing (that being such a distinctive feature of modern life). As such, digital-first systems (as currently posed) represent a rather trivial form of information processing that fails to capture the logical structure of life as we know it.

Cells must be created and be functional, all at once. As Graham Cairns-Smith noted, this system has to be fixed in its essentials through the critical interdependence of subsystems. Irreducibly complex and interdependent systems cannot evolve but depend on intelligence with foreknowledge of how to build discrete parts with distant goals.

1. Regulating, governing, controlling, recruiting, interpreting, recognizing, orchestrating, elaborating strategies, guiding, and instructing are all tasks of the gene regulatory network.

2. Where no intelligence is present, such activity can only be exercised if the correct actions were pre-programmed by intelligence.

3. Therefore, most probably, the gene regulatory network was programmed by an intelligent agency.

1. The setup of functional information retrieval systems, like a library classification system, is always traced back to intelligence.

2. The gene regulatory network is a fully automated, pre-programmed, ultra-complex gene information extraction system.

3. Therefore, its origin is best explained through intelligent setup.

1. DNA stores information based on a code system, and codified, complex, instructional information, with the same function as a blueprint.

2. All codes and blueprints come from intelligence.

3. Therefore, the genetic code and the instructions to build cells and complex biological organisms, stored in DNA, were most likely created by an intelligent agency.

1. Cells use sophisticated information transmission and amplification systems (signalling pathways), information interpretation, combination, and selection systems (the gene regulatory network), encoding and transcription (DNA and RNA polymerase machines), transmission (mRNA), and decoding (ribosome) systems.

2. The setup of information transmission systems, that is, systems for amplification, interpretation, combination, selection, encoding, transmission, and decoding, is always a deliberate act of intelligence.

3. Therefore, the existence of the genetic information transmission system is best explained as the work of an intelligent designer.
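To make the encoding, transmission, and decoding steps named above concrete, here is a minimal sketch in Python. It only illustrates the flow of sequence information (DNA to mRNA to protein) using the standard genetic code. The function names and the toy DNA sequence are made up for illustration, and real transcription and translation involve far more than a string replacement and a table lookup.

```python
from itertools import product

# Standard genetic code written with DNA letters; '*' marks a stop codon.
# The amino acid string follows the usual T, C, A, G ordering of the codon table.
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}


def transcribe(coding_strand: str) -> str:
    """Transcription: the mRNA carries the coding-strand sequence with U instead of T."""
    return coding_strand.upper().replace("T", "U")


def translate(mrna: str) -> str:
    """Decoding: read triplets from the first AUG until a stop codon is reached."""
    start = mrna.find("AUG")
    if start == -1:
        return ""  # no start codon, nothing is translated
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        codon = mrna[i:i + 3].replace("U", "T")
        amino_acid = CODON_TABLE[codon]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


dna = "GGATGGCCAAATGGTAACC"  # hypothetical toy sequence, not a real gene
mrna = transcribe(dna)
protein = translate(mrna)
print(mrna, protein)
```

The lookup table plays the role of the code itself: change the table and the same mRNA would be decoded into a different protein.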

1. Yeast, crustacea, onion roots, and algae use languages and sophisticated communication channels, even through light photons.

2. The setup of languages and information transmission systems is always traced back to intelligence.

3. Therefore, the origin of these organisms using these sophisticated languages, and communication channels, is best explained by design.

The Laws of information

1. Anything material, such as physical/chemical processes, cannot create something non-material

2. Information is a non-material fundamental entity and not a property of matter

3. Information requires a material medium for storage and transmission

4. Information cannot arise from statistical processes

5. There can be no information without a code, i.e., no knowledge can be shared without a code

6. All codes result from an intentional choice and agreement between sender and recipient

7. The determination of meaning for and from a set of symbols is a mental process that requires intelligence

8. There can be no new information without an intelligent purposeful sender

9. Any given chain of information can be traced back to an intelligent source

10. Information comprises the non-material foundation for all:

a. Technological systems

b. Works of art

c. Biological systems

Therefore:

A. Since the DNA code of all life is clearly within the definition domain of information, we can conclude that there must be a sender.

B. Since the density and complexity of the DNA-encoded information is billions of times greater than man's present technology, we conclude that the sender must be extremely intelligent.

C. Since the sender must have

- encoded (stored) the information into the DNA molecules,

- constructed the molecular biomachines required for the encoding, decoding, and synthesizing processes, and

- designed all the features of the original life forms,

we conclude that

• The sender must be purposeful and supremely powerful.

• Since information is a non-material fundamental entity and cannot originate from material quantities, the sender must have a non-material component.

• Since information is a non-material fundamental entity and cannot originate from material quantities, and since information also originates from man, man's nature must have a non-material component, or SPIRIT.

• Since information is a non-material entity, the assumption that the universe is comprised solely of mass and energy is false.

• Since biological information originates only from an intelligent sender, and all theories of chemical and biological evolution require that information originate solely from mass and energy (without a sender), all theories or concepts of biological evolution are false.

• A pinhead-sized volume of DNA, just 2 mm across, could hold about as much information as 100 million 40 GB hard drives (a 2 mm length of a single strand holds far less). Think about that for a moment: do you really think that is the result of purely undirected, random natural processes?
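The storage figure above depends heavily on whether 2 mm is read as a length of strand or as the diameter of a densely packed volume of DNA. The rough Python estimate below works out both readings. The constants are textbook approximations for B-DNA (about 0.34 nm rise per base pair, about 1 nm helix radius, 2 bits per base pair), and the assumption that the sphere is completely filled with double helix is an idealized upper bound, not a measured value.

```python
import math

RISE_PER_BP_M = 0.34e-9   # axial rise per base pair in B-DNA (approximate)
HELIX_RADIUS_M = 1.0e-9   # radius of the double helix (approximate)
BITS_PER_BP = 2           # four possible bases -> log2(4) = 2 bits


def bytes_in_length(length_m: float) -> float:
    """Information in a straight stretch of double-stranded DNA of the given length."""
    base_pairs = length_m / RISE_PER_BP_M
    return base_pairs * BITS_PER_BP / 8


def bytes_in_sphere(diameter_m: float) -> float:
    """Information in a sphere assumed to be completely filled with DNA (idealized)."""
    sphere_volume = (4.0 / 3.0) * math.pi * (diameter_m / 2.0) ** 3
    volume_per_bp = math.pi * HELIX_RADIUS_M ** 2 * RISE_PER_BP_M
    return sphere_volume / volume_per_bp * BITS_PER_BP / 8


linear_bytes = bytes_in_length(2e-3)   # 2 mm read as a length of strand
packed_bytes = bytes_in_sphere(2e-3)   # 2 mm read as the diameter of a pinhead
drive_bytes = 100e6 * 40e9             # 100 million drives of 40 GB each

print(f"2 mm of strand:   {linear_bytes:.2e} bytes")
print(f"2 mm pinhead:     {packed_bytes:.2e} bytes")
print(f"100M 40GB drives: {drive_bytes:.2e} bytes")
```

Under these assumptions a 2 mm length of strand comes to roughly 1.5 megabytes, while a 2 mm pinhead of packed DNA comes to roughly 10^18 bytes, which is within a small factor of the 4 x 10^18 bytes of the hard drives.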

1. F

2. F -> A & B & C & D & E

3. A & B & C & D & E -> requires Intelligence

4. Therefore Intelligence

A: The RNA and DNA molecules

B: A set of 20 amino acids

C: Information, biosemiotics (complex instructional mRNA codon sequences transcribed from DNA)

D: Transcription (RNA polymerase: from DNA to RNA) and the translation mechanism from RNA to amino acids (an adapter, key, or process of some kind that must exist prior to translation = ribosome)

E: Genetic Code

F: Functional proteins
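The four-step schema above has the shape of a modus ponens chained with a hypothetical syllogism. As a small sketch, the Python snippet below enumerates every truth assignment to F, A to E, and the conclusion, and checks that no assignment makes all three premises true while the conclusion is false. The function names are made up for illustration. The check concerns only the logical form; whether each premise is true is what the numbered points that follow argue.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q."""
    return (not p) or q


def argument_is_valid() -> bool:
    """Brute-force truth table over F, A..E and I (the conclusion 'Intelligence')."""
    for F, A, B, C, D, E, I in product([False, True], repeat=7):
        parts = A and B and C and D and E
        premise_1 = F                   # 1. F
        premise_2 = implies(F, parts)   # 2. F -> A & B & C & D & E
        premise_3 = implies(parts, I)   # 3. A & B & C & D & E -> Intelligence
        if premise_1 and premise_2 and premise_3 and not I:
            return False                # counterexample found
    return True


VALID = argument_is_valid()
print("form is valid:", VALID)
```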

1. Life depends on proteins (molecular machines) (F). Their function depends on the correct arrangement of a specified complex sequence of amino acids.

2. That depends on the existence of a specified set of RNAs and DNAs (A), 20 amino acids (B), genetic information stored in DNA (C), transcribed by RNA polymerase and translated by the ribosome (D), and the genetic code (E), which assigns 61 sense codons to 20 amino acids and uses the remaining 3 codons as stop signals.

3. Complex instructional information (biosemiotics: semantics, syntax, and pragmatics (C)) is only generated by intelligent beings with foresight. Only intelligence with foresight can conceptualize and instantiate complex machines with specific purposes, like translation using adapter keys (ribosome, tRNA, aminoacyl-tRNA synthetases (D)). All codes require arbitrary values to be assigned and determined by an agency to represent something else (genetic code (E)).

4. Therefore, proteins, being the product of semiotic/algorithmic information, including transcription by RNA polymerase, translation by the ribosome, the genetic code, and the manufacturing system (information directing manufacturing), are most probably the product of a super-powerful intelligent designer.

The problem of getting functional proteins is manifold. Here are a few aspects of it:

A) The problem of the prebiotic origin of the RNA and DNA molecule

1. DNA, a polymer of deoxyribonucleotides, belongs to one of the four fundamental classes of macromolecules used in every single cell, in all life forms, and in many viruses.

2. Each nucleotide is composed of a base, a sugar (deoxyribose in DNA, ribose in RNA), and a phosphate group; the sugar and the phosphate form the backbone. A complex web of at least 400 enzymes is required to make the basic building blocks, including RNA and DNA, in the cell. This machinery was not extant prebiotically.

RNA and DNA are required to make the enzymes that are involved in synthesizing RNA and DNA, but these very enzymes are required to make RNA and DNA. This is a classic chicken-and-egg problem. Furthermore, ribose breaks down in 40 days. Molecules in general, rather than complexifying, break down into their constituents, giving asphalt as a result.

3. Considering these problems and facts, it is more reasonable to assume that an intelligent designer created life all at once, fully formed, rather than that it arose through a natural, stepwise process based on chemical evolution, for which there is no evidence that it happened or could happen in principle.

B) The problem of the prebiotic origin of amino acids

1. Amino acids have a very specific, complex, functional composition and are made by cells in extremely sophisticated, orchestrated metabolic pathways, which were not extant on the early earth. If abiogenesis were true, these biomolecules had to be prebiotically available and naturally occurring (made by natural means, without enzyme catalysis) and then somehow join in an organized way. Twelve of the proteinogenic amino acids were never produced in sufficient concentrations in any lab experiment. There was no selection process extant to sort out the amino acids best suited to and used in life from those that were not useful. There was potentially an unlimited number of different possible amino acid compositions extant prebiotically. (The amino acid alphabet used in life is more optimal and robust than 2 million tested alternative amino acid "alphabets".)

2. There was no concentration process to collect the amino acids at one specific assembly site. There was no enantiomer selection process (the homochirality problem). Amino acids would have disintegrated rather than complexified. There was no process to purify them.

3. Taken together, all these problems make an unguided origin of amino acids extremely unlikely. Making things for a specific purpose, for a distant goal, requires goal-directedness. We know that a) unguided, random, purposeless events are unlikely in the extreme to make specific, purposeful elementary components to build large integrated macromolecular systems, and b) intelligence has goal-directedness. Bricks do not form from clay by themselves and then line up to make walls. Someone made them.

C) The origin of Information stored in the genome.

1. Semiotic functional information is not a tangible entity, and as such, it is beyond the reach of, and cannot be created by any undirected physical process.

2. This is not an argument about probability. Conceptual semiotic information is simply beyond the sphere of influence of any undirected physical process. To suggest that a physical process can create semiotic code is like suggesting that a rainbow can write poetry... it is never going to happen! Physics and chemistry alone do not possess the tools to create a concept. The only cause capable of creating conceptual semiotic information is a conscious intelligent mind.

3. Since life depends on a vast quantity of semiotic information, life is no accident, and this provides powerful positive evidence that we have been designed. One scientist working at the cutting edge of our understanding of the programming information in biology described what he saw as an “alien technology written by an engineer a million times smarter than us”.

D) The origin of the adapter, key, or process of some kind to exist prior to translation = ribosome

1. Ribosomes have the function of translating genetic information into proteins. According to Craig Venter, the ribosome is “an incredibly beautiful complex entity” which requires a minimum of 53 proteins. It is nothing if not an editorial perfectionist…the ribosome exerts far tighter quality control than anyone ever suspected over its precious protein products… Ribosomes are molecular factories with complex machine-like operations. They carefully sense, transfer, and process information, continually exchanging and integrating it within themselves at a molecular scale during the various steps of translation, and, amazingly, even make decisions. They communicate in a coordinated manner, and information is integrated and processed to enable optimized ribosome activity. Strikingly, many of the ribosome's functional properties go far beyond the skills of a simple mechanical machine. Ribosomes can halt the translation process on the fly and coordinate extremely complex movements. The whole system incorporates 11 ingenious error check and repair mechanisms to guarantee faithful and accurate translation, which is life-essential.

2. For the assembly of this protein-making factory, consisting of multiple parts, the following is required: genetic information to produce the ribosome assembly proteins, chaperones, all ribosome subunits, and assembly cofactors, as well as a full set of tRNAs, a full set of aminoacyl-tRNA synthetases, the signal recognition particle, elongation factors, mRNA, etc. The individual parts must be available and fit together precisely, and assembly must be coordinated. A ribosome cannot perform its function unless all subparts are fully set up and interlocked.

3. The making of a translation machine only makes sense if there is a source code and information to be translated. Eugene Koonin: Breaking the evolution of the translation system into incremental steps, each associated with a biologically plausible selective advantage is extremely difficult even within a speculative scheme let alone experimentally. Speaking of ribosomes, they are so well-structured that when broken down into their component parts by chemical catalysts (into long molecular fragments and more than fifty different proteins) they reform into a functioning ribosome as soon as the divisive chemical forces have been removed, independent of any enzymes or assembly machinery – and carry on working. Design some machinery that behaves like this and I personally will build a temple to your name! Natural selection would not select for components of a complex system that would be useful only in the completion of that much larger system. The origin of the ribosome is better explained by a brilliant, intelligent, and powerful designer than by mindless natural processes, chance, and/or evolution, since we constantly observe minds producing machines and factories.

E) The origin of the genetic code

1. A code is a system of rules in which symbols, letters, words, etc. are assigned to something else. Information can be transmitted, for example, by translating the letters of the alphabet into kanji, the logographic characters used in Japan. In cells, the genetic code is the assignment (a cipher) of 64 triplet codons to 20 amino acids and stop signals.

2. Assigning meaning to characters through a code system, where symbols of one language are assigned to symbols of another language that mean the same, requires a common agreement of meaning. The assignment of triplet codons (triplet nucleotides) to amino acids must be pre-established by a mind.

3. Therefore, the origin of the genetic code is best explained by an intelligent designer.
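The assignment described in point 1 can be written down as a plain lookup table. The short Python sketch below builds the standard genetic code from the conventional U, C, A, G ordering and counts how the 64 codons are distributed. The variable names are made up for illustration, and the table shown is the standard code only, not the variant codes used by some organisms and organelles.

```python
from collections import Counter
from itertools import product

# Standard genetic code in RNA letters; '*' marks a stop codon.
BASES = "UCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
GENETIC_CODE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}

stop_codons = sorted(c for c, aa in GENETIC_CODE.items() if aa == "*")
sense_codons = [c for c, aa in GENETIC_CODE.items() if aa != "*"]
amino_acids = set(GENETIC_CODE.values()) - {"*"}

# How many codons map to each amino acid (the redundancy of the code).
degeneracy = Counter(aa for aa in GENETIC_CODE.values() if aa != "*")

print(len(GENETIC_CODE), "codons in total")
print(len(sense_codons), "sense codons for", len(amino_acids), "amino acids")
print("stop codons:", stop_codons)
print("codons per amino acid:", dict(degeneracy))
```

The counts show that the mapping is many-to-one: 61 sense codons are assigned to 20 amino acids, with AUG (methionine) doubling as the usual start signal, and the 3 remaining codons act as stop signals.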

More links:

https://biosemiosis.net/?fbclid=IwAR0B_bZLCzCWkziNuoich1DfoNtswa5nY5HGEAdf9aOYzctflmDCHdKZmVY

https://web.archive.org/web/20170614142752/http://www.biosemiosis.org/index.php/why-is-this-important

Biological Information Processing

https://www.evolutionofcomputing.net/Multicellular/BiologicalInformationProcessing.html?fbclid=IwAR3nq-fkfjN9vbqzDKVekzIwG1kNm91XmGWc__paDt3IAEewmeRgsxXPZHY

Last edited by Otangelo on Wed Aug 14, 2024 8:02 am; edited 56 times in total