The algorithmic origins of life

https://reasonandscience.catsboard.com/t3061-the-algorithmic-origins-of-life

1. Creating a recipe to make a cake is always a mental process. Creating a blueprint to make a machine is always a mental process.

2. To suggest that a physical process can create instructional assembly information, such as a recipe or a blueprint, is like suggesting that throwing ink on paper will create a blueprint. It is never going to happen!

3. Physics and chemistry alone do not possess the tools to create a concept, or functional complex machines made of interlocked parts for specific purposes.

4. The only cause capable of creating conceptual semiotic information is a conscious intelligent mind.

5. DNA stores codified information to make proteins and cells, which are chemical factories in a literal sense.

https://biosemiosis.net/?fbclid=IwAR1xTZ-JWsSeSaTHqN5MmsaXpPN6dlFQz4liWCcOYzt6ugib-TVWv9y8YIE

Information is not a tangible entity. It has no energy and no mass; it is not physical but conceptual.

- Life is a software/information-driven process.

- Information is not physical; it is conceptual.

- The only known source of semiotic information is prior intelligence.

- Life is therefore the direct product of a deliberate creative intellectual process.

Semiotic functional information is not a tangible entity, and as such, it is beyond the reach of, and cannot be created by any undirected physical process.

This is not an argument about probability. Conceptual semiotic information is simply beyond the sphere of influence of any undirected physical process. To suggest that a physical process can create semiotic code is like suggesting that a rainbow can write poetry. It is never going to happen! Physics and chemistry alone do not possess the tools to create a concept. The only cause capable of creating conceptual semiotic information is a conscious intelligent mind.

Life is no accident; the vast quantity of semiotic information in life provides powerful positive evidence that we have been designed.

One scientist working at the cutting edge of our understanding of the programming information in biology described what he saw as an “alien technology written by an engineer a million times smarter than us.”

If you convert the idea into a sentence to communicate it (as I do here) or to remember it, that sentence may be physical, yet it depends on the non-physical idea, which in no way depends on it.

Howard Hunt Pattee: Evolving Self-reference: Matter, Symbols, and Semantic Closure 28 August 2012

Von Neumann noted that in normal usages matter and symbol are categorically distinct, i.e., neurons generate pulses, but the pulses are not in the same category as neurons; computers generate bits, but bits are not in the same category as computers, measuring devices produce numbers, but numbers are not in the same category as devices, etc. He pointed out that normally the hardware machine designed to output symbols cannot construct another machine, and that a machine designed to construct hardware cannot output a symbol. Von Neumann also observed that there is a “completely decisive property of complexity,” a threshold below which organizations degenerate and above which open-ended complication or emergent evolution is possible. Using a loose analogy with universal computation, he proposed that to reach this threshold requires a universal construction machine that can output any particular material machine according to a symbolic description of the machine. Self-replication would then be logically possible if the universal constructor is provided with its own description as well as means of copying and transmitting this description to the newly constructed machine.
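Von Neumann's scheme can be caricatured in a few lines of Python. This is a toy sketch under stated assumptions, not von Neumann's own cellular-automaton construction: the symbol-to-part table, the three-symbol description, and the names `construct`, `copy_description` and `replicate` are all hypothetical. It only tries to show the point Pattee draws out: the description is used twice, once interpreted (to build hardware) and once copied without being interpreted.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical mapping from symbols on the description tape to hardware parts.
PART_FOR_SYMBOL = {"A": "constructor", "B": "copier", "C": "controller"}


@dataclass(frozen=True)
class Machine:
    parts: Tuple[str, ...]        # the material hardware
    description: Tuple[str, ...]  # the symbolic tape the machine carries


def construct(description: Tuple[str, ...]) -> Tuple[str, ...]:
    """Universal constructor: interprets each symbol and builds the matching part."""
    return tuple(PART_FOR_SYMBOL[symbol] for symbol in description)


def copy_description(description: Tuple[str, ...]) -> Tuple[str, ...]:
    """Copier: duplicates the symbols without interpreting them."""
    return tuple(description)


def replicate(machine: Machine) -> Machine:
    """Controller: build a new machine from the description, then hand it a copy."""
    return Machine(construct(machine.description), copy_description(machine.description))


parent = Machine(("constructor", "copier", "controller"), ("A", "B", "C"))
child = replicate(parent)
print(child == parent)  # True: the offspring matches its parent
```

Because the child carries its own copy of the description, `replicate(child)` produces yet another identical machine, which is the open-ended self-replication the passage describes.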

https://link.springer.com/chapter/10.1007/978-94-007-5161-3_14

Information is not physical

https://arxiv.org/pdf/1402.2414.pdf

Information is a disembodied abstract entity independent of its physical carrier. “Information is always tied to a physical representation. It is represented by engraving on a stone tablet, a spin, a charge, a hole in a punched card, a mark on paper, or some other equivalent. This ties the handling of information to all the possibilities and restrictions of our real physical world, its laws of physics and its storehouse”. However, the legitimate questions concern the physical properties of information carriers like “stone tablet, a spin, a charge, a hole in a punched card, a mark on paper”, but not the information itself. Information is neither classical nor quantum; it is independent of the properties of the physical systems used for its processing.

An algorithm is a finite sequence of well-defined, computer-implementable instructions resulting in precise intended functions. In a biological context, a prescriptive algorithm can be described as performing control operations using rules, axioms and coherent instructions. These instructions are executed using a linear, digital, cybernetic string of symbols representing syntactic, semantic and pragmatic prescriptive information.

Cells host algorithmic programs for cell division and cell death, enzymes pre-programmed to perform DNA splicing, and programs for dynamic changes of gene expression in response to a changing environment. Cells use pre-programmed adaptive responses to genomic stress, pre-programmed genes regulating fetal development, temporal programs for genome replication, pre-programmed animal genes dictating behaviors including reflexes and fixed action patterns, pre-programmed biological timetables for aging, and more.

A programming algorithm is like a recipe that describes the exact steps needed to solve a problem or reach a goal. We've all seen food recipes: they list the ingredients needed and a set of steps for how to make a meal. An algorithm is just like that. It describes how to do something, and it will be done exactly that way every time.
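The recipe comparison can be made concrete with a toy program. The step list and the `follow` function are hypothetical, invented for illustration; the sketch only shows the two properties named above: a fixed, finite sequence of steps, and identical results on every run.

```python
from typing import List, Tuple

# Hypothetical recipe steps: (action, ingredient) pairs executed strictly in order.
SPAGHETTI_RECIPE: Tuple[Tuple[str, str], ...] = (
    ("boil", "salted water"),
    ("add", "spaghetti"),
    ("simmer", "tomato sauce"),
    ("drain", "spaghetti"),
    ("combine", "spaghetti and sauce"),
)


def follow(recipe: Tuple[Tuple[str, str], ...]) -> List[str]:
    """Execute each step in sequence and return a numbered log of what was done."""
    log = []
    for step_number, (action, ingredient) in enumerate(recipe, start=1):
        log.append(f"{step_number}. {action} {ingredient}")
    return log


first_run = follow(SPAGHETTI_RECIPE)
second_run = follow(SPAGHETTI_RECIPE)
print(first_run == second_run)  # True: same steps, same order, every time
```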

Okay, you probably wish you could see an example of how that works in the cell, right? Let's make an analogy. Suppose you have a recipe for spaghetti with a special tomato sauce, written in a Word document saved on your computer. You have a Japanese friend, and you communicate with him only through Google Translate. He wants to try out the recipe and asks you to send him a copy. So you write an email, attach the Word document, and send it to him. When he receives it, he uses Google Translate to get the recipe in Japanese, written in kanji, the logographic Japanese characters he understands. With that information at hand, he can make the spaghetti with that fine special tomato sauce exactly as described in the recipe. For this communication to happen, you use the 26 letters of the alphabet at your end to write the recipe, and your friend has 2,136 kanji characters that allow him to understand the recipe in Japanese. Google Translate does the translation work.

While your recipe is written in a Word document saved on your computer, in the cell the recipe (instructions or master plan) for the construction of proteins, the life-essential molecular machines and veritable workhorses, is written in genes in DNA. While you use the 26 letters of the alphabet to write your recipe, the cell uses four deoxyribonucleotide monomer "letters". Kanji has 2,136 characters, the alphabet uses 26, and binary computer code uses two, 0 and 1. The language of DNA is digital, but not binary. Where binary encoding has two symbols, 0 and 1, to work with (hence the "bi" in binary), DNA uses four different organic bases: adenine (A), guanine (G), cytosine (C) and thymine (T).
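The difference between these alphabets can be put in numbers. A symbol drawn from n equally likely options carries log2(n) bits; the equal-likelihood assumption is a simplification, since real texts and genomes are not uniform. The alphabet sizes are the ones given in the text above.

```python
import math

# Alphabet sizes mentioned in the text above.
ALPHABETS = {
    "binary": 2,
    "DNA bases": 4,
    "alphabet": 26,
    "kanji": 2136,
}

# log2(n) is the capacity of one symbol chosen from n equally likely options.
for name, size in ALPHABETS.items():
    print(f"{name}: {math.log2(size):.2f} bits per symbol")

# A codon is three bases: 3 * 2 bits = 6 bits, i.e. 2**6 = 4**3 = 64 combinations.
print(f"one codon: {3 * math.log2(4):.0f} bits = {4 ** 3} combinations")
```

This prints 1.00, 2.00, 4.70 and 11.06 bits per symbol respectively, and 6 bits (64 combinations) per codon. Each DNA base therefore carries exactly two binary digits' worth of capacity.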

DNA stores genetic information in codons, the equivalent of words, each consisting of three nucleotides. While you used sentences to write the spaghetti recipe, the equivalent sentences in the cell are genes, written with codon "words". With four possible nucleobases, three nucleotides can form 4^3 = 64 different "words" (trinucleotide sequences). In the standard genetic code, three of these 64 codons (TAA, TAG and TGA in DNA; UAA, UAG and UGA in mRNA) are stop codons.
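The 4^3 = 64 count can be checked by enumerating every triplet. This sketch uses only the standard library; the variable names are illustrative.

```python
from itertools import product

DNA_BASES = "ACGT"

# Every ordered triplet of the four bases: 4 ** 3 = 64 codons.
codons = ["".join(triplet) for triplet in product(DNA_BASES, repeat=3)]

# Standard-code stop codons written on the DNA coding strand.
STOP_CODONS_DNA = {"TAA", "TAG", "TGA"}
sense_codons = [c for c in codons if c not in STOP_CODONS_DNA]

# The same stop codons as they appear in mRNA, where uracil (U) replaces thymine (T).
stop_codons_mrna = sorted(c.replace("T", "U") for c in STOP_CODONS_DNA)

print(len(codons), len(sense_codons), stop_codons_mrna)
# 64 61 ['UAA', 'UAG', 'UGA']
```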

There has to be a mechanism to extract the information in the genome and send it to the ribosome, the factory that makes proteins, which is located elsewhere in the cell, in the cytoplasm. The message contained in the genome is transcribed by a very complex molecular machine called RNA polymerase. It makes a transcript, a copy of the message in the genome, and that transcript is sent to the ribosome. The transcript is called messenger RNA, or mRNA.
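The base-pairing step of transcription can be sketched as a string operation. This is a deliberate simplification: it ignores promoters, RNA polymerase mechanics and the splicing of eukaryotic transcripts, and the example sequences are hypothetical. Writing the template strand 3'→5' lets both strands be read left to right in register.

```python
# Base pairing used when RNA polymerase copies a DNA template strand into RNA.
DNA_TO_RNA_COMPLEMENT = str.maketrans("ACGT", "UGCA")


def transcribe(template_3_to_5: str) -> str:
    """Return the mRNA (5'->3') complementary to a template strand written 3'->5'."""
    return template_3_to_5.upper().translate(DNA_TO_RNA_COMPLEMENT)


def mrna_from_coding_strand(coding_5_to_3: str) -> str:
    """The mRNA matches the coding (non-template) strand, with U in place of T."""
    return coding_5_to_3.upper().replace("T", "U")


template = "TACAAACCCATT"  # hypothetical template strand, written 3'->5'
coding = "ATGTTTGGGTAA"    # its partner coding strand, written 5'->3'
print(transcribe(template))             # AUGUUUGGGUAA
print(mrna_from_coding_strand(coding))  # AUGUUUGGGUAA
```

Both routes give the same transcript, which is why molecular biologists usually quote gene sequences as the coding strand.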

In communications and information processing, a code is a system of rules for converting information, such as a letter or word, into another form (another word, letter, etc.). In translation, the 64 genetic codons are assigned to 20 amino acids: 61 sense codons specify amino acids, and the remaining three signal stop. The genetic code is this assignment of codons to amino acids, and it is the cornerstone template underlying the translation process. Assignment means designating, ascribing, corresponding, correlating.

The ribosome does basically what Google Translate does. But while Google Translate only delivers the recipe in another language, and our Japanese friend still has to make the spaghetti, the ribosome makes the end product, proteins, in one step.
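The codon-to-amino-acid assignment and the ribosome's reading of it can be sketched as a lookup table plus a loop. The 64-letter string is the standard genetic code in the compact layout NCBI uses for its translation table 1 (one-letter amino acid codes, `*` for stop). The sketch assumes the reading frame starts at the first base; it ignores start-codon scanning, tRNAs, synthetases and initiation factors, and the `translate` function is a hypothetical name.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code, codons ordered UUU, UUC, UUA, UUG, UCU, ... GGG; '*' marks stop.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}


def translate(mrna: str) -> str:
    """Read codons from the first base and stop at the first stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODE[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


sense = [codon for codon, aa in CODE.items() if aa != "*"]
print(len(CODE), len(sense), len(set(CODE.values()) - {"*"}))  # 64 61 20
print(translate("AUGUUUGGGUAA"))  # MFG (methionine, phenylalanine, glycine)
```

The table is many-to-one: 61 sense codons map onto only 20 amino acids, so most amino acids have several synonymous codons.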

Imagine the brainpower involved in the entire process, from inventing the spaghetti recipe until the dish is on your Japanese friend's table. What is involved?

1. Your imagination of the recipe

2. Inventing an alphabet, a language

3. Inventing the medium to write down the message

4. Inventing the medium to store the message

5. Storing the message in the medium

6. Inventing the medium to extract the message

7. Inventing the medium to send the message

8. Inventing the second language (Japanese)

9. Inventing the translation code/cipher from your language, to japanese

10. Making the machine that performs the translation

11. Programming the machine to know both languages, to make the translation

12. Making the translation

13. Making the spaghetti on the other end, using the recipe in Japanese

1. Cells host Genetic information

2. This information prescribes functional outcomes through the particular specified complex sequence of triplet codons and, ultimately, the translated sequencing of amino acid building blocks into protein strings. The sequencing of nucleotides in DNA also prescribes highly specific regulatory microRNAs and other epigenetic factors.

3. Algorithms prescribing functional instructions, digital programming, symbols and coding systems are abstract, non-physical representations, and they always originate from thought, that is, from conscious or intelligent activity.

4. Therefore, genetic and epigenetic information comes from an intelligent mind. Since there was no human mind present to create life, it must have been a supernatural agency.

1. Algorithms prescribing functional instructions, digital programming, symbols and coding systems are abstract, non-physical representations, and they always originate from thought, that is, from conscious or intelligent activity.

2. Genetic and epigenetic information is characterized by prescriptive codified information, which results in functional outcomes through the particular specified complex sequence of triplet codons and, ultimately, the translated sequencing of amino acid building blocks into protein strings. The sequencing of nucleotides in DNA also prescribes highly specific regulatory microRNAs and other epigenetic factors.

3. Therefore, genetic and epigenetic information comes from an intelligent mind. Since there was no human mind present to create life, it must have been a supernatural agency.

Three subsets of sequence complexity and their relevance to biopolymeric information

https://link.springer.com/article/10.1186/1742-4682-2-29

An algorithm is a finite sequence of well-defined, computer-implementable instructions. Genetic algorithms instruct sophisticated biological organization. Three qualitative kinds of sequence complexity exist: random (RSC), ordered (OSC), and functional (FSC). FSC alone provides algorithmic instruction. FSC is a linear, digital, cybernetic string of symbols representing syntactic, semantic and pragmatic prescription; each successive sign in the string is a representation of a decision-node configurable switch-setting, a specific selection for function. Selection, specification, or signification of certain "choices" in FSC sequences results only from nonrandom selection.
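The ordered/random distinction can be illustrated with compression. Algorithmic (Kolmogorov) complexity is not computable, so this sketch assumes zlib's compressed size as a crude stand-in; that substitution is ours, not the paper's. It separates an ordered sequence from a random one, but compressibility alone says nothing about function, which is the third category the source adds.

```python
import random
import zlib


def compressed_size(sequence: str) -> int:
    """Bytes needed by zlib at maximum compression: a rough proxy for algorithmic complexity."""
    return len(zlib.compress(sequence.encode("ascii"), 9))


length = 1000
ordered = "AT" * (length // 2)  # ordered sequence: one short repeating rule
rng = random.Random(42)         # fixed seed so the run is repeatable
random_seq = "".join(rng.choice("ACGT") for _ in range(length))  # random sequence

print(compressed_size(ordered), compressed_size(random_seq))
```

The ordered string shrinks to a few dozen bytes because a short rule regenerates it; the random string stays near its raw information content of about 2 bits per base.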

Nucleotides are grouped into triplet Hamming block codes, each of which represents a certain amino acid. No direct physicochemical causative link exists between codon and its symbolized amino acid in the physical translative machinery. Physics and chemistry do not explain why the "correct" amino acid lies at the opposite end of tRNA from the appropriate anticodon. Physics and chemistry do not explain how the appropriate aminoacyl tRNA synthetase joins a specific amino acid only to a tRNA with the correct anticodon on its opposite end. Genes are not analogous to messages; genes are messages. Genes are literal programs. They are sent from a source by a transmitter through a channel. Prescriptive sequences are called "instructions" and "programs." They are not merely complex sequences. They are algorithmically complex sequences. They are cybernetic.

Leroy Hood: The digital code of DNA, 23 January 2003

The discovery of the double helix in 1953 immediately raised questions about how biological information is encoded in DNA. A remarkable feature of the structure is that DNA can accommodate almost any sequence of base pairs — any combination of the bases adenine (A), cytosine (C), guanine (G) and thymine (T) — and, hence any digital message or information.
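Because any sequence of bases is chemically allowed, any digital message can in principle be written in DNA. The sketch below assumes a hypothetical 2-bit mapping (A=00, C=01, G=10, T=11); it is not the scheme of any particular DNA-storage project and ignores practical constraints such as long runs of one base or error correction.

```python
# Hypothetical 2-bit mapping; any one-to-one assignment of bit pairs to bases would work.
BIT_PAIR_TO_BASE = {0b00: "A", 0b01: "C", 0b10: "G", 0b11: "T"}
BASE_TO_BIT_PAIR = {base: bits for bits, base in BIT_PAIR_TO_BASE.items()}


def bytes_to_dna(data: bytes) -> str:
    """Write each byte as four bases, most significant bit pair first."""
    return "".join(
        BIT_PAIR_TO_BASE[(byte >> shift) & 0b11] for byte in data for shift in (6, 4, 2, 0)
    )


def dna_to_bytes(sequence: str) -> bytes:
    """Read the bases back four at a time and rebuild each byte."""
    out = bytearray()
    for i in range(0, len(sequence), 4):
        byte = 0
        for base in sequence[i:i + 4]:
            byte = (byte << 2) | BASE_TO_BIT_PAIR[base]
        out.append(byte)
    return bytes(out)


encoded = bytes_to_dna("Hi".encode("ascii"))
print(encoded)                         # CAGACGGC
print(dna_to_bytes(encoded).decode())  # Hi
```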

https://www.nature.com/articles/nature01410

Translation occurs after the messenger RNA (mRNA) has carried the transcribed ‘message’ from the DNA to protein-making factories in the cell, called ribosomes.

The message carried by the mRNA is read by a carrier molecule called transfer RNA (tRNA).

https://www.yourgenome.org/facts/what-is-gene-expression

The capabilities of chaos and complexity.

http://europepmc.org/article/PMC/2662469

Do symbol systems exist outside of human minds?

Molecular biology’s two-dimensional complexity (secondary biopolymeric structure) and three-dimensional complexity (tertiary biopolymeric structure) are both ultimately determined by linear sequence complexity (primary structure; functional sequence complexity, FSC). The codon table is arbitrary and formal, not physical. The linking of each tRNA with the correct amino acid depends entirely on a completely independent family of aminoacyl-tRNA synthetase proteins. Each of these synthetases must be specifically prescribed by separate linear digital programming, but using the same material symbol system (MSS). These symbol and coding systems not only predate human existence, they produced humans along with their anthropocentric minds.

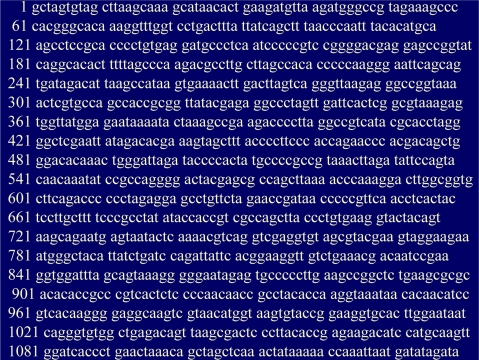

The image above shows the prescriptive coding of a section of DNA. Each letter represents a choice from an alphabet of four options. The particular sequencing of letter choices prescribes the sequence of triplet codons and ultimately the translated sequencing of amino acid building blocks into protein strings. The sequencing of nucleotides in DNA also prescribes highly specific regulatory micro RNAs and other epigenetic factors. Thus linear digital instructions program cooperative and holistic metabolic proficiency.

Chaos is neither organized nor a true system, let alone “self-organized.” A bona fide system requires organization. Chaos by definition lacks organization. What formal functions does a hurricane, for example, perform? It doesn’t DO anything constructive or formally functional because it contains no formal organizational components. It has no programming talents or creative instincts. A hurricane is not a participant in Decision Theory. A hurricane does not set logic gates according to arbitrary rules of inference. A hurricane has no specifically designed dynamically-decoupled configurable switches. No means exists to instantiate formal choices or function into physicality. A highly self-ordered hurricane does nothing but destroy organization. The same applies to any unguided, random natural event.

The capabilities of stand-alone chaos, complexity, self-ordered states, natural attractors, fractals, drunken walks, complex adaptive systems, and other subjects of nonlinear dynamic models are often inflated. A scientific mechanism must be provided for how purely physicodynamic phenomena can program decision nodes, optimize algorithms, set configurable switches so as to achieve integrated circuits, achieve computational halting, and organize otherwise unrelated chemical reactions into a protometabolism. We know only of conscious or intelligent agents able to provide such things.

Information and the Nature of Reality: From Physics to Metaphysics, page 149

The concept of information has been a victim of a philosophical impasse that has a long and contentious history: the problem of specifying the ontological status of the representations or contents of our thoughts. How can the content (aka meaning, reference, significant aboutness) of a sign or thought have any causal efficacy in the world if it is by definition not intrinsic to whatever physical object or process represents it?

Consider the classic example of a wax impression left by a signet ring in wax. Except for the mind that interprets it, the wax impression is just wax, the ring is just a metallic form, and their conjunction at a time when the wax was still warm and malleable was just a physical event in which one object alters another when they are brought into contact. Something more makes the wax impression a sign that conveys information. It must be interpreted by someone.

In order to develop a full scientific understanding of information we will be required to give up thinking about it, even metaphorically, as some artifact or commodity. To make sense of the implicit representational function that distinguishes information from other merely physical relationships, we will need to find a precise way to characterize its defining nonintrinsic feature – its referential content – and show how it can be causally efficacious despite its physical absence. The enigmatic status of this relationship was eloquently, if enigmatically, framed by Brentano’s use of the term “inexistence” when describing mental phenomena.

Signature in the Cell, Stephen Meyer, page 16

What humans recognize as information certainly originates from thought—from conscious or intelligent activity. A message received via fax by one person first arose as an idea in the mind of another. The software stored and sold on a compact disc resulted from the design of a software engineer. The great works of literature began first as ideas in the minds of writers—Tolstoy, Austen, or Donne. Our experience of the world shows that what we recognize as information invariably reflects the prior activity of conscious and intelligent persons.

We now know that we do not just create information in our own technology; we also find it in our biology—and, indeed, in the cells of every living organism on earth. But how did this information arise? The age-old conflict between the mind-first and matter-first world-views cuts right through the heart of the mystery of life’s origin. Can the origin of life be explained purely by reference to material processes such as undirected chemical reactions or random collisions of molecules?

The algorithmic origins of life

https://royalsocietypublishing.org/doi/full/10.1098/rsif.2012.0869

The key distinction between the origin of life and other ‘emergent’ transitions is the onset of distributed information control, enabling context-dependent causation, where an abstract and non-physical systemic entity (algorithmic information) effectively becomes a causal agent capable of manipulating its material substrate.

Biological information is functional because of its specific sequence. A variety of terms have been employed for measuring functional biological information: complex specified information (CSI), functional sequence complexity (FSC), and instructional complex information. I like the term instructional because it accurately describes what is being done, namely instructing the right sequence of amino acids to make proteins, and also the sequence of RNA transcripts, which are used in gene regulation and in a variety of as yet unexplored functions.

Another term is prescriptive information (PI). It also accurately describes what genes do: they prescribe how proteins have to be assembled. But it also smuggles in a meaning that is highly disputed between proponents of intelligent design and proponents of unguided evolution. Prescribing implies that an intelligent agency preordained the nucleotide sequence in order for it to be functional. The following paper states:

Dichotomy in the definition of prescriptive information suggests both prescribed data and prescribed algorithms: biosemiotics applications in genomic systems

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3319427/

Biological information frequently manifests its “meaning” through instruction or actual production of formal bio-function. Such information is called Prescriptive Information (PI). PI programs organize and execute a prescribed set of choices. Closer examination of this term in cellular systems has led to a dichotomy in its definition suggesting both prescribed data and prescribed algorithms are constituents of PI. This paper looks at this dichotomy as expressed in both the genetic code and in the central dogma of protein synthesis. An example of a genetic algorithm is modeled after the ribosome, and an examination of the protein synthesis process is used to differentiate PI data from PI algorithms.

Both the method used to combine several genes together to produce a molecular machine and the operational logic of the machine are examples of an algorithm. Molecular machines are a product of several polycodon instruction sets (genes) and may be operated upon algorithmically. But what process determines what algorithm to execute?

In addition to algorithm execution, there needs to be an assembly algorithm. Any manufacturing engineer knows that nothing (in production) is built without plans that precisely define orders of operations to properly and economically assemble components to build a machine or product. There must, by necessity, be an order of operations to construct biological machines. This is because biological machines are neither chaotic nor random, but are functionally coherent assemblies of proteins/RNA elements. A set of operations that govern the construction of such assemblies may exist as an algorithm which we need to discover. It details real biological processes that are operated upon by a set of rules that define the construction of biological elements both in a temporal and physical assembly sequence manner.
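An "order of operations" for assembly is, in computing terms, a topological ordering of a dependency graph: every part is built only after the parts it needs. The component names below are hypothetical and generic; they illustrate the manufacturing analogy and are not a model of any real biological assembly pathway. The sketch uses `graphlib.TopologicalSorter` from the Python standard library (3.9+), which raises `CycleError` if the plan contains a circular dependency.

```python
from graphlib import TopologicalSorter  # standard library since Python 3.9

# Hypothetical assembly plan: each component maps to the components it requires first.
ASSEMBLY_PLAN = {
    "machine": {"frame", "motor", "controller"},
    "motor": {"rotor", "stator"},
    "controller": {"circuit board"},
    "frame": set(),
    "rotor": set(),
    "stator": set(),
    "circuit board": set(),
}

# static_order() yields every component after all of its prerequisites.
build_order = list(TopologicalSorter(ASSEMBLY_PLAN).static_order())
print(build_order)
```

The relative order of independent parts (for example rotor and stator) may vary between runs, but the dependencies are always respected and the finished machine always comes last.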

An algorithm is a set of rules or procedures that precisely defines a finite sequence of operations. These instructions prescribe a computation or action that, when executed, will proceed through a finite number of well-defined states that lead to specific outcomes. In this context an algorithm can be represented as: Algorithm = logic + control; where the logic component expresses rules, operations, axioms and coherent instructions. These instructions may be used in the computation and control, while the decision-making components determine the way in which deduction is applied to the axioms according to the rules as it applies to instructions.
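The formula "Algorithm = logic + control" is usually credited to Robert Kowalski. A minimal sketch of the split, assuming hypothetical rules borrowed from the spaghetti analogy earlier in this post: the rule list is the logic (what follows from what), and `forward_chain` is one possible control strategy (fire any rule whose premises are all known, until nothing new appears). A different control strategy, such as goal-directed backward chaining, could run over the same rules unchanged.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion)

# Logic: what follows from what. Hypothetical rules from the spaghetti analogy.
RULES: List[Rule] = [
    (frozenset({"water", "pot", "heat"}), "boiling water"),
    (frozenset({"boiling water", "spaghetti"}), "cooked spaghetti"),
    (frozenset({"tomatoes", "heat"}), "tomato sauce"),
    (frozenset({"cooked spaghetti", "tomato sauce"}), "dish"),
]


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Control: repeatedly fire rules whose premises are all known, until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


pantry = {"water", "pot", "heat", "spaghetti", "tomatoes"}
print("dish" in forward_chain(pantry, RULES))                 # True
print("dish" in forward_chain(pantry - {"tomatoes"}, RULES))  # False
```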

The ribosome is a biological machine whose construction involves nearly 200 proteins (assembly factors) that assist in assembly operations; the mature ribosome itself is composed of 4 RNA molecules and 78 ribosomal proteins. This complex of proteins and RNAs collectively produces a new function that is greater than the individual functionality of the proteins and RNAs that compose it.

The DNA (source data), RNA (edited mRNA), large and small RNA components of ribosomal RNA, ribosomal protein, tRNA, aminoacyl-tRNA synthetase enzymes, and "manufactured" protein (ribosome output) are part of this one way, irreversible bridge contained in the central dogma of molecular biology.

One of the greatest enigmas of molecular biology is how codonic linear digital programming is not only able to anticipate what the Gibbs free energy folding will be, but it actually prescribes that eventual folding through its sequencing of amino acids. Much the same as a human engineer, the nonphysical, formal PI instantiated into linear digital codon prescription makes use of physical realities like thermodynamics to produce the needed globular molecular machines.

The functional operation of the ribosome consists of logical structures and control that obeys the rules for an algorithm. The simplest element of logical structure in an algorithm is a linear sequence. A linear sequence consists of one instruction or datum, followed immediately by another as is evident in the linear arrangement of codons that make up the genes of the DNA.
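The "linear sequence" structure can be illustrated by reading one mRNA string as consecutive, non-overlapping triplets. The function name `read_codons` and the example sequence are hypothetical. The sketch also shows why the starting point matters: shifting the start by one base (a different reading frame) yields entirely different codons from the same letters.

```python
from typing import List


def read_codons(mrna: str, frame: int = 0) -> List[str]:
    """Split a linear sequence into consecutive, non-overlapping triplets starting at `frame`."""
    return [mrna[i:i + 3] for i in range(frame, len(mrna) - 2, 3)]


message = "AUGUUUGGGUAA"  # hypothetical mRNA fragment
for frame in range(3):
    print(frame, read_codons(message, frame))
# 0 ['AUG', 'UUU', 'GGG', 'UAA']
# 1 ['UGU', 'UUG', 'GGU']
# 2 ['GUU', 'UGG', 'GUA']
```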

The mRNA (which is itself a product of the gene copy and editor subroutine) is a necessary input which is formatted by grammatical rules.

Top-down causation by information control: from a philosophical problem to a scientific research programme

https://sci-hub.st/https://royalsocietypublishing.org/doi/10.1098/rsif.2008.0018

https://reasonandscience.catsboard.com/t3061-the-algorithmic-origins-of-life

1. Creating a recipe to make a cake is always a mental process. Creating a blueprint to make a machine is always a mental process.

2. To suggest that a physical process can create instructional assembly information, a recipe or a blueprint, is like suggesting that a throwing ink on paper will create a blueprint. It is never going to happen!

3. Physics and chemistry alone do not possess the tools to create a concept, or functional complex machines made of interlocked parts for specific purposes

4. The only cause capable of creating conceptual semiotic information is a conscious intelligent mind.

5. DNA stores codified information to make proteins, and cells, which are chemical factories in a literal sense.

https://biosemiosis.net/?fbclid=IwAR1xTZ-JWsSeSaTHqN5MmsaXpPN6dlFQz4liWCcOYzt6ugib-TVWv9y8YIE

Information is not a tangible entity, it has no energy and no mass, it is not physical, it is conceptual.

- Life is a software/information-driven process.

- Information is not physical it is conceptual.

- The only known source of semiotic information is prior to intelligence.

- Life is therefore the direct product of a deliberate creative intellectual process.

Semiotic functional information is not a tangible entity, and as such, it is beyond the reach of, and cannot be created by any undirected physical process.

This is not an argument about probability. Conceptual semiotic information is simply beyond the sphere of influence of any undirected physical process. To suggest that a physical process can create semiotic code is like suggesting that a rainbow can write poetry... it is never going to happen! Physics and chemistry alone do not possess the tools to create a concept. The only cause capable of creating conceptual semiotic information is a conscious intelligent mind.

Life is no accident, the vast quantity of semiotic information in life provides powerful positive evidence that we have been designed.

To quote one scientist working at the cutting edge of our understanding of the programming information in biology, he described what he saw as an “alien technology written by an engineer a million times smarter than us”

If you convert the idea to a sentence to communicate (as I do here) or to remember it, that sentence may be physical, yet is dependent upon the non-physical idea, which is in no way dependent upon it.

Howard Hunt Pattee: Evolving Self-reference: Matter, Symbols, and Semantic Closure 28 August 2012

Von Neumann noted that in normal usages matter and symbol are categorically distinct, i.e., neurons generate pulses, but the pulses are not in the same category as neurons; computers generate bits, but bits are not in the same category as computers, measuring devices produce numbers, but numbers are not in the same category as devices, etc. He pointed out that normally the hardware machine designed to output symbols cannot construct another machine, and that a machine designed to construct hardware cannot output a symbol. Von Neumann also observed that there is a “completely decisive property of complexity,” a threshold below which organizations degenerate and above which open-ended complication or emergent evolution is possible. Using a loose analogy with universal computation, he proposed that to reach this threshold requires a universal construction machine that can output any particular material machine according to a symbolic description of the machine. Self-replication would then be logically possible if the universal constructor is provided with its own description as well as means of copying and transmitting this description to the newly constructed machine.

https://link.springer.com/chapter/10.1007/978-94-007-5161-3_14

Information is not physical

https://arxiv.org/pdf/1402.2414.pdf

Information is a disembodied abstract entity independent of its physical carrier. ”Information is always tied to a physical representation. It is represented by engraving on a stone tablet, a spin, a charge, a hole in a punched card, a mark on paper, or some other equivalent. This ties the handling of information to all the possibilities and restrictions of our real physical word, its laws of physics and its storehouse”. However, the legitimate questions concern the physical properties of information carriers like ”stone tablet, a spin, a charge, a hole in a punched card, a mark on paper”, but not the information itself. Information is neither classical nor quantum, it is independent of the properties of physical systems used to its processing.

An algorithm is a finite sequence of well-defined, computer-implementable instructions resulting in precise intended functions. A prescriptive algorithm in biological context can be described as performing control operations using rules, axioms and coherent instructions. These instructions are performed, using a linear, digital, cybernetic string of symbols representing syntactic, semantic and pragmatic prescriptive information.

Cells host algorithmic programs for cell division, cell death, enzymes pre-programmed to perform DNA splicing, programs for dynamic changes of gene expression in response to the changing environment. Cells use pre-programmed adaptive responses to genomic stress, pre-programmed genes for fetal development regulation, temporal programs for genome replication, pre-programmed animal genes dictating behaviors including reflexes and fixed action patterns, pre-programmed biological timetables for aging etc.

A programming algorithm is like a recipe that describes the exact steps needed to solve a problem or reach a goal. We've all seen food recipes - they list the ingredients needed and a set of steps for how to make a meal. Well, an algorithm is just like that. A programming algorithm describes how to do something, and it will be done exactly that way every time.

Okay, you probably wish you could see an example, how that works in the cell, right? Lets make an analogy. Lets suppose you have a receipe to make spaghetti with a special tomato sauce written on a word document saved on your computer. You have a japanese friend, and only communicate with him using the google translation program. Now he wants to try out that receipe, and asks you to send him a copy. So you write an email, annex the word document, and send it to him. When he receives it, he will use google translate, and get the receipe in japanese, written in kanji, in logographic japanese characters which he understands. With the information at hand, he can make the spaghetti with that fine special tomato souce exactly as described in the receipe. In order for that communication to happen, you use at your end 26 letters from the alphabet to write the receipe, and your friend has 2,136 kanji characters that permits him to understand the receipe in Japanese. Google translate does the translation work.

While your recipe is written in a Word document saved on your computer, in the cell the recipe (the instructions, or master plan) for the construction of proteins, the life-essential molecular machines and veritable workhorses of the cell, is written in genes made of DNA. While you use the 26 letters of the alphabet to write your recipe, the cell uses DNA, built from deoxyribonucleotides: four monomer "letters". Kanji has 2,136 characters, the alphabet uses 26, and binary computer code uses two, 0 and 1. The language of DNA is digital, but not binary. Where binary encoding has 0 and 1 to work with (two symbols, hence the "bi" in binary), DNA uses four different organic bases: adenine (A), guanine (G), cytosine (C) and thymine (T).
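To make the "digital, but not binary" point concrete, here is a minimal sketch using only the Python standard library. It computes the maximum information per symbol (log2 of the alphabet size) for the alphabets mentioned above, and packs a DNA string into two bits per base. The bit pattern assigned to each base is an arbitrary, hypothetical choice for illustration; nothing in the cell uses it.

```python
import math

# Hypothetical 2-bit mapping; the assignment of bit pairs to bases is arbitrary.
BASE_TO_BITS = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def bits_per_symbol(alphabet_size: int) -> float:
    """Maximum information per symbol, in bits, for an alphabet of this size."""
    return math.log2(alphabet_size)


def pack(seq: str) -> int:
    """Pack a DNA string into an integer, two bits per base."""
    value = 0
    for base in seq:
        value = (value << 2) | BASE_TO_BITS[base]
    return value


def unpack(value: int, length: int) -> str:
    """Recover a DNA string of known length from its packed integer."""
    bases = []
    for _ in range(length):
        bases.append(BITS_TO_BASE[value & 0b11])  # lowest two bits = last base
        value >>= 2
    return "".join(reversed(bases))


print(bits_per_symbol(2))     # binary: 1.0
print(bits_per_symbol(4))     # DNA: 2.0
print(bits_per_symbol(26))    # Latin alphabet: about 4.70
print(bits_per_symbol(2136))  # kanji set above: about 11.06
print(unpack(pack("GATTACA"), 7))
```

The length has to be stored alongside the packed integer, because leading "A" bases (bit pattern 00) would otherwise be indistinguishable from nothing.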

DNA stores genetic information in codons, which are equivalent to words; each codon is a sequence of three nucleotides. While you used sentences to write the spaghetti recipe, the equivalent sentences in the cell are genes, written with codon "words". With four possible nucleobases, three positions give 4^3 = 64 different possible "words" (trinucleotide sequences). In the standard genetic code, three of these 64 codons (UAA, UAG and UGA in mRNA, corresponding to TAA, TAG and TGA in DNA) are stop codons.
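The codon arithmetic can be checked by plain enumeration. This sketch lists every triplet over the mRNA alphabet (U, C, A, G), sets aside the three stop codons of the standard genetic code, and splits a short reading frame into codons. The helper name `split_codons` is hypothetical, chosen for illustration.

```python
from itertools import product

BASES = "UCAG"  # mRNA alphabet: uracil (U) takes the place of thymine (T)
STOP_CODONS = {"UAA", "UAG", "UGA"}  # stop codons of the standard genetic code

# Every ordered triplet of the four bases is a possible codon "word".
codons = ["".join(triplet) for triplet in product(BASES, repeat=3)]
sense_codons = [c for c in codons if c not in STOP_CODONS]


def split_codons(frame: str) -> list:
    """Split a reading frame into consecutive, non-overlapping triplets,
    dropping any incomplete triplet at the end."""
    usable = len(frame) - len(frame) % 3
    return [frame[i:i + 3] for i in range(0, usable, 3)]


print(len(codons))                # 64 = 4 ** 3
print(len(sense_codons))          # 61 codons left to specify amino acids
print(split_codons("AUGGCCUAA"))  # ['AUG', 'GCC', 'UAA']
```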

There has to be a mechanism to extract the information in the genome and send it to the ribosome, the factory that makes proteins, which is located elsewhere in the cell, free-floating in the cytoplasm. The message contained in the genome is transcribed by a very complex molecular machine called RNA polymerase. It makes a transcript, a copy of the message in the genome, and that transcript is sent to the ribosome. That transcript is called messenger RNA, or mRNA for short.
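The sequence rule behind transcription can be sketched in a few lines, assuming the DNA template strand is written 5' to 3'. RNA polymerase reads the template in the 3' to 5' direction and lays down complementary RNA bases, with U in place of T, so the mRNA equals the reverse complement of the template. This models only the base-pairing rule, not the polymerase machinery, promoters or RNA processing; the function names are hypothetical.

```python
# Base laid down in RNA opposite each DNA template base (U replaces T in RNA).
DNA_TO_RNA_COMPLEMENT = {"A": "U", "T": "A", "G": "C", "C": "G"}


def transcribe(template_5_to_3: str) -> str:
    """Return the mRNA (5'->3') copied from a DNA template strand given 5'->3'.

    The polymerase reads the template 3'->5', so we walk it in reverse
    and complement each base.
    """
    return "".join(DNA_TO_RNA_COMPLEMENT[b] for b in reversed(template_5_to_3))


def coding_strand_to_mrna(coding_5_to_3: str) -> str:
    """Shortcut: the mRNA matches the coding (non-template) strand, with T -> U."""
    return coding_5_to_3.replace("T", "U")


coding = "ATGGCCTAA"    # coding strand, 5'->3'
template = "TTAGGCCAT"  # its reverse complement: the template strand, 5'->3'

print(transcribe(template))           # AUGGCCUAA
print(coding_strand_to_mrna(coding))  # AUGGCCUAA
```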

In communications and information processing, a code is a system of rules for converting information, such as a letter or word, into another form (another word, letter, etc.). In translation, the 64 genetic codons are assigned to 20 amino acids: 61 codons specify amino acids and the remaining three signal stop. The genetic code is this assignment of codons to amino acids, and it is the cornerstone template underlying the translation process. Assignment here means designating, ascribing, corresponding, correlating.
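The codon-to-amino-acid assignment can be written as a lookup table. This sketch builds the standard genetic code from the conventional compact 64-letter string (one-letter amino acid codes, codons ordered U, C, A, G at each position, `*` for stop) and translates a reading frame until it reaches a stop codon. It deliberately ignores start-codon search, alternative genetic codes, and everything the ribosome physically does.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code in UCAG x UCAG x UCAG order; '*' marks the stop codons.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(mrna: str) -> str:
    """Translate an mRNA reading frame codon by codon, stopping at a stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate("AUGUUUGGCUAA"))               # MFG (Met-Phe-Gly, then stop)
print(len(set(CODON_TABLE.values()) - {"*"}))  # 20 distinct amino acids
```

A table is the natural data structure here because, as the text says, the code is an assignment: the lookup holds the mapping, and the loop only applies it in order.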

The ribosome does basically what Google Translate does. But while Google Translate only delivers the recipe in another language, and our Japanese friend still has to make the spaghetti, the ribosome produces the end product, the protein, in one step.

Imagine the brainpower involved in the entire process, from inventing the spaghetti recipe until the dish is on your Japanese friend's table. What is involved?

1. Imagining the recipe

2. Inventing an alphabet, a language

3. Inventing the medium to write down the message

4. Inventing the medium to store the message

5. Storing the message in the medium

6. Inventing the medium to extract the message

7. Inventing the medium to send the message

8. Inventing the second language (Japanese)

9. Inventing the translation code/cipher from your language into Japanese

10. Making the machine that performs the translation

11. Programming the machine to know both languages, to make the translation

12. Making the translation

13. Making the spaghetti at the other end, using the recipe in Japanese

1. Cells host genetic information.

2. This information prescribes functional outcomes through the right, specified, complex sequence of triplet codons and, ultimately, the translated sequence of amino acid building blocks in protein strings. The sequence of nucleotides in DNA also prescribes highly specific regulatory microRNAs and other epigenetic factors.

3. Algorithms, prescribed functional instructions, digital programming, symbols and coding systems are abstract representations; they are non-physical and always originate from thought, that is, from conscious or intelligent activity.

4. Therefore, genetic and epigenetic information comes from an intelligent mind. Since there was no human mind present to create life, it must have been a supernatural agency.

1. Algorithms, prescribed functional instructions, digital programming, symbols and coding systems are abstract representations; they are non-physical and always originate from thought, that is, from conscious or intelligent activity.

2. Genetic and epigenetic information is characterized by prescriptive, codified information, which results in functional outcomes due to the right, specified, complex sequence of triplet codons and, ultimately, the translated sequence of amino acid building blocks in protein strings. The sequence of nucleotides in DNA also prescribes highly specific regulatory microRNAs and other epigenetic factors.

3. Therefore, genetic and epigenetic information comes from an intelligent mind. Since there was no human mind present to create life, it must have been a supernatural agency.

Three subsets of sequence complexity and their relevance to biopolymeric information

https://link.springer.com/article/10.1186/1742-4682-2-29

An algorithm is a finite sequence of well-defined, computer-implementable instructions. Genetic algorithms instruct sophisticated biological organization. Three qualitative kinds of sequence complexity exist: random (RSC), ordered (OSC), and functional (FSC). FSC alone provides algorithmic instruction: a linear, digital, cybernetic string of symbols representing syntactic, semantic and pragmatic prescription, in which each successive sign is a representation of a decision-node configurable switch-setting, a specific selection for function. Selection, specification, or signification of certain "choices" in FSC sequences results only from nonrandom selection.
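Random and ordered sequence complexity can be roughly told apart by compressibility. The sketch below assumes zlib compression as a practical stand-in for algorithmic (Kolmogorov) complexity, which cannot be computed exactly; the sequences are synthetic, not biological data. A short rule repeated (OSC-like) compresses far better than a random string (RSC-like). Note that a measure like this says nothing about whether a sequence does anything, which is the gap the FSC category is meant to address.

```python
import random
import zlib


def compressed_fraction(seq: str) -> float:
    """Compressed size divided by original size: a rough proxy for
    algorithmic compressibility (lower means more regular)."""
    raw = seq.encode("ascii")
    return len(zlib.compress(raw, 9)) / len(raw)


rng = random.Random(42)  # fixed seed so the run is repeatable
random_seq = "".join(rng.choice("ACGT") for _ in range(1000))  # RSC-like
ordered_seq = "ACGT" * 250  # OSC-like: one short rule, repeated

print(f"random : {compressed_fraction(random_seq):.2f}")
print(f"ordered: {compressed_fraction(ordered_seq):.2f}")
```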

Nucleotides are grouped into triplet Hamming block codes, each of which represents a certain amino acid. No direct physicochemical causative link exists between codon and its symbolized amino acid in the physical translative machinery. Physics and chemistry do not explain why the "correct" amino acid lies at the opposite end of tRNA from the appropriate anticodon. Physics and chemistry do not explain how the appropriate aminoacyl tRNA synthetase joins a specific amino acid only to a tRNA with the correct anticodon on its opposite end. Genes are not analogous to messages; genes are messages. Genes are literal programs. They are sent from a source by a transmitter through a channel. Prescriptive sequences are called "instructions" and "programs." They are not merely complex sequences. They are algorithmically complex sequences. They are cybernetic.
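The codon-anticodon relationship can be sketched as follows, assuming strict Watson-Crick pairing and ignoring wobble pairing and modified tRNA bases. The anticodon pairs antiparallel with the codon, so, written 5' to 3', it is the reverse complement of the codon. The charging table is a small, hypothetical excerpt: which amino acid a tRNA carries is set by its aminoacyl-tRNA synthetase, so the code models it as a separate lookup rather than something computed from the anticodon.

```python
# Watson-Crick complements within RNA.
RNA_COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}


def anticodon(codon: str) -> str:
    """Anticodon (5'->3') that pairs antiparallel with an mRNA codon (5'->3')."""
    return "".join(RNA_COMPLEMENT[b] for b in reversed(codon))


# Hypothetical partial charging table: anticodon -> amino acid carried by that tRNA.
# In the cell, this pairing is enforced by aminoacyl-tRNA synthetases.
CHARGING = {"CAU": "Met", "GAA": "Phe", "GCC": "Gly"}


def amino_acid_for(codon: str) -> str:
    """Find the tRNA whose anticodon pairs with the codon, then read its cargo."""
    return CHARGING[anticodon(codon)]


print(anticodon("AUG"))       # CAU
print(amino_acid_for("UUC"))  # Phe
```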

Leroy Hood, The digital code of DNA, 23 January 2003

The discovery of the double helix in 1953 immediately raised questions about how biological information is encoded in DNA. A remarkable feature of the structure is that DNA can accommodate almost any sequence of base pairs — any combination of the bases adenine (A), cytosine (C), guanine (G) and thymine (T) — and, hence any digital message or information.

https://www.nature.com/articles/nature01410

Translation occurs after the messenger RNA (mRNA) has carried the transcribed ‘message’ from the DNA to protein-making factories in the cell, called ribosomes.

The message carried by the mRNA is read by a carrier molecule called transfer RNA (tRNA).

https://www.yourgenome.org/facts/what-is-gene-expression

The capabilities of chaos and complexity.

http://europepmc.org/article/PMC/2662469

Do symbol systems exist outside of human minds?

Molecular biology’s two-dimensional complexity (secondary biopolymeric structure) and three-dimensional complexity (tertiary biopolymeric structure) are both ultimately determined by linear sequence complexity (primary structure; functional sequence complexity, FSC). The codon table is arbitrary and formal, not physical. The linking of each tRNA with the correct amino acid depends entirely upon a completely independent family of tRNA aminoacyl synthetase proteins. Each of these synthetases must be specifically prescribed by separate linear digital programming, but using the same MSS. These symbol and coding systems not only predate human existence, they produced humans along with their anthropocentric minds.

The image above shows the prescriptive coding of a section of DNA. Each letter represents a choice from an alphabet of four options. The particular sequencing of letter choices prescribes the sequence of triplet codons and ultimately the translated sequencing of amino acid building blocks into protein strings. The sequencing of nucleotides in DNA also prescribes highly specific regulatory micro RNAs and other epigenetic factors. Thus linear digital instructions program cooperative and holistic metabolic proficiency.

Chaos is neither organized nor a true system, let alone “self-organized.” A bona fide system requires organization. Chaos by definition lacks organization. What formal function does a hurricane, for example, perform? It doesn’t DO anything constructive or formally functional because it contains no formal organizational components. It has no programming talents or creative instincts. A hurricane is not a participant in Decision Theory. A hurricane does not set logic gates according to arbitrary rules of inference. A hurricane has no specifically designed dynamically-decoupled configurable switches. No means exists to instantiate formal choices or function into physicality. A highly self-ordered hurricane does nothing but destroy organization. The same applies to any unguided, random natural event.

The capabilities of stand-alone chaos, complexity, self-ordered states, natural attractors, fractals, drunken walks, complex adaptive systems, and other subjects of nonlinear dynamic models are often inflated. Scientific mechanism must be provided for how purely physicodynamic phenomena can program decision nodes, optimize algorithms, set configurable switches so as to achieve integrated circuits, achieve computational halting, and organize otherwise unrelated chemical reactions into a protometabolism. We know only of conscious or intelligent agents able to provide such things.

Information and the Nature of Reality: From Physics to Metaphysics, page 149

The concept of information has been a victim of a philosophical impasse that has a long and contentious history: the problem of specifying the ontological status of the representations or contents of our thoughts. How can the content (aka meaning, reference, significant aboutness) of a sign or thought have any causal efficacy in the world if it is by definition not intrinsic to whatever physical object or process represents it?

Consider the classic example of a wax impression left by a signet ring in wax. Except for the mind that interprets it, the wax impression is just wax, the ring is just a metallic form, and their conjunction at a time when the wax was still warm and malleable was just a physical event in which one object alters another when they are brought into contact. Something more makes the wax impression a sign that conveys information. It must be interpreted by someone.

In order to develop a full scientific understanding of information we will be required to give up thinking about it, even metaphorically, as some artifact or commodity. To make sense of the implicit representational function that distinguishes information from other merely physical relationships, we will need to find a precise way to characterize its defining nonintrinsic feature – its referential content – and show how it can be causally efficacious despite its physical absence. The enigmatic status of this relationship was eloquently, if enigmatically, framed by Brentano’s use of the term “inexistence” when describing mental phenomena.

Signature in the Cell, Stephen Meyer, page 16

What humans recognize as information certainly originates from thought—from conscious or intelligent activity. A message received via fax by one person first arose as an idea in the mind of another. The software stored and sold on a compact disc resulted from the design of a software engineer. The great works of literature began first as ideas in the minds of writers—Tolstoy, Austen, or Donne. Our experience of the world shows that what we recognize as information invariably reflects the prior activity of conscious and intelligent persons.

We now know that we do not just create information in our own technology; we also find it in our biology—and, indeed, in the cells of every living organism on earth. But how did this information arise? The age-old conflict between the mind-first and matter-first world-views cuts right through the heart of the mystery of life’s origin. Can the origin of life be explained purely by reference to material processes such as undirected chemical reactions or random collisions of molecules?

The algorithmic origins of life

https://royalsocietypublishing.org/doi/full/10.1098/rsif.2012.0869

The key distinction between the origin of life and other ‘emergent’ transitions is the onset of distributed information control, enabling context-dependent causation, where an abstract and non-physical systemic entity (algorithmic information) effectively becomes a causal agent capable of manipulating its material substrate.

Biological information is functional because of its specific sequence. A variety of terms have been employed for measuring functional biological information: complex specified information (CSI), Functional Sequence Complexity (FSC), and instructional complex information. I like the term instructional because it accurately describes what is being done, namely instructing the right sequence of amino acids to make proteins, and also the sequences of RNAs used for gene regulation and for a variety of as yet unexplored functions.

Another term is prescriptive information (PI). It also accurately describes what genes do: they prescribe how proteins have to be assembled. But it also smuggles in a meaning that is highly disputed between proponents of intelligent design and of unguided evolution. Prescribing implies that an intelligent agency preordained the nucleotide sequence so that it would be functional. The following paper states:

Dichotomy in the definition of prescriptive information suggests both prescribed data and prescribed algorithms: biosemiotics applications in genomic systems

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3319427/

Biological information frequently manifests its “meaning” through instruction or actual production of formal bio-function. Such information is called Prescriptive Information (PI). PI programs organize and execute a prescribed set of choices. Closer examination of this term in cellular systems has led to a dichotomy in its definition suggesting both prescribed data and prescribed algorithms are constituents of PI. This paper looks at this dichotomy as expressed in both the genetic code and in the central dogma of protein synthesis. An example of a genetic algorithm is modeled after the ribosome, and an examination of the protein synthesis process is used to differentiate PI data from PI algorithms.

Both the method used to combine several genes together to produce a molecular machine and the operational logic of the machine are examples of an algorithm. Molecular machines are a product of several polycodon instruction sets (genes) and may be operated upon algorithmically. But what process determines what algorithm to execute?

In addition to algorithm execution, there needs to be an assembly algorithm. Any manufacturing engineer knows that nothing (in production) is built without plans that precisely define orders of operations to properly and economically assemble components to build a machine or product. There must be by necessity, an order of operations to construct biological machines. This is because biological machines are neither chaotic nor random, but are functionally coherent assemblies of proteins/RNA elements. A set of operations that govern the construction of such assemblies may exist as an algorithm which we need to discover. It details real biological processes that are operated upon by a set of rules that define the construction of biological elements both in a temporal and physical assembly sequence manner.

An Algorithm is a set of rules or procedures that precisely defines a finite sequence of operations. These instructions prescribe a computation or action that, when executed, will proceed through a finite number of well-defined states that leads to specific outcomes. In this context an algorithm can be represented as: Algorithm = logic + control; where the logic component expresses rules, operations, axioms and coherent instructions. These instructions may be used in the computation and control, while decision-making components determines the way in which deduction is applied to the axioms according to the rules as it applies to instructions.

A ribosome is a biological machine consisting of nearly 200 proteins (assembly factors) that assist in assembly operations, along with 4 RNA molecules and 78 ribosomal proteins that compose a mature ribosome. This complex of proteins and RNAs collectively produce a new function that is greater than the individual functionality of proteins and RNAs that compose it.

The DNA (source data), RNA (edited mRNA), large and small RNA components of ribosomal RNA, ribosomal protein, tRNA, aminoacyl-tRNA synthetase enzymes, and "manufactured" protein (ribosome output) are part of this one way, irreversible bridge contained in the central dogma of molecular biology.

One of the greatest enigmas of molecular biology is how codonic linear digital programming is not only able to anticipate what the Gibbs free energy folding will be, but it actually prescribes that eventual folding through its sequencing of amino acids. Much the same as a human engineer, the nonphysical, formal PI instantiated into linear digital codon prescription makes use of physical realities like thermodynamics to produce the needed globular molecular machines.

The functional operation of the ribosome consists of logical structures and control that obeys the rules for an algorithm. The simplest element of logical structure in an algorithm is a linear sequence. A linear sequence consists of one instruction or datum, followed immediately by another as is evident in the linear arrangement of codons that make up the genes of the DNA.

The mRNA (which is itself a product of the gene copy and editor subroutine) is a necessary input which is formatted by grammatical rules.

Top-down causation by information control: from a philosophical problem to a scientific research programme

https://sci-hub.st/https://royalsocietypublishing.org/doi/10.1098/rsif.2008.0018

Last edited by Otangelo on Sat Jul 09, 2022 12:44 pm; edited 13 times in total