https://reasonandscience.catsboard.com/t2363-the-genetic-code-insurmountable-problem-for-non-intelligent-origin

Eugene V. Koonin: Origin and evolution of the genetic code: the universal enigma 2012 Mar 5

In our opinion, despite extensive and, in many cases, elaborate attempts to model code optimization, ingenious theorizing along the lines of the coevolution theory, and considerable experimentation, very little definitive progress has been made. Summarizing the state of the art in the study of the code evolution, we cannot escape considerable skepticism. It seems that the two-pronged fundamental question: “why is the genetic code the way it is and how did it come to be?”, that was asked over 50 years ago, at the dawn of molecular biology, might remain pertinent even in another 50 years. Our consolation is that we cannot think of a more fundamental problem in biology.

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3293468/

In a paper published by Omachi et al. (2023), the authors investigated the robustness of the standard genetic code (SGC) by exploring its position in a theoretical "fitness landscape," in which different genetic codes are evaluated for their ability to minimize the effects of mutations and translation errors. Using an advanced multicanonical Monte Carlo sampling technique, the authors sampled a much broader range of genetic codes than earlier studies (including one from 2015), which often relied on biased evolutionary algorithms. The researchers estimated that only about one in 10^20 random codes surpasses the SGC in robustness, far rarer than previous estimates, which suggested one in a million codes. 1

Charles W. Carter, Jr. (2015): Inheritance, catalysis, and coding can be viewed fundamentally as problems of emerging specificity. The universal genetic code is highly specific, and there has been no way to account for its gradual emergence by phenotypic selection from among more simply coded peptides. The absence of transitional links between earlier intermolecular interactions and the triplet code is a fundamental stumbling block that has continued to justify the questionable conclusion that a biologically sufficient set of functional RNA molecules arose by themselves, providing all informational continuity and catalysis [6] necessary to produce the code, without then leaving a trace behind in the phylogenetic record.

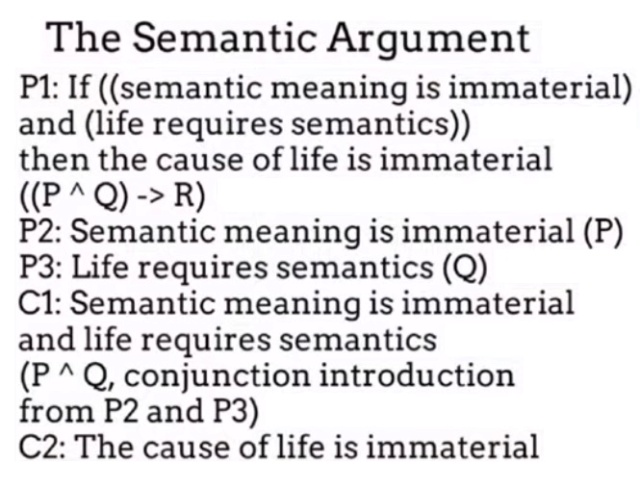

Life requires inheritance, catalysis, and coding

1. Creating a translation dictionary, for example from English to Chinese, always requires a translator who understands both languages.

2. Assigning the words of one language to the words of another language that mean the same requires an agreement on meaning before translation can be established.

3. That is analogous to what we see in biology, where the ribosome translates the words of the genetic language, composed of 64 codons, into the language of proteins, composed of 20 amino acids.

4. The origin of such complex communication systems is best explained by an intelligent designer.
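The codon-to-amino-acid mapping in point 3 can be made concrete with a short Python sketch. It assumes the standard code as tabulated in NCBI translation table 1 (bases ranked U, C, A, G; '*' for stop) and a simplified ribosome that reads non-overlapping triplets from the first base. `STANDARD_CODE` and `translate` are illustrative names, not part of any library.

```python
from itertools import product

# Standard genetic code (NCBI translation table 1). Codons are listed with
# bases ranked U, C, A, G at each position (UUU, UUC, UUA, UUG, UCU, ...);
# '*' marks a stop codon.
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

STANDARD_CODE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}

def translate(mrna: str) -> str:
    """Read non-overlapping triplets from the first base and return the
    one-letter amino acid sequence, stopping at the first stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        aa = STANDARD_CODE[mrna[i:i + 3]]
        if aa == "*":           # stop codon: end of the protein
            break
        protein.append(aa)
    return "".join(protein)

print(len(STANDARD_CODE), len(set(AMINO_ACIDS) - {"*"}))  # 64 20
print(translate("AUGUUUGGCUGGUAAGCU"))                      # MFGW
```

The message in the usage line is hypothetical: AUG UUU GGC UGG is read as Met-Phe-Gly-Trp, and the UAA that follows halts translation before the trailing GCU.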

Alberts et al.: Molecular Biology of the Cell, p. 367

The relationship between a sequence of DNA and the sequence of the corresponding protein is called the genetic code…the genetic code is deciphered by a complex apparatus that interprets the nucleic acid sequence. …the conversion of the information in [messenger] RNA represents a translation of the information into another language that uses quite different symbols.

Florian Kaiser: The structural basis of the genetic code: amino acid recognition by aminoacyl-tRNA synthetases 28 July 2020

One of the most profound open questions in biology is how the genetic code was established. The emergence of this self-referencing system poses a chicken-or-egg dilemma and its origin is still heavily debated.

https://www.nature.com/articles/s41598-020-69100-0

Patrick J. Keeling: Genomics: Evolution of the Genetic Code September 26, 2016

Understanding how this code originated and how it affects the molecular biology and evolution of life today are challenging problems, in part because it is so highly conserved — without variation to observe it is difficult to dissect the functional implications of different aspects of a character.

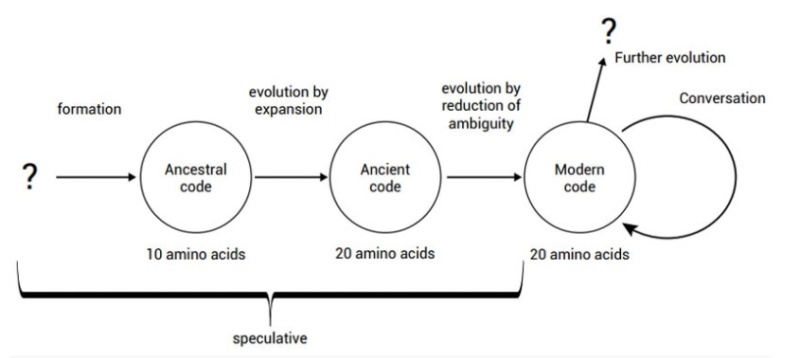

It is tempting to think that a system so central to life should be elegant, but of course that’s not how evolution works; the genetic code was not designed by clever scientists, but rather built through a series of contingencies. The ‘frozen accident’, as it was described by Crick, that ultimately emerged is certainly non-random, but is more of a mishmash than an elegant plan, which led to new ideas about how the code may have evolved in a series of steps from simpler codes with fewer amino acids. So the code was not always thus, but once it was established before the last universal common ancestor of all extant life (LUCA) it has remained under very powerful selective constraints that kept the code frozen in nearly all genomes that subsequently diversified.

https://sci-hub.st/https://www.sciencedirect.com/science/article/pii/S0960982216309174

My comment: A series of contingencies! More of a mishmash than an elegant plan! Contingent means accidental, incidental, adventitious, casual, chance. So, in other words, luck: a fortuitous accident. Is that a rational proposition?

The genetic codons are assigned to amino acids. Why should or would molecules designate, dictate, ascribe, correspond, correlate, or specify anything at all? How does that make sense? The genetic code could not be the product of evolution, since it had to be fully operational when life started (and so did DNA replication, upon which evolution depends). The only alternative to design is that random, unguided events originated it.

Marcello Barbieri Code Biology February 2018

"...there is no deterministic link between codons and amino acids because any codon can be associated with any amino acid. This means that the rules of the genetic code do not descend from chemical necessity and in this sense they are arbitrary." "...we have the experimental evidence that the genetic code is a real code, a code that is compatible with the laws of physics and chemistry but is not dictated by them."

https://www.sciencedirect.com/journal/biosystems/vol/164/suppl/C

[Comment on other biological codes]: "In signal transduction, in short, we find all the essential components of a code: (a) two independent worlds of molecules (first messengers and second messengers), (b) a set of adaptors that create a mapping between them, and (c) the proof that the mapping is arbitrary because its rules can be changed in many different ways."

RNAs (if they existed prebiotically at all) would simply lie around and disintegrate within a short period of time (a month or so). Even setting aside that the prebiotic synthesis of RNAs HAS NEVER BEEN DEMONSTRATED IN THE LAB, they would not polymerize. Clay experiments have failed. And even IF they bound to small peptides in GC-rich configurations, they would likewise simply lie around and disintegrate. In ANY scenario it is a far stretch to believe that unguided events would randomly produce codes. That is simply putting far too much faith in what molecules on their own are capable of doing.

My comment: Without stop codons, the translation machinery would not know where to end protein synthesis, there could never be functional proteins, and there would be no life on earth. At all. These characteristics may render such changes more statistically probable, less likely to be deleterious, or both. However, most non-canonical genetic codes are inferred from DNA sequence alone, or occasionally DNA sequences and corresponding tRNAs.

Why should or would molecules promote, designate, assign, dictate, ascribe, correspond, correlate, or specify anything at all? How does that make sense? This is not an argument from incredulity. The proposition defies reasonable principles and the known, limited, and unspecific range of what chance, physical necessity, mutations, and natural selection can do. What we need is a *plausible* account of how it came to be in the first place. In ANY scenario it is a far stretch to believe that unguided random events would produce a functional code system and arbitrary assignments of meaning. That is simply putting far too much faith in what molecules on their own are capable of doing. Systems, given energy and left to themselves, DEVOLVE to give uselessly complex mixtures, “asphalts”. The literature reports (to our knowledge) exactly ZERO CONFIRMED OBSERVATIONS where molecule complexification emerged spontaneously from a pool of random chemicals. It is IMPOSSIBLE for any non-living chemical system to escape devolution to enter into the world of the “living”.

Eugene V. Koonin (2009): Given that many of the same codons are reassigned (compared to the standard code) in independent lineages (e.g., the most frequent change is the reassignment of the stop codon UGA to tryptophan), this conclusion implies that there should be a predisposition towards certain changes; at least one of these changes was reported to confer a selective advantage.

The origin of the genetic code is acknowledged to be a major hurdle in the origin of life, and I shall mention just one or two of the main problems. Calling it a ‘code’ can be misleading because of associating it with humanly invented codes, which at their core usually involve some sort of pre-conceived algorithm, whereas the genetic code is implemented entirely mechanistically – through the action of biological macromolecules. This emphasises that, to have arisen naturally – e.g. through random mutation and natural selection – no forethought is allowed: all of the components would need to have arisen in an opportunistic manner.
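The UGA-to-tryptophan reassignment Koonin mentions can be illustrated by translating the same message under two tables. This is a minimal Python sketch: the variant table simply overrides one entry of the standard code (NCBI translation table 1), and the names `reassign` and `uga_trp` and the message are hypothetical.

```python
from itertools import product

BASES = "UCAG"
# Standard code (NCBI translation table 1) in UCAG order; '*' = stop
STANDARD = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
STANDARD_CODE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), STANDARD)}

def reassign(code: dict, changes: dict) -> dict:
    """Copy of `code` with some codons given new meanings (original untouched)."""
    variant = dict(code)
    variant.update(changes)
    return variant

def translate(mrna: str, code: dict) -> str:
    """Translate non-overlapping triplets until the first stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        aa = code[mrna[i:i + 3]]
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)

# Hypothetical variant table in which UGA is read as tryptophan (W)
uga_trp = reassign(STANDARD_CODE, {"UGA": "W"})

message = "AUGUGAAAAUAA"                  # hypothetical: AUG UGA AAA UAA
print(translate(message, STANDARD_CODE))  # M    (UGA ends the chain)
print(translate(message, uga_trp))        # MWK  (UGA read as Trp)
```

A single changed entry is enough to turn a premature stop into a read-through, which is why such reassignments are discussed in terms of how deleterious they would be.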

Crucial role of the tRNA activating enzymes

To try to explain the source of the code various researchers have sought some sort of chemical affinity between amino acids and their corresponding codons. But this approach is misguided:

1. First, the code is mediated by tRNAs, which carry the anticodon (complementary to the codon in the mRNA) rather than the codon itself (as it appears in the DNA). So, if the code were based on affinities between amino acids and anticodons, it implies that the two-step process of transcription followed by translation cannot have arisen as a second stage or an improvement on a simpler direct system: the complex two-step process would need to have been in place right from the start.

2. The amino acid has no role in identifying the tRNA or the codon. This can be seen from an experiment in which the amino acid cysteine was bound to its appropriate tRNA in the normal way, using the relevant activating enzyme, and then chemically modified to alanine. When the altered aminoacyl-tRNA was used in an in vitro protein-synthesizing system (including mRNA, ribosomes, etc.), the resulting polypeptide contained alanine (instead of the usual cysteine) wherever the codon UGU occurred in the mRNA. This clearly shows that it is the tRNA alone, with its appropriate anticodon, that matches the codon on the mRNA; the amino acid plays no part. The link between amino acid and tRNA is made by an activating enzyme (aminoacyl-tRNA synthetase), which attaches each amino acid to its appropriate tRNA (clearly requiring this enzyme to correctly identify both components). There are 20 different activating enzymes, one for each type of amino acid.
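The logic of the cysteine-to-alanine experiment can be sketched as a toy decoder in Python. It assumes strict Watson-Crick pairing (no wobble) and a hypothetical three-tRNA pool; `anticodon_for`, `decode` and `trnas` are illustrative names. What it shows is that the "ribosome" only matches codon to anticodon and never inspects the amino acid the tRNA carries.

```python
# Watson-Crick complements for RNA bases
PAIR = {"A": "U", "U": "A", "G": "C", "C": "G"}

def anticodon_for(codon: str) -> str:
    """Anticodon (written 5'->3') that pairs with a codon written 5'->3'."""
    return "".join(PAIR[b] for b in reversed(codon))

def decode(mrna: str, charged_trnas: dict) -> list:
    """Toy ribosome: for each codon, find the tRNA whose anticodon pairs
    with it and add whatever amino acid that tRNA happens to carry.
    The amino acid itself is never checked."""
    chain = []
    for i in range(0, len(mrna) - 2, 3):
        chain.append(charged_trnas[anticodon_for(mrna[i:i + 3])])
    return chain

# Hypothetical tRNA pool: anticodon -> amino acid currently attached
trnas = {
    anticodon_for("AUG"): "Met",
    anticodon_for("UGU"): "Cys",   # charged by the cysteine synthetase
    anticodon_for("GGC"): "Gly",
}

message = "AUGUGUGGCUGU"
print(decode(message, trnas))  # ['Met', 'Cys', 'Gly', 'Cys']

# Chemically convert the Cys already attached to tRNA-Cys into Ala
trnas[anticodon_for("UGU")] = "Ala"
print(decode(message, trnas))  # ['Met', 'Ala', 'Gly', 'Ala']
```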

The Genetic Code (2017): Progressive development of the genetic code is not realistic

In view of the many components involved in implementing the genetic code, origin-of-life researchers have tried to see how it might have arisen in a gradual, evolutionary, manner. For example, it is usually suggested that to begin with the code applied to only a few amino acids, which then gradually increased in number. But this sort of scenario encounters all sorts of difficulties with something as fundamental as the genetic code.

1. First, it would seem that the early codons need only have used two bases (which could code for up to 16 amino acids); but a subsequent change to three bases (to accommodate 20) would seriously disrupt the code. Recognizing this difficulty, most researchers assume that the code used 3-base codons from the outset, which was remarkably fortuitous or implies some measure of foresight on the part of evolution (which, of course, is not allowed).

2. Much more serious are the implications for proteins based on a severely limited set of amino acids. In particular, if the code was limited to only a few amino acids, then it must be presumed that early activating enzymes comprised only that limited set of amino acids, and yet had the necessary level of specificity for reliable implementation of the code. There is no evidence of this; and subsequent reorganization of the enzymes as they made use of newly available amino acids would require highly improbable changes in their configuration. Similar limitations would apply to the protein components of the ribosomes which have an equally essential role in translation.

3. Further, tRNAs tend to have atypical bases which are synthesized in the usual way but subsequently modified. These modifications are carried out by enzymes, so these enzymes too would need to have started life based on a limited number of amino acids; or it has to be assumed that these modifications are later refinements - even though they appear to be necessary for reliable implementation of the code.

4. Finally, what is going to motivate the addition of new amino acids to the genetic code? They would have little if any utility until incorporated into proteins - but that will not happen until they are included in the genetic code. So the new amino acids must be synthesized and somehow incorporated into useful proteins (by enzymes that lack them), and all of the necessary machinery for including them in the code (dedicated tRNAs and activating enzymes) put in place – and all done opportunistically! Totally incredible!

https://evolutionunderthemicroscope.com/ool02.html
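The arithmetic behind point 1 is easy to check: with four bases, doublets give 4^2 = 16 words and triplets 4^3 = 64, and a message written for one word length is parsed into entirely different words under the other. A short Python sketch, using a hypothetical message and illustrative helper names:

```python
def capacity(alphabet_size: int, word_length: int) -> int:
    """Number of distinct words of a given length over an alphabet."""
    return alphabet_size ** word_length

def words(seq: str, k: int) -> list:
    """Split a sequence into non-overlapping words of length k."""
    return [seq[i:i + k] for i in range(0, len(seq) - k + 1, k)]

# 20 amino acids plus a stop signal need at least 21 distinct words
print(capacity(4, 2), capacity(4, 3))   # 16 64

message = "GCUAGCUA"                     # hypothetical message
print(words(message, 2))                 # ['GC', 'UA', 'GC', 'UA']
print(words(message, 3))                 # ['GCU', 'AGC']
```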

Comparison of translation loads for standard and alternative genetic codes

David M. Seaborg Was Wright Right? The Canonical Genetic Code is an Empirical Example of an Adaptive Peak in Nature; Deviant Genetic Codes Evolved Using Adaptive Bridges 2010 Aug 15

The error minimization hypothesis postulates that the canonical genetic code evolved as a result of selection to minimize the phenotypic effects of point mutations and errors in translation.

My comment: How can the authors claim that there was already translation, if translation depends on the genetic code already being set up?

It is likely that the code in its early evolution had few or even a minimal number of tRNAs that decoded multiple codons through wobble pairing, with more amino acids and tRNAs being added as the code evolved.

My comment: Why do the authors claim that the genetic code emerged through evolutionary selective pressures, if at this stage there was no evolution AT ALL? Evolution starts with DNA replication, which DEPENDS on translation being already fully set up. Also, the origin of tRNAs is a huge problem for proponents of abiogenesis, because tRNAs are highly specific and their biosynthesis in modern cells is a highly complex, multistep process requiring many complex enzymes.
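The wobble pairing in the Seaborg quote can be sketched with Crick's wobble rules, under which the first (5') anticodon base may pair with more than one base at the third codon position. This Python sketch covers only those classic rules, including inosine (written "I"), and ignores the many other tRNA base modifications found in real cells; `codons_read` is a hypothetical helper.

```python
# Crick's wobble rules: 5' anticodon base -> 3' codon bases it can pair with
WOBBLE = {
    "C": {"G"},
    "A": {"U"},
    "G": {"C", "U"},
    "U": {"A", "G"},
    "I": {"A", "C", "U"},  # inosine
}
# Standard pairing for the other two positions
PAIR = {"A": "U", "U": "A", "G": "C", "C": "G"}

def codons_read(anticodon: str) -> set:
    """Codons (5'->3') that an anticodon (5'->3') can decode under wobble."""
    wobble_base, middle, last = anticodon
    # Codon position 1 pairs with anticodon position 3, position 2 with 2
    first_two = PAIR[last] + PAIR[middle]
    return {first_two + third for third in WOBBLE[wobble_base]}

print(sorted(codons_read("GAA")))  # ['UUC', 'UUU']  (Phe)
print(sorted(codons_read("IGC")))  # ['GCA', 'GCC', 'GCU']  (Ala)
```

Under these rules one tRNA species can cover two or three codons, which is the sense in which an early code could have managed with few tRNAs.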

(2018) The hypothetical RNA World does not furnish an adequate basis for explaining how this system came into being, but principles of self-organisation that transcend Darwinian natural selection furnish an unexpectedly robust basis for a rapid, concerted transition to genetic coding from a peptide RNA world. The preservation of encoded information processing during the historically necessary transition from any ribozymally operated code to the ancestral aaRS enzymes of molecular biology appears to be impossible, rendering the notion of an RNA Coding World scientifically superfluous. Instantiation of functional reflexivity in the dynamic processes of real-world molecular interactions demanded of nature that it fall upon, or we might say “discover”, a computational “strange loop” (Hofstadter, 1979): a self-amplifying set of nanoscopic “rules” for the construction of the pattern that we humans recognize as “coding relationships” between the sequences of two types of macromolecular polymers. However, molecules are innately oblivious to such abstractions. Many relevant details of the basic steps of code evolution cannot yet be outlined.

Only one fact concerning the RNA World can be established by direct observation: if it ever existed, it ended without leaving any unambiguous trace of itself. Having left no such trace, the latest time of its demise can thus be situated in the period of emergence of the current universal system of genetic coding, a transformative innovation that provided an algorithmic procedure for reproducibly generating identical proteins from patterns in nucleic acid sequences.

Insuperable problems of the genetic code initially emerging in an RNA World

Now observe the colorful just-so stories that the authors come up with to explain the inexplicable:

We can now understand how the self-organised state of coding can be approached “from below”, rather than thinking of molecular sequence computation as existing on the verge of a catastrophic fall over a cliff of errors. In GRT systems, an incremental improvement in the accuracy of translation produces replicase molecules that are more faithfully produced from the gene encoding them. This leads to an incremental improvement in information copying, in turn providing for the selection of narrower genetic quasispecies and an incrementally better encoding of the protein functionalities, promoting more accurate translation.

My comment: This is an entirely unwarranted claim. It is begging the question. There was no translation at this stage, since translation depends on a fully developed and formed genetic code.

The vicious circle can wind up rapidly from below as a self-amplifying process, rather than precipitously winding down the cliff from above. The balanced push-pull tension between these contradictory tendencies stably maintains the system near a tipping point, where, all else being equal, informational replication and translation remain impedance matched – that is, until the system falls into a new vortex of possibilities, such as that first enabled by the inherent incompleteness of the primordial coding “boot block”. Bootstrapped coded translation of genes is a natural feature of molecular processes unique to living systems. Organisms are the only products of nature known to operate an essentially computational system of symbolic information processing. In fact, it is difficult to envisage how alien products of nature found with a similar computational capability, which proved to be necessary for their existence, no matter how primitive, would fail classification as a form of “life”.

My comment: I would rather say it is difficult to envisage how such a complex system could get "off the ground" by natural, unguided means.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2924497/

Massimo Di Giulio The lack of foundation in the mechanism on which are based the Physico-chemical theories for the origin of the genetic code counterposed to the credible and natural mechanism suggested by the coevolution theory 1 April 2016

The majority of theories advanced for explaining the origin of the genetic code maintain that the physico-chemical properties of amino acids had a fundamental role in organizing the structure of the genetic code... but this does not seem to have been the case. The physico-chemical properties of amino acids played only a subsidiary role in organizing the code, and were important only if understood as a manifestation of the catalysis performed by proteins. The mechanism on which the majority of theories based on the physico-chemical properties of amino acids rest is not credible, or at least not satisfactory.

https://sci-hub.ren/10.1016/j.jtbi.2016.04.005

There are enough data to refute the possibility that the genetic code was randomly constructed (“a frozen accident”). For example, the genetic code clusters certain amino acid assignments. Amino acids that share the same biosynthetic pathway tend to have the same first base in their codons. Amino acids with similar physical properties tend to have similar codons.

If the code can be explained by either bottom-up processes (e.g. unknown chemical principles that make the code a necessity) or bottom-up constraints (i.e. a kind of selection process that occurred early in the evolution of life and that favored the code we have now), then we can dispense with the code metaphor. The ultimate explanation for the code has nothing to do with choice or agency; it is ultimately the product of necessity.

In responding to the “code skeptics,” we need to keep in mind that they are bound by their own methodology to explain the origin of the genetic code in non-teleological, causal terms. They need to explain how things happened in the way that they suppose. Thus if a code-skeptic were to argue that living things have the code they do because it is one which accurately and efficiently translates information in a way that withstands the impact of noise, then he/she is illicitly substituting a teleological explanation for an efficient causal one. We need to ask the skeptic: how did Nature arrive at such an ideal code as the one we find in living things today?

https://uncommondescent.com/intelligent-design/is-the-genetic-code-a-real-code/

Genetic code: Lucky chance or fundamental law of nature?

It becomes clear that the information code is intrinsically related to the physical laws of the universe, and thus life may be an inevitable outcome of our universe. The lack of success in explaining the origin of the code and life itself in the last several decades suggest that we miss something very fundamental about life, possibly something fundamental about matter and the universe itself. Certainly, the advent of the genetic code was no “play of chance”.

Open questions:

1. Did the dialects, i.e., the mitochondrial version, with the UGA codon (a stop codon in the universal version) coding for tryptophan and the AUA codon (isoleucine in the universal version) coding for methionine, and that of Candida cylindrica (a fungus), with the CUG codon (leucine in the universal version) coding for serine, appear accidentally or as a result of some kind of selection process?

2. Why is the genetic code represented by the four bases A, T(U), G, and C?

3. Why does the genetic code have a triplet structure?

4. Why is the genetic code not overlapping, that is, why does the translation apparatus of a cell, which decodes information, advance in steps of three rather than one?

5. Why does the degeneracy number of the code vary from one to six for various amino acids?

6. Is the existing distribution of codon degeneracy for particular amino acids accidental or the result of some kind of selection process?

7. Why were only 20 canonical amino acids selected for protein synthesis? Is this very choice of amino acids accidental or the result of some kind of selection process?

8. Why should there be a genetic code at all?

9. Why should there be the emergence of a stereochemical association of a specific, arbitrary codon-anticodon set?

10. Aminoacyl-tRNA synthetases recognize the correct tRNA. How did that recognition emerge, and why?
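Questions 5 and 6 concern the degeneracy of the code, which can be tallied directly from the standard table (NCBI translation table 1, bases ranked U, C, A, G). A minimal Python sketch, with illustrative variable names:

```python
from collections import Counter

# Standard code in UUU, UUC, UUA, UUG, UCU, ... order; '*' marks stop codons
STANDARD = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

degeneracy = Counter(STANDARD)          # one-letter code -> number of codons

# Group amino acids by how many codons they have (stop excluded)
by_size = {}
for aa, n in sorted(degeneracy.items()):
    if aa != "*":
        by_size.setdefault(n, []).append(aa)

for n in sorted(by_size):
    print(n, "".join(by_size[n]))
print("stop codons:", degeneracy["*"])
# 1 MW
# 2 CDEFHKNQY
# 3 I
# 4 AGPTV
# 6 LRS
# stop codons: 3
```

The 61 sense codons thus split into classes of one, two, three, four and six codons per amino acid, with leucine, arginine and serine at the top.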

John Maynard Smith, the British biologist, has described the origin of the code as the most perplexing problem in evolutionary biology (The Major Transitions in Evolution, 1997). With collaborator Eörs Szathmáry he writes:

“The existing translational machinery is at the same time so complex, so universal, and so essential that it is hard to see how it could have come into existence, or how life could have existed without it.” To get some idea of why the code is such an enigma, consider whether there is anything special about the numbers involved. Why does life use twenty amino acids and four nucleotide bases? It would be far simpler to employ, say, sixteen amino acids and package the four bases into doublets rather than triplets. Easier still would be to have just two bases and use a binary code, like a computer. If a simpler system had evolved, it is hard to see how the more complicated triplet code would ever take over. The answer could be a case of “It was a good idea at the time.” Whose good idea? If the code evolved at a very early stage in the history of life, perhaps even during its prebiotic phase, the numbers four and twenty may have been the best way to go for chemical reasons relevant at that stage. Life simply got stuck with these numbers thereafter, their original purpose lost. Or perhaps the use of four and twenty is the optimum way to do it. There is an advantage in life’s employing many varieties of amino acid, because they can be strung together in more ways to offer a wider selection of proteins. But there is also a price: with increasing numbers of amino acids, the risk of translation errors grows. With too many amino acids around, there would be a greater likelihood that the wrong one would be hooked onto the protein chain. So maybe twenty is a good compromise. Do random chemical reactions have the knowledge to arrive at an optimal conclusion, or a “good compromise”?

An even tougher problem concerns the coding assignments—i.e., which triplets code for which amino acids. How did these designations come about? Because nucleic-acid bases and amino acids don’t recognize each other directly, but have to deal via chemical intermediaries, there is no obvious reason why particular triplets should go with particular amino acids. Other translations are conceivable. Coded instructions are a good idea, but the actual code seems to be pretty arbitrary. Perhaps it is simply a frozen accident, a random choice that just locked itself in, with no deeper significance.

https://3lib.net/book/1102567/9707b4

A frozen accident means that good old luck would have hit the jackpot through trial and error among 1.5 × 10^84 possible genetic codes. That number exceeds common estimates of the number of atoms in the observable universe (roughly 10^80). That puts any real possibility of chance achieving the feat out of the question; by Borel's law, it is in the realm of impossibility. The maximum time available for the code to originate was estimated at 6.3 × 10^15 seconds. Natural selection would have to evaluate roughly 10^68 codes per second (1.5 × 10^84 ÷ 6.3 × 10^15 ≈ 2.4 × 10^68) to find the one that's universal. Put simply, natural selection lacks the time necessary to find the universal genetic code.
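The figure of 1.5 × 10^84 agrees with the number of ways to assign all 64 codons to 21 meanings (20 amino acids plus stop) so that every meaning is used at least once. Assuming that is how the count was defined, it can be reproduced by inclusion-exclusion, and dividing by the 6.3 × 10^15 s time budget quoted above gives the required search rate. A Python sketch; `surjective_codes` is an illustrative name:

```python
from math import comb

def surjective_codes(codons: int = 64, meanings: int = 21) -> int:
    """Ways to map every codon to one of `meanings` labels (here 20 amino
    acids + stop) with each label used at least once, by inclusion-exclusion
    over the labels left unused."""
    return sum((-1) ** k * comb(meanings, k) * (meanings - k) ** codons
               for k in range(meanings + 1))

n_codes = surjective_codes()
print(f"{n_codes:.2e}")                   # 1.51e+84

seconds = 6.3e15                          # time budget quoted in the text
rate = n_codes / seconds
print(f"{rate:.1e} codes per second")     # 2.4e+68 codes per second
```

Python integers are arbitrary-precision, so the alternating sum is exact before the final conversion to floating point for display.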

Victor A. Gusev Arzamastsev AA The nature of optimality of DNA code 1997

“the situation when Nature invented the DNA code surprisingly resembles designing a computer by man. If a computer were designed today, the binary notation would hardly be used. Binary notation was chosen only at the first stage, to simplify as much as possible the construction of the decoding machine. But now, it is too late to correct this mistake”.

https://www.webpages.uidaho.edu/~stevel/565/literature/Genetic%20code%20-%20Lucky%20chance%20or%20fundamental%20law%20of%20nature.pdf

Julian Mejía Origin of Information Encoding in Nucleic Acids through a Dissipation-Replication Relation April 18, 2018

Due to the complexity of such an event, it is highly unlikely that this information could have been generated randomly. A number of theories have attempted to address this problem by considering the origin of the association between amino acids and their cognate codons or anticodons. There is no physical-chemical description of how the specificity of such an association relates to the origin of life, in particular, to enzyme-less reproduction, proliferation and evolution. Carl Woese recognized this early on and emphasized the problem, still unresolved, of uncovering the basis of the specificity between amino acids and codons in the genetic code. Carl Woese (1967), as reproduced in the seminal paper of Yarus et al. cited frequently above: “I am particularly struck by the difficulty of getting [the genetic code] started unless there is some basis in the specificity of interaction between nucleic acids and amino acids or polypeptide to build upon.”

https://arxiv.org/pdf/1804.05939.pdf

S J Freeland The genetic code is one in a million 1998 Sep;4

if we employ weightings to allow for biases in translation, then only 1 in every million random alternative codes generated is more efficient than the natural code. We thus conclude not only that the natural genetic code is extremely efficient at minimizing the effects of errors, but also that its structure reflects biases in these errors, as might be expected were the code the product of selection.

http://www.ncbi.nlm.nih.gov/pubmed/9732450
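The kind of comparison Freeland describes can be sketched in Python: score a code by how much single-base substitutions change an amino acid property, then count how many random alternative codes score better. This is not Freeland's method. It swaps in Kyte-Doolittle hydropathy in place of the polar-requirement measure used in that line of work, weights all substitutions equally (no transition/transversion or codon-position biases), and builds alternatives by permuting the 20 amino acids among the standard code's synonymous codon sets, so the fraction it prints will not match the one-in-a-million figure. All names are illustrative.

```python
import random
from itertools import product

BASES = "UCAG"
# Standard code (NCBI translation table 1) in UCAG order; '*' = stop
STANDARD = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = ["".join(c) for c in product(BASES, repeat=3)]

# Kyte-Doolittle hydropathy: a stand-in property for this sketch only
HYDROPATHY = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5, "Q": -3.5,
    "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5, "L": 3.8, "K": -3.9,
    "M": 1.9, "F": 2.8, "P": -1.6, "S": -0.8, "T": -0.7, "W": -0.9,
    "Y": -1.3, "V": 4.2,
}

def error_cost(assignment: str) -> float:
    """Mean squared hydropathy change over all single-base substitutions
    between sense codons; changes to or from stop codons are skipped."""
    code = dict(zip(CODONS, assignment))
    total, count = 0.0, 0
    for codon in CODONS:
        if code[codon] == "*":
            continue
        for pos in range(3):
            for base in BASES:
                if base == codon[pos]:
                    continue
                mutant = codon[:pos] + base + codon[pos + 1:]
                if code[mutant] == "*":
                    continue
                diff = HYDROPATHY[code[codon]] - HYDROPATHY[code[mutant]]
                total += diff * diff
                count += 1
    return total / count

def shuffled_code(rng: random.Random) -> str:
    """Alternative code: same synonymous codon sets and stop codons, but a
    random permutation of which amino acid owns which set."""
    amino_acids = sorted(set(STANDARD) - {"*"})
    relabel = dict(zip(amino_acids, rng.sample(amino_acids, len(amino_acids))))
    return "".join(relabel.get(aa, aa) for aa in STANDARD)

rng = random.Random(1)          # fixed seed so the run is repeatable
standard_cost = error_cost(STANDARD)
trials = 1000
better = sum(error_cost(shuffled_code(rng)) < standard_cost for _ in range(trials))
print(f"standard code cost: {standard_cost:.2f}")
print(f"{better} of {trials} shuffled codes have a lower cost")
```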

Shalev Itzkovitz The genetic code is nearly optimal for allowing additional information within protein-coding sequences 2007 Apr; 17

DNA sequences that code for proteins need to convey, in addition to the protein-coding information, several different signals at the same time. These “parallel codes” include binding sequences for regulatory and structural proteins, signals for splicing, and RNA secondary structure. Here, we show that the universal genetic code can efficiently carry arbitrary parallel codes much better than the vast majority of other possible genetic codes. This property is related to the identity of the stop codons. We find that the ability to support parallel codes is strongly tied to another useful property of the genetic code—minimization of the effects of frame-shift translation errors. Whereas many of the known regulatory codes reside in nontranslated regions of the genome, the present findings suggest that protein-coding regions can readily carry abundant additional information.

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1832087/?report=classic
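A simplified illustration of the frameshift point in the Itzkovitz abstract: if translation slips into another reading frame, the first stop codon (UAA, UAG or UGA) met in that frame ends the erroneous chain. This Python sketch only reports where that first stop falls in each frame of a hypothetical message; it is not the analysis used in the paper, and `first_stop` is an illustrative name.

```python
STOP_CODONS = {"UAA", "UAG", "UGA"}

def first_stop(mrna: str, frame: int):
    """Position of the first stop codon met when reading from offset
    `frame` (0, 1 or 2), or None if that frame has no stop."""
    for i in range(frame, len(mrna) - 2, 3):
        if mrna[i:i + 3] in STOP_CODONS:
            return i
    return None

message = "AUGAAUAAGGCUUGA"   # hypothetical: AUG AAU AAG GCU UGA in frame 0
for frame in range(3):
    print(frame, first_stop(message, frame))
# 0 12
# 1 1
# 2 5
```

In this toy message the intended stop sits at the end of frame 0, while both shifted frames hit a stop within the first two codons.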

The Genetic Code Part II: Not Mundane and Not Evolvable

https://www.youtube.com/watch?v=oQ9tAL2AM6M

Monya Baker Hidden code in the protein code 28 October 2010

Different codons for the same amino acid may affect how quickly mRNA transcripts are translated, and that this pace can influence post-translational modifications. Despite being highly homologous, the mammalian cytoskeletal proteins beta- and gamma-actin contain notably different post-translational modifications: though both proteins are actually post-translationally arginylated, only arginylated beta-actin persists in the cell. This difference is essential for each protein's function.

To investigate whether synonymous codons might have a role in how arginylated forms persist, Kashina and colleagues swapped the synonymous codons between the genes for beta- and gamma-actin and found that the patterns of post-translational modification switched as well. Next, they examined translation rates for the wild-type forms of each protein and found that gamma-actin accumulated more slowly. Computational analysis suggested that differences between the folded mRNA structures might cause differences in translation speed. When the researchers added an antibiotic that slowed down translation rates, accumulation of arginylated actin slowed dramatically. Subsequent work indicated that N-arginylated proteins may, if translated slowly, be subjected to ubiquitination, a post-translational modification that targets proteins for destruction.

Thus, these apparently synonymous codons can help explain why some arginylated proteins but not others accumulate in cells. “One of the bigger implications of our work is that post-translational modifications are actually encoded in the mRNA,” says Kashina. “Coding sequence can define a protein's translation rate, metabolic fate and post-translational regulation.”

https://www.nature.com/articles/nmeth1110-874
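What "synonymous" means here can be shown with a minimal sketch built on the standard genetic code (NCBI translation table 1). The two DNA strings below are hypothetical examples, not the actin genes from the study: they differ at several bases yet translate to the same amino-acid sequence, which is the kind of difference the Kashina group swapped between the two genes.

```python
from itertools import product

BASES = "TCAG"
# Standard genetic code (NCBI table 1); codons ordered TTT, TTC, TTA, TTG, TCT, ...
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODE = {"".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO)}


def translate(dna: str) -> str:
    """Translate a DNA coding sequence codon by codon ('*' marks a stop)."""
    usable = len(dna) - len(dna) % 3
    return "".join(CODE[dna[i:i + 3]] for i in range(0, usable, 3))


# Two hypothetical coding sequences that differ only at synonymous positions
seq_a = "ATGGATGATGATATC"
seq_b = "ATGGACGACGACATT"

if __name__ == "__main__":
    print(translate(seq_a), translate(seq_b))         # same protein from both
    print(sum(x != y for x, y in zip(seq_a, seq_b)))  # number of differing bases
```

The protein is identical; only the codon choice, and with it properties such as mRNA folding and translation speed, changes.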

Problem no.1

The genetic code system (language) must be created, and the universal code is nearly optimal and maximally efficient.

V A Ratner [The genetic language: grammar, semantics, evolution] 1993 May;29

The genetic language is a collection of rules and regularities of genetic information coding for genetic texts. It is defined by alphabet, grammar, collection of punctuation marks and regulatory sites, semantics.

http://www.ncbi.nlm.nih.gov/pubmed/8335231

Eugene V. Koonin Origin and evolution of the genetic code: the universal enigma 2012 Mar 5

In our opinion, despite extensive and, in many cases, elaborate attempts to model code optimization, ingenious theorizing along the lines of the coevolution theory, and considerable experimentation, very little definitive progress has been made. Summarizing the state of the art in the study of the code evolution, we cannot escape considerable skepticism. It seems that the two-pronged fundamental question: “why is the genetic code the way it is and how did it come to be?”, that was asked over 50 years ago, at the dawn of molecular biology, might remain pertinent even in another 50 years. Our consolation is that we cannot think of a more fundamental problem in biology.

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3293468/

S J Freeland The genetic code is one in a million 1998 Sep

if we employ weightings to allow for biases in translation, then only 1 in every million random alternative codes generated is more efficient than the natural code. We thus conclude not only that the natural genetic code is extremely efficient at minimizing the effects of errors, but also that its structure reflects biases in these errors, as might be expected were the code the product of selection.

http://www.ncbi.nlm.nih.gov/pubmed/9732450
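The kind of comparison behind "one in a million" can be sketched as follows, under stated assumptions. Each code is scored by the mean squared change in Woese's polar requirement over all single-base substitutions between sense codons, and random codes are made by shuffling which amino acid each synonymous block encodes while stop codons stay fixed. The polar-requirement values are the commonly tabulated ones and are an assumption of this sketch. This is the unweighted variant: Freeland and Hurst's one-in-a-million figure comes from weighting for translation-error biases, which this sketch does not reproduce. Function names are illustrative.

```python
import random
from itertools import product

BASES = "TCAG"
# Standard genetic code (NCBI table 1); '*' marks stop codons
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = ["".join(c) for c in product(BASES, repeat=3)]
STANDARD = dict(zip(CODONS, AMINO))

# Woese's polar requirement (commonly tabulated values; an assumption here)
POLAR = {
    "A": 7.0, "R": 9.1, "N": 10.0, "D": 13.0, "C": 4.8,
    "Q": 8.6, "E": 12.5, "G": 7.9, "H": 8.4, "I": 4.9,
    "L": 4.9, "K": 10.1, "M": 5.3, "F": 5.0, "P": 6.6,
    "S": 7.5, "T": 6.6, "W": 5.2, "Y": 5.4, "V": 5.6,
}


def error_cost(code):
    """Mean squared polar-requirement change over all single-base
    substitutions from one sense codon to another (unweighted)."""
    total, count = 0.0, 0
    for codon, aa in code.items():
        if aa == "*":
            continue
        for pos in range(3):
            for base in BASES:
                if base == codon[pos]:
                    continue
                mutant = code[codon[:pos] + base + codon[pos + 1:]]
                if mutant == "*":
                    continue  # changes to stop codons are ignored here
                total += (POLAR[aa] - POLAR[mutant]) ** 2
                count += 1
    return total / count


def random_code(rng):
    """Relabel the 20 synonymous blocks at random; stops stay in place."""
    amino_acids = sorted(POLAR)
    shuffled = amino_acids[:]
    rng.shuffle(shuffled)
    relabel = dict(zip(amino_acids, shuffled))
    return {c: (aa if aa == "*" else relabel[aa]) for c, aa in STANDARD.items()}


def fraction_better(samples=2000, seed=1):
    """Fraction of random codes whose cost is lower than the standard code's."""
    rng = random.Random(seed)
    standard = error_cost(STANDARD)
    better = sum(error_cost(random_code(rng)) < standard for _ in range(samples))
    return better / samples


if __name__ == "__main__":
    print(f"standard code cost: {error_cost(STANDARD):.2f}")
    print(f"fraction of random codes with lower cost: {fraction_better():.4f}")
```

Keeping the block structure fixed means only the mapping of blocks to amino acids varies, which isolates how the assignments themselves are arranged.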

Shalev Itzkovitz The genetic code is nearly optimal for allowing additional information within protein-coding sequences 2007 Apr; 17

DNA sequences that code for proteins need to convey, in addition to the protein-coding information, several different signals at the same time. These “parallel codes” include binding sequences for regulatory and structural proteins, signals for splicing, and RNA secondary structure. Here, we show that the universal genetic code can efficiently carry arbitrary parallel codes much better than the vast majority of other possible genetic codes. This property is related to the identity of the stop codons. We find that the ability to support parallel codes is strongly tied to another useful property of the genetic code—minimization of the effects of frame-shift translation errors. Whereas many of the known regulatory codes reside in nontranslated regions of the genome, the present findings suggest that protein-coding regions can readily carry abundant additional information.

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1832087/?report=classic
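Two simple proxies make the abstract's points concrete. They are illustrative assumptions, not the measure Itzkovitz and Alon actually used. First, the number of DNA sequences that encode a given protein (the product of each amino acid's codon count) indicates how much freedom remains for additional signals, expressed here in bits. Second, counting stop codons in the +1 and +2 reading frames shows the link to frame-shift errors, since an off-frame stop halts a ribosome that has slipped. Both helpers and the example sequence are hypothetical.

```python
import math
from collections import Counter

# Standard genetic code (NCBI table 1), one amino-acid letter per codon
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
DEGENERACY = Counter(AMINO)  # e.g. L, S, R -> 6 codons; M, W -> 1
STOPS = {"TAA", "TAG", "TGA"}


def spare_bits(protein: str) -> float:
    """log2 of how many DNA sequences encode `protein` (stop not counted):
    the freedom left over for signals other than the amino-acid sequence."""
    return sum(math.log2(DEGENERACY[aa]) for aa in protein)


def offframe_stops(dna: str) -> int:
    """Count stop codons read in the +1 and +2 frames of a coding sequence."""
    return sum(
        dna[i:i + 3] in STOPS
        for shift in (1, 2)
        for i in range(shift, len(dna) - 2, 3)
    )


if __name__ == "__main__":
    print(spare_bits("MW"), spare_bits("LSR"))
    print(offframe_stops("ATGAATAAA"))  # reads M-N-K in frame
```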

Problem no.2

The origin of the information to make the first living cells must be explained.

Rosario Gil Determination of the Core of a Minimal Bacterial Gene Set, Sept. 2004

Based on the conjoint analysis of several computational and experimental strategies designed to define the minimal set of protein-coding genes that are necessary to maintain a functional bacterial cell, we propose a minimal gene set composed of 206 genes (which code for 13 protein complexes). Such a gene set will be able to sustain the main vital functions of a hypothetical simplest bacterial cell with the following features. These protein complexes could not emerge through evolution (mutations and natural selection), because evolution depends on DNA replication, which requires precisely these original genes and proteins (a chicken-and-egg problem). So the only mechanisms left are chance and physical necessity.

http://mmbr.asm.org/content/68/3/518.full.pdf

Literature from those who posture in favor of creation abounds with examples of the tremendous odds against chance producing a meaningful code. For instance, the estimated number of elementary particles in the universe is 10^80. The most rapid events occur at an amazing 10^45 per second. Thirty billion years contains only 10^18 seconds. Multiplying these together (adding the exponents), we find that the maximum number of elementary particle events in 30 billion years could be only 10^143. Yet, the simplest known free-living organism, Mycoplasma genitalium, has 470 genes that code for 470 proteins that average 347 amino acids in length. The odds against just one specified protein of that length are 1:10^451.
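Both figures in this paragraph can be reproduced. Because the three quantities are multiplied, their base-10 exponents add. The 10^451 figure assumes that each of the 20 amino acids is equally likely at every one of the 347 positions, giving 20^347 possible sequences. The constant names below are illustrative.

```python
import math

PARTICLES_LOG10 = 80          # elementary particles in the universe (from the text)
EVENTS_PER_SECOND_LOG10 = 45  # fastest events per second (from the text)
SECONDS_PER_YEAR = 365.25 * 24 * 3600

seconds_30_gyr = 30e9 * SECONDS_PER_YEAR
seconds_log10 = math.log10(seconds_30_gyr)  # just under 18, i.e. ~10^18 s

# Multiplying quantities means adding their base-10 exponents
max_events_log10 = PARTICLES_LOG10 + EVENTS_PER_SECOND_LOG10 + round(seconds_log10)

protein_length = 347
amino_acids = 20
# 20^347 equally likely sequences; log10(20^347) = 347 * log10(20)
protein_space_log10 = protein_length * math.log10(amino_acids)

if __name__ == "__main__":
    print(f"30 billion years ~ 10^{seconds_log10:.2f} s")
    print(f"maximum events ~ 10^{max_events_log10}")
    print(f"sequences of length {protein_length}: 10^{protein_space_log10:.2f}")
```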

Paul Davies once said:

How did stupid atoms spontaneously write their own software … ? Nobody knows … … there is no known law of physics able to create information from nothing.

Problem no.3

The genetic cipher

Yuri I Wolf On the origin of the translation system and the genetic code in the RNA world by means of natural selection, exaptation, and subfunctionalization 2007 May 31

The origin of the translation system is, arguably, the central and the hardest problem in the study of the origin of life, and one of the hardest in all evolutionary biology. The problem has a clear catch-22 aspect: high translation fidelity hardly can be achieved without a complex, highly evolved set of RNAs and proteins but an elaborate protein machinery could not evolve without an accurate translation system. The origin of the genetic code and whether it evolved on the basis of a stereochemical correspondence between amino acids and their cognate codons (or anticodons), through selectional optimization of the code vocabulary, as a "frozen accident" or via a combination of all these routes is another wide open problem despite extensive theoretical and experimental studies.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1894784/

John Maynard Smith British biologist: The Major Transitions in Evolution 1997

John Maynard Smith has described the origin of the code as the most perplexing problem in evolutionary biology. With collaborator Eörs Szathmáry he writes:

“The existing translational machinery is at the same time so complex, so universal, and so essential that it is hard to see how it could have come into existence, or how life could have existed without it.” To get some idea of why the code is such an enigma, consider whether there is anything special about the numbers involved. Why does life use twenty amino acids and four nucleotide bases? It would be far simpler to employ, say, sixteen amino acids and package the four bases into doublets rather than triplets. Easier still would be to have just two bases and use a binary code, like a computer. If a simpler system had evolved, it is hard to see how the more complicated triplet code would ever take over. The answer could be a case of “It was a good idea at the time.” A good idea to whom? If the code evolved at a very early stage in the history of life, perhaps even during its prebiotic phase, the numbers four and twenty may have been the best way to go for chemical reasons relevant at that stage. Life simply got stuck with these numbers thereafter, their original purpose lost. Or perhaps the use of four and twenty is the optimum way to do it. There is an advantage in life’s employing many varieties of amino acid, because they can be strung together in more ways to offer a wider selection of proteins. But there is also a price: with increasing numbers of amino acids, the risk of translation errors grows. With too many amino acids around, there would be a greater likelihood that the wrong one would be hooked onto the protein chain. So maybe twenty is a good compromise. Do random chemical reactions have the knowledge to arrive at an optimal conclusion, or a “good compromise”?

An even tougher problem concerns the coding assignments—i.e., which triplets code for which amino acids. How did these designations come about? Because nucleic-acid bases and amino acids don’t recognize each other directly, but have to deal via chemical intermediaries, there is no obvious reason why particular triplets should go with particular amino acids. Other translations are conceivable. Coded instructions are a good idea, but the actual code seems to be pretty arbitrary. Perhaps it is simply a frozen accident, a random choice that just locked itself in, with no deeper significance.

https://3lib.net/book/1102567/9707b4
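The counting behind the doublet-versus-triplet comparison in the passage above is simple: an alphabet of n bases read in words of length L yields n^L distinct codons. A small helper (the name is hypothetical) finds the shortest word length that covers a given number of meanings.

```python
def min_codon_length(n_bases: int, n_meanings: int) -> int:
    """Smallest word length L such that n_bases ** L >= n_meanings."""
    if n_bases < 2 or n_meanings < 1:
        raise ValueError("need at least 2 bases and 1 meaning")
    length = 1
    while n_bases ** length < n_meanings:
        length += 1
    return length


if __name__ == "__main__":
    for bases in (2, 4):
        for meanings in (16, 20, 21):  # 21 = 20 amino acids + a stop signal
            length = min_codon_length(bases, meanings)
            print(f"{bases} bases, {meanings} meanings: "
                  f"length {length}, {bases ** length} codons")
```

With four bases, doublets give exactly 16 codons, enough for sixteen amino acids but leaving none for a stop signal. Triplets give 64. A binary code needs five-letter words (32 codons) to cover 20 amino acids.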

That frozen accident would mean that good old luck hit the jackpot through trial and error among 1.5 × 10^84 possible genetic codes. That number exceeds the estimated number of atoms in the observable universe (about 10^80), which puts any real possibility of chance achieving the feat out of the question; by Borel's law, it lies in the realm of impossibility. The maximum time available for the code to originate was estimated at 6.3 × 10^15 seconds. Dividing 1.5 × 10^84 codes by 6.3 × 10^15 seconds, natural selection would have to evaluate roughly 2.4 × 10^68 codes per second to find the one that is universal. Put simply, natural selection lacks the time necessary to find the universal genetic code.
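Both numbers in this paragraph can be checked. This sketch assumes that the 1.5 × 10^84 figure counts every way of assigning the 64 codons to 21 meanings (20 amino acids plus a stop signal) so that each meaning is used at least once. That is an assumption about how the figure was derived, but inclusion-exclusion over that definition gives about 1.51 × 10^84. Dividing by the stated time window of 6.3 × 10^15 seconds then gives the implied evaluation rate. The function name is illustrative.

```python
from math import comb


def surjective_assignments(n_codons: int, n_meanings: int) -> int:
    """Ways to map n_codons onto n_meanings so that every meaning gets
    at least one codon (inclusion-exclusion over unused meanings)."""
    return sum(
        (-1) ** k * comb(n_meanings, k) * (n_meanings - k) ** n_codons
        for k in range(n_meanings + 1)
    )


codes = surjective_assignments(64, 21)  # 20 amino acids + stop
window_seconds = 6.3e15                 # time window stated in the text
rate = codes / window_seconds           # codes to evaluate per second

if __name__ == "__main__":
    print(f"possible codes: {codes:.3e}")
    print(f"required rate: {rate:.2e} codes per second")
```

Python's arbitrary-precision integers keep the alternating sum exact before the final division.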

To put it in other words: the task is comparable to inventing two languages, two alphabets, and a translation system, and then translating the information content of a book (for example, Hamlet) written in English into Chinese by means of an extremely sophisticated hardware system. The conclusion that an intelligent designer had to set up the system does not rest on missing knowledge (an argument from ignorance). We know that minds invent languages, codes, translation systems, ciphers, and complex, specified information all the time. The genetic code and its translation system are best explained through the action of an intelligent designer.

The genetic code could not be the product of evolution, since it had to be fully operational when life started (as did DNA replication, upon which evolution depends). The only alternative to design is that random, unguided events originated it.

Last edited by Otangelo on Sun Nov 10, 2024 3:59 am; edited 70 times in total