http://reasonandscience.heavenforum.org/t2267-the-origin-of-the-genetic-cipher-the-most-perplexing-problem-in-biology

On the origin of the translation system and the genetic code in the RNA world by means of natural selection, exaptation, and subfunctionalization

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1894784/

The origin of the translation system is, arguably, the central and the hardest problem in the study of the origin of life, and one of the hardest in all evolutionary biology. The problem has a clear catch-22 aspect: high translation fidelity hardly can be achieved without a complex, highly evolved set of RNAs and proteins but an elaborate protein machinery could not evolve without an accurate translation system. The origin of the genetic code and whether it evolved on the basis of a stereochemical correspondence between amino acids and their cognate codons (or anticodons), through selectional optimization of the code vocabulary, as a "frozen accident" or via a combination of all these routes is another wide open problem despite extensive theoretical and experimental studies.

DNA is transcribed (roughly speaking, copied) into RNA, which is translated into (codes for) protein: DNA >> RNA >> protein. The passage above refers to the mechanism by which this occurs today.

Imagine a recording tape made of RNA. It passes through a structure (a machine) called a ribosome. The tape carries a sequence of nucleotides (each nucleotide consists of a sugar, a phosphate, and a nitrogen-containing base, and the sugars and phosphates link up into a chain). Every three nucleotides code for one amino acid. Somehow the sequence of the RNA chain has to result in a chain of amino acids. This RNA is called messenger RNA (mRNA). The chain of amino acids is an entirely different substance from the mRNA. It is like imagining cassette recording tape running through a machine, and on the other side of the machine a chain of "metal charms" is made that corresponds to the musical notes recorded on the magnetic tape.
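
The tape analogy can be made concrete with a short sketch. The Python below is a minimal illustration, not a model of the ribosome: it builds the standard codon table from the conventional compact string (bases ordered U, C, A, G; `*` marks a stop codon) and reads an mRNA string three nucleotides at a time until it hits a stop codon. It assumes the reading frame starts at the first base; the function name `translate` and the example sequence are hypothetical.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code, one letter per codon, in the order UUU, UUC, UUA, UUG, UCU, ...
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(mrna: str) -> str:
    """Read mRNA codon by codon from the first base; stop at the first stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":  # stop codon: the chain is released
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate("AUGUUUGCUGGCAAAUGGUAA"))  # MFAGKW
```

Each triplet is looked up independently, which is the point of the analogy: the tape itself never touches the charms; a separate table decides which charm goes with which stretch of tape.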

This is accomplished by the ribosome together with another kind of RNA molecule that is physically attached to an amino acid. This other RNA is called transfer RNA (tRNA).

So anyway, one end of the tRNA (the anticodon) is complementary to a codon on the mRNA, and the other end is attached to the amino acid that this codon specifies. The correct amino acid is attached to the correct tRNA by an enzyme called an aminoacyl-tRNA synthetase. When Prof Bio uses the cryptic abbreviation AARS, he is referring to alanyl-tRNA synthetase. This enzyme attaches the amino acid alanine to the tRNAs that correspond to alanine codons. In most organisms there is a dedicated aminoacyl-tRNA synthetase for each amino acid.
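
The division of labour described above can be sketched in a few lines of Python. This is a simplified model that assumes each tRNA is recognized by its anticodon alone; real synthetases use various identity elements (alanyl-tRNA synthetase, for instance, relies mainly on a G3·U70 base pair in the acceptor stem rather than on the anticodon). The names `anticodon_for`, `charge`, and the small `SYNTHETASES` table are hypothetical illustrations.

```python
# Watson-Crick pairing partners in RNA
PAIR = {"A": "U", "U": "A", "G": "C", "C": "G"}


def anticodon_for(codon: str) -> str:
    """Anticodon written 5'->3': the reverse complement of a 5'->3' codon."""
    return "".join(PAIR[base] for base in reversed(codon))


# Hypothetical toy "synthetase" table: which amino acid is loaded onto a tRNA
# carrying a given anticodon. In this model, the code itself lives in this table.
SYNTHETASES = {
    anticodon_for("GCU"): "Ala",
    anticodon_for("UUU"): "Phe",
    anticodon_for("AUG"): "Met",
}


def charge(anticodon: str) -> tuple[str, str]:
    """Return a charged tRNA as an (anticodon, amino acid) pair."""
    return anticodon, SYNTHETASES[anticodon]


codon = "GCU"
trna = charge(anticodon_for(codon))
print(codon, "->", trna)  # GCU -> ('AGC', 'Ala')
```

Base pairing only matches the tRNA to the codon; which amino acid rides on that tRNA is decided entirely by the synthetase step.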

A review of Paul Davies's book The Fifth Miracle, on the genetic code, starting at page 105:

I have described life as a deal struck between nucleic acids and proteins. However, these molecules inhabit very different chemical realms; indeed, they are barely on speaking terms. This is most clearly reflected in the arithmetic of information transfer. The data needed to assemble proteins are stored in DNA using the four-letter alphabet A, G, C, T. On the other hand, proteins are made out of twenty different sorts of amino acids. Obviously twenty into four won’t go. So how do nucleic acids and proteins communicate?

Earthlife has discovered a neat solution to this numerical mismatch by packaging the bases in triplets.

How could Earthlife have discovered a mechanism upon which life itself depends?

Four bases can be arranged in sixty-four different permutations of three, and twenty will go into sixty-four, with some room left over for redundancy and punctuation. The sequence of rungs of the DNA ladder thus determines, three by three, the exact sequence of amino acids in the proteins. To translate from the sixty-four triplets into the twenty amino acids means assigning each triplet (termed a codon) a corresponding amino acid. This assignment is called the genetic code. The idea that life uses a cipher was first suggested in the early 1950s by George Gamow, the same physicist who proposed the modern big-bang theory of the universe. As in all translations, there must be someone, or something, that is bilingual, in this case to turn the coded instructions written in nucleic-acid language into a result written in amino-acid language. From what I have explained, it should be apparent that this crucial translation step occurs in living organisms when the appropriate amino acids are attached to the respective molecules of tRNA prior to the protein-assembly process. This attachment is carried out by a group of clever enzymes

Cleverness is something we usually attribute to someone who is intelligent.

that recognize both RNA sequences and the different amino acids, and marry them up accordingly with the right designation.

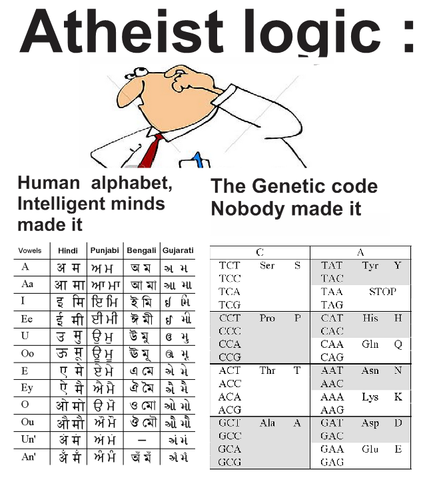

My comment: The recognition must be PRE-PROGRAMMED, in the same manner as a translator must learn two languages in order to assign a written word in English, for example, to the written symbol in Chinese with the same meaning. There must be a prior common agreement of meaning before the translation process can begin. For example, the word "translator" is written 翻譯者 in traditional Chinese. Ask someone who does not speak Chinese to translate the word "translator" into Chinese: he has around 3,500 different symbols to choose from. In the "amino acid language", there are 20 different amino acids to choose from. The translator must know the word "translator" in both English and Chinese, and know both writing systems, before making the assignment. Only mental processes are able to do this. Chance is simply an impotent cause.
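
Davies's arithmetic (sixty-four triplets shared among twenty amino acids, with room left over for redundancy and punctuation) can be checked directly. The Python sketch below rebuilds the standard codon table from its conventional compact string and counts how many codons are assigned to each amino acid (one-letter symbols) and to the stop signal (`*`); the variable names are illustrative.

```python
from collections import Counter
from itertools import product

BASES = "UCAG"
# Standard genetic code in the order UUU, UUC, UUA, UUG, UCU, ...; "*" = stop
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
codons = ["".join(c) for c in product(BASES, repeat=3)]
code = dict(zip(codons, AMINO_ACIDS))

# How many codons map to each amino acid and to stop
degeneracy = Counter(code.values())

print(len(codons), "codons for", len(degeneracy) - 1, "amino acids plus stop")
for meaning, count in sorted(degeneracy.items(), key=lambda kv: (-kv[1], kv[0])):
    print(meaning, count)
```

The redundancy is uneven: leucine, serine and arginine each get six codons, methionine and tryptophan one each, and three codons serve as stop signals.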

William Dembski:

http://www.discovery.org/a/1256

The problem is that nature has too many options and, without design, couldn't sort through all those options. The problem is that natural mechanisms are too unspecific to determine any particular outcome. Natural processes could theoretically form a protein, but they are also compatible with the formation of a plethora of other molecular assemblages, most of which have no biological significance. Nature allows them full freedom of arrangement. Yet it's precisely that freedom that makes nature unable to account for specified outcomes of small probability. Nature, in this case, rather than being intent on doing only one thing, is open to doing any number of things. Yet when one of those things is a highly improbable specified event, design becomes the more compelling, better inference. Occam's razor also boils down to an argument from ignorance: in the absence of better information, you use a heuristic to accept one hypothesis over the other.

The genetic code, with a few recently discovered minor variations, is common to all known forms of life. That the code is universal is extremely significant, for it suggests it was used by the common ancestor of all life, and is robust enough to have survived through billions of years of evolution. Without it, the production of proteins would be a hopelessly hit-or-miss affair. Questions abound. How did such a complicated and specific system as the genetic code arise in the first place? Why, out of the 10^84 possible codes based on triplets, has nature chosen the one in universal use? Could a different code work as well? If there is life on Mars, will it have the same genetic code as Earthlife? Can we imagine uncoded life, in which interdependent molecules deal directly with each other on the basis of their chemical affinities alone? Or is the origin of the genetic code itself (or at least a genetic code) the key to the origin of life? The British biologist John Maynard Smith has described the origin of the code as the most perplexing problem in evolutionary biology. With collaborator Eörs Szathmáry he writes: “The existing translational machinery is at the same time so complex, so universal, and so essential that it is hard to see how it could have come into existence, or how life could have existed without it.” To get some idea of why the code is such an enigma, consider whether there is anything special about the numbers involved. Why does life use twenty amino acids and four nucleotide bases? It would be far simpler to employ, say, sixteen amino acids and package the four bases into doublets rather than triplets. Easier still would be to have just two bases and use a binary code, like a computer. If a simpler system had evolved, it is hard to see how the more complicated triplet code would ever take over. The answer could be a case of “It was a good idea at the time.”

Whose good idea?
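
The numbers Davies mentions follow from simple counting: words of length n over an alphabet of b letters give b^n distinct codons. Four bases in doublets give exactly 16 words (no spare for a stop signal), triplets give 64, and a two-letter binary alphabet would need words of five letters (2^5 = 32) to cover twenty amino acids plus a stop signal. A small Python sketch; the function name `min_codon_length` is hypothetical.

```python
def min_codon_length(alphabet_size: int, meanings: int) -> int:
    """Smallest word length n with alphabet_size**n >= meanings (needs alphabet_size >= 2)."""
    n = 1
    while alphabet_size ** n < meanings:
        n += 1
    return n


for bases in (2, 4):
    for meanings in (16, 21):  # 16 amino acids, or 20 amino acids + stop
        n = min_codon_length(bases, meanings)
        print(f"{bases} bases, {meanings} meanings -> length {n} ({bases ** n} codons)")
```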

If the code evolved at a very early stage in the history of life, perhaps even during its prebiotic phase, the numbers four and twenty may have been the best way to go for chemical reasons relevant at that stage. Life simply got stuck with these numbers thereafter, their original purpose lost. Or perhaps the use of four and twenty is the optimum way to do it. There is an advantage in life’s employing many varieties of amino acid, because they can be strung together in more ways to offer a wider selection of proteins. But there is also a price: with increasing numbers of amino acids, the risk of translation errors grows. With too many amino acids around, there would be a greater likelihood that the wrong one would be hooked onto the protein chain. So maybe twenty is a good compromise.

Do random chemical reactions have the knowledge to arrive at an optimal outcome, or at a "good compromise"?

An even tougher problem concerns the coding assignments—i.e., which triplets code for which amino acids. How did these designations come about? Because nucleic-acid bases and amino acids don’t recognize each other directly, but have to deal via chemical intermediaries, there is no obvious reason why particular triplets should go with particular amino acids. Other translations are conceivable. Coded instructions are a good idea, but the actual code seems to be pretty arbitrary. Perhaps it is simply a frozen accident, a random choice that just locked itself in, with no deeper significance.

That frozen accident means that good old luck would have hit the jackpot through trial and error among 1.5 × 10^84 possible genetic codes. That exceeds the estimated number of atoms in the observable universe (roughly 10^80). It puts any real possibility of chance achieving the feat out of the question: by Borel's law, it lies in the realm of impossibility. The maximum time available for the code to originate was estimated at 6.3 × 10^15 seconds. Since 1.5 × 10^84 divided by 6.3 × 10^15 is about 2.4 × 10^68, natural selection would have to evaluate roughly that many codes per second to find the one that is universal. Put simply, natural selection lacks the time necessary to find the universal genetic code.
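
The figures in this argument can be checked with a few lines of Python arithmetic. The sketch takes the 1.5 × 10^84 count of codes and the 6.3 × 10^15 seconds as given, and treats every code as equally likely in a single uniform random draw. It also uses two assumptions, labelled in the code: the commonly quoted order-of-magnitude estimate of 10^80 atoms in the observable universe, and the 10^-50 probability bound usually cited as Borel's law.

```python
POSSIBLE_CODES = 1.5e84      # number of possible genetic codes, as quoted in the text
TIME_AVAILABLE_S = 6.3e15    # maximum time available, in seconds, as quoted in the text
ATOMS_IN_UNIVERSE = 1e80     # assumption: commonly quoted order-of-magnitude estimate
BOREL_THRESHOLD = 1e-50      # assumption: probability bound usually cited as Borel's law

# Rate needed to try every possible code once within the available time
codes_per_second = POSSIBLE_CODES / TIME_AVAILABLE_S
# Chance of hitting one specific code in a single uniform random draw
p_single_draw = 1 / POSSIBLE_CODES

print(f"codes per second needed: {codes_per_second:.1e}")
print(f"codes per atom in the universe: {POSSIBLE_CODES / ATOMS_IN_UNIVERSE:.1e}")
print(f"single-draw probability: {p_single_draw:.1e} "
      f"(below Borel threshold: {p_single_draw < BOREL_THRESHOLD})")
```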

Paul Hayden: The fundamental question is how these regularities of the standard code came into being, considering that there are more than 10^84 possible alternative code tables if each of the 20 amino acids and the stop signal are to be assigned to at least one codon. More specifically, the question is, what kind of interplay of chemical constraints, historical accidents, and evolutionary forces could have produced the standard amino acid assignment, which displays many remarkable properties.

And how could that make sense? THINK!!
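
The figure of more than 10^84 code tables matches a count of every way to assign the 64 codons to 21 meanings (20 amino acids plus stop) such that each meaning receives at least one codon, i.e. the number of surjective maps from 64 codons onto 21 meanings. The Python sketch below computes that count exactly by inclusion-exclusion. That this is the counting behind the quoted figure is an assumption, although the result comes out at about 1.5 × 10^84. The function name `count_code_tables` is hypothetical.

```python
from math import comb


def count_code_tables(codons: int = 64, meanings: int = 21) -> int:
    """Number of maps from codons onto meanings that use every meaning at least once.

    Inclusion-exclusion: alternately subtract and add back the assignments
    that leave k particular meanings unused.
    """
    return sum(
        (-1) ** k * comb(meanings, k) * (meanings - k) ** codons
        for k in range(meanings + 1)
    )


tables = count_code_tables()
print(f"{tables:.2e}")  # roughly 1.5e+84
```

Python integers have arbitrary precision, so the sum is exact; only the final print rounds it.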

On the other hand, there may be some subtle reason why this particular code works best. If one code had the edge over another, reliability-wise, then evolution would favor it

The problem, once more, is that evolution could not have been in play at this stage of affairs, since evolution works only upon replication, and replication depends on the very machinery in question. Catch-22.

, and, by a process of successive refinement, an optimal code would be reached. It seems reasonable.

DOES IT SEEM REASONABLE? TO ME IT SEEMS UTTERLY IRRATIONAL. IRRATIONAL TO THE EXTREME. Did random, unguided, non-intelligent chemicals have a pre-established goal of reaching an optimal code? And even if that were the case, what good would it be without the translation machinery, the ribosome, fully set up, in place, and doing its job?

But this theory is not without problems either. Darwinian evolution works in incremental steps, accumulating small advantages over many generations. In the case of the code, this won’t do. Changing even a single assignment would normally prove lethal, because it alters not merely one but a whole set of proteins. Among these are the proteins that activate and facilitate the translation process itself. So a change in the code risks feeding back into the very translation machinery that implements it, leading to a catastrophic feedback of errors that would wreck the whole process. To have accurate translation, the cell must first translate accurately. This conclusion seems paradoxical. A possible resolution has been suggested by Carl Woese. He thinks the code assignments and the translation mechanism evolved together. Initially there was only a rough-and-ready code, and the translation process was very sloppy. At this early stage, which is likely to have involved less than the present complement of twenty amino acids, organisms had to make do with very inefficient enzymes: the highly specific and refined enzymes life uses today had not yet evolved. Obviously some coding assignments would prove better than others, and any organism that employed the least error-prone assignments to code for its most important enzymes would be on to a winner.

Since when does dead matter have an intrinsic desire to become alive, and to win?

It would replicate more accurately, and in the process its coding arrangements would predominate among daughter cells. In this context, a “better” coding assignment would mean a robust one, so that, if there was a translation error, the same amino acid would nevertheless be made—i.e., there would be enough ambiguity for the error to make no difference. Or, in case the error did cause a different amino acid to be made, it would be a close cousin of the intended one, and the resulting protein would do the job almost as well. Successive refinements of this process might then lead to the universal code seen today—like a picture gradually coming into focus.

Now THAT was a great lecture on how to do pseudo-science and assign to chance a creative power it does not have.
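
The robustness property Davies describes (an error that still yields the same amino acid) can be measured on the standard code. The Python sketch below is a simple illustration, not any published robustness metric: it rebuilds the codon table and, for each codon position in the 61 sense codons, computes the fraction of single-nucleotide substitutions that leave the encoded amino acid unchanged. A substitution that creates a stop codon counts as a change.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code in the order UUU, UUC, UUA, UUG, UCU, ...; "*" = stop
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}
SENSE_CODONS = [codon for codon, aa in CODE.items() if aa != "*"]


def synonymous_fraction(position: int) -> float:
    """Share of point substitutions at `position` (0, 1 or 2) that keep the same amino acid."""
    same = total = 0
    for codon in SENSE_CODONS:
        for base in BASES:
            if base == codon[position]:
                continue  # not a substitution
            mutant = codon[:position] + base + codon[position + 1:]
            total += 1
            if CODE[mutant] == CODE[codon]:
                same += 1
    return same / total


for pos in range(3):
    print(f"position {pos + 1}: {synonymous_fraction(pos):.2f}")
```

In the standard code, errors in the third position are by far the most often harmless, while no change in the second position of a sense codon keeps the same amino acid.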

The code may have an altogether deeper explanation. If a table of coding assignments is drawn up, it can be analyzed mathematically to see if there are any hidden patterns. Peter Jarvis and his colleagues at the University of Tasmania claim that the universal code conceals abstract sequences similar to the energy levels of atomic nuclei, and might even involve a subtle property of subatomic particles called supersymmetry. These mathematical correspondences may be purely coincidental, or they may point to some underlying connection between the physics of the molecules involved and the organization of the code. I have subjected the reader to the technicalities of the genetic code to make a general conceptual point that goes right to the heart of the mystery of life. Any coded input is merely a jumble of useless data unless an interpreter or a key is available. A coded message is only as good as the context in which it is put to use. That is to say, it has to mean something. In chapter 2, I drew the distinction between syntactic and semantic information. On their own, genetic data are mere syntax. The striking utility of encoded genetic information stems from the fact that amino acids “understand” it. The information distributed along a strand of DNA is biologically relevant. In computerspeak, genetic data are semantic data. For a clear perspective on this point, consider the way in which the four bases A, G, C, and T are arranged in DNA. As explained, these sequences are like letters in an alphabet, and the letters may spell out, in code, the instructions for making proteins. A different sequence of letters would almost certainly be biologically useless. Only a very tiny fraction of all possible sequences spells out a biologically meaningful message, in the same way that only certain very special sequences of letters and words constitute a meaningful book. 
Another way of expressing this is to say that genes and proteins require exceedingly high degrees of specificity in their structure. As I stated in my list of properties in chapter 1, living organisms are mysterious not for their complexity per se, but for their tightly specified complexity. To comprehend fully how life arose from nonlife, we need to know not only how biological information was concentrated, but also how biologically useful information came to be specified, given that the milieu from which the first organism emerged was presumably just a random mix of molecular building blocks. In short, how did meaningful information emerge spontaneously from incoherent junk?

I began this section by stressing the dual nature of biomolecules: they can be both hardware— particular three-dimensional forms—and software. The genetic code shows just how important the informational aspect of biomolecules is. The job of explaining the origin of life goes beyond finding a plausible chemical pathway out of a primordial soup. We need to know, conceptually, how mere hardware can give rise to software.

1) https://en.wikipedia.org/wiki/Genetic_code

2) http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3293468/

Last edited by Otangelo on Mon Jan 04, 2021 6:17 am; edited 19 times in total