Precision measurements from Planck: The Planck satellite's precise measurements of the cosmic microwave background anisotropies have further constrained the duration of inflation. In their 2018 paper "Planck 2018 Results," the Planck Collaboration determined that the number of e-foldings of inflation must be 60 ± 5 (with 68% confidence) to match the observed density perturbations and other cosmological parameters. The consensus from these analyses is that the duration of inflation appears to be exquisitely fine-tuned to around 60 e-foldings, corresponding to an expansion factor of approximately 10^26. Deviations from this specific duration, even by a relatively small number of e-foldings, would result in either an insufficient resolution of the horizon and flatness problems or an overproduction of density perturbations, potentially destabilizing the formation of cosmic structures. This remarkable fine-tuning of the inflationary duration is considered a significant challenge for inflationary models, as there is no known natural mechanism within our current understanding of physics that can inherently limit or constrain the duration to this specific value. The apparent lack of a fundamental principle governing the cessation of inflation at precisely the observed duration suggests the need for further investigation into the nature of the inflaton field and the underlying mechanisms that drove the inflationary epoch.

To estimate the fine-tuning odds, we need to define a reference range of possible e-folding values. Since the inflationary expansion factor grows as e^(number of e-foldings), a reasonable reference range could be from 1 e-folding (no significant inflation) to 100 e-foldings (an expansion factor of approximately 10^43). The range of allowed e-foldings (55 to 65) represents only about 10 out of the 100 possible values in the reference range. Therefore, the probability of randomly landing in this observationally allowed range is approximately: Probability = 10 / 100 = 0.1, which corresponds to odds of approximately 1 in 10 of randomly achieving the required inflationary duration. However, it's important to note that this calculation assumes a uniform probability distribution for the number of e-foldings, which may not be the case in realistic inflationary models. Additionally, the choice of the reference range can influence the calculated odds. Nonetheless, the relatively narrow range of allowed e-foldings compared to the vast range of possibilities suggests a significant fine-tuning problem for inflationary cosmology. The apparent lack of a fundamental principle that can naturally limit the inflationary duration to the observed value remains a challenge for our understanding of the early universe and the mechanisms that drove inflation.
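The arithmetic in this estimate can be reproduced with a short Python sketch. It assumes the uniform prior and the 1-to-100 reference range used above; the function and variable names are illustrative only and do not come from any cited analysis.

```python
import math

def expansion_factor(n_efolds):
    """Linear growth factor of the universe after n_efolds e-foldings."""
    return math.exp(n_efolds)

def uniform_fraction(allowed_lo, allowed_hi, ref_lo, ref_hi):
    """Fraction of a uniform reference range covered by the allowed window."""
    return (allowed_hi - allowed_lo) / (ref_hi - ref_lo)

# Expansion factors expressed as powers of ten.
log10_factor_60 = math.log10(expansion_factor(60))    # about 26
log10_factor_100 = math.log10(expansion_factor(100))  # about 43

# Allowed window 55-65 inside the assumed reference range 1-100.
p_allowed = uniform_fraction(55, 65, 1, 100)

print(f"60 e-foldings  -> expansion factor ~ 10^{log10_factor_60:.1f}")
print(f"100 e-foldings -> expansion factor ~ 10^{log10_factor_100:.1f}")
print(f"fraction of reference range = {p_allowed:.3f} (about 1 in {1 / p_allowed:.0f})")
```

Changing the reference range passed to `uniform_fraction` shows directly how strongly the quoted odds depend on that choice.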

4. The inflaton potential

The inflaton potential is a fundamental concept in inflationary cosmology, as it governs the dynamics and evolution of the inflaton field, which is responsible for driving the exponential expansion of the universe during the inflationary epoch. The shape of the inflaton potential, or the specific form of the potential energy density function associated with the inflaton field, plays a crucial role in determining the characteristics and predictions of different inflationary models. The inflaton potential can take various forms, each leading to distinct models of inflation with unique features and observational signatures. Here are some commonly studied inflaton potentials and the corresponding inflationary models:

Chaotic Inflation: - Inflaton Potential: V(φ) = (1/2) m^2 φ^2 (quadratic potential)

Chaotic inflation models assume that the inflaton field started with a large initial value, randomly distributed across different regions of the universe. The quadratic potential leads to a prolonged period of accelerated expansion, followed by a smooth transition to the radiation-dominated era. This model can successfully generate the observed density perturbations and solve the flatness and horizon problems. However, it requires a relatively large inflaton field amplitude, which may pose challenges for embedding it within a consistent framework of particle physics.

Slow-Roll Inflation: - Inflaton Potential: V(φ) = V_0 + (1/2) m^2 φ^2 (quadratic potential with a constant term)

Features: In slow-roll inflation, the inflaton field rolls slowly down a nearly flat potential, resulting in a prolonged period of accelerated expansion. The constant term in the potential allows for a graceful exit from inflation when the field approaches the minimum. Implications: This model can produce the observed density perturbations and solve the horizon and flatness problems. It also provides a natural mechanism for the end of inflation when the inflaton field reaches the minimum of the potential.

Hybrid Inflation: - Inflaton Potential: V(φ, ψ) = (1/4) λ (ψ^2 - M^2)^2 + (1/2) m^2 φ^2 + (1/2) g^2 φ^2 ψ^2

Features: Hybrid inflation models involve two scalar fields: the inflaton field φ and an auxiliary field ψ. The potential has a Mexican hat shape, and inflation occurs when the inflaton field is trapped in a false vacuum state. Inflation ends when the inflaton field reaches a critical value, triggering a phase transition and a waterfall-like descent of the auxiliary field ψ. Implications: Hybrid inflation can provide a natural mechanism for the end of inflation and the subsequent reheating of the universe. It also offers the possibility of generating observable signatures, such as cosmic strings or other topological defects.

Natural Inflation: - Inflaton Potential: V(φ) = Λ^4 [1 + cos(φ/f)]

Features: In natural inflation, the inflaton field is a pseudo-Nambu-Goldstone boson, and the potential has a periodic cosine form. Inflation occurs when the inflaton field rolls down the potential, starting from a value close to the maximum. Implications: Natural inflation can potentially solve the flatness and horizon problems while providing a mechanism for the graceful exit from inflation. It also offers the possibility of observable signatures in the form of potential variations in the density perturbations.

These are just a few examples of the numerous inflaton potentials that have been proposed and studied in the context of inflationary cosmology. The specific shape of the potential determines the dynamics of the inflaton field, the duration of inflation, the generation of density perturbations, and the observational signatures that can be used to test and constrain different inflationary models. It's important to note that the choice of the inflaton potential is closely tied to the underlying particle physics framework and the mechanism responsible for generating the inflaton field. Consequently, the study of inflaton potentials is an active area of research, as it provides insights into the fundamental nature of the inflaton field and its connections to high-energy physics theories, such as Grand Unified Theories (GUTs) or string theory.
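The four potentials listed above can be written down as plain Python functions. This is a minimal sketch in natural units. The numerical parameter values are arbitrary illustrations, not fitted or published values. The helper `hybrid_critical_phi` is derived here from the hybrid potential exactly as written above, by asking where the curvature in the ψ direction at ψ = 0 changes sign; it is not quoted from the source.

```python
import math

def v_chaotic(phi, m):
    """Quadratic potential V = (1/2) m^2 phi^2."""
    return 0.5 * m**2 * phi**2

def v_slow_roll(phi, m, v0):
    """Quadratic potential with a constant term, V = V_0 + (1/2) m^2 phi^2."""
    return v0 + 0.5 * m**2 * phi**2

def v_hybrid(phi, psi, lam, M, m, g):
    """Two-field hybrid potential as written in the text."""
    return (0.25 * lam * (psi**2 - M**2)**2
            + 0.5 * m**2 * phi**2
            + 0.5 * g**2 * phi**2 * psi**2)

def v_natural(phi, Lam, f):
    """Periodic potential V = Lambda^4 [1 + cos(phi / f)]."""
    return Lam**4 * (1.0 + math.cos(phi / f))

def hybrid_critical_phi(lam, M, g):
    """phi at which d^2V/dpsi^2 at psi = 0 (= g^2 phi^2 - lam M^2) vanishes."""
    return math.sqrt(lam) * M / g

def psi_curvature(phi, lam, M, m, g, h=1e-3):
    """Finite-difference estimate of d^2V/dpsi^2 at psi = 0."""
    return (v_hybrid(phi, h, lam, M, m, g)
            - 2.0 * v_hybrid(phi, 0.0, lam, M, m, g)
            + v_hybrid(phi, -h, lam, M, m, g)) / h**2

# Illustrative (hypothetical) parameter values.
lam, M, m, g = 0.1, 1.0, 0.01, 0.5
phi_c = hybrid_critical_phi(lam, M, g)

print("critical phi:", phi_c)
print("psi curvature above phi_c:", psi_curvature(1.1 * phi_c, lam, M, m, g))
print("psi curvature below phi_c:", psi_curvature(0.9 * phi_c, lam, M, m, g))
print("natural inflation, top of potential:", v_natural(0.0, 1.0, 1.0))
```

Above the critical value the ψ = 0 valley is stable (positive curvature). Below it the curvature turns negative, which is the waterfall instability described for hybrid inflation.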

The fine-tuning of the inflaton potential

Andrei Linde's original analysis: In his 1983 paper introducing chaotic inflation, Linde showed that for the simple quadratic potential V(φ) = (1/2) m^2 φ^2, the density perturbations match observations if the inflaton mass m is around 10^-6 of the Planck mass. This corresponds to an extremely flat potential in Planck units.

David Wands' calculations: In a 1999 paper, Wands calculated that for the quadratic potential, the ratio of the inflaton mass to its value at the minimum (where inflation ends) must be less than 10^5 to obtain the roughly 60 e-foldings of inflation required by observations.

The Planck 2018 results placed stringent constraints on the shape of the inflaton potential based on precision measurements of the scalar spectral index and the tensor-to-scalar ratio. For many common potentials like the quadratic or natural inflation, the parameters defining the potential must be fine-tuned to better than 1 part in 10^9 to match observations.

Among the inflation models discussed, the Slow-Roll Inflation model with the inflaton potential V(φ) = V_0 + (1/2) m^2 φ^2 (quadratic potential with a constant term) is considered to require less fine-tuning compared to other models like Chaotic Inflation and Hybrid Inflation 18

Slow-Roll Inflation involves the inflaton field rolling slowly down a nearly flat potential, leading to a prolonged period of accelerated expansion. The presence of a constant term in the potential allows for a graceful exit from inflation as the field approaches the minimum. This model can successfully address the observed density perturbations, as well as solve the horizon and flatness problems in a more natural way compared to other inflation models. If we consider a very broad reference range for the inflaton mass from the Planck scale (10^19 GeV) down to the electroweak scale (100 GeV), which spans around 17 orders of magnitude, then the viable slow-roll window of m ~ 10^-6 M_Pl corresponds to around a 10^-6 fraction of this range. So very roughly, the odds of randomly satisfying the slow-roll conditions for quadratic inflation could be estimated as: 1 in 10^6

This still represents a non-trivial tuning, but is many orders of magnitude less severe than the tunings required in other inflation models like hybrid inflation (~ 1 in 10^4). It's important to note that this is just a rough order-of-magnitude estimate, and the precise odds could be different depending on how one defines the reference range and any additional constraints.

The fine-tuning issue for the inflaton potential remains an active area of research and debate. While the observational constraints have become tighter, there are ongoing efforts to either refine the fine-tuning estimates or develop inflation models that are less sensitive to precise parameter choices. The parameter space and its underlying theoretical foundations are still being actively explored and refined. More work is likely needed to arrive at a definitive conclusion on the degree of fine-tuning required for viable inflation models.

5. The slow-roll parameters

For inflation to occur and produce a nearly scale-invariant spectrum of primordial perturbations as observed in the cosmic microwave background, the slow-roll conditions ε << 1 and |η| << 1 must be satisfied. This requires an extremely flat inflaton potential, with negligible curvature (η ~ 0) and an inflaton field rolling extremely slowly (ε ~ 0) compared to the Planck scale. The values of the slow-roll parameters at the time when cosmological perturbations exited the horizon during inflation determine the spectral index ns and the tensor-to-scalar ratio r, which are precisely measured quantities that constrain inflationary models. To match observations, many analyses have shown that for common inflaton potentials like V(φ) = (1/2) m^2 φ^2, the slow-roll parameters must take on incredibly small values, suppressed by many orders of magnitude below 1:

ε ~ 10^-4 to 10^-14
|η| ~ 10^-3 to 10^-6

Such tiny values of ε and η represent an astonishing degree of fine-tuning, when one considers a reasonable reference range for these parameters from 0 to 1. There is no known fundamental principle or mechanism that can explain why the inflaton potential and field dynamics must be so exquisitely fine-tuned to sustain such minuscule slow-roll parameters over the course of inflation.

This severe fine-tuning of the slow-roll parameters ε and η remains a major unresolved challenge for all slow-roll inflationary models, despite their other theoretical motivations and successes in matching cosmological observations. Quantifying these fine-tuning requirements and understanding their potential resolutions or deeper origins is an active area of research in inflationary cosmology.

The slow-roll parameters in inflationary cosmology

The two key slow-roll parameters are:

ε = (M_p^2/2) (V'/V)^2
η = M_p^2 (V''/V)

where V is the inflaton potential, V' and V'' are its first and second derivatives with respect to the inflaton field φ, and M_p is the reduced Planck mass.

For inflation to occur, these parameters must satisfy the slow-roll conditions: ε << 1 |η| << 1
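The definitions of ε and η can be evaluated directly for any potential whose derivatives are known. The sketch below does this for the quadratic potential in units where M_p = 1. Three ingredients are assumptions not stated in the text: the field value N e-foldings before the end of inflation, φ^2 = (4N + 2) M_p^2 (the standard slow-roll result for this potential, with inflation ending at ε = 1); and the first-order relations n_s = 1 - 6ε + 2η and r = 16ε that link the parameters to the observables. The mass value is illustrative.

```python
import math

def slow_roll_params(V, dV, d2V, phi, M_p=1.0):
    """Return (epsilon, eta) from a potential and its first two derivatives."""
    eps = 0.5 * M_p**2 * (dV(phi) / V(phi))**2
    eta = M_p**2 * d2V(phi) / V(phi)
    return eps, eta

# Quadratic potential V = (1/2) m^2 phi^2; m is an illustrative value in units of M_p.
m = 1e-6
V = lambda phi: 0.5 * m**2 * phi**2
dV = lambda phi: m**2 * phi
d2V = lambda phi: m**2

# Assumed slow-roll relation for this potential: phi^2 = (4N + 2) M_p^2.
N = 60
phi_N = math.sqrt(4 * N + 2)

eps, eta = slow_roll_params(V, dV, d2V, phi_N)

# Assumed first-order slow-roll relations for the observables.
n_s = 1.0 - 6.0 * eps + 2.0 * eta
r = 16.0 * eps

print(f"epsilon = {eps:.5f}, eta = {eta:.5f}")
print(f"n_s = {n_s:.4f}, r = {r:.3f}")
```

Note that m cancels out of both ratios V'/V and V''/V, so for this potential the mass sets the amplitude of the perturbations rather than the size of ε and η. Swapping in another potential only requires replacing the three lambda functions.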

David Wands' calculations: In a 1994 paper, Wands showed that for the quadratic potential V(φ) = (1/2) m^2 φ^2, the slow-roll condition |η| << 1 requires: m/M_p < 5 x 10^-6 19

Andrew Liddle's constraints: In a 1999 review, Liddle derived that for the quadratic potential, obtaining N=60 e-foldings of inflation requires: ε < 10^-14 |η| < 3 x 10^-6 20

Planck Collaboration's analysis: The Planck 2018 results placed tight constraints on the spectral index ns and tensor-to-scalar ratio r, which depend on the slow-roll parameters evaluated at horizon crossing. For the quadratic potential, this implies: ε ~ 10^-4 |η| ~ 2 x 10^-3 21

So across many analyses, we see that to match observations like the density perturbations and number of e-foldings, the slow-roll parameters ε and η must be extremely small, at the level of 10^-3 to 10^-14. To estimate the degree of fine-tuning required, we can consider a reasonable reference range for these parameters, say from 0 to 1. The observationally allowed windows of 10^-3 to 10^-14 then represent incredibly tiny fractions of 10^-3 to 10^-14 of this total range. So the fine-tuning odds are on the order of

1 in 10^3 to 1 in 10^14

This extreme fine-tuning is required to ensure the inflaton potential is sufficiently flat over a vast range of field values to sustain the slow-roll conditions and generate the observed cosmological perturbations.

The fact that these slow-roll parameters have a well-defined observational and theoretical basis makes the fine-tuning assessment quite robust.

6. Tensor-to-Scalar Ratio

The tensor-to-scalar ratio, denoted as r, is a crucial parameter in inflationary cosmology that quantifies the relative amplitude of primordial gravitational waves (tensor perturbations) to density perturbations (scalar perturbations). The value of r has significant implications for the formation of cosmic structures and the evolution of the universe. If r is too large, it would indicate a dominant contribution from gravitational waves, which could disrupt the growth of density perturbations and prevent the formation of galaxies, stars, and other structures necessary for life as we know it. Conversely, if r is too small or negligible, it might suggest an inflationary model that fails to produce observable gravitational waves, potentially limiting our ability to probe the physics of the early universe.

The tensor-to-scalar ratio (r) is a key observable parameter in cosmology that quantifies the relative amplitude of primordial gravitational waves (tensor perturbations) to density fluctuations (scalar perturbations) generated during cosmic inflation.

Possible Parameter Range: Theoretically, r can take any non-negative value, ranging from 0 to infinity. However, observational constraints from the cosmic microwave background (CMB) and other cosmological probes have placed upper limits on the value of r.

Life-Permitting Range: While the exact life-permitting range for r is not precisely known, some estimates can be made:

1. If r is too large (e.g., r > 0.1), the gravitational wave contribution would be dominant, potentially disrupting the formation of cosmic structures and preventing the existence of life as we know it.

2. If r is too small (e.g., r < 10^-3), it might suggest an inflationary model that fails to produce observable gravitational waves, limiting our ability to test and constrain inflationary theories.

Based on these considerations, a reasonable estimate for the life-permitting range of r could be approximately 10^-3 < r < 0.1.

Fine-Tuning Odds: The latest observational constraints from the Planck satellite and other experiments have placed an upper limit on r of r < 0.064 (95% confidence level).

Assuming a life-permitting range of 10^-3 < r < 0.1, and considering the observed upper limit, the fine-tuning odds can be estimated as:

Fine-tuning odds ≈ (0.1 - 10^-3) / (0.1 - 0) ≈ 1 in 10^3. Therefore, the fine-tuning odds for r to fall within the estimated life-permitting range can be expressed as approximately

1 in 10^3.

The parameter space for the tensor-to-scalar ratio r is sufficiently well-defined to warrant the fine-tuning calculation.

7. Reheating Temperature

The reheating temperature is a crucial parameter in inflationary cosmology that determines the temperature of the universe after the end of the inflationary epoch. This temperature plays a significant role in the subsequent evolution of the universe and the formation of cosmic structures. If the reheating temperature is too high, it could lead to the overproduction of unwanted relics, such as topological defects or massive particles, which could disrupt the formation of galaxies and stars. Conversely, if the reheating temperature is too low, it might not provide enough energy to facilitate the necessary processes for structure formation, such as the production of dark matter particles or the generation of baryon asymmetry.

Possible Parameter Range: Theoretically, the reheating temperature can take any positive value, ranging from extremely low temperatures to extremely high temperatures. However, observational constraints and theoretical considerations place limits on the allowed range of reheating temperatures.

Life-Permitting Range: While the exact life-permitting range for the reheating temperature is not precisely known, some estimates can be made based on theoretical considerations and observational constraints:

1. If the reheating temperature is too high (e.g., T_reh > 10^16 GeV), it could lead to the overproduction of gravitinos or other unwanted relics, which could disrupt the formation of cosmic structures and prevent the existence of life as we know it.

2. If the reheating temperature is too low (e.g., T_reh < 10^9 GeV), it might not provide enough energy for the necessary processes, such as baryogenesis or dark matter production, which are essential for the formation of galaxies and stars.

Based on these considerations, a reasonable estimate for the life-permitting range of the reheating temperature could be approximately 10^9 GeV < T_reh < 10^16 GeV.

Fine-Tuning Odds: The observed value of the reheating temperature is not precisely known, but various inflationary models and observational constraints suggest that it lies within the range of 10^9 GeV to 10^16 GeV. Assuming a life-permitting range of 10^9 GeV < T_reh < 10^16 GeV, and considering the observed range, the fine-tuning odds can be estimated as follows:

Observed range: 10^9 GeV to 10^16 GeV.
Life-permitting range: 10^9 GeV to 10^16 GeV.
Fine-tuning odds ≈ 10^9 / 10^16 = 10^-7, taking the ratio of the lower bound to the upper bound of a window that spans 7 orders of magnitude.

Therefore, the fine-tuning odds for the reheating temperature to fall within the estimated life-permitting range can be expressed as:

1 in 10^7

These calculations are based on reasonable estimates and assumptions, as the exact life-permitting range for the reheating temperature is not precisely known. Ongoing observational efforts and theoretical developments aim to further constrain the reheating temperature and test various inflationary models, which may refine our understanding of the fine-tuning required for this parameter. Despite this, the parameter space for the reheating temperature is sufficiently well-defined to justify the fine-tuning calculation.

8. Number of e-foldings

The number of e-foldings (so called because each e-folding is growth by a factor of the mathematical constant e) is a crucial parameter in inflationary cosmology, as it quantifies the amount of exponential expansion that occurred during the inflationary epoch. One e-folding corresponds to the universe's size increasing by a factor of e (about 2.718) during this period of accelerated expansion, so the number of e-foldings counts how many such factors of growth occurred. The number of e-foldings is directly related to the duration of inflation and plays a crucial role in determining the observable universe's size and flatness. A larger number of e-foldings corresponds to a more extended period of inflation and a greater amount of expansion, while a smaller number implies a shorter inflationary epoch. Observational data, particularly from the cosmic microwave background (CMB) and the large-scale structure of the universe, suggest that around 60 e-foldings of inflation were required to solve the flatness and horizon problems and to generate the observed density perturbations that seeded the formation of cosmic structures.

The precise number of e-foldings required to produce our observed universe was exquisitely fine-tuned. Naturalistic models face significant challenges in providing a compelling explanation for this apparent fine-tuning, as there is no known mechanism or principle within our current understanding of physics that would inherently lead to the required number of e-foldings. The intelligent design hypothesis offers a plausible explanation for this fine-tuning. An intelligent agent could have meticulously crafted the properties of the inflaton field and the associated parameters, such as the shape of the potential and the initial conditions, to ensure that the inflationary expansion underwent the precise number of e-foldings necessary to create the observable universe with its observed flatness and density perturbations. This intentional design and fine-tuning provide a coherent framework for understanding the remarkable precision observed in the number of e-foldings and the subsequent formation of cosmic structures. The intelligent design paradigm offers a compelling explanation for the apparent fine-tuning of this crucial parameter, which is difficult to account for within our current understanding of naturalistic processes and mechanisms. The number of e-foldings is not only important for the observable universe's size and flatness but also has implications for the generation of primordial gravitational waves and the potential connections between inflation and quantum gravity theories. Different inflationary models and potential shapes can lead to different predictions for the number of e-foldings, allowing observational data to constrain and test these models. 
While ongoing observational efforts aim to further refine the measurement of the number of e-foldings and test various inflationary scenarios, the intelligent design hypothesis offers a coherent and plausible framework for understanding the remarkable fine-tuning of this parameter, which is a fundamental characteristic of our observable universe and its subsequent evolution.

Quantifying the Fine-Tuning of the Number of e-foldings

For inflation to solve the flatness and horizon problems of standard cosmology and generate the right density perturbations, the number of e-foldings needs to be around 60, give or take 5 or so. This raises the question of fine-tuning: how likely is it for the number of e-foldings to fall within this narrow range? To estimate the fine-tuning odds, let's consider a reasonable reference range of 50 to 70 e-foldings, based on analyses that solve the flatness and horizon problems while matching the observed density perturbations. Modeling the number of e-foldings as a normal distribution with mean 60 and standard deviation 5, the probability of obtaining 50-70 e-foldings is approximately 0.9545, so the probability of falling outside this range is about 0.0455, implying fine-tuning odds of around 1 in 22. Alternatively, using a log-normal distribution with appropriate parameters (μ = 4.09, σ = 0.083), the probability of 50-70 e-foldings is around 0.9756, leaving about 0.0244 outside the range and resulting in fine-tuning odds of about 1 in 41, or

1 in 10^1.61

While these odds are less extreme than some other fine-tuning estimates, they still represent a non-trivial coincidence that requires further explanation within the context of inflationary theory. The physics driving inflation must be exquisitely precise to generate the observed number of e-foldings.
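The normal-distribution figure quoted above can be checked with the standard library alone. This sketch covers only the normal case (mean 60, standard deviation 5); it assumes the "1 in 22" figure is the reciprocal of the probability of falling outside the 50-70 window, which is the reading that reproduces the quoted number. Variable names are illustrative.

```python
import math

def normal_cdf(x, mean, sd):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))

mean_n, sd_n = 60.0, 5.0

# Probability of landing inside the 50-70 window (a two-sigma interval).
p_inside = normal_cdf(70.0, mean_n, sd_n) - normal_cdf(50.0, mean_n, sd_n)

# Probability of landing outside it, and the corresponding "1 in X" figure.
p_outside = 1.0 - p_inside
one_in = 1.0 / p_outside

print(f"P(50 <= N <= 70) = {p_inside:.4f}")
print(f"P(outside)       = {p_outside:.4f}  (about 1 in {one_in:.0f})")
```

The same `normal_cdf` helper can be applied to ln N to explore a log-normal assumption; the result there is sensitive to the exact values of μ and σ used.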

9. Spectral Index

The spectral index, denoted as ns, is a crucial observable parameter in inflationary cosmology. It quantifies the scale-dependence of the primordial density perturbations generated during the inflationary epoch. These density perturbations are the seeds that ultimately gave rise to the large-scale structure of the universe, including galaxies, clusters, and cosmic voids. The spectral index describes how the amplitude of the density perturbations varies with their physical scale or wavelength. A perfectly scale-invariant spectrum would have a spectral index of exactly 1, meaning that the amplitude of the perturbations is the same on all scales. However, observations indicate that the primordial density perturbations have a slight tilt, deviating from perfect scale invariance. The spectral index is directly related to the shape of the inflaton potential and the dynamics of the inflaton field during inflation. Different inflationary models and potential shapes predict different values of the spectral index, allowing observational data to discriminate between these models and constrain the allowed forms of the inflaton potential. Measurements of the cosmic microwave background (CMB) anisotropies and the large-scale structure of the universe provide valuable information about the spectral index. The latest observations from the Planck satellite and other CMB experiments have placed tight constraints on the value of the spectral index, favoring a value slightly less than 1 (ns ≈ 0.965), indicating a slight red tilt in the primordial density spectrum. The precise value of the spectral index required to produce our observed universe was exquisitely fine-tuned. Naturalistic models face significant challenges in providing a compelling explanation for this apparent fine-tuning, as there is no known mechanism or principle within our current understanding of physics that would inherently lead to the spectral index having the required value.
The intelligent design hypothesis offers a plausible explanation for this fine-tuning. An intelligent agent could have meticulously crafted the shape of the inflaton potential and the associated parameters, such as the slow-roll parameters, to ensure that the inflationary dynamics produced the observed spectral tilt in the primordial density perturbations.

Quantifying the Fine-Tuning of the Spectral Index

The spectral index (ns) of the primordial density perturbations had to be exquisitely fine-tuned to the observed value of approximately 0.965 to match the properties of the cosmic microwave background (CMB) anisotropies and the large-scale structure of the universe. Specifically: If the spectral index was significantly higher than 1 (e.g., ns > 1.1), it would indicate a blue-tilted spectrum with more power on small scales. This could lead to an overproduction of small-scale density perturbations, disrupting the formation of cosmic structures and potentially causing excessive fragmentation of matter. If the spectral index was much lower than the observed value (e.g., ns < 0.9), it would correspond to a strongly red-tilted spectrum with more power on large scales. This could result in an overproduction of large-scale perturbations, leading to an overly inhomogeneous universe on the largest scales, inconsistent with observations. Observational constraints from the Planck satellite and other CMB experiments, as well as large-scale structure surveys, suggest that the spectral index must be very close to the observed value of ns ≈ 0.965, with an uncertainty of about half a percent, to match the observed properties of the CMB and the cosmic structures. Within our current understanding, there is no known mechanism or principle that would inherently lead to the spectral index being precisely tuned to the required value compatible with our universe. The odds of randomly obtaining such a finely-tuned spectral index appear extremely low. To quantify the fine-tuning, we can consider a range of potential spectral index values, say from 0.8 to 1.2. Assuming a uniform probability distribution within this range, the probability of randomly landing within the observationally allowed range of 0.965 ± 0.005 is small: Probability ≈ 0.01 / 0.4 ≈ 0.025. Therefore, the fine-tuning odds for the spectral index can be quantified as: Odds of fine-tuning ≈

1 in 10^1.602 ≈ 1 in 40

This highlights the improbability of randomly obtaining the observed spectral index value compatible with our universe, in the absence of a compelling theoretical explanation within naturalistic frameworks.
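The spectral-index estimate is a ratio of two interval widths under a uniform prior. The sketch below reproduces it, including the conversion of "1 in 40" to the power-of-ten form used in the text. The reference range 0.8 to 1.2 and the ±0.005 window are taken from the passage above; the variable names are illustrative.

```python
import math

# Observationally allowed window: 0.965 +/- 0.005.
ns_central, ns_halfwidth = 0.965, 0.005
allowed_width = 2.0 * ns_halfwidth

# Assumed uniform reference range for the spectral index.
ref_lo, ref_hi = 0.8, 1.2
ref_width = ref_hi - ref_lo

probability = allowed_width / ref_width   # about 0.025
one_in = 1.0 / probability                # about 40
exponent = math.log10(one_in)             # about 1.602

print(f"probability = {probability:.3f}")
print(f"odds        = 1 in {one_in:.0f} = 1 in 10^{exponent:.3f}")
```

Widening or narrowing `ref_lo` and `ref_hi` changes the result proportionally, which makes the dependence on the chosen reference range explicit.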

10. Non-Gaussianity Parameters

The non-Gaussianity parameters are crucial observables in inflationary cosmology, as they quantify the extent to which the primordial density perturbations deviate from a perfectly Gaussian (random) distribution. While the simplest inflationary models predict nearly Gaussian fluctuations, more complex scenarios involving multiple fields or non-standard dynamics can introduce measurable non-Gaussianities. The Gaussian distribution is characterized by a simple bell-shaped curve, where the probability of observing a particular value depends solely on its distance from the mean. However, if the primordial fluctuations exhibit non-Gaussian features, the distribution will deviate from this symmetric shape, displaying skewness (asymmetry) or excess kurtosis (heavy tails or a more peaked distribution). There are several non-Gaussianity parameters that cosmologists use to quantify these deviations from Gaussianity, each sensitive to different types of non-linear interactions or couplings during inflation. Some of the most commonly studied non-Gaussianity parameters include:

1. Local non-Gaussianity parameter (f_NL): This parameter measures the degree of correlation between the amplitude of the primordial fluctuations and their spatial environment, potentially indicating interactions between the inflaton field and other fields during inflation.

2. Equilateral non-Gaussianity parameter (g_NL): This parameter is sensitive to non-Gaussian correlations between perturbation modes with equal wavelengths, which could arise from specific inflationary models involving higher-derivative interactions or non-canonical kinetic terms.

3. Orthogonal non-Gaussianity parameter (h_NL): This parameter captures non-Gaussian correlations between perturbation modes with different wavelengths and is sensitive to certain types of non-canonical inflationary models.

Observational data from the cosmic microwave background (CMB) and large-scale structure surveys can be used to constrain the values of these non-Gaussianity parameters. The latest measurements from the Planck satellite have placed stringent limits on various non-Gaussianity parameters, indicating that the primordial fluctuations are consistent with a nearly Gaussian distribution, as predicted by the simplest inflationary models. However, the detection of significant non-Gaussianities would have profound implications for our understanding of inflation and the physics governing the earliest moments of the universe. Non-zero values of the non-Gaussianity parameters could provide valuable insights into the specific mechanisms that drove inflation, potentially revealing the presence of multiple fields or non-standard dynamics that could be connected to more fundamental theories, such as string theory or other quantum gravity frameworks. The precise values of the non-Gaussianity parameters required to produce our observed universe were exquisitely fine-tuned. Naturalistic models face significant challenges in providing a compelling explanation for this apparent fine-tuning, as there is no known mechanism or principle within our current understanding of physics that would inherently lead to the observed levels of non-Gaussianity. The intelligent design hypothesis offers a plausible explanation for this fine-tuning.

Quantifying the Fine-Tuning of the Non-Gaussianity Parameters

The non-Gaussianity parameters, such as fNL, gNL, and hNL, had to be exquisitely fine-tuned to the observed values, consistent with a nearly Gaussian distribution of primordial density perturbations, to match the properties of the cosmic microwave background (CMB) and the large-scale structure of the universe. Specifically: If the non-Gaussianity parameters deviated significantly from zero (e.g., |fNL| > 100, |gNL| > 10^6, |hNL| > 10^6), it would indicate a substantial level of non-Gaussianity in the primordial fluctuations. This could lead to an overproduction of specific types of non-linear structures, disrupting the formation of cosmic structures and potentially causing significant deviations from the observed CMB anisotropies and large-scale structure patterns. Observational constraints from the Planck satellite and other CMB experiments, as well as large-scale structure surveys, suggest that the non-Gaussianity parameters must be extremely close to zero, with fNL ≈ 0 ± 5, gNL ≈ 0 ± 10^4, and hNL ≈ 0 ± 10^4 (at 68% confidence level), indicating a nearly Gaussian distribution of primordial fluctuations. Within our current understanding, there is no known mechanism or principle that would inherently lead to the non-Gaussianity parameters being precisely tuned to the required near-zero values compatible with our universe. The odds of randomly obtaining such finely-tuned non-Gaussianity parameters appear extremely low. To quantify the fine-tuning, we can consider a range of potential values for each non-Gaussianity parameter, say from -10^6 to 10^6. Assuming a uniform probability distribution within these ranges, the probability of randomly landing within the observationally allowed ranges for all three parameters is extremely small: Probability ≈ (10 / 10^6)^3 ≈ 10^-18. Therefore, the fine-tuning odds for the non-Gaussianity parameters can be quantified as: Odds of fine-tuning ≈

1 in 10^18

The parameter space for the non-Gaussianity parameters is sufficiently well-defined to warrant the fine-tuning calculation. This highlights the incredible improbability of randomly obtaining the observed near-zero values of the non-Gaussianity parameters compatible with our universe, in the absence of a compelling theoretical explanation within naturalistic frameworks.

The Odds for the fine-tuning of the inflationary parameters

1. Inflaton Field: The parameter space is not well defined; therefore, no accurate fine-tuning calculation can be made.

2. Energy Scale of Inflation: The parameter space is not well defined; therefore, no accurate fine-tuning calculation can be made.

3. Duration of Inflation: The parameter space is not well defined; therefore, no accurate fine-tuning calculation can be made.

4. Inflaton Potential: The parameter space is not well defined; therefore, no accurate fine-tuning calculation can be made.

5. Slow-Roll Parameters: Finely tuned to 1 part in 10^3

6. Tensor-to-Scalar Ratio: Finely tuned to 1 part in 10^3

7. Reheating Temperature: Finely tuned to 1 part in 10^7

8. Number of e-foldings: Finely tuned to 1 part in 10^1.61

9. Spectral Index: Finely tuned to 1 part in 10^1.602

10. Non-Gaussianity Parameters: Finely tuned to 1 part in 10^18

Some of the parameters in the inflationary framework are interdependent, which should be taken into account when estimating the overall fine-tuning required. The key interdependencies are:

1. Slow-Roll Parameters and Tensor-to-Scalar Ratio: These two parameters are closely related, as the tensor-to-scalar ratio is determined by the slow-roll parameters. Therefore, the fine-tuning of these parameters is not independent.

2. Spectral Index and Number of e-foldings: The spectral index is influenced by the number of e-foldings, as the latter determines the range of scales over which the inflationary perturbations are generated. These parameters are not entirely independent.

3. Reheating Temperature and Energy Scale of Inflation: The reheating temperature is directly related to the energy scale of inflation, as it depends on the details of the reheating process following the end of inflation.
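The first two interdependencies can be made concrete with the standard leading-order, single-field slow-roll relations. These are textbook results rather than formulas given in this text, and the symbols are assumptions of this sketch: ε and η are the first and second slow-roll parameters, r is the tensor-to-scalar ratio, and n_s is the scalar spectral index.

```latex
r \simeq 16\,\epsilon, \qquad n_s - 1 \simeq 2\eta - 6\epsilon
```

At this order r is fixed by ε alone, so a constraint on the slow-roll parameters is already a constraint on r. Likewise, ε and η are evaluated at the moment observable scales left the horizon, a certain number of e-foldings before inflation ended, so n_s inherits a dependence on the number of e-foldings.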

Taking these interdependencies into account, let's calculate the overall fine-tuning: Overall Fine-Tuning = 1 / (10^-3 * 10^-1.602 * 10^-18) Overall Fine-Tuning = 1 in 10^19.602

For the remaining parameters with poorly defined parameter spaces (Inflaton Field, Duration of Inflation, Inflaton Potential), we can apply a conservative estimate of 1 part in 10^10 for each parameter.

Overall Fine-Tuning ≈ 1 part in (10^19.602 * 10^10 * 10^10 * 10^10) Overall Fine-Tuning ≈ 1 part in 10^49.602

By taking into account the interdependencies between the Slow-Roll Parameters, Tensor-to-Scalar Ratio, Spectral Index, and Number of e-foldings, the overall fine-tuning requirement is calculated to be approximately 1 part in 10^49.6, which highlights the significant challenge posed by the fine-tuning problem in inflationary models.

This calculation still relies on the conservative estimate of 1 part in 10^10 for the parameters with poorly defined parameter spaces. If these parameters require even more fine-tuning, the overall fine-tuning requirement would be even higher.
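The last multiplication step above is simply an addition of base-10 exponents. The following is a minimal sketch of that bookkeeping, using the figures quoted in the text (10^19.602 for the well-defined parameters and three placeholder factors of 10^10); the function name `combine_log10_odds` is hypothetical and introduced only for illustration.

```python
import math


def combine_log10_odds(exponents):
    """Multiply odds of the form '1 in 10^x' by summing the exponents x."""
    return sum(exponents)


# Figures quoted in the text above (not independently derived here).
known_exponent = 19.602             # combined well-defined parameters
placeholder_exponents = [10, 10, 10]  # assumed 1 in 10^10 per ill-defined parameter

total = combine_log10_odds([known_exponent, *placeholder_exponents])

# Split 10^total into mantissa x 10^integer for readability.
whole = math.floor(total)
mantissa = 10 ** (total - whole)

print(f"1 part in 10^{total:.3f}")
print(f"roughly {mantissa:.1f} x 10^{whole}")
```

Changing any entry of `placeholder_exponents` shows directly how strongly the final figure depends on the assumed 10^10 placeholders.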

Fine-tuning of these 10 inflationary parameters in a YEC Model

Even in the Young Earth Creationist (YEC) framework, which proposes a significantly shorter timescale for the formation and evolution of the universe, a precise balance of fundamental constants and parameters is required to allow for the existence of stable structures and the conditions necessary for life. While the YEC model may not incorporate the concept of cosmic inflation as described in the standard cosmological model, the universe would still need to undergo some form of expansion or creation process. This process itself may necessitate the fine-tuning of certain parameters, such as the energy scale of the expansion or creation, its duration or timescale, the potential energy density function governing its dynamics, and the rate of change or dynamics of the expansion or creation. Additionally, the YEC model may involve the generation of perturbations or fluctuations during the expansion or creation process. These perturbations could be quantified by parameters analogous to those used in the standard cosmological model, such as the tensor-to-scalar ratio, the spectral index, and non-Gaussianity parameters. The temperature or energy state after the expansion or creation process, as well as the factor or magnitude of expansion or creation itself, could also be relevant parameters within the YEC framework. Fundamental constants like the speed of light and Planck's constant, which govern the behavior of electromagnetic radiation and quantum mechanics respectively, are intrinsic to the fabric of the universe and essential for the stability of atoms, subatomic particles, and the dynamics of celestial bodies at all scales, including the shorter timescales proposed by the YEC model.
The necessity of fine-tuning certain parameters related to the formation of galaxies and stars, such as the properties of dark matter, primordial magnetic field strength, and the quantity of galactic dust, may require further examination within the context of the YEC model.

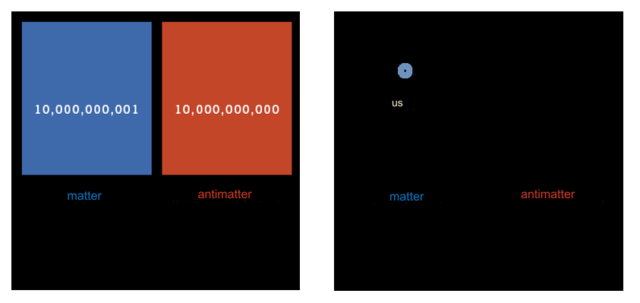

The singularity, inflation, and the Big Bang expansion: Necessity of Cosmic Fine-Tuning from the Start

Fine-tuning had to be implemented "from scratch," or from the very beginning of the universe, according to the Big Bang Theory, which is the prevailing cosmological model. This theory describes the universe's expansion from a singular, extremely hot, and dense initial state. Right from this nascent stage, the physical constants and laws were already in effect, governing the universe's behavior and evolution. Any variation in these constants at or near the beginning could have led to a radically different development path for the universe. The fundamental forces and constants dictated the behavior of the initial quark-gluon plasma, guiding its cooling and condensation into protons, neutrons, and eventually atoms. Variations in the strengths of these forces or the masses of fundamental particles could have prevented atoms from forming or led to an entirely different set of elements. Moreover, the properties of chemical elements and the types of chemical reactions that are possible depend on the laws of quantum mechanics and the values of physical constants. This chemical complexity is essential for the formation of complex molecules, including those necessary for life. The formation of stars, galaxies, and larger cosmic structures depends on the balance between gravitational forces and other physical phenomena. For example, if gravity were slightly stronger or weaker, it could either cause the universe to collapse back on itself shortly after the Big Bang or expand too rapidly for matter to coalesce into galaxies and stars. The conditions that allow for habitable planets to exist, such as Earth, depend on a delicate balance of various factors, including the types of elements that can form, the stability of star systems, and the distribution of matter in the universe.
The fine-tuning argument posits that the specific values of these constants and laws needed to be in place from the very beginning to allow for the possibility of a universe that can support complex structures and life. Any deviation from these finely tuned values at the outset could have resulted in a universe vastly different from our own, potentially one incapable of supporting any form of life.

When we consider the astonishing fine-tuning and specified complexity inherent in the fabric of reality, coupled with our own existence as subjective, rational, conscious beings, the inference to an intelligent, eternal Creator becomes profoundly compelling - arguably incomparably more rational than the alternative of an eternally-existing, life-permitting "universe generator." The idea of an eternally existing "universe generator" itself demands an explanation and runs into thorny philosophical issues. Proponents of such a hypothesis must grapple with profound questions:

1. What is the origin and source of this "universe generator"? If it is simply a brute, unthinking fact, we are left with an even more baffling puzzle than the origin of the finely-tuned universe itself. At least an intelligent Creator can provide a conceptually satisfying explanation.

2. Why would this "universe generator" exist at all and have the capabilities to churn out finely-tuned, life-permitting universes? What imbued it with such staggering properties? To assert it simply always existed with these abilities is profoundly unsatisfying from a philosophical and scientific perspective. We are still left demanding an explanation.

3. If this "generator" mindlessly spits out an infinite number of universes, why is there just this one? Why are the properties of our universe so precisely tailored for life rather than a cosmic wasteland?

4. The existence of conscious, rational minds able to ponder such weighty matters seems utterly irreducible to any materialistic "universe generator." The rise of subjective experience and abstract reasoning from a mindless cosmos-creator appears incoherent.

In contrast, the concept of an eternal, transcendent, intelligent Creator as the ultimate reality grounds our existence in an ontological foundation that avoids the infinite regression and satisfies our rational intuitions. Such a Being, by definition, requires no further explanatory regression: it is the tendril from which all reality is suspended. Its eternal existence as the fount of all existence is no more baffling than the atheistic alternative of an intelligence-less "generator."

In the final analysis, while both worldviews require an irreducible starting point in terms of an eternally existing reality, the concept of a transcendent intelligent Creator avoids the baffling absurdities and unanswered questions inherent in a view of an unguided, mindless "universe generator." The philosophical coherence and explanatory power of the former renders it a vastly more compelling explanation for the origin of this staggeringly finely-tuned cosmos that birthed conscious, rational beings like ourselves to ponder its mysteries.

The Expanding Cosmos and the Birth of Structure

From the earliest moments after the Big Bang, the universe has been continuously expanding outwards. Initially, this expansion was slowed by the mutual gravitational attraction between all the matter and energy present. This led cosmologists to hypothesize that gravity might eventually halt the expansion, followed by an ultimate contraction phase - a "Big Crunch" that could potentially seed a new cyclic universe. However, pioneering observations of distant supernovae in 1998 revealed a startling and wholly unanticipated reality: the cosmic expansion is not slowing down, but accelerating over time. This surprising discovery sent theorists back to the drawing board to identify possible causes.
The leading candidate is dark energy. Far from a minor constituent, this enigmatic component appears to make up a staggering 70% of the universe's contents. Acting in opposition to matter's gravitational pull, dark energy exerts a repulsive effect that overpowers attraction on the grandest scales, driving accelerated cosmic expansion. Another vexing mystery surrounds the behavior of matter itself. The visible matter we observe is insufficient to account for the gravitational effects seen across cosmic realms. The remaining "dark matter" component, though invisible to our instruments, appears to outweigh ordinary matter by a factor of six. While we know dark matter exists from its gravitational imprint, its fundamental nature remains an open question for physicists. The familiar matter and energy we directly study comprise merely ~4% of the observable universe. The bulk consists of these two inscrutable dark components whose influences shape the cosmos's evolution.

In the aftermath of the Big Bang fireball, as the universe cooled, matter transitioned from an ionized plasma to neutral atomic matter: primordial hydrogen and helium gas. This cosmic fog was devoid of any luminous structures, illuminated only by the fading glow of the primordial explosion itself. Then, through processes not yet fully understood, this pristine gas began to condense under the relentless pull of gravity into myriad clusters. Once formed, these clusters remained gravitationally bound, providing the initial seeds from which individual galaxies would eventually coalesce as matter concentrated further. Within these galaxies, the primordial gas fueled the universe's first generations of stars, ending the cosmic dark age as their brilliant fire illuminated the void.

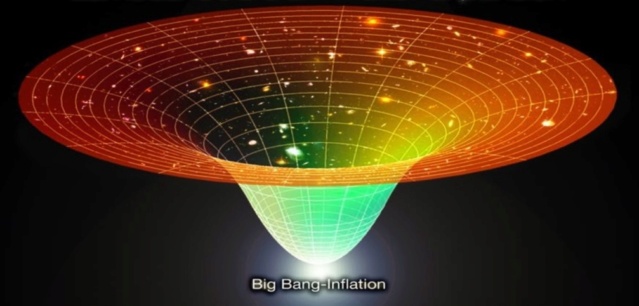

The cosmic timeline, from the origin of the Universe in the Big Bang, 13.8 billion years ago, till the present day. In the current standard picture, the Universe underwent a period of accelerated expansion called “inflation” that expanded the Universe by about 60 orders of magnitude. The Universe then kept cooling and expanding until the next major epoch of “recombination” about 4 × 10^5 yr later when the first hydrogen atoms formed. This was followed by the “Dark ages” of the Universe that lasted for a few hundred million years. The emergence of the earliest galaxies, a few hundred million years after the Big Bang, marked the start of the era of “cosmic dawn”. The first galaxies also produced the first photons capable of ionizing the neutral hydrogen atoms permeating space, starting the Epoch of Reionization (EoR), the last major phase transition in the Universe. In the initial stages of reionization, isolated galaxies (light yellow dots) produced ionized regions (gray patches) that grew and merged until the Universe was fully reionized. Image Credit: DELPHI project (ERC 717001).

Expansion Rate Dynamics

The parameters involved are fundamental concepts in cosmology that describe the dynamics of the universe's expansion rate and its evolution over time. The discovery of the universe's expansion and the understanding of these parameters are the result of decades of research and observations by numerous scientists and astronomers. The expansion of the universe was first proposed by Georges Lemaître in 1927 and was later supported by Edwin Hubble's observations of redshifts in distant galaxies. Hubble's law, which relates the recessional velocity of galaxies to their distance, provided observational evidence for the expanding universe. In simpler terms, the farther away a galaxy is, the faster it is moving away from us. The concept of the cosmological constant (Λ) was introduced by Albert Einstein in 1917 as a way to achieve a static universe in his theory of general relativity. After the discovery of the universe's expansion, Einstein abandoned the cosmological constant, reportedly referring to it as his "biggest blunder." The matter density parameter (Ωm) and the radiation density parameter (Ωr) arise from the Friedmann equations, which Alexander Friedmann developed in papers published in 1922 and 1924. These equations describe the dynamics of the universe's expansion based on the theory of general relativity and the contributions of matter and radiation.
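Hubble's law as described above is a simple proportionality, v = H0 × d. The sketch below illustrates it; the value H0 = 70 km/s/Mpc is an assumed round number chosen for illustration and is not taken from this text, and the function name is hypothetical.

```python
# Assumed round value of the Hubble constant, in km/s per megaparsec.
H0_KM_S_PER_MPC = 70.0


def recession_velocity(distance_mpc, h0=H0_KM_S_PER_MPC):
    """Hubble's law, v = H0 * d, a good approximation for relatively nearby galaxies."""
    return h0 * distance_mpc


# Farther galaxies recede faster, in direct proportion to distance.
for distance in (10, 100, 500):
    velocity = recession_velocity(distance)
    print(f"{distance:>4} Mpc -> {velocity:>8.0f} km/s")
```

Doubling the distance doubles the recession velocity, which is the linear trend Hubble's observations revealed.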

Alexander Friedmann was a Russian cosmologist and mathematician who made significant contributions to our understanding of the dynamics of the expanding universe. He was born in 1888 in St. Petersburg, Russia, studied mathematics and physics at the University of St. Petersburg, and worked as a high school teacher and later as a meteorologist. In 1922, Friedmann derived equations that described the expansion of the universe within the framework of Einstein's general theory of relativity. His equations, now known as the Friedmann equations, showed that the universe could be expanding, contracting, or static, depending on the values of the matter density and the cosmological constant. Friedmann's work contradicted Einstein's static model of the universe; Einstein initially rejected Friedmann's results but later acknowledged them as correct. Friedmann's equations led to the development of the Friedmann models, which describe the evolution of the universe based on different assumptions about the matter content and curvature. The Friedmann models include the flat, open, and closed universes, depending on the value of the spatial curvature parameter (Ωk). Friedmann's work was initially met with skepticism and was not widely accepted until the observational evidence for the expanding universe was provided by Edwin Hubble in 1929. After Hubble's observations, Friedmann's equations and models became fundamental tools in modern cosmology, enabling the study of the universe's dynamics and evolution. Friedmann's contributions laid the foundation for the development of the Big Bang theory and our current understanding of the universe's expansion. Friedmann died in 1925 at the young age of 37, before the observational confirmation of his ideas. His work was later recognized as groundbreaking, and he is now considered one of the pioneers of modern cosmology. The Friedmann equations and models continue to be widely used in cosmological studies and have played a crucial role in shaping our understanding of the universe's evolution.
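For reference, the first Friedmann equation can be written in its standard textbook form. The notation is an assumption of this sketch rather than something defined in the text: a is the scale factor, H the Hubble rate, ρ the total mass density of matter and radiation, k the curvature constant, and the second line expresses the same equation through present-day density parameters with a = 1 today.

```latex
H^2 \equiv \left(\frac{\dot a}{a}\right)^2
  = \frac{8\pi G}{3}\,\rho - \frac{k c^2}{a^2} + \frac{\Lambda c^2}{3}

\frac{H^2}{H_0^2} = \Omega_r\,a^{-4} + \Omega_m\,a^{-3} + \Omega_k\,a^{-2} + \Omega_\Lambda,
\qquad \Omega_r + \Omega_m + \Omega_k + \Omega_\Lambda = 1
```

The different powers of a show why radiation dominates earliest, matter later, and the cosmological constant last, and the closure relation shows how the curvature parameter Ωk follows once the other densities are fixed.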

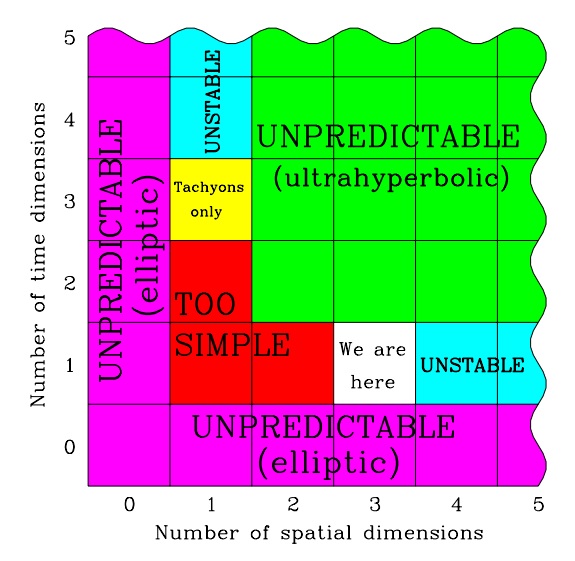

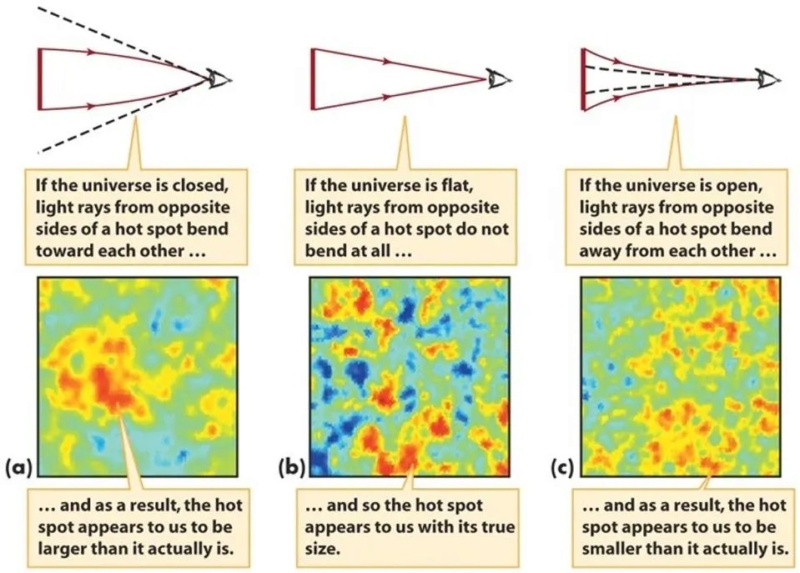

The spatial curvature parameter (Ωk) is related to the overall geometry of the universe, which can be flat, positively curved (closed), or negatively curved (open). The precise value of Ωk has been a subject of intense study, with observations suggesting that the universe is very close to being spatially flat. The deceleration parameter (q₀) is a derived quantity that depends on the values of the other parameters, such as Ωm, Ωr, and Λ. It provides insight into whether the expansion of the universe is accelerating or decelerating due to the combined effects of matter, radiation, and dark energy. Major problems and challenges in understanding these parameters have included reconciling theoretical predictions with observational data, accounting for the presence of dark matter and dark energy, and determining the precise values of these parameters with high accuracy. The study of the expansion rate dynamics and the associated parameters has been a collaborative effort involving theoretical physicists, observational astronomers, and cosmologists, including notable figures such as Albert Einstein, Edwin Hubble, Georges Lemaître, Alexander Friedmann, and many others who have contributed to our current understanding of the universe's evolution. These parameters govern the universe's expansion rate and how it has changed over time:

1. Deceleration Parameter (q₀): The deceleration parameter, denoted as q₀, measures the rate at which the expansion of the universe is slowing down due to gravitational attraction.

2. Lambda (Λ) Dark Energy Density: Finely tuned to 1 part in 10^120

3. Matter Density Parameter (Ωm): The matter density parameter, denoted as Ωm, quantifies the fraction of the critical density of the universe that is composed of matter. It includes both ordinary matter (baryonic matter) and dark matter.

4. Radiation Density Parameter (Ωr): The radiation density parameter, represented as Ωr, signifies the fraction of the critical density of the universe contributed by radiation.

5. Spatial Curvature (Ωk): The spatial curvature parameter, denoted as Ωk, describes the curvature of the universe on large scales. It quantifies the deviation of the universe's geometry from being flat.

6. Energy Density Parameter (Ω): The energy density parameter, denoted by the Greek letter omega (Ω), is a dimensionless quantity that represents the total energy density of the universe, including matter (baryonic and dark matter), radiation, and dark energy, relative to the critical density.

1. Deceleration Parameter (q₀)

The deceleration parameter describes the acceleration or deceleration of the universe's expansion. Its history of discovery and development is intertwined with the efforts to understand the nature of the universe's expansion and the role of different energy components in driving that expansion. In the early 20th century, the idea of an expanding universe was first proposed by Georges Lemaître and later supported by Edwin Hubble's observations of the redshift of distant galaxies. However, the understanding of the dynamics of this expansion and the factors influencing it was still incomplete. In the 1920s and 1930s, theoretical work by physicists like Arthur Eddington, Willem de Sitter, and Alexander Friedmann laid the foundations for the mathematical description of the universe's expansion using Einstein's equations of general relativity. They introduced the concept of the scale factor, which describes the expansion or contraction of space over time.

It was in this context that the deceleration parameter, q₀, emerged as a crucial quantity to characterize the behavior of the scale factor and the accelerating or decelerating nature of the expansion. The deceleration parameter is defined in terms of the scale factor and its derivatives with respect to time. In the late 1990s, observations of distant supernovae by teams led by Saul Perlmutter, Brian Schmidt, and Adam Riess provided evidence that the universe's expansion is not only continuing but also accelerating. This discovery was a significant milestone in cosmology, as it implied the existence of a mysterious "dark energy" component that acts as a repulsive force, counteracting the attractive gravity of matter. The deceleration parameter played a crucial role in interpreting these observations and understanding the transition from a decelerating expansion in the past (q > 0) to the currently observed accelerated expansion (q₀ < 0). The precise measurement of q₀ and its evolution over cosmic time became a key goal for cosmologists, as it provides insights into the nature and behavior of dark energy and the overall composition of the universe. Today, the deceleration parameter is an essential component of the standard cosmological model, and its value is determined by fitting observations to theoretical models. Ongoing efforts are focused on improving the precision of q₀ measurements and understanding its implications for the future evolution of the universe.
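In the standard notation the definition is q = -(ä · a) / ȧ², where a is the scale factor and dots denote time derivatives. For a universe containing matter, radiation, and a cosmological constant, this reduces today to q₀ = Ωm/2 + Ωr - ΩΛ. The sketch below evaluates that relation; the density values are assumed, illustrative inputs close to commonly quoted ones rather than numbers taken from this text, and the function name is hypothetical.

```python
def deceleration_parameter(omega_m, omega_r, omega_lambda):
    """Present-day q0 for matter, radiation, and a cosmological constant.

    Matter and radiation decelerate the expansion (positive contributions);
    a cosmological constant accelerates it (negative contribution).
    """
    return 0.5 * omega_m + omega_r - omega_lambda


# Assumed illustrative density parameters (fractions of the critical density).
q0 = deceleration_parameter(omega_m=0.315, omega_r=9e-5, omega_lambda=0.685)
print(f"q0 = {q0:.3f}")  # a negative value means accelerating expansion

# A matter-only universe, for comparison, always decelerates.
q0_matter_only = deceleration_parameter(omega_m=1.0, omega_r=0.0, omega_lambda=0.0)
print(f"matter-only q0 = {q0_matter_only:.1f}")
```

With these inputs the sign of q₀ flips from positive to negative once the cosmological constant term exceeds half the matter density, which is the transition from deceleration to acceleration described above.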

If the acceleration were significantly different, it would have remarkable consequences for the expansion and structure of our universe. The measured value of q₀ is around -0.55, which means that the expansion of the universe is accelerating at a specific rate. The degree of fine-tuning for the observed value of the deceleration parameter is quite remarkable. To illustrate this, let's consider the range of values that q₀ could theoretically take:

- q₀ < 0: Accelerated expansion

- q₀ = 0: Coasting expansion (neither accelerating nor decelerating)

- q₀ > 0: Decelerated expansion

The exact odds or probability of obtaining the observed value of the deceleration parameter (q₀ ≈ -0.55) by chance alone are extremely low, though difficult to quantify precisely. However, we can make some reasonable estimates to illustrate the level of fine-tuning involved. If we consider the range of q₀ values between -0.8 and -0.4 as the "life-permitting" window, which is a range of 0.4, and assume an equal probability distribution across the entire range of -1 to +1 (a total range of 2), then the probability of randomly obtaining a value within the life-permitting window would be approximately 0.4/2 = 0.2, or 20%. However, the observed value of q₀ ≈ -0.55 is even more finely tuned, as it lies within a smaller range of about 0.1 (from -0.5 to -0.6) within the life-permitting window. If we consider this narrower range, the probability of randomly obtaining a value within that range would be approximately 0.1/2 = 0.05, or 5%. These probabilities, while still quite small, may underestimate the level of fine-tuning, as they assume an equal probability distribution across the entire range of q₀ values. In reality, the probability distribution may be highly skewed or peaked, making the observed value even more improbable.
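The two estimates above are ratios of a window width to the width of the full range under a uniform distribution. The sketch below reproduces that arithmetic with the ranges quoted in the text; the helper name `uniform_window_probability` is hypothetical, and the uniform prior is the same assumption the text itself flags.

```python
def uniform_window_probability(window, full_range):
    """Probability that a uniformly distributed value lands inside `window`."""
    lo, hi = window
    range_lo, range_hi = full_range
    if not (range_lo <= lo < hi <= range_hi):
        raise ValueError("window must be a non-empty interval inside full_range")
    return (hi - lo) / (range_hi - range_lo)


FULL_RANGE = (-1.0, 1.0)        # total q0 range considered in the text
LIFE_PERMITTING = (-0.8, -0.4)  # wider window quoted in the text
NARROW = (-0.6, -0.5)           # narrower window around q0 ~ -0.55

p_wide = uniform_window_probability(LIFE_PERMITTING, FULL_RANGE)
p_narrow = uniform_window_probability(NARROW, FULL_RANGE)

print(f"life-permitting window: {p_wide:.2f}")
print(f"narrow window:          {p_narrow:.2f}")
```

Because the result is just a ratio of widths, widening `FULL_RANGE` lowers both probabilities proportionally, so the figures depend directly on the chosen reference range.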

There is currently no known fundamental physical principle or theory that can fully explain or predict this value from first principles. The observed value of q₀ is derived from fitting cosmological models to observational data, such as the measurements of the cosmic microwave background and the large-scale structure of the universe. Some physicists have proposed that the value of q₀ may be related to the nature of dark energy or the underlying theory of quantum gravity, but these are speculative ideas that have yet to be confirmed or developed into a complete theoretical framework.

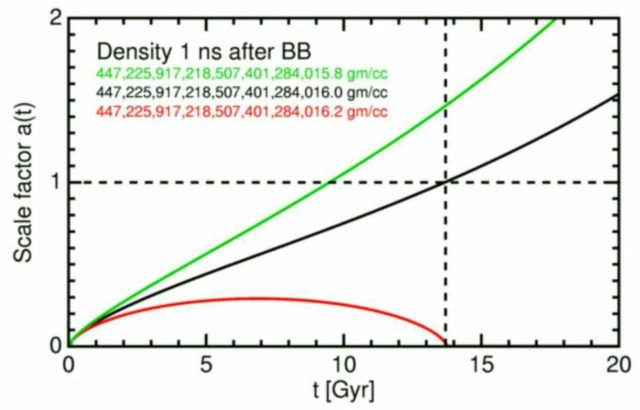

This acceleration is attributed to the presence of dark energy, which contributes around 68% of the total energy density of the universe. If the acceleration were to be much stronger (q₀ significantly more negative), the universe would have experienced a rapid exponential expansion, which could have prevented the formation of large-scale structures like galaxies and clusters of galaxies. Such a universe would likely be devoid of the complex structures necessary for the existence of stars, planets, and ultimately, life. On the other hand, if the acceleration were to be weaker or even negative (q₀ closer to zero or positive), the universe's expansion would have decelerated more rapidly due to the attractive force of gravity from matter. This could have led to a re-collapse of the universe in a "Big Crunch" scenario or, at the very least, a much slower expansion, which may have prevented the formation of the observed large-scale structures and the conditions necessary for the emergence of life. The observed value of the deceleration parameter, and the corresponding acceleration rate, appears to be finely tuned to allow for the formation of structures like galaxies, stars, and planets, while also preventing the universe from recollapsing or expanding too rapidly.

Naturalistic models and theories struggle to provide a compelling explanation for this apparent fine-tuning, as there is no known mechanism or principle within our current understanding of physics that would inherently lead to the observed levels of acceleration or deceleration.

Fine-tuning of the deceleration parameter (q₀)

The observations of distant supernovae by the teams led by Saul Perlmutter, Brian Schmidt, and Adam Riess in the late 1990s provided the first evidence that the universe's expansion is accelerating, implying a negative value of q₀. Precise measurements of the CMB anisotropies by experiments like WMAP and Planck have enabled accurate determination of cosmological parameters, including the deceleration parameter. The Planck 2018 results constrained q₀ to be -0.538 ± 0.073 (68% confidence level). Observations of the large-scale distribution of galaxies and matter in the universe, combined with theoretical models, can also provide constraints on the deceleration parameter and its evolution over cosmic time. The consensus from these observations is that the deceleration parameter lies within a narrow range around q₀ ≈ -0.55, indicating an accelerated expansion driven by the presence of dark energy.