Fine-tuning of the initial conditions of the universe

https://reasonandscience.catsboard.com/t1964-fine-tuning-of-the-initial-conditions-of-the-universe

LUKE A. BARNES A Reasonable Little Question: A Formulation of the Fine-Tuning Argument 2019

Initial conditions or boundary conditions

• 2 constants for the Higgs field: the vacuum expectation value (vev) and the Higgs mass,

• 12 fundamental particle masses, relative to the Higgs vev (i.e., the Yukawa couplings): 6 quarks (u,d,s,c,t,b) and 6 leptons (e,µ,τ,νe ,νµ,ντ),

• 3 force coupling constants for the electromagnetic (α), weak (αw) and strong (αs) forces,

• 4 parameters that determine the Cabibbo-Kobayashi-Maskawa matrix, which describes the mixing of quark flavours by the weak force,

• 4 parameters of the Pontecorvo-Maki-Nakagawa-Sakata matrix, which describe neutrino mixing,

• 1 effective cosmological constant (Λ),

• 3 baryon (i.e., ordinary matter) / dark matter / neutrino mass per photon ratios,

• 1 scalar fluctuation amplitude (Q),

• 1 dimensionless spatial curvature (κ ≲ 10^-60).

This does not include 4 constants that are used to set a system of units of mass, time, distance and temperature:

Newton’s gravitational constant (G),

the speed of light c,

Planck’s constant ħ, and

Boltzmann’s constant kB.

There are 25 constants from particle physics, and 6 from cosmology.
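The totals in that last sentence can be checked by summing the bullet counts above. The snippet below is only a bookkeeping sketch; the dictionary keys are informal labels chosen here, not Barnes's own terminology.

```python
# Parameter counts as listed in the Barnes (2019) excerpt above.
# The key names are informal labels chosen for this sketch.
particle_physics = {
    "Higgs field (vev, mass)": 2,
    "fermion masses (Yukawa couplings)": 12,
    "force coupling constants": 3,
    "CKM matrix parameters": 4,
    "PMNS matrix parameters": 4,
}
cosmology = {
    "cosmological constant": 1,
    "baryon / dark matter / neutrino per-photon ratios": 3,
    "scalar fluctuation amplitude Q": 1,
    "spatial curvature": 1,
}

particle_total = sum(particle_physics.values())
cosmology_total = sum(cosmology.values())
print(particle_total, cosmology_total, particle_total + cosmology_total)
```

The four unit-setting constants (G, c, ħ, kB) are deliberately left out of both dictionaries, as in the excerpt.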

https://philarchive.org/archive/BARARL-3

Ian Morison: A Journey through the Universe, page 362

Had Ω not been in the range 0.999999999999999 to 1.000000000000001 one second after its origin the Universe could not be as it is now. This is incredibly fine tuning, and there is nothing in the standard Big Bang theory to explain why this should be so. This is called the ‘flatness’ problem.
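A rough way to see why Ω has to start so close to 1 is the standard Friedmann-equation scaling. The sketch below assumes a textbook universe that is first radiation-dominated and then matter-dominated, with no inflation and no dark energy. These assumptions, and the symbols a (scale factor), H (Hubble rate) and k (curvature constant), are not part of the quoted passage.

```latex
\begin{align}
  \Omega(t) - 1 &= \frac{k c^{2}}{a(t)^{2} H(t)^{2}},
      \qquad H = \frac{\dot a}{a} \\
  \text{radiation era: } a \propto t^{1/2}
      &\;\Rightarrow\; aH \propto t^{-1/2}
      \;\Rightarrow\; |\Omega - 1| \propto t \\
  \text{matter era: } a \propto t^{2/3}
      &\;\Rightarrow\; aH \propto t^{-1/3}
      \;\Rightarrow\; |\Omega - 1| \propto t^{2/3}
\end{align}
```

Under these assumptions any departure from Ω = 1 grows with time in both eras, so a value near 1 today implies a far smaller departure one second after the origin.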

The galaxies formed as a result of fluctuations in the density of the primeval Universe – the so-called ‘ripples’ that are observed in the Cosmic Microwave Background. The parameter that defines the amplitude of the ripples has a value of ~10^-5. If this parameter were smaller the condensations of dark matter that took place soon after the Big Bang (and were crucial to the formation of the galaxies) would have been both smaller and more spread out, resulting in rather diffuse galaxy structures in which star formation would be very inefficient and planetary systems could not have formed. If the parameter had been less than 10^-6, galaxies would not have formed at all! But if this parameter were greater than 10^-5 the scale of the ‘ripples’ would be greater and giant structures, far greater in scale than galaxies, would form and then collapse into super-massive black holes – a violent universe with no place for life!

https://3lib.net/book/2384366/fd7c64

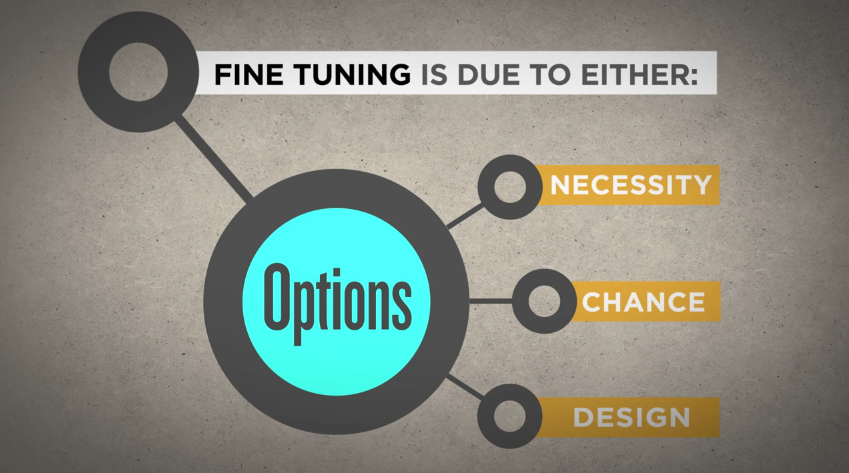

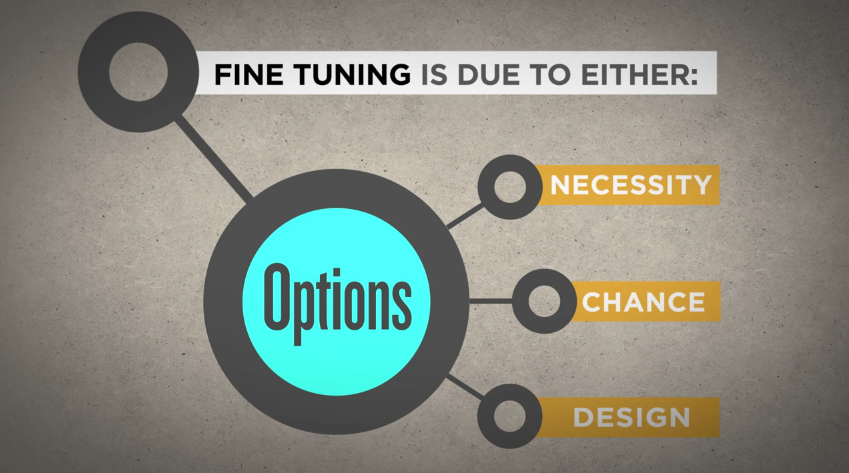

Initial Conditions and “Brute Facts”

Initial Conditions. Besides physical constants, there are initial or boundary conditions, which describe the conditions present at the beginning of the universe. 2

Initial conditions are independent of the physical constants. One way of summarizing the initial conditions is to speak of the extremely low-entropy (that is, highly ordered) initial state of the universe. This refers to the initial distribution of mass-energy. In The Road to Reality, physicist Roger Penrose estimates that the odds of the initial low-entropy state of our universe occurring by chance alone are on the order of 1 in 10^(10^123). This ratio is vastly beyond our powers of comprehension. Since we know a life-bearing universe is intrinsically interesting, this ratio should be more than enough to raise the question: Why does such a universe exist? If someone is unmoved by this ratio, then they probably won’t be persuaded by additional examples of fine-tuning. In addition to initial conditions, there are a number of other well-known features of the universe that also exhibit a high degree of fine-tuning. Among them are the following:

Ratio of masses for protons and electrons

If it were slightly different, building blocks for life such as DNA could not be formed.

Velocity of light

If it were larger, stars would be too luminous. If it were smaller, stars would not be luminous enough.

Mass excess of neutron over proton

If it were greater, there would be too few heavy elements for life. If it were smaller, stars would quickly collapse as neutron stars or black holes.

Stephen Meyer: Return of the God Hypothesis, page 184

The density of the universe one nanosecond (a billionth of a second) after the beginning had to have the precise value of 10^24 kilograms per cubic meter. If the density were larger or smaller by only 1 kilogram per cubic meter, galaxies would never have developed. This corresponds to a fine-tuning of 1 part in 10^24.

Energy-Density is Finely-Tuned 1

The amount of matter (or more precisely energy density) in our universe at the Big Bang turns out to be finely tuned to about 1 part in 10^55. In other words, to get a life-permitting universe the amount of mass would have to be set to a precision of 55 decimal places. This fine-tuning arises because of the sensitivity to the initial conditions of the universe – the life-permitting density now is certainly much more flexible! If the initial energy density would have been slightly larger, gravity would have quickly slowed the expansion and then caused the universe to collapse too quickly for life to form. Conversely, if the density were a tad smaller, the universe would have expanded too quickly for galaxies, stars, or planets to form. Life could not originate without a long-lived, stable energy source such as a star. Thus, life would not be possible unless the density were just right – if we added or subtracted even just the mass of our body to that of the universe this would have been catastrophic!

There is, however, a potential dynamical solution to this problem based on a rapid early expansion of the universe known as cosmic inflation. There are 6 aspects of inflation that would have to be properly set up, some of which turn out to require fine-tuning. One significant aspect is that inflation must last for the proper amount of time – inflation is posited to have been an extremely brief but hyper-fast expansion of the early universe. If inflation had lasted a fraction of a nanosecond longer, the entire universe would have been merely a thin hydrogen soup, unsuitable for life. In a best-case scenario, about 1 in 1000 inflationary universes would avoid lasting too long. The biggest issue, though, is that for inflation to start, it needs a very special and rare state of extremely smooth energy density.

Even if inflation solves this fine-tuning problem, one should not expect new physics discoveries to do away with other cases of fine-tuning: “Inflation represents a very special case… This is not true of the vast majority of fine-tuning cases. There is no known physical scale waiting in the life-permitting range of the quark masses, fundamental force strengths, or the dimensionality of spacetime. There can be no inflation-like dynamical solution to these fine-tuning problems because dynamical processes are blind to the requirements of intelligent life. What if, unbeknownst to us, there was such a fundamental parameter? It would need to fall into the life-permitting range. As such, we would be solving a fine-tuning problem by creating at least one more. And we would also need to posit a physical process able to dynamically drive the value of the quantity in our universe toward the new physical parameter.”

Where Is the Cosmic Density Fine-Tuning?

This word picture helps to demonstrate a number used to quantify that fine-tuning, namely 1 part in 10^60. Compared to the total mass of the observable universe, 1 part in 10^60 works out to about a tenth part of a dime.

Let's consider a universe that contains only matter. If the matter density is sufficiently large, gravity will overcome the expansion and cause the universe to collapse on itself. If the density is sufficiently small, the cosmos will continue to expand forever with negligible slowing. If the density is just right, the universe will expand forever, but continually slow down its expansion rate until it becomes static at an infinite time into the future. In a universe that contains only matter, this corresponds to a "flat" geometry for the universe. Life and flatness are related because only a flat universe meets two life-essential requirements. First, a flat universe survives long enough for an adequate number of generations of stars to form that will make the heavy elements and long-lived radiometric isotopes that advanced life requires. Second, a flat universe expands slowly enough for the matter to clump together to form galaxies, stars, and planets, but not so slowly as to form only black holes and neutron stars.
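The "just right" density in this matter-only picture is the critical density, 3H²/(8πG). The following is a minimal sketch of that threshold and the three outcomes the paragraph describes. The Hubble constant of 70 km/s/Mpc is an assumed illustrative value, and the function names are made up for this example.

```python
import math

G = 6.674e-11            # Newton's constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22     # one megaparsec in metres
H0_KM_S_MPC = 70.0       # ASSUMED illustrative Hubble constant, km/s/Mpc

def critical_density(h0_km_s_mpc: float) -> float:
    """Critical density 3 H^2 / (8 pi G) in kg/m^3."""
    h0_si = h0_km_s_mpc * 1.0e3 / MPC_IN_M   # convert to s^-1
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)

def fate(density: float, rho_crit: float) -> str:
    """Fate of a matter-only universe (no dark energy), as in the text."""
    if density > rho_crit:
        return "recollapses"
    if density < rho_crit:
        return "expands forever"
    return "flat: expansion slows toward zero"

rho_c = critical_density(H0_KM_S_MPC)
print(f"critical density ~ {rho_c:.2e} kg/m^3")
print(fate(2.0 * rho_c, rho_c))
print(fate(0.5 * rho_c, rho_c))
```

With the assumed Hubble constant the threshold comes out near 10^-26 kg per cubic meter, roughly a handful of hydrogen atoms per cubic meter.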

Until the mid-1990s, astrophysicists found it remarkable that the universe was so close to a flat geometry because such flatness is unstable with respect to time. Even though they could detect only about 4 percent of the mass required to make the universe flat, this required the early universe to be exquisitely close to "flat" to within one part in 10^60. The previous statement holds true even given the uncertainties that existed twenty years ago (and to a lesser extent still do) in measurements of the cosmic mass density. Thus, in the absence of dark energy, the expansion rate would have changed so dramatically that the galaxies, stars, and planets necessary for physical life would never have formed.

Over the past fifteen years the picture has changed significantly. First, measurements of the radiation left over from the cosmic creation event, also known as the cosmic microwave background radiation, confirmed (with an error bar of about 3 percent) that the universe is geometrically flat. Second, the concept of an extremely early epoch of cosmic inflation (a brief period of hyperexpansion of the universe when it was less than a quadrillionth of a quadrillionth of a second old) was developed into a scientifically testable hypothesis that later measurements partially confirmed. Third, astronomers discovered another density parameter for the universe, namely space energy density or what is now known as dark energy. For most astronomers and physicists an early epoch of cosmic inflation solves the one part in 10^60 fine-tuning problem because such inflation in the early universe drives it exquisitely close to a flat geometry regardless of the universe's initial mass density.

A cosmic fine-tuning problem remains, however. The total cosmic mass density measured through several independent methods falls short by a little more than a factor of three from that required to make a flat-geometry universe, which measurements of the cosmic microwave background radiation have established. Dark energy comes to the rescue to make up the deficit, but not without a price. By any accounting, the source or sources of dark energy are at least 120 orders of magnitude larger than the amount detected. This implies that somehow the source(s) must cancel so as to leave just one part in 10^120 in order to match the small amount of dark energy detected by astronomers. Therefore, while inflation and dark energy can "eliminate" the one part in 10^60 fine-tuning in the mass density of the universe, they can only do so by introducing the far more exquisite one part in 10^120 fine-tuning in the dark energy density.
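The "120 orders of magnitude" figure can be roughly reproduced with a back-of-envelope comparison. The sketch below assumes the "source" is estimated by the Planck density c^5/(ħG²), which is the usual naive cutoff estimate. The article does not say which estimate it has in mind, so that choice and the observed-density figure are assumptions made here.

```python
import math

C = 2.998e8          # speed of light, m/s
HBAR = 1.0546e-34    # reduced Planck constant, J s
G = 6.674e-11        # Newton's constant, m^3 kg^-1 s^-2

# ASSUMPTION: the "source" of dark energy is estimated as the Planck
# density c^5 / (hbar G^2); the article does not specify its estimate.
planck_density = C ** 5 / (HBAR * G ** 2)      # kg/m^3

# ASSUMPTION: observed dark energy is ~70% of a critical density of
# ~9.2e-27 kg/m^3 (H0 = 70 km/s/Mpc); both figures are illustrative.
observed_dark_energy = 0.7 * 9.2e-27           # kg/m^3

# Number of orders of magnitude separating the two densities.
orders = math.log10(planck_density / observed_dark_energy)
print(f"mismatch ~ 10^{orders:.0f}")
```

Under these assumptions the mismatch lands a little above 120 orders of magnitude, in line with the "at least 120" wording. The 70 percent share is simply what remains when matter supplies a bit less than one third of the critical density.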

http://www.reasons.org/articles/where-is-the-cosmic-density-fine-tuning

Initial conditions of the universe

One other fundamental type of fine-tuning should be mentioned, that of the initial conditions of the universe. This refers to the fact that the initial distribution of mass energy – as measured by entropy – must fall within an exceedingly narrow range for life to occur. Some aspects of these initial conditions are expressed by various cosmic parameters, such as the mass density of the early universe, the strength of the explosion of the Big Bang, the strength of the density perturbations that led to star formation, the ratio of radiation density to the density of normal matter, and the like. Various arguments have been made that each of these must be fine-tuned for life to occur. Instead of focusing on these individual cases of fine-tuning, I shall focus on what is arguably the most outstanding special initial condition of our universe: its low entropy. According to Roger Penrose, one of Britain’s leading theoretical physicists, “In order to produce a universe resembling the one in which we live, the Creator would have to aim for an absurdly tiny volume of the phase space of possible universes”. How tiny is this volume? According to Penrose, if we let x = 10^123, the volume of phase space would be about 1/10^x of the entire volume. This is vastly smaller than the ratio of the volume of a proton – which is about 10^-45 m^3 – to the entire volume of the visible universe, which is approximately 10^84 m^3. Thus, this precision is much, much greater than the precision that would be required to hit an individual proton if the entire visible universe were a dartboard! Others have calculated the volume to be zero.

Now phase space is the space that physicists use to measure the various possible configurations of mass-energy of a system. For a system of particles in classical mechanics, this phase space consists of a space whose coordinates are the positions and momenta (i.e. mass × velocity) of the particles, or any other so-called “conjugate” pair of position and momenta variables within the Hamiltonian formulation of mechanics. Consistency requires that any probability measure over this phase space remain invariant regardless of which conjugate positions and momenta are chosen; further, consistency requires that the measure of a volume V(t0) of phase space at time t0 be the same as the measure that this volume evolves into at time t, V(t), given that the laws of physics are time-reversal invariant – that is, that they hold in the reverse time direction. One measure that meets this condition is the standard “equiprobability measure” in which the regions of phase space are assigned a probability corresponding to their volume given by their position and momenta (or conjugate position and momenta) coordinates. Moreover, if an additional assumption is made – that the system is ergodic – it is the only measure that meets this condition. This measure is called the standard measure of statistical mechanics, and forms the foundation for all the predictions of classical statistical mechanics. A related probability measure – an equiprobability distribution over the eigenstates of any quantum mechanical observable – forms the basis of quantum statistical mechanics. Statistical mechanics could be thought of as the third main branch of physics, besides the theory of relativity and quantum theory, and has been enormously successful. Under the orthodox view presented in physics texts and widely accepted among philosophers of physics, it is claimed to explain the laws of thermodynamics, such as the second law, which holds that the entropy of a system will increase towards its maximum with overwhelming probability.
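The dartboard comparison above can be made concrete by working with base-10 exponents, since a number like 1/10^(10^123) is far too small for floating-point arithmetic. This is only a sketch using the figures quoted in the passage; the variable names are chosen for illustration.

```python
# Exponents (base 10) quoted in the passage above.
LOG10_PROTON_VOLUME = -45        # proton volume, m^3
LOG10_UNIVERSE_VOLUME = 84       # visible-universe volume, m^3
PENROSE_X = 10 ** 123            # x in "1 / 10^x"; exact Python integer

# log10 of (proton volume / visible-universe volume): the "dartboard" target.
log10_dartboard = LOG10_PROTON_VOLUME - LOG10_UNIVERSE_VOLUME

# log10 of Penrose's phase-space fraction 1 / 10^x.
log10_penrose = -PENROSE_X

print("dartboard fraction: 10^%d" % log10_dartboard)
print("Penrose fraction:   10^(-10^123)")
print("Penrose target is smaller:", log10_penrose < log10_dartboard)
```

The dartboard fraction has an exponent of only -129, whereas the exponent in Penrose's fraction is itself a 124-digit number, which is why the passage calls the required precision "much, much greater".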

1. https://crossexamined.org/fine-tuning-initial-conditions-support-life/

2. https://www.discovery.org/m/securepdfs/2018/12/List-of-Fine-Tuning-Parameters-Jay-Richards.pdf
