Chapter 1

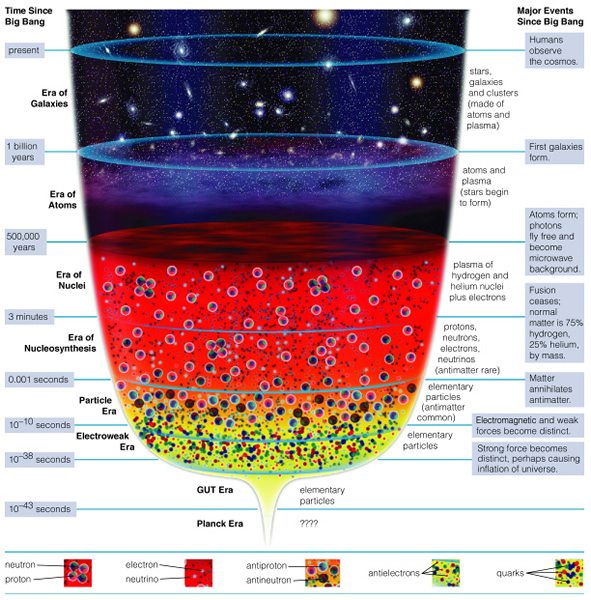

Reasons to believe in God related to cosmology and physics

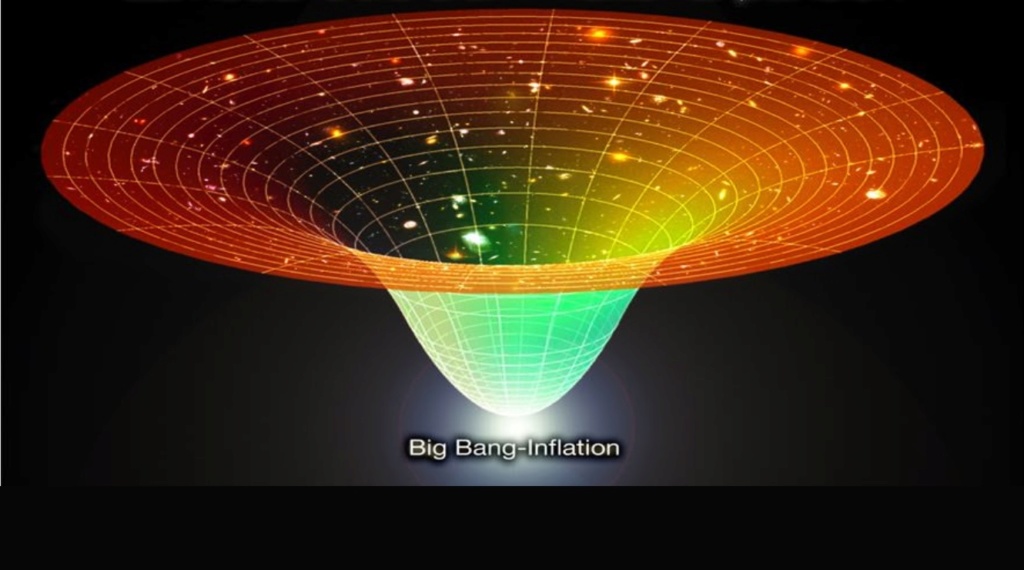

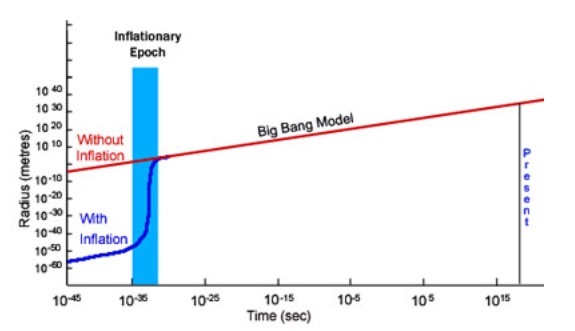

The Inflation and Big Bang Model for the Beginning of the Universe

Chapter 2

The Laws of Physics

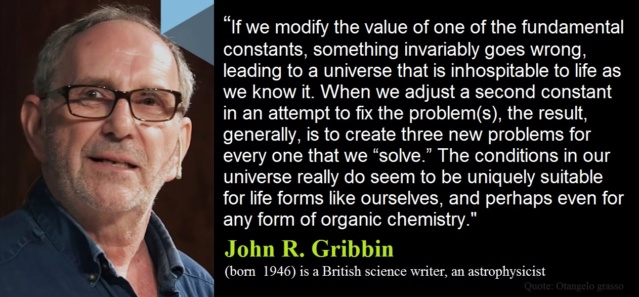

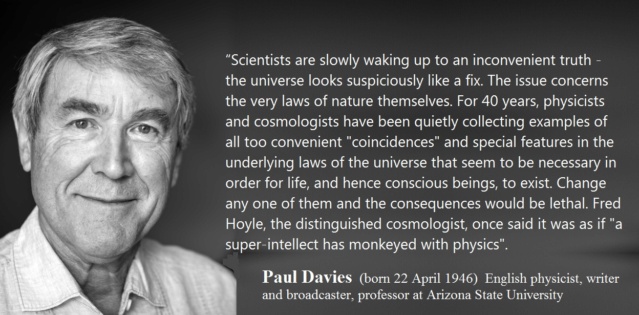

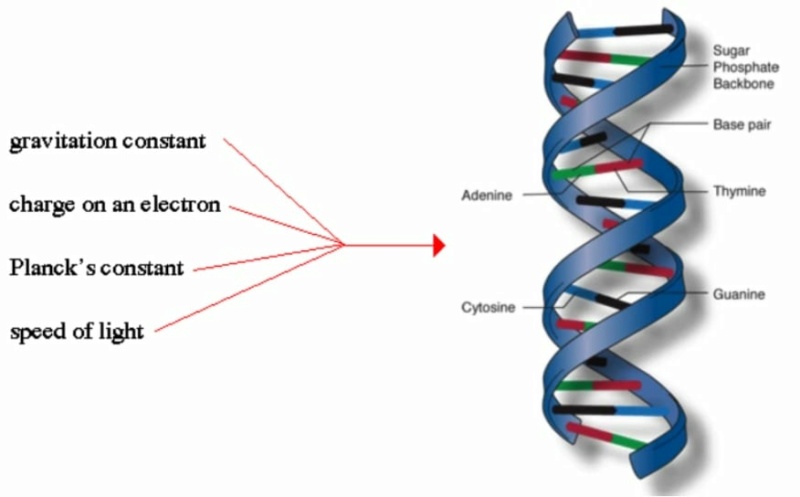

The Fine-Tuning of Universal Constants: An Argument for Intelligent Design

Chapter 3

Fine-tuning of the universe

Answering objections to the fine-tuning argument

Chapter 4

Overview of the Fine-tune Parameters

Conditions for Life on Earth

Chapter 5

Fine-tuning of the Fundamental Forces

Chapter 6

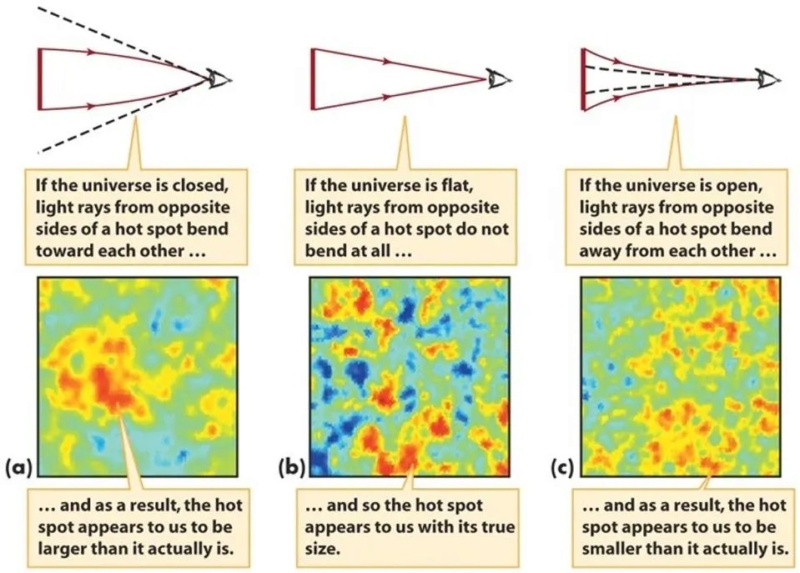

Cosmic Inflation at the beginning of the Universe

What is matter made of?

Chapter 7

Atoms

Nucleosynthesis - evidence of design

What defines the stability of Atoms?

The heavier Elements, Essential for Life on Earth

Stellar Compositions & Spectroscopy

Chapter 8

Star Formation

The Solar System: A Cosmic Symphony of Finely Tuned Conditions

The sun - just right for life

Chapter 9

The origin and formation of the Earth

The electromagnetic spectrum, fine-tuned for life

The Multiverse hypotheses

Introduction

The Heavens Declare the Glory of God

Psalms 19:1-2: The heavens are telling of the glory of God, And their expanse is declaring the work of His hands. Day to day pours forth speech, And night to night reveals knowledge.

Jeremiah 33:25-26: Thus says the Lord, 'If My covenant for day and night stand not, and the fixed patterns of heaven and earth I have not established, then I would reject the descendants of Jacob and David My servant, not taking from his descendants rulers (future messiah) over the descendants of Abraham, Isaac and Jacob. But I will restore their fortunes and will have mercy on them.'

These powerful scriptural passages underscore the connection between the physical universe and the glory of its divine Creator. The psalmist declares that the very heavens themselves testify to God's majesty and creative power. The "expanse" of the cosmos, with its designed patterns and fixed laws, reveals the handiwork of the Almighty. The prophet Jeremiah emphasizes that the constancy and reliability of the physical world reflect the immutability of God's eternal covenant. The unwavering "fixed patterns of heaven and earth" are a testament to the faithfulness of the Lord, who has promised to preserve His chosen people and the coming Messiah from the line of David. These biblical passages provide a powerful theological framework for understanding the fine-tuned universe and the implications it holds for the existence of an intelligent, rational, and sovereign Creator. The precision and order observed in cosmology and physics echo the declarations of Scripture, inviting readers to consider the profound spiritual truths that the physical world proclaims.

Epistemology in a Multidisciplinary World

Comparing worldviews - there are basically just two

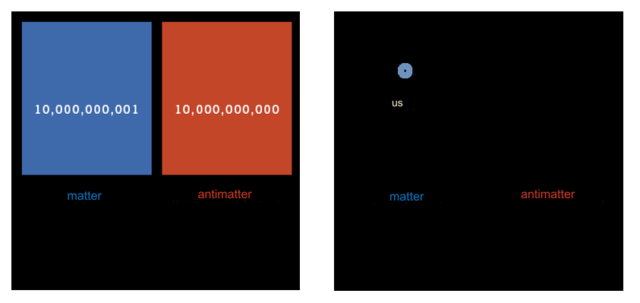

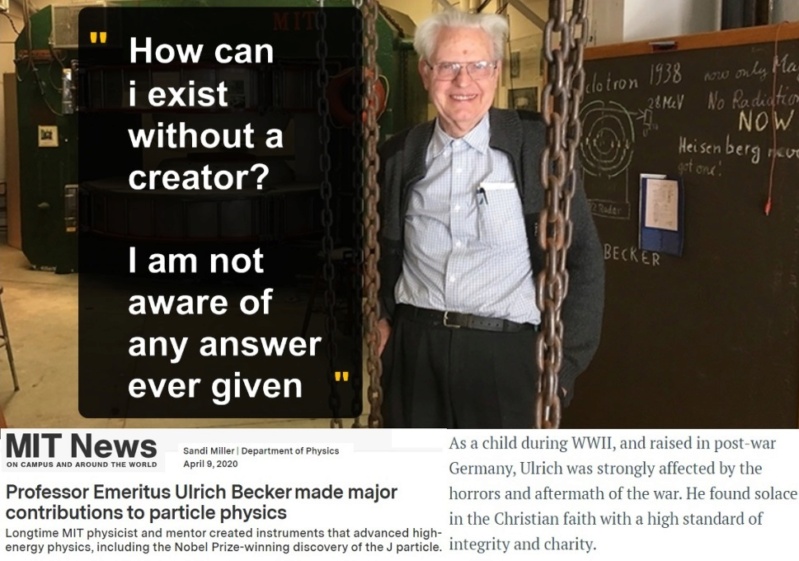

In exploring the vast variety of human belief systems, one finds a myriad of perspectives on the nature of existence, the origins of the physical world, the universe, and the role of the divine within it. At the heart of these worldviews lies a fundamental dichotomy: the belief in a higher power, or the conviction that the material universe, or a multiverse, is all there is. For proponents of theism, the universe is not a random assembly of matter but a creation with purpose and intent. This perspective sees a divine hand in nature and in the complexities of life, holding that the order, beauty, complexity, and especially the information that permeate the universe cannot be mere products of chance. Theism, in its various forms, suggests that a higher intelligence, a God or gods, is responsible for the creation and sustenance of the universe. This belief is not just a relic of ancient thought but is supported by contemporary arguments from philosophy, theology, and several scientific fields extending from cosmology to chemistry, biochemistry, and biology, pointing to instantiation by purposeful creation.

On the other side of the spectrum, atheism and materialism present a worldview grounded in the physical realm, denying the existence of a divine creator. From this viewpoint, the universe and all its phenomena can be explained through natural, unguided processes. Evolution, as a cornerstone of this perspective, posits that life emerged and diversified through natural selection, without the need for a divine creator.

Pantheism offers a different perspective, blurring the lines between the creator and the creation by positing that the divine permeates every part of the universe. This view sees the sacred in the natural world, positing that everything is a manifestation of the divine. Uniformitarianism and polytheism, while seemingly diverse, share the common thread of recognizing a divine influence in the world, albeit in different capacities. Uniformitarianism, often linked with theistic evolution, acknowledges divine intervention in the natural processes, while polytheism venerates multiple deities, each with specific roles and powers. While pantheism blurs the distinction between the creator and the creation by asserting that the divine is inherent in all aspects of the universe, it still falls within the category of worldviews that acknowledge the existence of a deity or divine force. Pantheism offers a unique perspective by viewing the entire cosmos as sacred and imbued with divine presence, transcending traditional concepts of a separate, transcendent creator.

Our worldview might align with naturalism and materialism, where the universe and everything within it, including the concept of multiverses, the steady-state model, oscillating universes, and the phenomena of virtual particles, can be explained by natural processes without invoking a supernatural cause. This perspective holds that the Big Bang, the formation of celestial bodies, the origin of life, the evolution of species, and even morality can be understood through the lens of random, unguided events. Alternatively, our worldview can be rooted in theism and creationism, where we believe in a timeless, all-present, and all-knowing Creator who purposefully designed the universe and all its complexities. This view encompasses the belief that the universe, galaxies, stars, planets, and all forms of life were intentionally brought into existence by divine intelligence, with humans being a unique creation made in the image of this Creator, endowed with consciousness, free will, moral understanding, and cognitive abilities. Life's origins are debated as either stemming from the spontaneous assembly of atoms, driven by random events and natural processes without any guiding intelligence or as the result of deliberate creation by an intelligent entity. The first view posits that life emerged from simple chemical reactions and physical forces, evolving through chance and environmental influences into complex, organized systems without any purposeful direction. The alternative perspective suggests that life was intentionally designed by a conscious being endowed with creativity, intent, and foresight, orchestrating the universe's complexity and life within it according to a specific plan. There are only 2 options: 1) God did it or 2) there was no cause. Either nature is the product of pointless happenstance of no existential value or the display of God's sublime grandeur and intellect. 
Either all is natural and has always been, or there was a supernatural entity that created the natural world. How we answer these fundamental questions has enormous implications for how we understand ourselves, our relation to others, and our place in the universe. Remarkably, however, many people today don’t give these questions nearly the attention they deserve; they live as though the answers don’t matter to everyday life.

Claim: You are presenting a false dichotomy. There are more possibilities beyond the God and the Not-God world.

Reply: At the most fundamental level, every worldview must address the question of whether there exists an eternal, powerful, conscious, and intelligent being (or beings) that can be described as "God" or not. This is not a false dichotomy, but rather a true dichotomy that arises from the nature of the question itself. All propositions, belief systems, and worldviews can be categorized into one of these two basic categories or "buckets":

1. The "God world": This category encompasses worldviews and propositions that affirm the existence of an eternal, powerful, conscious, and intelligent being (or beings) that can be described as "God." This can take various forms, such as a singular deity, a plurality of gods, or even a more abstract concept of a divine or transcendent force or principle. The common thread is the affirmation of a supreme, intelligent, and purposeful entity or entities that transcend the natural world.

2. The "Not-God world": This category includes all worldviews and propositions that deny or reject the existence of any eternal, powerful, conscious, and intelligent being that can be described as "God." This can include naturalistic, materialistic, or atheistic worldviews that attribute the origin and functioning of the universe to purely natural, impersonal, and non-intelligent processes or principles.

While there may be variations and nuances within each of these categories, such as different conceptions of God or different naturalistic explanations, they ultimately fall into one of these two fundamental categories: either affirming or denying the existence of a supreme, intelligent, and purposeful being or force behind the universe. The beauty of this dichotomy lies in its simplicity and comprehensiveness. It cuts through the complexities and nuances of various belief systems and gets to the heart of the matter: Is there an eternal, powerful, conscious, and intelligent being (or beings) that can be described as "God," or not? By framing the question in this way, we acknowledge that all worldviews and propositions must ultimately grapple with this fundamental question, either explicitly or implicitly. Even those who claim agnosticism or uncertainty about the existence of God are effectively placing themselves in the "Not-God world" category, at least temporarily, until they arrive at a definitive affirmation or rejection of such a being.

This dichotomy is not a false one, but rather a true and inescapable one that arises from the nature of the question itself. It provides a clear and concise framework for categorizing and evaluating all worldviews and propositions based on their stance on this fundamental issue. While there may be variations and nuances within each category, the dichotomy between the "God world" and the "Not-God world" remains a valid and useful way of understanding and organizing the vast landscape of human thought and belief regarding the ultimate nature of reality and existence.

Atheist: Right now the only evidence we have of intelligent design is by humans. Why would anyone assume to know an unknowable answer regarding origins?

Reply: Some atheists prioritize making demands rooted in ignorance over establishing a robust epistemological framework for inquiry. Abiogenesis, for instance, serves as a test for materialism, yet after nearly seventy years of experimental attempts, scientists have failed to recreate even the basic building blocks of life in the lab. Similarly, evolution has been rigorously tested through studies such as 70,000 generations of bacteria, yet no transition to a new organismal form or increase in complexity has been observed. The existence of God, like many concepts in historical science, is inferred through various criteria such as abductive reasoning and eliminative inductions. However, instead of engaging in meaningful dialogue, some atheists persist in making nonsensical demands for demonstrations of God's existence. The widely credited multiverse theory faces similar challenges. How does one "test" for the multiverse? The answer remains elusive, even for honest physicists who acknowledge this limitation. In essence, the existence of God stands on par with theories like the multiverse, string theory, abiogenesis, and macroevolution: each is subject to scrutiny and inference rather than direct empirical demonstration. It's important to move beyond the stagnant echo chamber of demands and engage in a constructive dialogue rooted in critical thinking and open-minded inquiry.

Eliminative Inductions

Eliminative induction is a method of reasoning which supports the validity of a proposition by demonstrating the falsity of all alternative propositions. This method rests on the principle that the original proposition and its alternatives form a comprehensive and mutually exclusive set; thus, disproving all other alternatives necessarily confirms the original proposition as true. This approach aligns with the principle encapsulated in Sherlock Holmes's famous saying: by ruling out all that is impossible, whatever remains, even if it is not entirely understood but is within the realm of logical possibility, must be accepted as the truth. In essence, what begins as a process of elimination through induction transforms into a form of deduction, where the conclusion is seen as a logical consequence of the elimination of all other possibilities. This method hinges on the exhaustive exploration of all conceivable alternatives and the systematic dismissal of each, leaving only the viable proposition standing as the deduced truth.
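The structure of this reasoning can be written compactly in symbols. The following is only an illustrative sketch: the labels $H_1, \dots, H_n$ for the candidate propositions are notation introduced here, not taken from any cited source, and the inference is only as strong as the assumption that the list of alternatives is truly exhaustive.

```latex
% Premise 1: the alternatives H_1, ..., H_n are jointly exhaustive.
% Premise 2: every alternative except H_1 has been ruled out.
% Conclusion: H_1 follows deductively.
\[
  (H_1 \lor H_2 \lor \dots \lor H_n), \quad \neg H_2, \; \dots, \; \neg H_n
  \;\;\vdash\;\; H_1
\]
```

With only two alternatives ($n = 2$), this reduces to the classical disjunctive syllogism: from $H_1 \lor H_2$ and $\neg H_2$, infer $H_1$.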

Agnosticism

Some may shy away from the concept of a divine entity because it implies a moral framework that limits certain behaviors, which they may perceive as an infringement on their personal freedom. Similarly, the idea of strict naturalism, which posits that everything can be explained through natural processes without any supernatural intervention, might seem unsatisfying or incomplete to those who ponder deeper existential questions. As a result, agnosticism becomes an appealing stance for those who find themselves in the middle, reluctant to fully embrace either theism or atheism. Agnosticism allows individuals to navigate a middle path, not fully committing to the existence or non-existence of a higher power, while also entertaining the possibility of naturalistic explanations for the universe. This position can provide a sense of intellectual flexibility, enabling one to explore various philosophical and theological ideas without the pressure of adhering to a definitive standpoint. However, this approach is sometimes criticized as being a convenient way to avoid taking a clear position on significant existential questions. Critics might argue that some agnostics, under the guise of promoting skepticism and rationalism, avoid deeper commitments to any particular worldview. They might be seen as using their stance as a way to appear intellectually superior, rather than engaging earnestly with the complex questions at hand. The criticism extends to accusing such individuals of ultracrepidarianism, a term for those who give opinions beyond their knowledge, and falling prey to the Dunning-Kruger effect, where one's lack of knowledge leads to overestimation of one's own understanding. The proverbial wisdom that "the one who is wise in his own eyes is a fool to others" suggests that true wisdom involves recognizing the limits of one's knowledge and being open to learning and growth. 
The path to wisdom, according to this viewpoint, involves moving beyond a superficial engagement with these profound questions and adopting a more humble and inquisitive attitude. Whether through a deepening of spiritual faith, a more rigorous exploration of naturalism, or a thoughtful examination of agnosticism, the journey involves a sincere search for understanding and meaning beyond mere appearances or social posturing.

Limited causal alternatives do not justify a claim of "not knowing"

Hosea 4:6: People are destroyed for lack of knowledge.

Dismissing known facts and logical reasoning, especially when the information is readily available, can be seen as more than just willful ignorance; it borders on folly. This is particularly true in discussions about origins and worldviews, where the implications might extend to one's eternal destiny. While uncertainty may be understandable in situations with numerous potential explanations, the question of God's existence essentially boils down to two possibilities: either God exists, or God does not. Given the abundance of evidence available, it is possible to reach reasoned and well-supported conclusions on this matter.

If the concept of God is not seen as the ultimate, eternal, and necessary foundation for all existence, including the natural world, human personality, consciousness, and rational thought, then what could possibly serve as this foundational entity, and why would it be a more convincing explanation? Without an eternal, purposeful force to bring about the existence of the physical universe and conscious beings within it, how could a non-conscious alternative serve as a plausible explanation? This question becomes particularly pressing when considering the nature of consciousness itself, which appears to be a fundamental, irreducible aspect of the mind that cannot be fully explained by physical laws alone. The idea that the electrons in our brains can produce consciousness, while those in an inanimate object like a light bulb cannot, seems to contradict the principles of quantum physics, which suggest that all electrons are identical and indistinguishable, possessing the same properties.

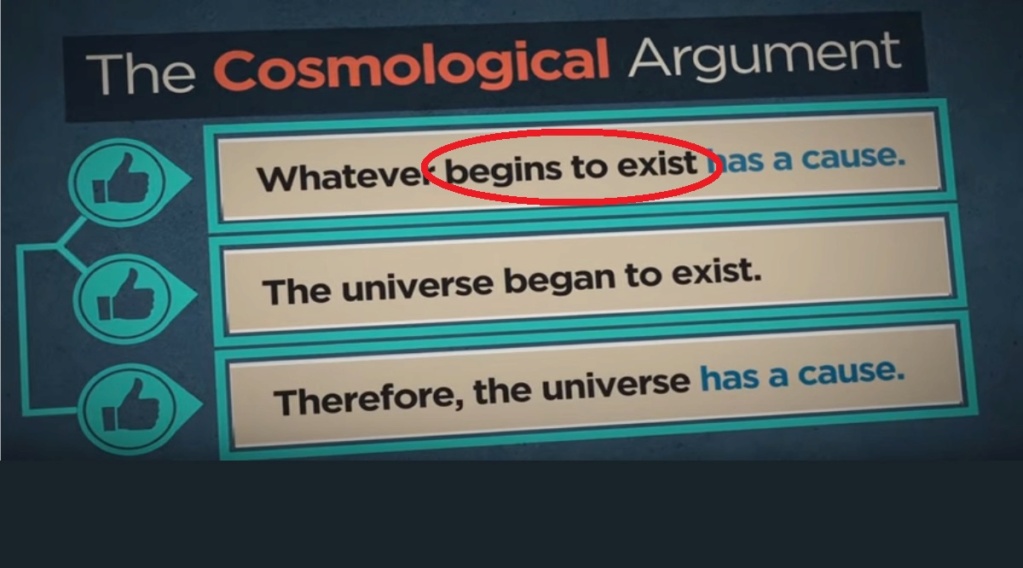

Either there is a God, the creator and causal agent of the universe, or there is not. God either exists or he doesn’t, and there is no halfway house. These are the only two possible explanations, and they are mutually exclusive (it was one or the other), so we can use eliminative logic: if the absence of God is highly improbable, then the existence of God is highly probable.
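In probabilistic terms, the step from "one is improbable" to "the other is probable" is the complement rule. As a hedged sketch, write $G$ for the proposition "God exists" (a symbol introduced here for illustration) and assume, as the paragraph above does, that $G$ and $\neg G$ are the only two options:

```latex
% Complement rule for two mutually exclusive, jointly exhaustive options.
\[
  P(G) + P(\neg G) = 1
  \quad\Longrightarrow\quad
  P(G) = 1 - P(\neg G)
\]
```

So the smaller the probability assigned to $\neg G$, the closer $P(G)$ is to 1. The rule itself says nothing about what value $P(\neg G)$ should take; that has to be argued separately from the evidence.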

Naturalism:

- Multiverse

- Virtual particles

- Big Bang

- Accretion theory

- Abiogenesis

- Common ancestry

- Evolution

Theism:

- Transcendent eternal God/Creator

- Created the universe and stretched it out

- Created the Galaxies, Stars, Planets, the earth, and the moon

- Created life in all its variants and forms

- Created man and woman as a special creation, in His image

- Theology and philosophy: Both lead to an eternal, self-existent, omnipresent transcendent, conscious, intelligent, personal, and moral Creator.

- The Bible: The Old Testament is a catalog of fulfilled prophecies of Jesus Christ, and his mission, death, and resurrection foretold with specificity.

- Archaeology: Demonstrates that all events described in the Bible are historical facts.

- History: Historical evidence reveals that Jesus Christ really did come to this earth, and did physically rise from the dead

- The Bible's witnesses: There are many testimonies of Jesus doing miracles still today, and Jesus appearing to people all over the globe, still today.

- End times: The signs of the end times that were foretold in the Bible are occurring in front of our eyes. New world order, microchip implant, etc.

- After-life experiences: Credible witnesses have seen the afterlife and have come back and reported to us that the afterlife is real.

1. If the Christian perspective appears to be more plausible or coherent than atheism or any other religion, exceeding a 50% threshold of credibility, then choosing to embrace Christianity and adopting its principles for living becomes a logical decision.

2. It can be argued that Christianity holds a probability of being correct that is at least equal to or greater than 50%.

3. Consequently, it follows logically to adopt a Christian way of life based on this assessment of its plausibility.

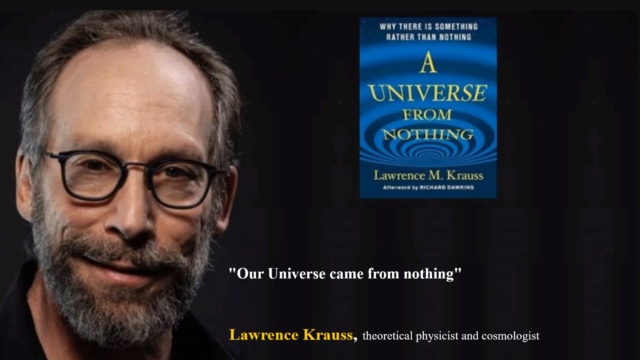

Claim: We replace God with honesty by saying "we don't know" and there is absolutely nothing wrong with that... The fact that we don't currently know does not mean we will never know because we have science, the best method we have for answering questions about things we don't know. Simply saying "God did it" is making up an answer because we are too lazy to try to figure out the real truth. Science still can't explain where life came from and is honest about it. No atheist believes "the universe came from nothing". Science doesn't even waste its time trying to study what came before the Big Bang and the creation of the universe (based on the first law of thermodynamics, many think matter and energy are atemporal, and before the Big Bang, everything was a singularity, but very few people are interested in studying that because it won't change anything in our knowledge about the universe).

Answer: We can make an inference to the best explanation of origins, based on the wealth of scientific information, philosophy, and theology, and using sound abductive, inductive, and deductive reasoning. Either there is a God, or not. So there are only two hypotheses from which to choose. Atheists, rather than admit a creator as the only rational response to explain our existence, prefer to confess ignorance despite the wealth of scientific information that permits informed conclusions.

John Lennox: There are not many options. Essentially, just two. Either human intelligence owes its origin to mindless matter, or there is a Creator. It's strange that some people claim that it is their intelligence that leads them to prefer the first to the second.

Luke A. Barnes: “I don’t know which one of these two statements is true” is a very different state of knowledge from “I don’t know which one of these trillion statements is true”. Our probabilities can and should reflect the size of the set of possibilities.
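Barnes's point about the size of the set of possibilities can be illustrated with a simple calculation. As a sketch, assume (an assumption added here for illustration, not something stated in the quotation) that $n$ mutually exclusive and jointly exhaustive hypotheses $H_1, \dots, H_n$ are treated as equally likely before any evidence is weighed:

```latex
% Uniform prior over n hypotheses (illustrative assumption):
% each hypothesis starts with probability 1/n.
\[
  P(H_i) = \frac{1}{n}, \qquad
  n = 2 \;\Rightarrow\; P(H_i) = \frac{1}{2}, \qquad
  n = 10^{12} \;\Rightarrow\; P(H_i) = 10^{-12}
\]
```

Under that assumption, "I don't know" among two options leaves each at one half, whereas "I don't know" among a trillion options leaves each vanishingly small. Evidence would then shift these starting values; the uniform prior is only the simplest way to make the contrast concrete.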

Greg Koukl observed that while it’s certainly true atheists lack a belief in God, they don’t lack beliefs about God. When it comes to the truth of any given proposition, one only has three logical options: affirm it, deny it, or withhold judgment (due to ignorance or the inability to weigh competing evidences). As applied to the proposition “God exists,” those who affirm the truth of this proposition are called theists, those who deny it are called atheists, and those who withhold judgment are called agnostics. Only agnostics, who have not formed a belief, lack a burden to demonstrate the truth of their position. Are those who want to define atheism as a lack of belief in God devoid of beliefs about God? Almost never! They have a belief regarding God’s existence, and that belief is that God’s existence is improbable or impossible. While they may not be certain of this belief (certainty is not required), they have certainly made a judgment. They are not intellectually neutral. At the very least, they believe God’s existence is more improbable than probable, and thus they bear a burden to demonstrate why God’s existence is improbable. So long as the new brand of atheists has formed a belief regarding the truth or falsity of the proposition “God exists,” then they have beliefs about God, and must defend that belief even if atheism is defined as the lack of belief in God.

The irrationality of atheists making absolute claims of God's nonexistence

Claim: One can't hate something that never happened. Gods are fictional beings created at the dawn of humanity to explain what they didn't have the intelligence to understand. Of the 1000s of gods humans wrongfully worship, none exist.

Reply: Asserting that none of the myriad deities humanity has revered over time exist is a definitive statement that lacks empirical support. Proving the non-existence of all deities is as elusive as confirming the existence of any single deity. This is primarily due to the transcendent nature attributed to deities, positioning them beyond the tangible universe and, consequently, beyond the reach of standard empirical investigation. For someone to categorically affirm or deny the existence of any deity, they would need an exhaustive understanding of the universe, encompassing all dimensions, realms, and the essence of reality beyond our observable universe. Deities are often conceptualized as entities that reside beyond the physical domain, making them inherently unobservable through conventional empirical means. Achieving such a comprehensive grasp on reality would also necessitate an omniscient awareness of all conceivable forms of evidence and methodologies for interpreting said evidence. Given the inherent limitations in human sensory and cognitive capacities, attaining such a level of knowledge is beyond our capability.

Therefore, making absolute declarations about the existence or absence of deities demands omniscience and an ability to perceive beyond the physical, criteria that are unattainable for humans, rendering such assertions unfounded.

Additionally, the challenge in disproving the existence of deities often lies in their definitions, which are typically structured to be non-falsifiable. For instance, defining a deity as an omnipotent, omniscient entity existing outside space and time makes it inherently immune to empirical scrutiny, thereby precluding conclusive disproof of such an entity's existence. Moreover, suggesting that all deities are merely mythological constructs devised to explain the inexplicable oversimplifies the diverse roles and representations of deities across different cultures. While some deities were indeed created to personify natural phenomena, others serve as paragons of moral virtue or are intertwined with specific historical narratives, indicating a complexity that goes beyond mere mythological explanations for natural events.

Why it's an irrational demand to ask for proof of God's existence

Claiming that the lack of direct sensory perception or irrefutable proof of God's existence equates to evidence of non-existence is a significant epistemological error.

Claim: You're asserting that "the god of the bible is truthful". We don't have proof of his existence and know that this character lies in the bible. You wouldn't believe the great god Cheshire was good if you didn't even think he was real.

Response: Atheists, for their part, cannot prove that the physical world is all there is. While it's true that there is no objective proof of the existence of God, the belief in a higher power is a matter of faith for many people. As for the character of God in the Bible, it's important to consider the historical and cultural context in which it was written, as well as the interpretation and translation of the text over time. Additionally, many people view the Bible as a metaphorical or symbolic representation of God's teachings rather than a literal account of his actions.

Furthermore, the analogy to the Cheshire Cat is flawed, as the Cheshire Cat is a fictional character created for a children's story, while God is a concept that has been a central aspect of human spirituality and religion for thousands of years. While we may never be able to definitively prove the existence or non-existence of God, many people find comfort, guidance, and purpose in their faith.

Atheist: All that theists ever offer is arguments sans any demonstration whatsoever. Provide verifiable evidence for any God, demonstrating his existence.

Answer: Many atheists subscribe to concepts like multiverses, abiogenesis, and macroevolution, extending from a common ancestor to humans, despite these phenomena not being directly observable. Yet, they often reject the existence of God on the grounds of invisibility, which might seem like a double standard. It's also worth noting that neither atheism nor theism can conclusively prove their stance on the nature of reality. Science, as a tool, may not be able to fully explain the origins of existence or validate the presence of a divine entity or the exclusivity of the material world. Thus, both worldviews inherently involve a degree of faith. From a philosophical standpoint, if there were no God, the universe might be seen as entirely random, with no underlying order or permanence to the laws of physics, suggesting that anything could happen at any moment without reason. The concept of a singular, ultimate God provides a foundation for consistency and for securing stability and intelligibility within the universe. The notion of divine hiddenness is proposed as a means for preserving human freedom. If God's presence were undeniable, it would constrain the ability to live freely according to one's wishes, similar to how a criminal would feel constrained in a police station. This hiddenness allows for the exercise of free will, offering "enough light" for seekers and "enough darkness" for skeptics. The pursuit of truth, according to this view, should be an open-minded journey, guided by evidence, even if the conclusions challenge personal beliefs. The biblical verses Matthew 7:8 and Revelation 3:20 are cited to illustrate the idea that those who earnestly seek will ultimately find truth, or rather, that truth will find them.

Why does God not simply show himself to us?

If God were to constantly reveal His presence and intervene to prevent evil, many would argue that their freedom to live apart from God would be compromised. Even those who oppose God might find existence under constant divine surveillance intolerable, akin to living in a perpetual police state. Atheists often misunderstand God's desire for worship as egotism. The reality is that humans possess the freedom to choose what to worship, not whether to worship. If God were overtly visible, even this choice would vanish. God represents the essence of truth, beauty, life, and love—encountering Him would be like standing before the breathtaking grandeur of nature and the cosmos combined. Philosopher Michael Murray suggests that God's hiddenness allows people the autonomy to either respond to His call or remain independent. This echoes the story of Adam and Eve in the Garden of Eden, where God's immediate presence wasn't overtly evident. The essence of character is often revealed when one believes they are unobserved.

Perhaps, as Blaise Pascal proposed, God reveals Himself enough to offer a choice of belief. There is "enough light for those who desire to see and enough darkness for those of a contrary disposition." God values human free will over His desires. For those truly seeking truth, maintaining an open mind and following evidence wherever it leads is essential, even if it leads to uncomfortable conclusions. In understanding God's limitations, consider an intelligent software entity unable to directly interact with humans. Similarly, God relies on physical manifestations to communicate with us, much like angels appearing human-like to interact within the physical realm. The notion of a Godless universe is a philosophical theory, not a scientific fact, built upon a chain of beliefs. God's concealed existence serves to prevent chaos and rebellion that could lead to humanity's destruction. Those in covenantal relationship with God find solace in His omnipresence and omniscience, while for those who resist, such attributes would be akin to hell on earth. To force God's overt presence upon an unregenerated world would lead to rebellion, as many would bend their knees out of fear rather than genuine love. God's wisdom is rooted in love, which must be freely given by both parties. However, free humanity often inclines towards loving sin over God, so if God revealed Himself overtly, it would likely destroy that world.

Demand: No one has ever produced any verifiable evidence for any God, demonstrating his existence. All religions make that claim for their specific God. Well, I want some proof, hard verifiable proof.

Answer: Every worldview, regardless of its nature, is fundamentally rooted in faith: a collection of beliefs adopted as truth by its adherents. With this perspective, the notion of absolute "proof" becomes impractical, as no individual possesses such certainty for the worldview they hold. Instead of demanding irrefutable proof, we engage in examining the available evidence, which should guide us toward the worldview that best aligns with that evidence. One common demand from atheists is for proof of God's existence, often accompanied by the claim that there is no evidence to support it. However, what they typically mean is that there is no empirically verifiable proof. Yet, this demand reveals a lack of epistemological sophistication, as it implicitly admits that there is no proof for the assertion that the natural world is all there is. When someone claims there is no proof of God's existence, they essentially concede that there is also no proof that the natural world is all-encompassing. To assert otherwise would require omniscience, an impossible feat. Therefore, their stance lacks substantive reasoning.

The challenge to "show me God" parallels the impossibility of physically demonstrating one's thoughts or memories to another. While we can discuss these concepts, their intrinsic nature eludes empirical verification. To navigate through worldviews and arrive at meaningful conclusions about origins and reality, we must adopt a methodological approach grounded in a carefully constructed epistemological framework. This can involve various methodologies such as rationalism, empiricism, pragmatism, authority, and revelation. While empiricism plays a crucial role in the scientific method, disregarding philosophy and theology outright is a misguided approach adopted by many unbelievers.

Some skeptics reject the idea of God's existence beyond the confines of space-time due to a lack of empirical evidence. However, they simultaneously embrace the default position that there is no God, despite its unverifiability. Yet, God's existence can be logically inferred and is evident. In the absence of a viable alternative, chance or luck cannot serve as a potent causal agent for the universe's existence. Given that the universe began to exist, the necessity of a creator becomes apparent, as nothingness cannot bring about something. Thus, there must have always been a being, and this being serves as the cause of the universe.

Can you demonstrate that your mental states exist? That you are a real person and not a preprogrammed artificial intelligence seeded by aliens? How can I know that your cognitive faculties, including consciousness, perception, thinking, judgment, memory, reasoning, thoughts, imagination, recognition, appreciation, feelings, and emotions, are real? Can you demonstrate that your qualia, the substance of your mind, are real? Could it be that aliens from a distant planet use some unknown communication system to control your eyes, ears, brain, etc., so that you are a programmed bot and all your answers are in reality given by them? You can't demonstrate that this is not the case.

C.S. Lewis (1947): “Granted that Reason is before matter and that the light of the primal Reason illuminates finite minds, I can understand how men should come, by observation and inference, to know a lot about the universe they live in. If, on the other hand, I swallow the scientific cosmology as a whole [i.e. materialism], then not only can I not fit in Christianity, but I cannot even fit in science. If minds are wholly dependent on brains, and brains on biochemistry, and biochemistry (in the long run) on the meaningless flux of the atoms, I cannot understand how the thought of those minds should have any more significance than the sound of the wind in the trees.” “One absolutely central inconsistency ruins [the naturalistic worldview].... The whole picture professes to depend on inferences from observed facts. Unless the inference is valid, the whole picture disappears... Unless Reason is an absolute--all is in ruins. Yet those who ask me to believe this world picture also ask me to believe that Reason is simply the unforeseen and unintended by-product of mindless matter at one stage of its endless and aimless becoming. Here is a flat contradiction. They ask me at the same moment to accept a conclusion and to discredit the only testimony on which that conclusion can be based.” 1

Asking for empirical proof of God's existence is a flawed epistemological approach that reveals a lack of understanding on the part of the unbeliever regarding how to derive sound conclusions about origins. It's important to acknowledge that there is no empirical proof either for or against the existence of God, just as there is no empirical proof that the known universe exhausts all existence. To assert definitively that God does not exist would require omniscience, which we do not possess. Thus, the burden of proof cannot be met by either side. Instead of demanding empirical demonstrations, we can engage in philosophical inquiry to either affirm or deny the existence of a creator based on circumstantial evidence, logic, and reason. Reason itself does not provide concrete evidence but can only imply potentialities, probabilities, and possibilities, particularly when venturing beyond the physical realm.

The seeker of truth must approach the evidence with open-mindedness, setting aside biases and prejudices as much as possible. A rational approach, grounded in scientific reasoning and logic, involves observing, hypothesizing, testing where feasible, and arriving at well-founded conclusions. When examining the natural world, the question shifts from "how something works" (the domain of empirical science) to "what mechanism best explains the origin of X." This approach advances our understanding by considering the intricacies of biochemical reality, intracellular actions, and the molecular world. Darwin's era lacked the depth of knowledge we now possess regarding the complexity of biochemical processes. Today, our understanding continues to expand, with each day contributing to our comprehension of the mechanisms underlying existence.

Empirical evidence alone cannot confirm the existence of:

1. The laws of logic, despite our reliance on them daily.

2. The laws of science, although scientists constantly utilize them.

3. The concept of cause and effect, even though we perceive it regularly.

Some assert the truism "Seeing is believing." However, if one subscribes to this belief, did they actually:

1. "See" this truth?

2. "Feel" it in the dark?

3. "Smell" it in the air?

4. "Taste" it in their dinner?

5. "Hear" it in the middle of the night?

If not, then the notion of "Seeing is believing" cannot be empirically proven to be true. Thus, empirical proof encounters significant challenges and may not always serve as the most reliable form of evidence.

Arguing that there is no evidence of God's existence because we cannot see or sense Him, and because He has not proven His existence beyond any doubt, is the greatest epistemological foolishness one can commit.

Last edited by Otangelo on Thu Apr 18, 2024 5:36 am; edited 64 times in total

the cosmological constant