There was no prebiotic selection to get life originating

https://reasonandscience.catsboard.com/t3121-there-was-no-prebiotic-selection-to-get-life-originating

The four basic building blocks of life are nucleic acids (DNA and RNA), proteins, lipids, and carbohydrates. These molecules are essential for the function and replication of living cells, and their origin is a key question in the study of abiogenesis.

One challenge for naturalistic explanations of the origin of these molecules is the lack of a selection mechanism. In biological systems, natural selection acts on organisms with heritable traits, favoring those that are better adapted to their environment and promoting the propagation of their genes. However, in the early stages of life on Earth, there were no organisms with heritable traits to select for or against.

This presents a problem for explaining how the four basic building blocks of life could have arisen through purely naturalistic processes. Without a selection mechanism to favor certain molecules over others, it is difficult to see how the complex, information-rich molecules that we see in modern cells could have emerged from the chemical soup of early Earth.

Some researchers have proposed that other factors, such as self-organization or autocatalysis, may have played a role in the origin of life. However, these ideas are still largely speculative and face their own challenges and limitations.

Synonyms for selecting include: choosing, picking, handpicking, sorting out, discriminating, choosing something from among others, and giving preference to one thing over another.

Andrew H. Knoll: FUNDAMENTALS OF GEOBIOLOGY 2012

The emergence of natural selection

Molecular selection, the process by which a few key molecules earned key roles in life’s origins, proceeded on many fronts. (Comment: observe the unwarranted claim) Some molecules were inherently unstable or highly reactive and so they quickly disappeared from the scene. Other molecules easily dissolved in the oceans and so were effectively removed from contention. Still other molecular species may have sequestered themselves by bonding strongly to surfaces of chemically unhelpful minerals or clumped together into tarry masses of little use to emerging biology. In every geochemical environment, each kind of organic molecule had its dependable sources and its inevitable sinks. For a time, perhaps for hundreds of millions of years, a kind of molecular equilibrium was maintained as the new supply of each species was balanced by its loss. Such equilibrium features nonstop competition among molecules, to be sure, but the system does not evolve. 6

Comment: That is the key sentence. There would have been no complexification toward higher-order, machine-like structures; instead, these molecules would either quickly disappear, dissolve in the ocean, or clump together into tarry masses of little use to emerging biology. The system does not evolve. In other words, chemical selection would never take place.

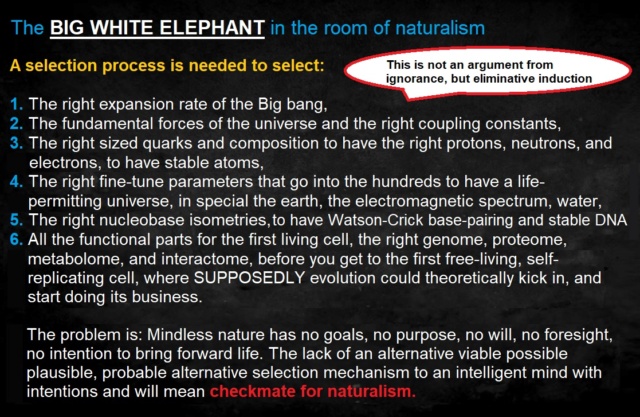

We know that we, as intelligent beings, make choices to get desired outcomes all the time, and there is no alternative to conscious intelligent action. Therefore, it is logical, plausible, and probable that an intelligent creator was at work, choosing the parameters of the laws of physics, the right equations, the right adjustments in the universe, the right building blocks of life, and the right machinery to give life a first go. And he was remarkably good at that.

1. Life requires a limited set of complex biomolecules, shared by universal convention and unity across all life, composed of the four basic building blocks of life (RNA and DNA, amino acids, phospholipids, and carbohydrates). They have a very specific, complex, functional composition and are made by cells in extremely sophisticated, orchestrated metabolic pathways that did not exist on the early earth. If abiogenesis were true, these biomolecules had to be prebiotically available and naturally occurring (formed by natural means, without enzyme catalysis), and then somehow join in an organized way to form the first living cells. They had to be available in large quantities and concentrated at one specific building site.

2. Making things for a specific purpose, for a distant goal, requires goal-directedness. And that's a big problem for naturalistic explanations of the origin of life. There was a potentially unlimited variety of molecules on the prebiotic earth. Competition and selection among them would never have occurred in a way that separated the molecules used in life from those that are useless. Selection is a mechanism too limited in scope and power to explain all of the living order: not even the ability to maintain order in the short term, let alone the emergence, overall organization, and long-term persistence of life from non-living precursors. It is an error of false conceptual reduction to suppose that competition and selection can be the source of explanation for all relevant forms of the living order.

3. We know that a) unguided, random, purposeless events are extremely unlikely to produce the specific, purposeful elementary components needed to build large integrated macromolecular systems, and b) intelligence has goal-directedness. Bricks do not form from clay by themselves and then line up to make walls. Someone made them. Phospholipids do not form from glycerol, a phosphate group, and two fatty acid chains by themselves and line up to make cell membranes. Someone made them. That is God.

If a machine has to be made out of certain components, then the components have to be made first.

Molecules have nothing to gain by becoming the building blocks of life. They are "happy" to lie on the ground or float in the prebiotic ocean, and that's it. Being incredulous that they would concentrate at one building site in the right mixture and in the right complex form, permitting them to complexify in an orderly manner and assemble into complex, highly efficient molecular machines and self-replicating cell factories, is not only justified but sound reasoning. That fact alone destroys materialism and naturalism. Being credulous towards such a scenario means clinging to blind belief. And claiming "we don't know (yet), but science is working on it," while expecting that the explanation will be a naturalistic one (no God required), is a materialism-of-the-gaps argument.

A Few Experimental Suggestions Using Minerals to Obtain Peptides with a High Concentration of L-Amino Acids and Protein Amino Acids 10 December 2020

The prebiotic seas contained L- and D-amino acids, and non-polar and polar AAs, and minerals could adsorb all these molecules. Besides amino acids, other molecules could be found in the primitive seas that competed for mineral adsorption sites. Here, we have a huge problem that could be a double-edged sword for prebiotic chemistry. On the one hand, this may lead to more complex prebiotic chemistry, due to the large variety of species, which could mean more possibilities for the formation of different and more complex molecules. On the other hand, this complex mixture of molecules may not lead to the formation of any important molecule or biopolymer in high concentration to be used for molecular evolution. Schwartz, in his article “Intractable mixtures and the origin of life”, has already addressed this problem, denominating this mixture the “gunk”. 5

Intractable Mixtures and the Origin of Life 2007

A problem which is familiar to organic chemists is the production of unwanted byproducts in synthetic reactions. For prebiotic chemistry, where the goal is often the simulation of conditions on the prebiotic Earth and the modeling of a spontaneous reaction, it is not surprising – but nevertheless frustrating – that the unwanted products may consume most of the starting material and lead to nothing more than an intractable mixture, or “gunk”. The most well-known examples of the phenomenon can be summarized quickly: Although the Miller–Urey reaction produces an impressive set of amino acids and other biologically significant compounds, a large fraction of the starting material goes into a brown, tar-like residue that remains uncharacterized; i.e., gunk. While 15% of the carbon can be traced to specific organic molecules, the rest seems to be largely intractable.

Even if we focus only on the soluble products, we still have to deal with an extremely complex mixture of compounds. The carbonaceous chondrites, which represent an alternative source of starting material for prebiotic chemistry on Earth, and must have added enormous quantities of organic material to the Earth at the end of the Late Heavy Bombardment (LHB), do not offer a solution to the problem just referred to. The organic material present in carbonaceous meteorites is a mixture of such complexity that much ingenuity has gone into the design of suitable extraction methods, to isolate the most important classes of soluble (or solubilized) components for analysis.

Whatever the exact nature of an RNA precursor which may have become the first self-replicating molecule, how could the chemical homogeneity which seems necessary to permit this kind of mechanism to even come into existence have been achieved? What mechanism would have selected for the incorporation of only threose, or ribose, or any particular building block, into short oligomers which might later have undergone chemically selective oligomerization? Virtually all model prebiotic syntheses produce mixtures. 6

Life: What A Concept! https://jsomers.net/life.pdf

Craig Venter: To me the key thing about Darwinian evolution is selection. Biology is a hundred percent dependent on selection. No matter what we do in synthetic biology, synthetic genomes, we're doing selection. It's just not natural selection anymore. It's an intelligently designed selection, so it's a unique subset. But selection is always part of it.

My comment:

What natural mechanisms lack is goal-directedness. And that's a big problem for naturalistic explanations of the origin of life. There was a potentially unlimited variety of molecules on the prebiotic earth. Why should competition and selection among them have occurred at all, to separate the molecules that are used in life from those that are useless? Selection is a mechanism too limited in scope and power to explain all of the living order: not even the ability to maintain order in the short term, let alone the emergence, overall organization, and long-term persistence of life from non-living precursors. It is an error of false conceptual reduction to suppose that competition and selection can be the source of explanation for all relevant forms of order.

The lack of a selection mechanism also extends to the homochirality problem.

A. G. Cairns-Smith, Seven Clues to the Origin of Life, page 40:

It is one of the most singular features of the unity of biochemistry that this mere convention is universal. Where did such agreement come from? You see non-biological processes do not as a rule show any bias one way or the other, and it has proved particularly difficult to see any realistic way in which any of the constituents of a 'prebiotic soup' would have had predominantly 'left-handed' or 'right-handed' molecules. It is thus particularly difficult to see this feature as having been imposed by initial conditions.

With regard to the prebiotic synthesis of the basic building blocks of life, I list 23 problems directly related to the lack of a selection mechanism on the prebiotic earth. This is one of the unsolvable problems of abiogenesis.

Selecting the right materials is absolutely essential. But a prebiotic soup of mixtures of impure chemicals would never purify and select those that are required for life. Chemicals and physical reactions have no "urge" to join, group, and start interacting in a purposeful, goal-oriented way to produce molecules that would later perform specific functions and generate self-replicating factories full of machines, directed by specified, complex assembly information. This is not an argument from ignorance, incredulity, or gaps in knowledge.

William Dembski: The problem is that nature has too many options and without design couldn’t sort through all those options. The problem is that natural mechanisms are too unspecific to determine any particular outcome. Natural processes could theoretically form a protein, but they are also compatible with the formation of a plethora of other molecular assemblages, most of which have no biological significance. Nature allows them full freedom of arrangement. Yet it’s precisely that freedom that makes nature unable to account for specified outcomes of small probability. Nature, in this case, rather than being intent on doing only one thing, is open to doing any number of things. Yet when one of those things is a highly improbable specified event, design becomes the more compelling, better inference. Occam's razor also boils down to an argument from ignorance: in the absence of better information, you use a heuristic to accept one hypothesis over the other.

http://www.discovery.org/a/1256

Out of the 27 listed problems of prebiotic RNA synthesis, 8 are directly related to the lack of a mechanism to select the right ingredients.

1. How would prebiotic processes have purified the grossly impure starting molecules needed to make RNA and DNA? They would have been present in complex mixtures that contained a great variety of reactive molecules.

2. How did fortuitous accidents select the five just-right nucleobases to make DNA and RNA: two purines and three pyrimidines?

3. How did unguided random events select purines, with two fused rings made of nine atoms (5 carbon atoms and 4 nitrogen atoms), amongst almost unlimited possible configurations?

4. How did stochastic coincidence select pyrimidines, with one six-atom ring (4 carbon atoms and 2 nitrogen atoms), amongst an unfathomable number of possible configurations?

5. How would these functional bases have been separated from the confusing jumble of similar molecules that would also have been made?

6. How could ribose, the five-carbon sugar of the RNA backbone (deoxyribose in DNA), have been selected, if sugars with six or four carbons, or even more or fewer, are equally possible but non-functional?

7. How were the correct nitrogen atom of the base and the correct carbon atom of the sugar selected to be joined together?

8. How could the right-handed configurations of RNA and DNA have been selected from a racemic pool of right- and left-handed molecules? Ribose must have been in its D form to adopt functional structures (the homochirality problem).

Out of the 27 listed problems of prebiotic amino acid synthesis, 13 are directly related to the lack of a mechanism to select the right ingredients.

1. How did unguided stochastic coincidence select the right amino acids from among the more than 500 that occur naturally on earth?

2. How were bifunctional monomers (molecules with two functional groups, so that each can combine with two others) selected, and unifunctional monomers (with only one functional group) sorted out?

3. How could achiral precursors of amino acids have produced, selected, and concentrated only left-handed amino acids? (The homochirality problem)

4. How did the transition from prebiotic enantiomer selection to the enzymatic reaction of transamination occur, which had to be in place when cellular self-replication and life began?

5. How would natural causes have selected twenty amino acids, no more and no fewer, to make proteins?

6. How did natural events have foreknowledge that the selected amino acids are best suited to enable the formation of soluble structures with close-packed cores, allowing the presence of ordered binding pockets inside proteins?

7. How did nature "know" that the set of amino acids selected appears to be near ideal and optimal?

Out of the 12 listed problems of prebiotic cell membrane synthesis, 2 are directly related to the lack of a mechanism to select the right ingredients.

1. How did prebiotic processes select hydrocarbon chains, which must be in the range of 14 to 18 carbons in length? There was no physical necessity to form carbon chains of the right length, nor any hindrance to joining chains of varying lengths, so chains of any size could have existed on the early earth.

2. How would random events start to produce biological membranes, which are not composed of pure phospholipids but are instead mixtures of several phospholipid species, often with a sterol admixture such as cholesterol? There is no feasible prebiotic mechanism to select and join the right mixtures.

Claim: Even if we take your unknowns as true unknowns or even unknowable, the answer is always going to be “We don’t know yet.”

Reply: Science HATES confessing "we don't know". Science is about knowing, gaining knowledge, and understanding. The scientist's mind is all about gaining knowledge and diminishing ignorance. Confessing not to know, when there is good reason for it, is OK. But claiming not to know, despite evident facts close at hand and the ability to reach informed, well-founded conclusions through sound reasoning, known facts, and evidence, is not only willful ignorance but plain foolishness, especially when the issues under discussion relate to origins and worldviews, and eternal destiny is at stake. If there were hundreds of possible explanations, then claiming not to know which makes the most sense could be justified. In the quest for origins and God, there are just two possible explanations: either there is a God, or there is not. That's it. There is, however, a wealth of evidence in the natural world that can lead us to informed, well-justified conclusions. We know, for example, that nature has no "urge" to select things and to complexify; its natural course is to follow the laws of thermodynamics, and molecules disintegrate. That is their normal course of action: to become less complex. Systems, given energy and left to themselves, DEVOLVE to give uselessly complex mixtures, “asphalts”. The literature reports (to our knowledge) exactly ZERO CONFIRMED OBSERVATIONS where evolution emerged spontaneously from a devolving chemical system. It is IMPOSSIBLE for any non-living chemical system to escape devolution to enter into the Darwinian world of the “living”. Such statements of impossibility apply even to macromolecules. Both monomers and polymers can undergo a variety of decomposition reactions that must be taken into account, because biologically relevant molecules would undergo similar decomposition processes in the prebiotic environment.

A. G. Cairns-Smith, Genetic Takeover, page 70:

Suppose that by chance some particular coacervate droplet in a primordial ocean happened to have a set of catalysts, etc. that could convert carbon dioxide into D-glucose. Would this have been a major step forward towards life? Probably not. Sooner or later the droplet would have sunk to the bottom of the ocean and never have been heard of again. It would not have mattered how ingenious or life-like some early system was; if it lacked the ability to pass on to offspring the secret of its success then it might as well never have existed. So I do not see life as emerging as a matter of course from the general evolution of the cosmos, via chemical evolution, in one grand gradual process of complexification. Instead, following Muller (1929) and others, I would take a genetic view and see the origin of life as hinging on a rather precise technical puzzle. What would have been the easiest way that hereditary machinery could have formed on the primitive Earth?

Claim: That’s called the Sherlock fallacy. It's a false dichotomy.

Reply: No. Life is either due to chance, or design. There are no other options.

Eugene Koonin, Senior Investigator at the National Center for Biotechnology Information and a recognized expert in evolutionary and computational biology, is one of the few biologists honest enough to recognize that abiogenesis research has failed. He wrote in his book The Logic of Chance, page 351:

"Despite many interesting results to its credit, when judged by the straightforward criterion of reaching (or even approaching) the ultimate goal, the origin of life field is a failure—we still do not have even a plausible coherent model, let alone a validated scenario, for the emergence of life on Earth. Certainly, this is due not to a lack of experimental and theoretical effort, but to the extraordinary intrinsic difficulty and complexity of the problem. A succession of exceedingly unlikely steps is essential for the origin of life, from the synthesis and accumulation of nucleotides to the origin of translation; through the multiplication of probabilities, these make the final outcome seem almost like a miracle."

Eliminative inductions argue for the truth of a proposition by demonstrating that competitors to that proposition are false. Either the origin of the basic building blocks of life and of self-replicating cells is the result of a creative act by an intelligent designer, or it is the result of unguided random chemical reactions on the early earth. Science, rather than coming closer to demonstrating how life could have started, has not advanced and is further away from generating living cells starting with small molecules. Therefore, most likely, cells were created by an intelligent designer.

I have listed 27 open questions with regard to the origin of RNA and DNA on the early earth, 27 unsolved problems with regard to the origin of amino acids on the early earth, 12 with regard to phospholipid synthesis, and also unsolved problems with regard to carbohydrate production. The open problems are in reality far more numerous; this is just a small list. It is not just an issue of things that abiogenesis research has not yet figured out, but of deep conceptual problems, such as the fact that there were no natural selection mechanisms in place on the early earth.

https://reasonandscience.catsboard.com/t1279p75-abiogenesis-is-mathematically-impossible#7759

1. https://onlinelibrary.wiley.com/doi/abs/10.1002/cbdv.200790167

2. https://sci-hub.ren/10.1007/978-1-4939-1468-5_27

3. https://arxiv.org/ftp/arxiv/papers/1511/1511.00698.pdf

4. https://link.springer.com/book/10.1007%2Fb136268

5. https://www.mdpi.com/2073-8994/12/12/2046

6. Andrew H. Knoll: FUNDAMENTALS OF GEOBIOLOGY 2012

https://reasonandscience.catsboard.com/t3121-there-was-no-prebiotic-selection-to-get-life-originating

The four basic building blocks of life are DNA, RNA, proteins, and lipids. These molecules are essential for the function and replication of living cells, and their origin is a key question in the study of abiogenesis.

One challenge for naturalistic explanations of the origin of these molecules is the lack of a selection mechanism. In biological systems, natural selection acts on organisms with heritable traits, favoring those that are better adapted to their environment and promoting the propagation of their genes. However, in the early stages of life on Earth, there were no organisms with heritable traits to select for or against.

This presents a problem for explaining how the four basic building blocks of life could have arisen through purely naturalistic processes. Without a selection mechanism to favor certain molecules over others, it is difficult to see how the complex, information-rich molecules that we see in modern cells could have emerged from the chemical soup of early Earth.

Some researchers have proposed that other factors, such as self-organization or autocatalysis, may have played a role in the origin of life. However, these ideas are still largely speculative and face their own challenges and limitations.

Synonym for selecting is: choosing, picking, handpicking, sorting out, discriminating, choosing something from among others, and giving preference to something over another.

Andrew H. Knoll: FUNDAMENTALS OF GEOBIOLOGY 2012

The emergence of natural selection

Molecular selection, the process by which a few key molecules earned key roles in life’s origins, proceeded on many fronts. (Comment: observe the unwarranted claim) Some molecules were inherently unstable or highly reactive and so they quickly disappeared from the scene. Other molecules easily dissolved in the oceans and so were effectively removed from contention. Still, other molecular species may have sequestered themselves by bonding strongly to surfaces of chemically unhelpful minerals or clumped together into tarry masses of little use to emerging biology. In every geochemical environment, each kind of organic molecule had its dependable sources and its inevitable sinks. For a time, perhaps for hundreds of millions of years, a kind of molecular equilibrium was maintained as the new supply of each species was balanced by its loss. Such equilibrium features nonstop competition among molecules, to be sure, but the system does not evolve. 6

Comment: That is the key sentence. There would not have been a complexification to higher order into machine-like structures, but these molecules would either quickly disappear, dissolve in the ocean, or clumped together into tarry masses of little use to emerging biology. The system does not evolve. In other words, chemical selection would never take place.

We know that we, as intelligent beings, do make choices to get the desired outcome all the time - and there is no alternative to conscious intelligent action. Therefore, it is logical and plausible, and probable, that an intelligent creator was in action, choosing the parameters of the laws of physics, the right equations, the right adjustments in the universe, the right building blocks of life, the right machinery to have given life a first go. And he was remarkably good at that.

1. Life requires the use of a limited set of complex biomolecules, a universal convention, and unity which is composed of the four basic building blocks of life ( RNA and DNA's, amino acids, phospholipids, and carbohydrates). They are of a very specific complex functional composition and made by cells in extremely sophisticated orchestrated metabolic pathways, which were not extant on the early earth. If abiogenesis were true, these biomolecules had to be prebiotically available and naturally occurring ( in non-enzyme-catalyzed ways by natural means ) and then somehow join in an organized way and form the first living cells. They had to be available in big quantities and concentrated at one specific building site.

2. Making things for a specific purpose, for a distant goal, requires goal-directedness. And that's a big problem for naturalistic explanations of the origin of life. There was a potentially unlimited variety of molecules on the prebiotic earth. Competition and selection among them would never have occurred at all, to promote a separation of those molecules that are used in life, from those that are useless. Selection is a scope and powerless mechanism to explain all of the living order, and even the ability to maintain order in the short term and to explain the emergence, overall organization, and long-term persistence of life from non-living precursors. It is an error of false conceptual reduction to suppose that competition and selection will thereby be the source of explanation for all relevant forms of the living order.

3. We know that a) unguided random purposeless events are unlikely to the extreme to make specific purposeful elementary components to build large integrated macromolecular systems, and b) intelligence has goal-directedness. Bricks do not form from clay by themselves, and then line up to make walls. Someone made them. Phospholipids do not form from glycerol, a phosphate group, and two fatty acid chains by themselves, and line up to make cell membranes. Someone made them. That is God.

If a machine has to be made out of certain components, then the components have to be made first.'

Molecules have nothing to gain by becoming the building blocks of life. They are "happy" to lay on the ground or float in the prebiotic ocean and that's it. Being incredulous that they would concentrate at one building site in the right mixture, and in the right complex form, that would permit them to complexify in an orderly manner and assembly into complex highly efficient molecular machines and self-replicating cell factories, is not only justified but warranted and sound reasoning. That fact alone destroys materialism & naturalism. Being credulous towards such a scenario means to stick to blind belief. And claiming that "we don't know (yet), but science is working on it, but the expectation is that the explanation will be a naturalistic one ( No God required) is a materialism of the gaps argument.

A Few Experimental Suggestions Using Minerals to Obtain Peptides with a High Concentration of L-Amino Acids and Protein Amino Acids 10 December 2020

The prebiotic seas contained L- and D-amino acids, and non-Polar AAs and Polar AAs, and minerals could adsorb all these molecules. Besides amino acids, other molecules could be found in the primitive seas that competed for mineral adsorption sites. Here, we have a huge problem that could be a double-edged sword for prebiotic chemistry. On the one hand, this may lead to more complex prebiotic chemistry, due to the large variety of species, which could mean more possibilities for the formation of different and more complex molecules. On the other hand, this complex mixture of molecules may not lead to the formation of any important molecule or biopolymer in high concentration to be used for molecular evolution. Schwartz, in his article “Intractable mixtures and the origin of life”, has already addressed this problem, denominating this mixture the “gunk”. 5

Intractable Mixtures and the Origin of Life 2007

A problem which is familiar to organic chemists is the production of unwanted byproducts in synthetic reactions. For prebiotic chemistry, where the goal is often the simulation of conditions on the prebiotic Earth and the modeling of a spontaneous reaction, it is not surprising – but nevertheless frustrating – that the unwanted products may consume most of the starting material and lead to nothing more than an intractable mixture, or -gunk.. The most well-known examples of the phenomenon can be summarized quickly: Although the Miller –Urey reaction produces an impressive set of amino acids and other biologically significant compounds, a large fraction of the starting material goes into a brown, tar-like residue that remains uncharacterized; i.e., gunk. While 15% of the carbon can be traced to specific organic molecules, the rest seems to be largely intractable

Even if we focus only on the soluble products, we still have to deal with an extremely complex mixture of compounds. The carbonaceous chondrites, which represent an alternative source of starting material for prebiotic chemistry on Earth, and must have added enormous quantities of organic material to the Earth at the end of the Late Heavy Bombardment (LHB), do not offer a solution to the problem just referred to. The organic material present in carbonaceous meteorites is a mixture of such complexity that much ingenuity has gone into the design of suitable extraction methods, to isolate the most important classes of soluble (or solubilized) components for analysis.

Whatever the exact nature of an RNA precursor which may have become the first self-replicating molecule, how could the chemical homogeneity which seems necessary to permit this kind of mechanism to even come into existence have been achieved? What mechanism would have selected for the incorporation of only threose, or ribose, or any particular building block, into short oligomers which might later have undergone chemically selective oligomerization? Virtually all model prebiotic syntheses produce mixtures. 6

Life: What A Concept! https://jsomers.net/life.pdf

Craig Venter: To me the key thing about Darwinian evolution is selection. Biology is a hundred percent dependent on selection. No matter what we do in synthetic biology, synthetic genomes, we're doing selection. It's just not natural selection anymore. It's an intelligently designed selection, so it's a unique subset. But selection is always part of it.

My comment:

What natural mechanisms lack is goal-directedness, and that is a big problem for naturalistic explanations of the origin of life. There was a potentially unlimited variety of molecules on the prebiotic Earth. Why should competition and selection among them have occurred at all, separating the molecules that are used in life from those that are useless? Selection is a mechanism too limited in scope, and too powerless, to explain all of the living order: it cannot explain even the maintenance of order in the short term, let alone the emergence, overall organization, and long-term persistence of life from non-living precursors. It is an error of false conceptual reduction to suppose that competition and selection can be the source of explanation for all relevant forms of order.

The lack of a selection mechanism also extends to the homochirality problem.

A. G. CAIRNS-SMITH Seven clues to the origin of life, page 40:

It is one of the most singular features of the unity of biochemistry that this mere convention is universal. Where did such agreement come from? You see non-biological processes do not as a rule show any bias one way or the other, and it has proved particularly difficult to see any realistic way in which any of the constituents of a 'prebiotic soup' would have had predominantly 'left-handed' or 'right-handed' molecules. It is thus particularly difficult to see this feature as having been imposed by initial conditions.
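To make "no bias one way or the other" concrete, here is a minimal Python sketch under an assumed toy model (not taken from Cairns-Smith): each chiral molecule formed from an achiral precursor is independently L or D with probability 0.5. The helper names `racemic_sample` and `enantiomeric_excess` are hypothetical; the excess is the standard enantiomeric excess, ee = |L - D| / (L + D), which is 0 for a racemic mixture and 1 for a homochiral one.

```python
import random

def racemic_sample(n, seed=0):
    """Toy model (assumption): each of n chiral molecules formed from an
    achiral precursor is independently L or D with probability 0.5."""
    rng = random.Random(seed)
    left = sum(1 for _ in range(n) if rng.random() < 0.5)
    return left, n - left  # (count of L, count of D)

def enantiomeric_excess(left, right):
    """Standard enantiomeric excess: |L - D| / (L + D).
    0.0 means racemic, 1.0 means homochiral."""
    return abs(left - right) / (left + right)

if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        l, d = racemic_sample(n)
        print(f"n={n:>9}  L={l:>7}  D={d:>7}  ee={enantiomeric_excess(l, d):.4f}")
```

With no bias in the model, ee stays near zero and its typical size shrinks roughly as 1/sqrt(n) as the sample grows; reaching the ee of 1 seen in biology would need some additional mechanism that this toy model does not include.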

Regarding the prebiotic synthesis of the basic building blocks of life, I list 23 problems directly related to the lack of a selection mechanism on the prebiotic Earth. This is one of the unsolvable problems of abiogenesis.

Selecting the right materials is absolutely essential. But a prebiotic soup of impure chemical mixtures would never purify and select those that are required for life. Chemicals and physical reactions have no "urge" to join, group, and start interacting in a purposeful, goal-oriented way to produce molecules that would later perform specific functions and generate self-replicating factories, full of machines, directed by specified, complex assembly information. This is not an argument from ignorance, incredulity, or gaps in knowledge.

William Dembski: The problem is that nature has too many options and without design couldn’t sort through all those options. The problem is that natural mechanisms are too unspecific to determine any particular outcome. Natural processes could theoretically form a protein, but they are also compatible with the formation of a plethora of other molecular assemblages, most of which have no biological significance. Nature allows them full freedom of arrangement. Yet it’s precisely that freedom that makes nature unable to account for specified outcomes of small probability. Nature, in this case, rather than being intent on doing only one thing, is open to doing any number of things. Yet when one of those things is a highly improbable specified event, design becomes the more compelling, better inference. Occam's razor also boils down to an argument from ignorance: in the absence of better information, you use a heuristic to accept one hypothesis over the other.

http://www.discovery.org/a/1256

Out of the 27 listed problems of prebiotic RNA synthesis, 8 are directly related to the lack of a mechanism to select the right ingredients.

1. How would prebiotic processes have purified the grossly impure starting molecules needed to make RNA and DNA? They would have been present in complex mixtures that contained a great variety of reactive molecules.

2. How did fortuitous accidents select the five just-right nucleobases to make DNA and RNA: two purines and three pyrimidines?

3. How did unguided random events select purines, whose two fused rings are formed by nine atoms (5 carbon and 4 nitrogen), from among almost unlimited possible configurations?

4. How did stochastic coincidence select pyrimidines, whose single ring is formed by six atoms (4 carbon and 2 nitrogen), from among an unfathomable number of possible configurations?

5. How would these functional bases have been separated from the confusing jumble of similar molecules that would also have been made?

6. How could ribose, the five-carbon sugar that forms the backbone of RNA (and, as deoxyribose, of DNA), have been selected, if sugars with four or six carbons, or even more or fewer, are equally possible but non-functional?

7. How were the correct nitrogen atom of the base and the correct carbon atom of the sugar selected to be joined together?

8. How could right-handed configurations of RNA and DNA have been selected in a racemic pool of right- and left-handed molecules? Ribose must have been in its D form to adopt functional structures (the homochirality problem).

Out of the 27 listed problems of prebiotic amino acid synthesis, the following are directly related to the lack of a mechanism to select the right ingredients.

1. How did unguided stochastic coincidence select the right amino acids from among the more than 500 that occur naturally on Earth?

2. How were bifunctional monomers (molecules with two functional groups, so that each can combine with two others) selected, and unifunctional monomers (with only one functional group) sorted out?

3. How could achiral precursors of amino acids have produced, selected, and concentrated only left-handed amino acids? (The homochirality problem)

4. How did the transition occur from prebiotic enantiomer selection to enzymatic transamination, which had to be in place when cellular self-replication and life began?

5. How would natural causes have selected twenty amino acids, and not more or fewer, to make proteins?

6. How did natural events have foreknowledge that the selected amino acids are best suited to enable the formation of soluble structures with close-packed cores, allowing the presence of ordered binding pockets inside proteins?

7. How did nature "know" that the set of amino acids selected appears to be near ideal and optimal?

Out of the 12 listed problems of prebiotic cell membrane synthesis, 2 are directly related to the lack of a mechanism to select the right ingredients.

1. How did prebiotic processes select hydrocarbon chains, which must be in the range of 14 to 18 carbons in length? There was no physical necessity to form carbon chains of the right length, nor any hindrance to joining chains of varying lengths, so chains of any size could have existed on the early Earth.

2. How would random events start to produce biological membranes which are not composed of pure phospholipids, but instead are mixtures of several phospholipid species, often with a sterol admixture such as cholesterol? There is no feasible prebiotic mechanism to select/join the right mixtures.

Claim: Even if we take your unknowns as true unknowns or even unknowable, the answer is always going to be “We don’t know yet.”

Reply: Science HATES confessing "we don't know". Science is about gaining knowledge and understanding; the scientist's mind is all about acquiring knowledge and diminishing ignorance. Confessing ignorance, when there is good reason for it, is fine. But claiming not to know, despite the evident facts at hand and the ability to reach informed, well-founded conclusions through sound reasoning, known facts, and evidence, is not only willful ignorance but plain foolishness, especially when the issues under discussion concern origins and worldviews, and eternal destiny is at stake. If there were hundreds of possible explanations, claiming not to know which makes the most sense could be justified. In the quest for origins and God, there are just two possible explanations: either there is a God, or there is not. That's it. There is, however, a wealth of evidence in the natural world that can lead us to informed, well-justified conclusions.

We know, for example, that nature has no "urge" to select things and to complexify; its natural course follows the laws of thermodynamics, and molecules disintegrate. Their normal course of action is to become less complex. Systems, given energy and left to themselves, DEVOLVE to give uselessly complex mixtures, “asphalts”. The literature reports (to our knowledge) exactly ZERO CONFIRMED OBSERVATIONS where evolution emerged spontaneously from a devolving chemical system. It is IMPOSSIBLE for any non-living chemical system to escape devolution to enter into the Darwinian world of the “living”. Such statements of impossibility apply even to macromolecules. Both monomers and polymers can undergo a variety of decomposition reactions that must be taken into account, because biologically relevant molecules would undergo similar decomposition processes in the prebiotic environment.

CAIRNS-SMITH genetic takeover, page 70

Suppose that by chance some particular coacervate droplet in a primordial ocean happened to have a set of catalysts, etc. that could convert carbon dioxide into D-glucose. Would this have been a major step forward towards life? Probably not. Sooner or later the droplet would have sunk to the bottom of the ocean and never have been heard of again. It would not have mattered how ingenious or life-like some early system was; if it lacked the ability to pass on to offspring the secret of its success then it might as well never have existed. So I do not see life as emerging as a matter of course from the general evolution of the cosmos, via chemical evolution, in one grand gradual process of complexification. Instead, following Muller (1929) and others, I would take a genetic view and see the origin of life as hinging on a rather precise technical puzzle. What would have been the easiest way that hereditary machinery could have formed on the primitive Earth?

Claim: That’s called the Sherlock fallacy. It's a false dichotomy.

Reply: No. Life is either due to chance, or design. There are no other options.

Eugene Koonin, Senior Investigator at the National Center for Biotechnology Information and a recognized expert in the field of evolutionary and computational biology, is one of the few biologists honest enough to recognize that abiogenesis research has failed. He wrote in his book The Logic of Chance, page 351:

"Despite many interesting results to its credit, when judged by the straightforward criterion of reaching (or even approaching) the ultimate goal, the origin of life field is a failure—we still do not have even a plausible coherent model, let alone a validated scenario, for the emergence of life on Earth. Certainly, this is due not to a lack of experimental and theoretical effort, but to the extraordinary intrinsic difficulty and complexity of the problem. A succession of exceedingly unlikely steps is essential for the origin of life, from the synthesis and accumulation of nucleotides to the origin of translation; through the multiplication of probabilities, these make the final outcome seem almost like a miracle."

Eliminative inductions argue for the truth of a proposition by demonstrating that competitors to that proposition are false. Either the origin of the basic building blocks of life and of self-replicating cells is the result of a creative act by an intelligent designer, or it is the result of unguided random chemical reactions on the early Earth. Science, rather than coming closer to demonstrating how life could have started, has not advanced, and is further away from generating living cells starting with small molecules. Therefore, most likely, cells were created by an intelligent designer.

I have listed 27 open questions in regard to the origin of RNA and DNA on the early earth, 27 unsolved problems in regard to the origin of amino acids on the early earth, 12 in regard to phospholipid synthesis, and also unsolved problems in regard to carbohydrate production. The open problems are in reality far greater. This is just a small list. It is not just an issue of things that have not yet been figured out by abiogenesis research, but deep conceptual problems, like the fact that there were no natural selection mechanisms in place on the early earth.

https://reasonandscience.catsboard.com/t1279p75-abiogenesis-is-mathematically-impossible#7759

1. https://onlinelibrary.wiley.com/doi/abs/10.1002/cbdv.200790167

2. https://sci-hub.ren/10.1007/978-1-4939-1468-5_27

3. https://arxiv.org/ftp/arxiv/papers/1511/1511.00698.pdf

4. https://link.springer.com/book/10.1007%2Fb136268

5. https://www.mdpi.com/2073-8994/12/12/2046

6. Andrew H. Knoll: FUNDAMENTALS OF GEOBIOLOGY 2012

Last edited by Otangelo on Sat Dec 30, 2023 2:12 pm; edited 7 times in total