Fine-tuning of the cosmological constant

https://reasonandscience.catsboard.com/t1885-fine-tuning-of-the-cosmological-constant

Geoff Brumfiel Outrageous fortune 04 January 2006

In much the same way as Kepler worried about planetary orbits, cosmologists now puzzle over numbers such as the cosmological constant, which describes how quickly the Universe expands. The observed value is so much smaller than existing theories suggest, and yet so precisely constrained by observations, that theorists are left trying to figure out a deeper meaning for why the cosmological constant has the value it does. To explain the perfectly adjusted cosmological constant one would need at least 10^60 universes.

https://www.nature.com/articles/439010a

The Cosmological constant (which controls the expansion speed of the universe) refers to the balance of the attractive force of gravity with a hypothesized repulsive force of space observable only at very large size scales. It must be very close to zero, that is, these two forces must be nearly perfectly balanced. To get the right balance, the cosmological constant must be fine-tuned to something like 1 part in 10^120. If it were just slightly more positive, the universe would fly apart; slightly negative, and the universe would collapse. As with the cosmological constant, the ratios of the other constants must be fine-tuned relative to each other. Since the logically possible range of strengths of some forces is potentially infinite, to get a handle on the precision of fine-tuning, theorists often think in terms of the range of force strengths, with gravity the weakest, and the strong nuclear force the strongest. The strong nuclear force is 10^40 times stronger than gravity, that is, ten thousand, billion, billion, billion, billion times the strength of gravity. Think of that range as represented by a ruler stretching across the entire observable universe, about 15 billion light-years. If we increased the strength of gravity by just 1 part in 10^34 of the range of force strengths (the equivalent of moving less than one inch on the universe-long ruler), the universe couldn’t have life-sustaining planets.
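The ruler analogy above can be checked with a few lines of arithmetic. This is only a rough sketch: the conversion constants (metres per light-year, metres per inch) and the function name `ruler_shift_inches` are assumptions introduced here for illustration, while the ruler length of 15 billion light-years and the fraction of 1 part in 10^34 are taken from the text.

```python
# Rough check of the "universe-long ruler" analogy.
# Assumed conversion constants (not given in the text):
METERS_PER_LIGHT_YEAR = 9.4607e15
METERS_PER_INCH = 0.0254


def ruler_shift_inches(ruler_light_years: float, fraction: float) -> float:
    """Return the length, in inches, of `fraction` of a ruler that is
    `ruler_light_years` long."""
    ruler_inches = ruler_light_years * METERS_PER_LIGHT_YEAR / METERS_PER_INCH
    return ruler_inches * fraction


# Values from the text: a 15-billion-light-year ruler, 1 part in 10^34.
shift = ruler_shift_inches(15e9, 1e-34)
print(f"{shift:.1e} inches")
```

Under these assumptions the shift comes out far below one inch, which is consistent with the "less than one inch" wording of the analogy.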

Neil A. Manson The Fine-Tuning Argument 4/1 (2009)

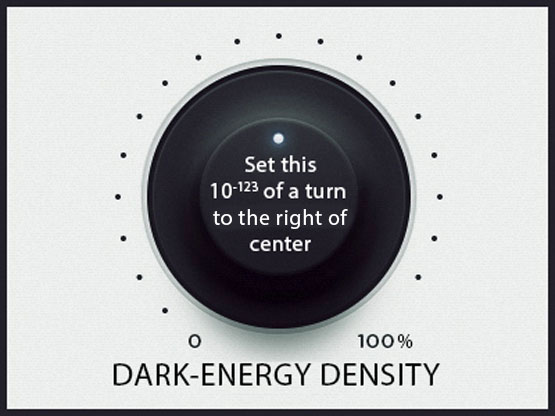

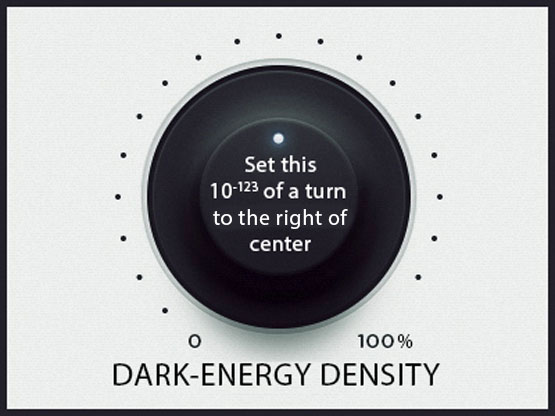

The universe would not have been the sort of place in which life could emerge – not just the very form of life we observe here on Earth, but any conceivable form of life, if the mass of the proton, the mass of the neutron, the speed of light, or the Newtonian gravitational constant were different. In many cases, the cosmic parameters were like the just-right settings on an old-style radio dial: if the knob were turned just a bit, the clear signal would turn to static. As a result, some physicists started describing the values of the parameters as ‘fine-tuned’ for life. To give just one of many possible examples of fine-tuning, the cosmological constant (symbolized by the Greek letter ‘Λ’) is a crucial term in Einstein’s equations for the General Theory of Relativity. When Λ is positive, it acts as a repulsive force, causing space to expand. When Λ is negative, it acts as an attractive force, causing space to contract. If Λ were not precisely what it is, either space would expand at such an enormous rate that all matter in the universe would fly apart, or the universe would collapse back in on itself immediately after the Big Bang. Either way, life could not possibly emerge anywhere in the universe. Some calculations put the odds that Λ took just the right value at well below one chance in a trillion trillion trillion trillion. Similar calculations have been made showing that the odds of the universe’s having carbon-producing stars (carbon is essential to life), or of not being millions of degrees hotter than it is, or of not being shot through with deadly radiation, are likewise astronomically small. Given this extremely improbable fine-tuning, say proponents of the FTA, we should think it much more likely that God exists than we did before we learned about fine-tuning. After all, if we believe in God, we will have an explanation of fine-tuning, whereas if we say the universe is fine-tuned by chance, we must believe something incredibly improbable happened.

http://home.olemiss.edu/~namanson/Fine%20tuning%20argument.pdf

Stephen C. Meyer: The return of the God hypothesis, page 185

The cosmological constant requires an even greater degree of fine-tuning. (Remember that the cosmological constant is a constant in Einstein’s field equations. It represents the energy density of space that contributes to the outward expansion of space in opposition to gravitational attraction.) The most conservative estimate for that fine-tuning is 1 part in 10^53, but the number 1 part in 10^120 is more frequently cited. Physicists now commonly agree that the degree of fine-tuning for the cosmological constant is no less than 1 part in 10^90.

To get a sense of what this number means, imagine searching the vastness of the visible universe for one specially marked subatomic particle. Then consider that the visible universe contains about 200 billion galaxies each with about 100 billion stars along with a panoply of asteroids, planets, moons, comets, and interstellar dust associated with each of those stars. Now assume that you have the special power to move instantaneously anywhere in the universe to select—blindfolded and at random—any subatomic particle you wish. The probability of your finding a specially marked subatomic particle—1 chance in 10^80—is still 10 billion times better than the probability—1 part in 10^90—that the universe would have happened upon a life-permitting strength for the cosmological constant.
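The "10 billion times better" comparison is simply the ratio of the two quoted probabilities. A minimal sketch using exact rational arithmetic follows; the variable names are illustrative, and the two probabilities are the ones quoted above.

```python
from fractions import Fraction

# Probabilities quoted in the passage above.
p_marked_particle = Fraction(1, 10**80)       # picking the one marked particle
p_life_permitting = Fraction(1, 10**90)       # life-permitting cosmological constant

# How many times more likely the particle pick is than the fine-tuned constant.
ratio = p_marked_particle / p_life_permitting
print(ratio)  # 10000000000
```

The ratio is 10^10, that is, ten billion, matching the figure in the quotation.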

Sean Carroll: The Cosmological Constant 7 February 2001 8

We happen to live in that brief era, cosmologically speaking, when both matter and vacuum are of comparable magnitude. This scenario staggers under the burden of its unnaturalness. A major challenge to cosmologists and physicists in the years to come will be to understand whether these apparently distasteful aspects of our universe are simply surprising coincidences, or actually reflect a beautiful underlying structure we do not as yet comprehend.

My comment: Or the data may lead to the conclusion that God had to be involved in creating the universe and set the constants just right.

The most fine-tuned of these parameters seems to be the cosmological constant, a concept that Albert Einstein proposed to provide an outward-pushing pressure that he thought was needed to prevent gravity from causing the universe's matter to collapse onto itself. 7

On the largest scales, there’s also a cosmic tug-of-war between the attractive force of gravity and the repulsive dark or vacuum energy. Often called the cosmological constant, which is theorized to be the result of a nonzero vacuum energy detectable at cosmological scales, it’s one of the few cosmological parameters that determine the dynamics of the universe as a whole. By observing Type Ia supernovae, astronomers have determined that today it contributes about as much to the dynamics of the universe as the gravitational attractive force from visible and dark matter combined. This coincidence remains unexplained, but some cosmologists suspect it’s amenable to “anthropic explanation.” 6

There’s only one “special” time in the history of the universe when the vacuum and matter-energy densities are the same, and we’re living very near it. If the vacuum energy had become prominent a few billion years earlier than it did in our universe, there would have been no galaxies. If it had overtaken gravity a little earlier still, there would have been no individual stars. A few billion years might seem like a lot of room to manoeuvre, but there’s an even more striking level of fine-tuning here. The second “cosmological constant problem” is that the observed value of the vacuum energy is between 10^53 and 10^123 times smaller than that expected from theory. The vacuum energy density is, basically, the energy density of spacetime in the absence of fields resulting from matter. Until the Type Ia supernovae results demonstrated a few years ago that the cosmological constant is something other than zero, most cosmologists hoped that some undiscovered law of physics required it to be exactly zero. They already knew that its observational upper limit was much smaller than the “natural” values expected from various particle fields and other theoretical fields. These particle fields require an extraordinary degree of fine-tuning, at least to one part in 10^53, to get such a small, positive, nonzero value for the vacuum energy. At the same time, its value must be large enough in the early universe to cause the newborn universe to expand exponentially, as inflation theory postulates. How the present value of the vacuum energy relates to the early expansion is yet another issue of debate.
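The claim that there is only one epoch at which the vacuum and matter densities are equal can be illustrated with a short calculation. The sketch below assumes a flat universe in which matter density dilutes as the inverse cube of the scale factor while the vacuum density stays constant, and it assumes present-day density fractions of 0.3 (matter) and 0.7 (vacuum). Those two numbers, and all names in the code, are illustrative assumptions and do not come from the quoted text.

```python
# Assumed present-day density fractions (illustrative, not from the text).
OMEGA_MATTER = 0.3
OMEGA_VACUUM = 0.7


def equality_scale_factor(omega_m: float, omega_v: float) -> float:
    """Scale factor a (today a = 1) at which matter and vacuum densities match.

    Matter density scales as omega_m / a**3 and vacuum density stays at
    omega_v, so equality requires a**3 = omega_m / omega_v.
    """
    return (omega_m / omega_v) ** (1.0 / 3.0)


a_eq = equality_scale_factor(OMEGA_MATTER, OMEGA_VACUUM)
z_eq = 1.0 / a_eq - 1.0  # corresponding redshift
print(f"a_eq = {a_eq:.3f}, z_eq = {z_eq:.3f}")
```

Because the matter density falls monotonically while the vacuum density does not change, the two curves cross exactly once. With the assumed values, the crossing happens when the universe was about three quarters of its present linear size, which is recent in cosmological terms.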

Max Tegmark:

“How far could you rotate the dark-energy knob before the “Oops!” moment? If rotating it…by a full turn would vary the density across the full range, then the actual knob setting for our Universe is about 10^-123 of a turn away from the halfway point. That means that if you want to tune the knob to allow galaxies to form, you have to get the angle by which you rotate it right to 123 decimal places!”

That means that the probability that our universe contains galaxies is akin to exactly 1 possibility in 1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,

000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000 . Unlikely doesn’t even begin to describe these odds. There are “only” 10^81 atoms in the observable universe, after all.

http://www.secretsinplainsight.com/2015/09/19/cosmic-lottery/

The cosmological constant

The smallness of the cosmological constant is widely regarded as the single greatest problem confronting current physics and cosmology. The cosmological constant is a term in Einstein’s equation that, when positive, acts as a repulsive force, causing space to expand and, when negative, acts as an attractive force, causing space to contract. Apart from some sort of extraordinarily precise fine-tuning or new physical principle, today’s theories of fundamental physics and cosmology lead one to expect that the vacuum (that is, the state of space-time free of ordinary matter fields) has an extraordinarily large energy density. This energy density, in turn, acts as an effective cosmological constant, thus leading one to expect an extraordinarily large effective cosmological constant, one so large that it would, if positive, cause space to expand at such an enormous rate that almost every object in the Universe would fly apart, and would, if negative, cause the Universe to collapse almost instantaneously back in on itself. This would clearly make the evolution of intelligent life impossible. What makes it so difficult to avoid postulating some sort of highly precise fine-tuning of the cosmological constant is that almost every type of field in current physics (the electromagnetic field, the Higgs fields associated with the weak force, the inflaton field hypothesized by inflationary cosmology, the dilaton field hypothesized by superstring theory, and the fields associated with elementary particles such as electrons) contributes to the vacuum energy. Although no one knows how to calculate the energy density of the vacuum, when physicists make estimates of the contribution to the vacuum energy from these fields, they get values of the energy density anywhere from 10^53 to 10^120 times higher than its maximum life-permitting value, Λmax. (Here, Λmax is expressed in terms of the energy density of empty space.)

God and Design: The Teleological Argument and Modern Science, page 180

Max Tegmark:

“How far could you rotate the dark-energy knob before the “Oops!” moment? The current setting of the knob, corresponding to the dark-energy density we’ve actually measured, is about 10^-27 kilograms per cubic meter, which is almost ridiculously close to zero compared to the available range: the natural maximum value for the dial is a dark-energy density around 10^97 kilograms per cubic meter, which is when the quantum fluctuations fill space with tiny black holes, and the minimum value is the same with a minus sign in front. If rotating the dark-energy knob…by a full turn would vary the density across the full range, then the actual knob setting for our Universe is about 10^-123 of a turn away from the halfway point. That means that if you want to tune the knob to allow galaxies to form, you have to get the angle by which you rotate it right to 123 decimal places!”

That means that the probability that our universe contains galaxies is akin to exactly 1 possibility in 1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,

000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000 . Unlikely doesn’t even begin to describe these odds. There are “only” 10^81 atoms in the observable universe, after all. 4

The Balance of the Bang: In order for life to be possible in the universe, the explosive power of the Big Bang needed to be extremely closely matched to the amount of mass and balanced with the force of gravity, so that the expansion speed is very precise. 1 This very exact expansion speed of the universe is called the "Cosmological Constant." If the force of the bang was slightly too weak, the expanding matter would have collapsed back in on itself before any planets suitable for life (or stars) had a chance to form, but if the bang was slightly too strong, the resultant matter would have been only hydrogen gas that was so diffuse and expanding so fast that no stars or planets could have formed at all.

Science writer Gregg Easterbrook explains the required explosive power-balance of the Big Bang, saying that, "Researchers have calculated that, if the ratio of matter and energy to the volume of space ...had not been within about one-quadrillionth of one percent of ideal at the moment of the Big Bang, the incipient universe would have collapsed back on itself or suffered runaway relativity effects" (My emphasis.) (ref. G.Easterbrook, "Science Sees the Light", The New Republic, Oct.12, 1998, p.26).

In terms of the expansion rate of the universe as a result of the Big Bang: "What's even more amazing is how delicately balanced that expansion rate must be for life to exist. It cannot differ by more than one part in 10^55 from the actual rate." (My emphasis.) (Ref: H.Ross, 1995, as cited above, p.116). (Note: 10^55 is the number 1 with 55 zeros after it, which is far more than the roughly 10^50 atoms that make up planet Earth).

THE PROBABILITY: The chances we can conservatively assign to this: It was about one chance out of 10^21 that the force of the Big Bang could have randomly been properly balanced with the mass and gravity of the universe, in order for stars and planets to form, so that life could exist here in our cosmos.

Leonard Susskind The Cosmic Landscape:

"To make the first 119 decimal places of the vacuum energy zero is most certainly no accident." (The vacuum energy relates to the cosmological constant.) 2

“Logically, it is possible that the laws of physics conspire to create an almost but not quite perfect cancellation [of the energy involved in the quantum fluctuations]. But then it would be an extraordinary coincidence that that level of cancellation (119 powers of ten, after all) just happened by chance to be what is needed to bring about a universe fit for life. How much chance can we buy in scientific explanation? One measure of what is involved can be given in terms of coin flipping: odds of 10^120 to one is like getting heads no fewer than four hundred times in a row. If the existence of life in the universe is completely independent of the big fix mechanism, if it’s just a coincidence, then those are the odds against our being here. That level of flukiness seems too much to swallow.”
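The coin-flipping comparison can be reproduced directly: n fair flips all landing heads has odds of 2^n to one, so the number of flips matching odds of 10^120 to one is the base-2 logarithm of 10^120. The sketch below is a simple check, and the variable names are illustrative.

```python
import math

# Odds quoted in the passage: 10^120 to one.
EXPONENT = 120

# Number of consecutive heads whose odds (2^n to one) match 10^120 to one.
flips = EXPONENT * math.log2(10)
print(round(flips, 1))  # 398.6
```

The result is about 399 flips, in line with the quoted figure of roughly four hundred.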

The Case of Negative λ

So far I have told you about the repulsive effects that accompany positive vacuum energy. But suppose that the contribution of fermions outweighed that of bosons: then the net vacuum energy would be a negative number. Is this possible? If so, how does it affect Weinberg’s arguments? The answer to the first question is yes, it can happen very easily. All you need is a few more fermion-type particles than bosons and the cosmological constant can be made negative. The second question has an equally simple answer: changing the sign of λ switches the repulsive effects of a cosmological constant to a universal attraction, not the usual gravitational attractive force but a force that increases with distance. To argue convincingly that a large cosmological constant would automatically render the universe uninhabitable, we need to show that life could not form if the cosmological constant were large and negative. What would the universe be like if the laws of nature were unaltered except for a negative cosmological constant? The answer is even easier than the case of positive λ. The additional attractive force would eventually overwhelm the outward motion of the Hubble expansion: the universe would reverse its motion and start to collapse like a punctured balloon. Galaxies, stars, planets, and all life would be crushed in an ultimate “big crunch.” If the negative cosmological constant were too large, the crunch would not allow the billions of years necessary for life like ours to evolve. Thus, there is an anthropic bound on negative λ to match Weinberg’s positive bound. In fact, the numbers are fairly similar. If the cosmological constant is negative, it must also not be much bigger than 10^-120 units if life is to have any possibility of evolving. Nothing we have said precludes there being pocket universes far from our own with either a large positive or large negative cosmological constant. But they are not places where life is possible.
In the ones with large positive λ, everything flies apart so quickly that there is no chance for matter to assemble itself into structures like galaxies, stars, planets, atoms, or even nuclei. In the pockets with large negative λ, the expanding universe quickly turns around and crushes any hope of life.

https://3lib.net/book/2472017/1d5be1

Cover America with coins in a column reaching to the moon (380,000 km or 236,000 miles away), then do the same for a billion other continents of the same size. Paint one coin red and put it somewhere in one of the billion piles. Blindfold a friend and ask her to pick the coin. The odds of her picking it are 1 in 10^37.
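The coin illustration can be sanity-checked with an order-of-magnitude estimate. The text does not specify the continent's area or the coin's size, so the sketch below assumes the area of North America (about 24.7 million square kilometres) and the dimensions of a US dime (17.91 mm across, 1.35 mm thick), with coins laid out on a simple square grid. Only the distance to the moon and the count of a billion additional continents come from the text; every other value and name is an assumption for illustration.

```python
import math

# Assumed inputs (not given in the text).
CONTINENT_AREA_M2 = 24.7e12   # North America, about 24.7 million km^2
COIN_DIAMETER_M = 0.01791     # US dime diameter
COIN_THICKNESS_M = 0.00135    # US dime thickness

# Inputs taken from the text.
MOON_DISTANCE_M = 3.8e8       # 380,000 km
CONTINENTS = 1e9 + 1          # the original plus a billion others

# Coins in one layer, placing each coin in a square cell one diameter wide.
coins_per_layer = CONTINENT_AREA_M2 / COIN_DIAMETER_M**2
# Number of stacked layers needed to reach the moon.
layers = MOON_DISTANCE_M / COIN_THICKNESS_M

total_coins = coins_per_layer * layers * CONTINENTS
print(f"about 10^{math.log10(total_coins):.1f} coins")
```

Under these assumptions the total lands within an order of magnitude of 10^37 coins, so picking the single red coin at random has odds of roughly 1 in 10^37, as stated.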

Stephen Hawking writes in A Brief History of Time, p. 125:

"The remarkable fact is that the values of these numbers (i.e. the constants of physics) seem to have been very finely adjusted to make possible the development of life" (p. 125)

Extreme Fine Tuning - the Cosmological Constant 3

The recent Nature study popularized in the press regarding the nature of the universe has confirmed some of the original studies involving type 1 supernovae. The supernovae results suggested that there was a "springiness" to space, called the "cosmological constant," that causes the universe to expand at a faster rate the more it has expanded. Often described as an "anti-gravity" force, it doesn't really oppose matter, but only affects matter as it is associated with the fabric of space.

The balloon-borne microwave telescope (called "Boomerang") examined the cosmic background radiation left over from the Big Bang. The angular power spectrum showed a peak value at exactly the value predicted by the inflationary hot Big Bang model dominated by cold dark matter. This model predicts a smaller second peak, which seems to be there, but cannot be fully resolved with the initial measurements. The presence of the second peak would all but seal the reliability of the Big Bang model as the mechanism by which the universe came into existence.

How does this study impact the Christian faith? The Bible says that the universe was created in finite time from that which is not visible. In addition, the Bible describes an expanding universe model. The Bible describes the Creator being personally involved in the design of the universe, so that we would expect to see this kind of design in His creation.

How does this discovery impact atheists? Those who favor naturalism had long sought to find the simplest explanation for the universe, hoping to avoid any evidence for design. A Big Bang model in which there was just enough matter to equal the critical density to account for a flat universe would have provided that. However, for many years, it has been evident that there is less than half of the amount of matter needed to account for a flat universe. A cosmological constant would provide an energy density to make up for the missing matter density but would require an extreme amount of fine-tuning. The supernovae studies demonstrated that there was an energy density to the universe (but did not define the size of this energy density), and the recent Boomerang study demonstrated that this energy density is exactly what one would expect to get a flat universe. How finely tuned must this energy density be to get a flat universe? One part in 10^120, which is:

1000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000

What do atheists think about this level of design? Here is a quote from a recent article:

Atheists see a conflict because this level of design is something that one would not expect by chance from a universe that began through a purely naturalistic mechanism. "Common wisdom" is common only to those who must exclude a supernatural explanation for the creation of the universe. In fact, a purely naturalistic cause for the universe is extremely unlikely and, therefore, illogical. The Bible says that the fear of the Lord is the beginning of wisdom, and that He created the universe. When a model doesn't work, scientists must be willing to give up their model for a model that fits the facts better. In this case, the supernatural design model fits the data much better than the naturalistic random-chance model.

Guillermo Gonzalez, Jay W. Richards: The Privileged Planet: How Our Place in the Cosmos Is Designed for Discovery 2004 page 205

On the largest scales, there’s also a cosmic tug-of-war between the attractive force of gravity and the repulsive dark or vacuum energy. Often called the cosmological constant, which is theorized to be the result of nonzero vacuum energy detectable at cosmological scales, it’s one of the few cosmological parameters that determine the dynamics of the universe as a whole. By observing Type Ia supernovae, astronomers have determined that today it contributes about as much to the dynamics of the universe as the gravitational attractive force from visible and dark matter combined. This coincidence remains unexplained, but some cosmologists suspect it’s amenable to “anthropic explanation.” There’s only one “special” time in the history of the universe when the vacuum and matter-energy densities are the same, and we’re living very near it. If the vacuum energy had become prominent a few billion years earlier than it did in our universe, there would have been no galaxies. If it had overtaken gravity a little earlier still, there would have been no individual stars. A few billion years might seem like a lot of room to maneuver, but there’s an even more striking level of fine-tuning here. The second “cosmological constant problem” is that the observed value of the vacuum energy is between 10^53 and 10^123 times smaller than that expected from theory. The vacuum energy density is, basically, the energy density of space-time in the absence of fields resulting from matter. Until the Type Ia supernovae results demonstrated a few years ago that the cosmological constant is something other than zero, most cosmologists hoped that some undiscovered law of physics required it to be exactly zero. They already knew that its observational upper limit was much smaller than the “natural” values expected from various particle fields and other theoretical fields. These particle fields require an extraordinary degree of fine-tuning, at least to one part in 10^53, to get such a small, positive, nonzero value for the vacuum energy. At the same time, its value must be large enough in the early universe to cause the newborn universe to expand exponentially, as inflation theory postulates. How the present value of the vacuum energy relates to the early expansion is yet another issue of debate.

https://3lib.net/book/5102561/45e43d

1. http://worldview3.50webs.com/mathprfcosmos.html

2. http://www.uncommondescent.com/intelligent-design/coin-flips-do-matter/

3. http://web.archive.org/web/20090730174024/http://geocities.com/CapeCanaveral/Lab/6562/apologetics/cosmoconstant.html

4. http://www.secretsinplainsight.com/2015/09/19/cosmic-lottery/

5. God and Design: The Teleological Argument and Modern Science, page 180

6. Guillermo Gonzalez and Jay W. Richards: The Privileged Planet: How Our Place in the Cosmos Is Designed for Discovery, page 205

7. https://www.insidescience.org/news/more-finely-tuned-universe

8. https://sci-hub.ren/10.12942/lrr-2001-1



The cosmological constant

The smallness of the cosmological constant is widely regarded as the single greatest problem confronting current physics and cosmology. The cosmological constant is a term in Einstein’s equation that, when positive, acts as a repulsive force, causing space to expand and, when negative, acts as an attractive force, causing space to contract. Apart from some sort of extraordinarily precise fine-tuning or new physical principle, today’s theories of fundamental physics and cosmology lead one to expect that the vacuum (that is, the state of space-time free of ordinary matter fields) has an extraordinarily large energy density. This energy density, in turn, acts as an effective cosmological constant, thus leading one to expect an extraordinarily large effective cosmological constant, one so large that it would, if positive, cause space to expand at such an enormous rate that almost every object in the Universe would fly apart, and would, if negative, cause the Universe to collapse almost instantaneously back in on itself. This would clearly make the evolution of intelligent life impossible. What makes it so difficult to avoid postulating some sort of highly precise fine-tuning of the cosmological constant is that almost every type of field in current physics—the electromagnetic field, the Higgs fields associated with the weak force, the inflaton field hypothesized by inflationary cosmology, the dilaton field hypothesized by superstring theory, and the fields associated with elementary particles such as electrons—contributes to the vacuum energy. Although no one knows how to calculate the energy density of the vacuum, when physicists make estimates of the contribution to the vacuum energy from these fields, they get values of the energy density anywhere from 10^53 to 10^120 higher than its maximum life-permitting value, Λmax. (Here, Λmax is expressed in terms of the energy density of empty space.)

God and Design: The Teleological Argument and Modern Science, page 180

Max Tegmark:

“How far could you rotate the dark-energy knob before the “Oops!” moment? The current setting of the knob, corresponding to the dark-energy density we’ve actually measured, is about 10^-27 kilograms per cubic meter, which is almost ridiculously close to zero compared to the available range: the natural maximum value for the dial is a dark-energy density around 10^97 kilograms per cubic meter, which is when the quantum fluctuations fill space with tiny black holes, and the minimum value is the same with a minus sign in front. If rotating the dark-energy knob…by a full turn would vary the density across the full range, then the actual knob setting for our Universe is about 10^-123 of a turn away from the halfway point. That means that if you want to tune the knob to allow galaxies to form, you have to get the angle by which you rotate it right to 123 decimal places!

That means that the probability that our universe contains galaxies is akin to exactly 1 possibility in 1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,

000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000 . Unlikely doesn’t even begin to describe these odds. There are “only” 10^81 atoms in the observable universe, after all. 4
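For readers who want to check the written-out figure, here is a minimal sketch that prints 10^123 with thousands separators and compares it with the atom count quoted above. The variable names are my own, and the 10^81 figure is taken from the passage as given, not independently verified.

```python
# Illustrative sketch: write out 10^123 and compare it with the quoted atom count.
# Variable names are hypothetical; both exponents come from the passage above.
odds = 10**123          # "1 possibility in 10^123"
atoms = 10**81          # atom count as quoted in the passage

written = f"{odds:,}"   # the number with thousands separators
zero_count = written.count("0")      # number of zeros after the leading 1
group_count = written.count("000")   # number of three-zero groups

print(written)
print(zero_count, group_count)
print(f"{odds // atoms:,}")  # how many times larger the odds figure is
```

Written out this way, the number is a 1 followed by 123 zeros (41 groups of three), and it exceeds the quoted atom count by a factor of 10^42.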

The Balance of the Bang: In order for life to be possible in the universe, the explosive power of the Big Bang needed to be extremely closely matched to the amount of mass and balanced with the force of gravity, so that the expansion speed is very precise. 1 This very exact expansion speed of the universe is called the "Cosmological Constant." If the force of the bang was slightly too weak, the expanding matter would have collapsed back in on itself before any planets suitable for life (or stars) had a chance to form, but if the bang was slightly too strong, the resultant matter would have been only hydrogen gas that was so diffuse and expanding so fast that no stars or planets could have formed at all.

Science writer Gregg Easterbrook explains the required explosive power-balance of the Big Bang, saying that, "Researchers have calculated that, if the ratio of matter and energy to the volume of space ...had not been within about one-quadrillionth of one percent of ideal at the moment of the Big Bang, the incipient universe would have collapsed back on itself or suffered runaway relativity effects" (My emphasis.) (ref. G.Easterbrook, "Science Sees the Light", The New Republic, Oct.12, 1998, p.26).

In terms of the expansion rate of the universe as a result of the Big Bang: "What's even more amazing is how delicately balanced that expansion rate must be for life to exist. It cannot differ by more than one part in 10^55 from the actual rate." (My emphasis.) (Ref: H.Ross, 1995, as cited above, p.116). (Note: 10^55 is the number 1 with 55 zeros after it, and 10^55 is about the number of atoms that make up planet Earth).

THE PROBABILITY: The chances we can conservatively assign to this: It was about one chance out of 10^21 that the force of the Big Bang could have randomly been properly balanced with the mass & gravity of the universe, in order for stars and planets to form, so that life could exist here in our cosmos.

Leonard Susskind The Cosmic Landscape:

"To make the first 119 decimal places of the vacuum energy zero is most certainly no accident." (The vacuum energy relates to the cosmological constant.) 2

“Logically, it is possible that the laws of physics conspire to create an almost but not quite perfect cancellation [of the energy involved in the quantum fluctuations]. But then it would be an extraordinary coincidence that that level of cancellation—119 powers of ten, after all—just happened by chance to be what is needed to bring about a universe fit for life. How much chance can we buy in scientific explanation? One measure of what is involved can be given in terms of coin flipping: odds of 10^120 to one is like getting heads no fewer than four hundred times in a row. If the existence of life in the universe is completely independent of the big fix mechanism—if it’s just a coincidence—then those are the odds against our being here. That level of flukiness seems too much to swallow.”
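The coin-flip comparison in the quote can be reproduced in a few lines. This is a rough sketch under the assumption of a fair coin; the function name is my own and does not come from the quoted source.

```python
# Illustrative check of the coin-flip comparison for odds of 10^120 to one.
# Assumes a fair coin; the function name is hypothetical.

def heads_in_a_row(odds: int) -> int:
    """Smallest n such that 2**n >= odds (n consecutive heads)."""
    # (odds - 1).bit_length() is the smallest n with 2**n > odds - 1,
    # which for integers is the same as 2**n >= odds.
    return (odds - 1).bit_length()

flips = heads_in_a_row(10**120)
print(flips)  # prints 399
```

The result, 399, agrees with the quote's round figure of about four hundred heads in a row; 400 heads corresponds to odds of 2^400, roughly 2.6 times 10^120.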

The Case of Negative λ

So far I have told you about the repulsive effects that accompany positive vacuum energy. But suppose that the contribution of fermions outweighed that of bosons: then the net vacuum energy would be a negative number. Is this possible? If so, how does it affect Weinberg’s arguments? The answer to the first question is yes, it can happen very easily. All you need is a few more fermion-type particles than bosons and the cosmological constant can be made negative. The second question has an equally simple answer—changing the sign of λ switches the repulsive effects of a cosmological constant to a universal attraction: not the usual gravitational attractive force but a force that increases with distance. To argue convincingly that a large cosmological constant would automatically render the universe uninhabitable, we need to show that life could not form if the cosmological constant were large and negative. What would the universe be like if the laws of nature were unaltered except for a negative cosmological constant? The answer is even easier than the case of positive λ. The additional attractive force would eventually overwhelm the outward motion of the Hubble expansion: the universe would reverse its motion and start to collapse like a punctured balloon. Galaxies, stars, planets, and all life would be crushed in an ultimate “big crunch.” If the negative cosmological constant were too large, the crunch would not allow the billions of years necessary for life like ours to evolve. Thus, there is an anthropic bound on negative λ to match Weinberg’s positive bound. In fact, the numbers are fairly similar. If the cosmological constant is negative, it must also not be much bigger than 10^-120 Units if life is to have any possibility of evolving. Nothing we have said precludes there being pocket universes far from our own with either a large positive or large negative cosmological constant. But they are not places where life is possible. In the ones with large positive λ, everything flies apart so quickly that there is no chance for matter to assemble itself into structures like galaxies, stars, planets, atoms, or even nuclei. In the pockets with large negative λ, the expanding universe quickly turns around and crushes any hope of life.

https://3lib.net/book/2472017/1d5be1

Cover America with coins in a column reaching to the moon (380,000 km or 236,000 miles away), then do the same for a billion other continents of the same size. Paint one coin red and put it somewhere in one of the billion piles. Blindfold a friend and ask her to pick the coin. The odds of her picking it are 1 in 10^37.

Stephen Hawking writes in A Brief History of Time, p. 125:

"The remarkable fact is that the values of these numbers (i.e. the constants of physics) seem to have been very finely adjusted to make possible the development of life" (p. 125)

Extreme Fine Tuning - the Cosmological Constant 3

The recent Nature study popularized in the press regarding the nature of the universe has confirmed some of the original studies involving supernovae type 1.1 The supernovae results suggested that there was a "springiness" to space, called the "cosmological constant," that causes the universe to expand at a faster rate the more it has expanded. Often described as an "anti-gravity" force, it doesn't really oppose matter, but only affects matter as it is associated with the fabric of space.

The balloon-borne microwave telescope (called "Boomerang") examined the cosmic background radiation left over from the Big Bang.2 The angular power spectrum showed a peak value at exactly the value predicted by the inflationary hot Big Bang model dominated by cold dark matter. This model predicts a smaller second peak, which seems to be there, but cannot be fully resolved with the initial measurements. The presence of the second peak would all but seal the reliability of the Big Bang model as the mechanism by which the universe came into existence.

How does this study impact the Christian faith? The Bible says that the universe was created in finite time from that which is not visible.3 In addition, the Bible describes an expanding universe model. The Bible describes the Creator being personally involved in the design of the universe, so that we would expect to see this kind of design in His creation.4

How does this discovery impact atheists? Those who favor naturalism had long sought to find the simplest explanation for the universe, hoping to avoid any evidence for design. A Big Bang model in which there was just enough matter to equal the critical density to account for a flat universe would have provided that. However, for many years, it has been evident that there is less than half of the amount of matter in the universe to account for a flat universe. A cosmological constant would provide an energy density to make up for the missing matter density but would require an extreme amount of fine tuning. The supernovae studies demonstrated that there was an energy density to the universe (but did not define the size of this energy density), and the recent Boomerang study demonstrated that this energy density is exactly what one would expect to get a flat universe. How finely tuned must this energy density be to get a flat universe? One part in 10^120,5 which is:

1000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000

What do atheists think about this level of design? Here is a quote from a recent article:

"This type of universe, however, seems to require a degree of fine tuning of the initial conditions that is in apparent conflict with 'common wisdom'."1

Atheists see a conflict because this level of design is something that one would not expect by chance from a universe that began through a purely naturalistic mechanism. "Common wisdom" is common only to those who must exclude a supernatural explanation for the creation of the universe. In fact, a purely naturalistic cause for the universe is extremely unlikely and, therefore, illogical. The Bible says that the fear of the Lord is the beginning of wisdom,6 and that He created the universe.7 When a model doesn't work, scientists must be willing to give up their model for a model that fits the facts better. In this case, the supernatural design model fits the data much better than the naturalistic random-chance model.


1. http://worldview3.50webs.com/mathprfcosmos.html

2. http://www.uncommondescent.com/intelligent-design/coin-flips-do-matter/

3. http://web.archive.org/web/20090730174024/http://geocities.com/CapeCanaveral/Lab/6562/apologetics/cosmoconstant.html

4. http://www.secretsinplainsight.com/2015/09/19/cosmic-lottery/

5. God and Design: The Teleological Argument and Modern Science, page 180

6. Guillermo Gonzalez and Jay W. Richards, The Privileged Planet: How Our Place in the Cosmos Is Designed for Discovery (2004), page 205

7. https://www.insidescience.org/news/more-finely-tuned-universe

8. https://sci-hub.ren/10.12942/lrr-2001-1

Last edited by Otangelo on Wed Jul 28, 2021 3:45 pm; edited 18 times in total