https://reasonandscience.catsboard.com/t1866-fine-tuning-of-the-big-bang

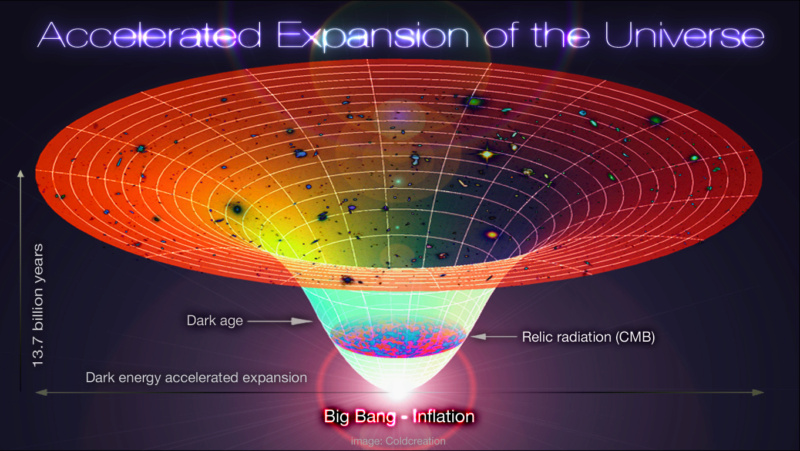

The first thing that had to be finely tuned in the universe was the Big Bang. Fast-forward a nanosecond or two from the beginning and you have a cosmic soup of elementary stuff: electrons and quarks and neutrinos and photons and gravitons and muons and gluons and Higgs bosons (plus corresponding antiparticles like the positron), a real vegetable soup. There had to have been a mechanism to produce this myriad of fundamental particles instead of just one thing. There could have been a cosmos where the sum total of mass was pure neutrinos and all of the energy was purely kinetic.

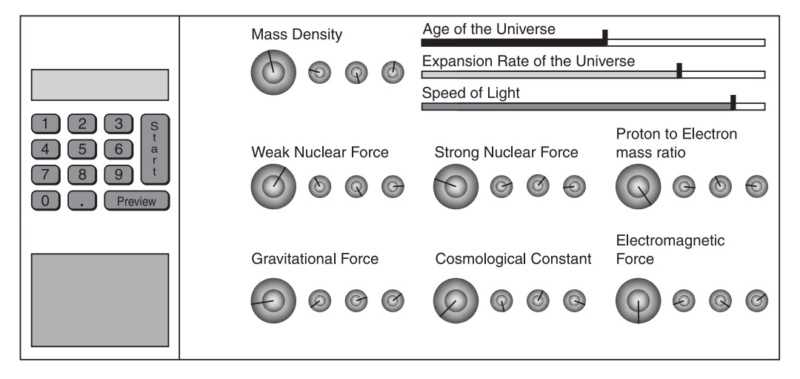

The paper "Do We Live in the Best of All Possible Worlds? The Fine-Tuning of the Constants of Nature" states: "The evolution of the Universe is characterized by a delicate balance of its inventory, a balance between attraction and repulsion, between expansion and contraction." It names four parameters that must be finely tuned. A fifth, the amplitude of primordial fluctuations Q, is one of the numbers in Martin Rees's book Just Six Numbers, and a sixth is the matter-antimatter asymmetry. Physicist Roger Penrose estimated that the odds of the initial low-entropy state of our universe occurring by chance alone are on the order of 1 in 10^(10^123).

1. Gravitational constant G: 1/10^60

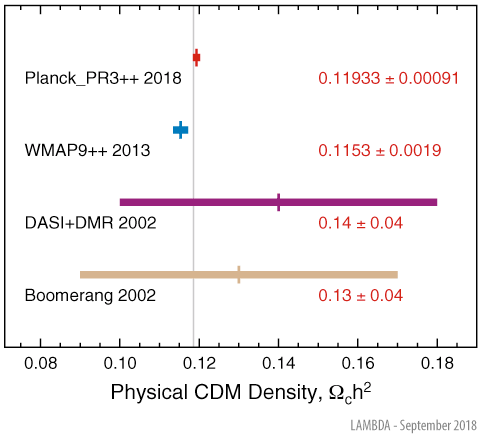

2. Omega Ω, the density of matter (dark and baryonic): 1/10^62 or less

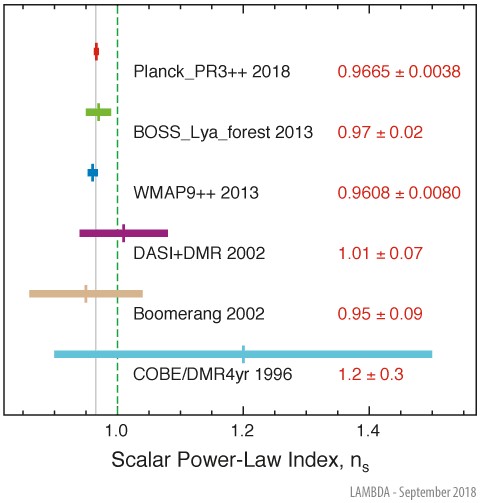

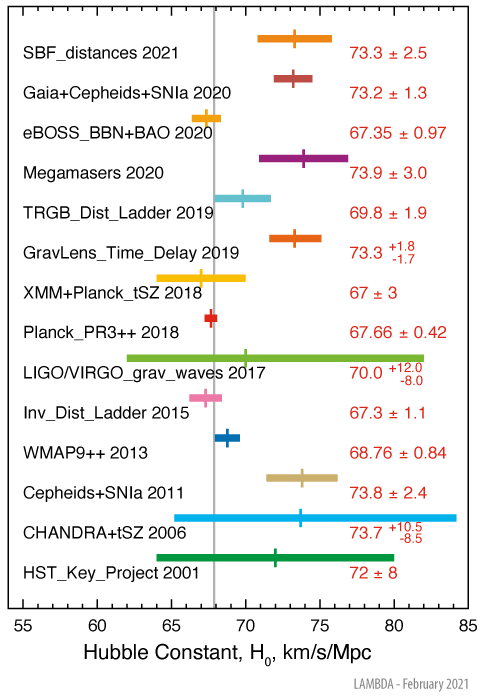

3. Hubble constant H0: 1 part in 10^60

4. Lambda Λ, the cosmological constant: on the order of 10^-122 (in Planck units)

5. Primordial Fluctuations Q: 1/100,000

6. Matter-antimatter asymmetry: 1 in 10,000,000,000

7. The low-entropy state of the universe: 1 in 10^10^123

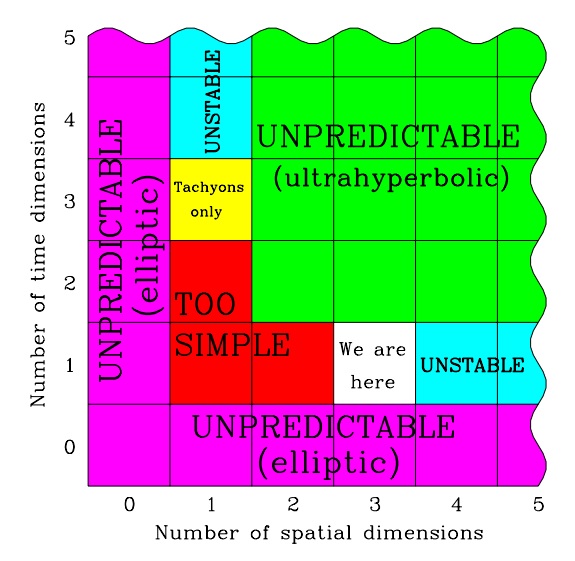

8. The universe requires three dimensions of space and one of time to be life-permitting.

Hawking: If the overall density of the universe were changed by even 0.0000000000001 percent, no stars or galaxies could be formed. If the rate of expansion one second after the Big Bang had been smaller by even one part in a hundred thousand million million, the universe would have recollapsed before it reached its present size.

Stephen C. Meyer: The return of the God hypothesis, page 162

Though many leading physicists cite the expansion rate of the universe as a good example of fine-tuning, some have questioned whether it should be considered an independent fine-tuning parameter, since the rate of expansion is a consequence of other physical factors. Nevertheless, these physical factors are themselves independent of each other and probably finely tuned. For example, the expansion rate in the earliest stages of the history of the universe would have depended upon the density of mass and energy at those early times.

https://3lib.net/book/15644088/9c418b

Paul Davies, The Guardian (UK), page 23, 26 June 2007

“Scientists are slowly waking up to an inconvenient truth: the universe looks suspiciously like a fix. The issue concerns the very laws of nature themselves. For 40 years, physicists and cosmologists have been quietly collecting examples of all-too-convenient "coincidences" and special features in the underlying laws of the universe that seem to be necessary in order for life, and hence conscious beings, to exist. Change any one of them and the consequences would be lethal. Fred Hoyle, the distinguished cosmologist, once said it was as if "a super-intellect has monkeyed with physics".”

https://www.theguardian.com/commentisfree/2007/jun/26/spaceexploration.comment

Naumann, Thomas: Do We Live in the Best of All Possible Worlds? The Fine-Tuning of the Constants of Nature Sep 2017

The Cosmic Inventory

The evolution of the Universe is characterized by a delicate balance of its inventory, a balance between attraction and repulsion, between expansion and contraction. Since gravity is purely attractive, its action sums up throughout the whole Universe. The strength of this attraction is defined by the gravitational constant $G_N$ and by an environmental parameter, the density $\Omega_M$ of (dark and baryonic) matter. The strength of the repulsion is defined by two parameters: the initial impetus of the Big Bang, parameterized by the Hubble constant $H_0$ (the expansion rate of the universe), and the cosmological constant Λ.
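The balance between these four quantities is usually written with the Friedmann equation of general relativity. The standard form is shown here for orientation only (it is not quoted from Naumann's paper); $a$ is the scale factor, $k$ the spatial curvature, and radiation is lumped into $\rho$ for simplicity:

$$H^2 = \left(\frac{\dot a}{a}\right)^2 = \frac{8\pi G_N}{3}\,\rho \;-\; \frac{k c^2}{a^2} \;+\; \frac{\Lambda c^2}{3}$$

Dividing through by $H^2$ and defining the critical density $\rho_{crit} = 3H^2/(8\pi G_N)$ gives

$$\Omega_M + \Omega_k + \Omega_\Lambda = 1, \qquad \Omega_M = \frac{\rho}{\rho_{crit}}, \quad \Omega_k = -\frac{k c^2}{a^2 H^2}, \quad \Omega_\Lambda = \frac{\Lambda c^2}{3H^2}$$

A spatially flat universe ($k = 0$) therefore has $\Omega_M + \Omega_\Lambda = 1$, i.e. a total density exactly equal to $\rho_{crit}$.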

https://www.epj-conferences.org/articles/epjconf/pdf/2017/33/epjconf_icnfp2017_07011.pdf

Fine-tuning of the attractive forces:

1. Gravitational constant G: It must be specified to a precision of 1/10^60

2. Omega Ω, density of matter (dark and baryonic): The early universe must have had a density even closer to the critical density, departing from it by one part in 10^62 or less.

Fine-tuning of the repulsive forces:

3. Hubble constant H0: the so-called "density parameter" was set, in the beginning, with an accuracy of 1 part in 10^60.

4. Lambda Λ, the cosmological constant: In terms of Planck units, and as a natural dimensionless value, Λ is on the order of 10^-122. Furthermore:

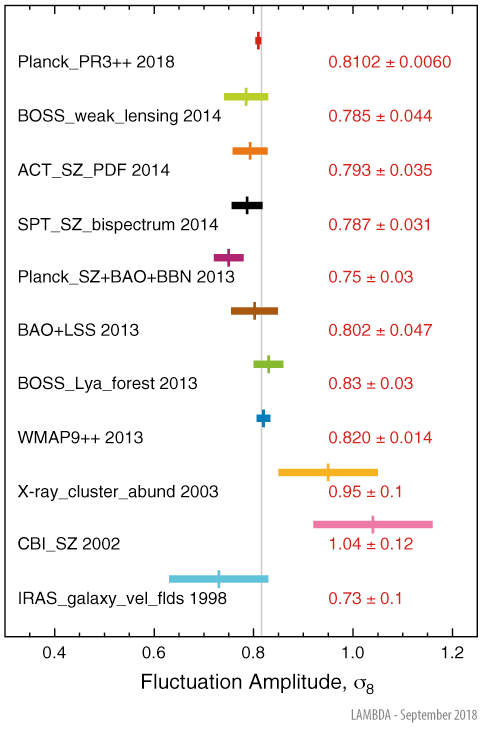

5. Primordial Fluctuations Q: The amplitude of primordial fluctuations is one of Martin Rees’s Just Six Numbers. It is a ratio equal to 1/100,000.

1. Gravitational constant G

Dr. Walter L. Bradley: Is There Scientific Evidence for the Existence of God? How the Recent Discoveries Support a Designed Universe 20 August 2010

The "Big Bang" follows the physics of an explosion, though on an inconceivably large scale. The critical boundary condition for the Big Bang is its initial velocity. If this velocity is too fast, the matter in the universe expands too quickly and never coalesces into planets, stars, and galaxies. If the initial velocity is too slow, the universe expands only for a short time and then quickly collapses under the influence of gravity. Well-accepted cosmological models tell us that the initial velocity must be specified to a precision of 1/10^60. This requirement seems to overwhelm chance and has been the impetus for creative alternatives, most recently the new inflationary model of the Big Bang. Even this newer model requires a high level of fine-tuning for it to have occurred at all and to have yielded irregularities that are neither too small nor too large for the formation of galaxies. Astrophysicists originally estimated that two components of an expansion-driving cosmological constant must cancel each other with an accuracy of better than 1 part in 10^50. In the January 1999 issue of Scientific American, the required accuracy was sharpened to the phenomenal exactitude of 1 part in 10^123. Furthermore, the ratio of the gravitational energy to the kinetic energy must be equal to 1.00000 with a variation of less than 1 part in 100,000. While such estimates are being actively researched at the moment and may change over time, all possible models of the Big Bang will contain boundary conditions of a remarkably specific nature that cannot simply be described away as "fortuitous".

https://web.archive.org/web/20110805203154/http://www.leaderu.com/real/ri9403/evidence.html#ref21

Guillermo Gonzalez, Jay W. Richards: The Privileged Planet: How Our Place in the Cosmos Is Designed for Discovery 2004 page 216

Gravity is the least important force at small scales but the most important at large scales. It is only because the minuscule gravitational forces of individual particles add up in large bodies that gravity can overwhelm the other forces. Gravity, like the other forces, must also be fine-tuned for life. Gravity would alter the cosmos as a whole. For example, the expansion of the universe must be carefully balanced with the deceleration caused by gravity. Too much expansion energy and the atoms would fly apart before stars and galaxies could form; too little, and the universe would collapse before stars and galaxies could form. The density fluctuations of the universe when the cosmic microwave background was formed also must be a certain magnitude for gravity to coalesce them into galaxies later and for us to be able to detect them. Our ability to measure the cosmic microwave background radiation is bound to the habitability of the universe; had these fluctuations been significantly smaller, we wouldn’t be here.

https://3lib.net/book/5102561/45e43d

Laurence Eaves The apparent fine-tuning of the cosmological, gravitational and fine structure constants

A value of $\alpha^{-1}$ close to 137 appears to be essential for the astrophysics, chemistry and biochemistry of our universe. With such a value of alpha, the exponential forms indicate that Λ and G have the extremely small values that we observe in our universe, so that Λ is small enough to permit the formation of large-scale structure, yet large enough to detect and measure with present-day astronomical technology, and G is small enough to provide stellar lifetimes that are sufficiently long for complex life to evolve on an orbiting planet, yet large enough to ensure the formation of stars and galaxies. Thus, if Beck’s result is physically valid and if the hypothetical relation represents an as yet ill-defined physical law relating alpha to G, then the apparent fine-tuning of the three is reduced to just one, rather than three coincidences, of seemingly extreme improbability.
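For orientation, the value $\alpha^{-1} \approx 137$ follows from the standard definition $\alpha = e^2/(4\pi\varepsilon_0\hbar c)$. The sketch below plugs in CODATA 2018 values, hard-coded here as an assumption; it does not reproduce Beck's or Eaves's exponential relations, which are not given in this excerpt:

```python
import math

# CODATA 2018 values in SI units (hard-coded here as an assumption).
E_CHARGE = 1.602176634e-19      # elementary charge, C (exact by definition)
HBAR = 1.054571817e-34          # reduced Planck constant, J*s
EPSILON_0 = 8.8541878128e-12    # vacuum permittivity, F/m
C = 299_792_458.0               # speed of light, m/s (exact by definition)

# Fine-structure constant: alpha = e^2 / (4 * pi * epsilon_0 * hbar * c), dimensionless.
alpha = E_CHARGE**2 / (4 * math.pi * EPSILON_0 * HBAR * C)
inverse_alpha = 1 / alpha

print(f"alpha^-1 = {inverse_alpha:.3f}")  # alpha^-1 = 137.036
```

Because $\alpha$ is dimensionless, the same number comes out in any consistent system of units.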

https://arxiv.org/ftp/arxiv/papers/1412/1412.7337.pdf

2. Omega Ω, density of matter (dark and baryonic)

In A Brief History of Time (page 126), Stephen Hawking wrote:

If the overall density of the universe were changed by even 0.0000000000001 percent, no stars or galaxies could be formed. If the rate of expansion one second after the Big Bang had been smaller by even one part in a hundred thousand million million, the universe would have recollapsed before it reached its present size.
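Hawking's two figures are easier to compare once they are written as plain fractions. A minimal sketch using Python's exact `Fraction` arithmetic (the variable names are illustrative):

```python
from fractions import Fraction

# Density figure: 0.0000000000001 percent, converted to a plain fraction.
density_change = Fraction("0.0000000000001") / 100   # equals 1 / 10**15

# Expansion-rate figure: "one part in a hundred thousand million million".
expansion_parts = 100_000 * 1_000_000 * 1_000_000    # equals 10**17

print(density_change, expansion_parts)
```

So the density figure corresponds to one part in $10^{15}$ and the expansion-rate figure to one part in $10^{17}$.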

Eric Metaxas: Is atheism dead? page 58

Hawking certainly was not one who liked the idea of a universe fine-tuned to such a heart-stopping level, but he nonetheless sometimes expressed what the immutable facts revealed. In that same book, he said, “It would be very difficult to explain why the universe would have begun in just this way, except as the act of a God who intended to create beings like us.” Again, considering the source, this is an astounding admission, especially because in the decades after, he tried to wriggle away from this conclusion any way he could, often manufacturing solutions to the universe’s beginning that seemed intentionally difficult to comprehend by anyone clinging to the rules of common sense. Nonetheless, in his famous book, he was indisputably frank about what he saw and what the obvious conclusions seemed to be. The astrophysicist Fred Hoyle was also candid about the universe’s fine-tuning, despite being a long-time and dedicated atheist. In fact, as we have said, he led the charge in wrinkling his nose at the repulsive idea of a universe with a beginning, and inadvertently coined the term “Big Bang.” But in 1959, a decade after this, he was giving a lecture on how stars in their interiors created every naturally occurring element in the universe and was explaining that they do this with the simplest element: hydrogen.

https://3lib.net/book/18063091/2dbdee

Brad Lemley Why is There Life? November 01, 2000

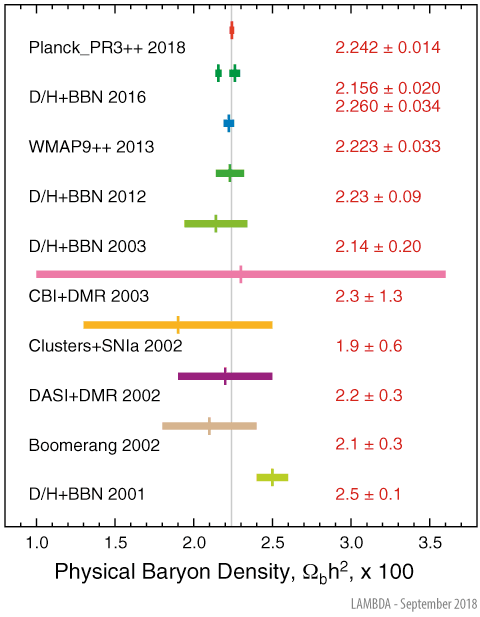

As determined from data of the PLANCK satellite combined with other cosmological observations, the total density of the Universe is $\Omega_{tot} = \rho/\rho_{crit} = 1.0002 \pm 0.0026$ and thus extremely close to its critical density $\rho_{crit} = 3H_0^2/(8\pi G_N)$, with a contribution from a curvature of the Universe of $\Omega_k = 0.000 \pm 0.005$. However, the balance between the ingredients of the Universe is time-dependent. Any deviation from zero curvature after inflation is amplified by many orders of magnitude. Hence, a cosmic density fine-tuned to flatness today to less than a per mille must have been initially fine-tuned to tens of orders of magnitude.
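The critical density $\rho_{crit} = 3H_0^2/(8\pi G_N)$ can be evaluated numerically. A minimal sketch, assuming a round $H_0 = 70$ km/s/Mpc (measured values cluster around 67 to 73) and standard values of $G_N$ and the proton mass, none of which are taken from the quoted paper:

```python
import math

# Assumed inputs (not from the quoted paper).
H0_KM_S_MPC = 70.0              # round Hubble constant, km/s/Mpc
MPC_IN_M = 3.0857e22            # metres per megaparsec
G = 6.674e-11                   # gravitational constant, m^3 kg^-1 s^-2
PROTON_MASS = 1.6726e-27        # kg

# Convert H0 to SI units (1/s): (km/s) per Mpc -> (m/s) per m.
h0_si = H0_KM_S_MPC * 1e3 / MPC_IN_M

# Critical density rho_crit = 3 H0^2 / (8 pi G), in kg/m^3.
rho_crit = 3 * h0_si**2 / (8 * math.pi * G)

# Express it as hydrogen atoms (protons) per cubic metre.
atoms_per_m3 = rho_crit / PROTON_MASS

print(f"rho_crit ~ {rho_crit:.2e} kg/m^3, ~ {atoms_per_m3:.1f} protons/m^3")
```

With these inputs it gives about $9.2 \times 10^{-27}$ kg/m³, or roughly five to six hydrogen atoms per cubic metre.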

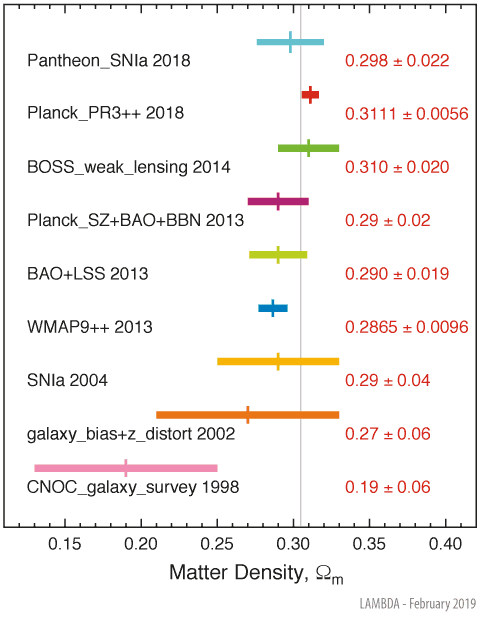

Omega Ω measures the density of material in the universe, including galaxies, diffuse gas, and dark matter. The number reveals the relative importance of gravity in an expanding universe. If gravity were too strong, the universe would have collapsed long before life could have evolved. Had it been too weak, no galaxies or stars could have formed.

https://web.archive.org/web/20140722210250/http://discovermagazine.com/2000/nov/cover/

Wikipedia: Flatness problem

The flatness problem (also known as the oldness problem) is a cosmological fine-tuning problem within the Big Bang model of the universe. Such problems arise from the observation that some of the initial conditions of the universe appear to be fine-tuned to very 'special' values, and that small deviations from these values would have extreme effects on the appearance of the universe at the current time.

In the case of the flatness problem, the parameter which appears fine-tuned is the density of matter and energy in the universe. This value affects the curvature of space-time, with a very specific critical value being required for a flat universe. The current density of the universe is observed to be very close to this critical value. Since any departure of the total density from the critical value would increase rapidly over cosmic time, the early universe must have had a density even closer to the critical density, departing from it by one part in 10^62 or less. This leads cosmologists to question how the initial density came to be so closely fine-tuned to this 'special' value.
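The "one part in 10^62" figure can be roughly reproduced by running the growth of $|\Omega - 1|$ backwards in time. A back-of-the-envelope sketch, assuming $|\Omega - 1| \propto a$ during matter domination and $\propto a^2$ during radiation domination, a present-day bound of 0.005, matter-radiation equality at $a \approx 1/3400$, and extrapolation all the way back to the Planck temperature. It ignores the recent dark-energy era and changes in the number of particle species, so only the order of magnitude is meaningful:

```python
import math

# Rough, illustrative inputs (assumptions, not from the quoted sources).
OMEGA_K_TODAY = 0.005        # upper end of the bound |Omega_k| <= 0.005
A_EQ = 1 / 3400              # scale factor at matter-radiation equality (a_today = 1)
T_TODAY_EV = 2.348e-4        # CMB temperature today (2.725 K) in eV
T_PLANCK_EV = 1.22e28        # Planck energy, ~1.2e19 GeV, in eV

# Temperature scales as 1/a, so the scale factor at the Planck time is:
a_planck = T_TODAY_EV / T_PLANCK_EV

# |Omega - 1| grows like a in the matter era and like a^2 in the radiation era,
# so running the clock backwards shrinks it by these factors.
matter_era_factor = A_EQ / 1.0
radiation_era_factor = (a_planck / A_EQ) ** 2

omega_dev_planck = OMEGA_K_TODAY * matter_era_factor * radiation_era_factor
print(f"|Omega - 1| at the Planck time ~ 1e{math.log10(omega_dev_planck):.0f}")
```

With these inputs the deviation at the Planck time comes out near $10^{-62}$, the same order as the figure quoted above.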

The problem was first mentioned by Robert Dicke in 1969. The most commonly accepted solution among cosmologists is cosmic inflation, the idea that the universe went through a brief period of extremely rapid expansion in the first fraction of a second after the Big Bang; along with the monopole problem and the horizon problem, the flatness problem is one of the three primary motivations for inflationary theory.

https://en.wikipedia.org/wiki/Flatness_problem

Martin Rees Just Six Numbers : The Deep Forces that Shape the Universe 1999 page 71

We know the expansion speed now, but will gravity bring it to a halt? The answer depends on how much stuff is exerting a gravitational pull. The universe will recollapse - gravity eventually defeating the expansion unless some other force intervenes - if the density exceeds a definite critical value. We can readily calculate what this critical density is. It amounts to about five atoms in each cubic metre. That doesn't seem much; indeed, it is far closer to a perfect vacuum than experimenters on Earth could ever achieve. But the universe actually seems to be emptier still. But galaxies are, of course, especially high concentrations of stars. If all the stars from all the galaxies were dispersed through intergalactic space, then each star would be several hundred times further from its nearest neighbour than it actually is within a typical galaxy - in our scale model, each orange would then be millions of kilometres from its nearest neighbours. If all the stars were dismantled and their atoms spread uniformly through our universe, we'd end up with just one atom in every ten cubic metres. There is about as much again (but seemingly no more) in the form of diffuse gas between the galaxies. That's a total of 0.2 atoms per cubic metre, twenty-five times less than the critical density of five atoms per cubic metre that would be needed for gravity to bring cosmic expansion to a halt.
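Rees's tally uses only round numbers and can be re-run directly. A minimal sketch with his own figures (the variable names are illustrative):

```python
# Rees's round figures, in atoms per cubic metre.
CRITICAL_DENSITY = 5.0                 # "about five atoms in each cubic metre"
stars_spread_out = 1.0 / 10.0          # "one atom in every ten cubic metres"
diffuse_gas = stars_spread_out         # "about as much again" between galaxies

observed = stars_spread_out + diffuse_gas
shortfall = CRITICAL_DENSITY / observed

print(f"observed ~ {observed:.1f} atoms/m^3, {shortfall:.0f}x below critical")
```

This reproduces the 0.2 atoms per cubic metre and the factor of twenty-five.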

https://3lib.net/book/981612/c97295

3. Hubble constant H0

The Hubble constant is the present rate of expansion of the universe, which astronomers determine by measuring the distances and redshifts of galaxies.

Gribbin: Cosmic Coincidences 1989

So our existence tells us that the Universe must have expanded, and be expanding, neither too fast nor too slow, but at just the "right" rate to allow elements to be cooked in stars. This may not seem a particularly impressive insight. After all, perhaps there is a large range of expansion rates that qualify as "right" for stars like the Sun to exist. But when we convert the discussion into the proper description of the Universe, Einstein's mathematical description of space and time, and work backwards to see how critical the expansion rate must have been at the time of the Big Bang, we find that the Universe is balanced far more crucially than the metaphorical knife edge. If we push back to the earliest time at which our theories of physics can be thought to have any validity, the implication is that the relevant number, the so-called "density parameter," was set, in the beginning, with an accuracy of 1 part in 10^60 . Changing that parameter, either way, by a fraction given by a decimal point followed by 60 zeroes and a 1, would have made the Universe unsuitable for life as we know it. The implications of this finest of finely tuned cosmic coincidences form the heart of this book.

Hawking A Brief History of Time 1996

If the rate of expansion one second after the big bang had been smaller by even one part in a hundred thousand million million, the universe would have recollapsed before it ever reached its present size.

Ethan Siegel The Universe Really Is Fine-Tuned, And Our Existence Is The Proof Dec 19, 2019

On the one hand, we have the expansion rate that the Universe had initially, close to the Big Bang. On the other hand, we have the sum total of all the forms of matter and energy that existed at that early time as well, including:

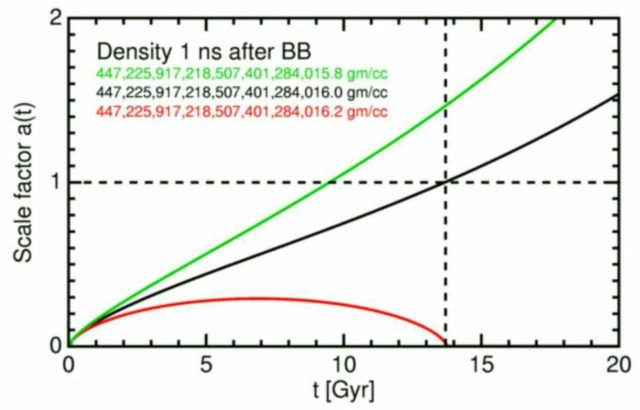

radiation, neutrinos, normal matter, dark matter, antimatter, and dark energy. Einstein's General Theory of Relativity gives us an intricate relationship between the expansion rate and the sum total of all the different forms of energy in it. If we know what your Universe is made of and how quickly it starts expanding initially, we can predict how it will evolve with time, including what its fate will be. A Universe with too much matter-and-energy for its expansion rate will recollapse in short order; a Universe with too little will expand into oblivion before it's possible to even form atoms. Yet not only has our Universe neither recollapsed nor failed to yield atoms, but even today, those two sides of the equation appear to be perfectly in balance. If we extrapolate this back to a very early time — say, one nanosecond after the hot Big Bang — we find that not only do these two sides have to balance, but they have to balance to an extraordinary precision. The Universe's initial expansion rate and the sum total of all the different forms of matter and energy in the Universe not only need to balance, but they need to balance to more than 20 significant digits. It's like guessing the same 1-to-1,000,000 number as me three times in a row, and then predicting the outcome of 16 consecutive coin-flips immediately afterwards.
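Siegel's analogy can be checked with exact integer arithmetic. A minimal sketch, assuming each of the three guesses is uniform over 1,000,000 values and each of the 16 coin flips is fair:

```python
# Three correct guesses of a 1-to-1,000,000 number, then 16 correct coin flips.
# Integer arithmetic keeps the count of equally likely outcomes exact.
odds_against = (1_000_000 ** 3) * (2 ** 16)

print(f"1 in {odds_against:,}")     # exact count
print(f"~1 in {odds_against:.2e}")  # about 6.55e+22
```

The combined odds come to about 1 in 6.6 × 10^22, a number with 23 digits, which fits Siegel's "more than 20 significant digits".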

If the Universe had just a slightly higher matter density (red), it would be closed and have recollapsed already; if it had just a slightly lower density (and negative curvature), it would have expanded much faster and become much larger. The Big Bang, on its own, offers no explanation as to why the initial expansion rate at the moment of the Universe's birth balances the total energy density so perfectly, leaving no room for spatial curvature at all and a perfectly flat Universe. Our Universe appears perfectly spatially flat, with the initial total energy density and the initial expansion rate balancing one another to at least some 20+ significant digits.

The odds of this occurring naturally, if we consider all the random possibilities we could have imagined, are astronomically small. It's possible, of course, that the Universe really was born this way: with a perfect balance between all the stuff in it and the initial expansion rate. It's possible that we see the Universe the way we see it today because this balance has always existed. But if that's the case, we'd hate to simply take that assumption at face value. In science, when faced with a coincidence that we cannot easily explain, the idea that we can blame it on the initial conditions of our physical system is akin to giving up on science. It's far better, from a scientific point of view, to attempt to come up with a reason for why this coincidence might occur. One option — the worst option, if you ask me — is to claim that there are a near-infinite number of possible outcomes, and a near-infinite number of possible Universes that contain those outcomes. Only in those Universes where our existence is possible can we exist, and therefore it's not surprising that we exist in a Universe that has the properties that we observe.

If you read that and your reaction was, "what kind of circular reasoning is that," congratulations. You're someone who won't be suckered in by arguments based on the anthropic principle. It might be true that the Universe could have been any way at all and that we live in one where things are the way they are (and not some other way), but that doesn't give us anything scientific to work with. Instead, it's arguable that resorting to anthropic reasoning means we've already given up on a scientific solution to the puzzle. The fact that our Universe has such a perfect balance between the expansion rate and the energy density — today, yesterday, and billions of years ago — is a clue that our Universe really is finely tuned. With robust predictions about the spectrum, entropy, temperature, and other properties concerning the density fluctuations that arise in inflationary scenarios, and the verification found in the Cosmic Microwave Background and the Universe's large-scale structure, we even have a viable solution. Further tests will determine whether our best conclusion at present truly provides the ultimate answer, but we cannot just wave the problem away. The Universe really is finely tuned, and our existence is all the proof we need.

https://www.forbes.com/sites/startswithabang/2019/12/19/the-universe-really-is-fine-tuned-and-our-existence-is-the-proof/?sh=73017fbf4b87

4. Lambda

the cosmological constant

The letter Λ (lambda) represents the cosmological constant, which is currently associated with a vacuum energy or dark energy in empty space that is used to explain the contemporary accelerating expansion of space against the attractive effects of gravity.

https://en.wikipedia.org/wiki/Lambda-CDM_model

Λ, commonly known as the cosmological constant, describes the ratio of the density of dark energy to the critical energy density of the universe, given certain reasonable assumptions such as that dark energy density is a constant. In terms of Planck units, and as a natural dimensionless value, Λ is on the order of 10^-122. This is so small that it has no significant effect on cosmic structures that are smaller than a billion light-years across. A slightly larger value of the cosmological constant would have caused space to expand rapidly enough that stars and other astronomical structures would not be able to form.

https://en.wikipedia.org/wiki/Fine-tuned_universe#cite_note-discover_nov_2000_cover_story-16

Λ (lambda) is the newest addition to the list, discovered in 1998. It describes the strength of a previously unsuspected force, a kind of cosmic antigravity, that controls the expansion of the universe. Fortunately, it is very small, with no discernible effect on cosmic structures that are smaller than a billion light-years across. If the force were stronger, it would have stopped stars and galaxies, and life, from forming.

https://web.archive.org/web/20140722210250/http://discovermagazine.com/2000/nov/cover/

Another mystery is the smallness of the cosmological constant. First of all, the Planck scale is the only natural energy scale of gravitation: $m_{Pl} = (\hbar c/G_N)^{1/2} = 1.2 \times 10^{19}\ \mathrm{GeV}/c^2$. Compared to this natural scale, the cosmological constant or dark energy density Λ is tiny: $\Lambda \sim (10\ \mathrm{meV})^4 \sim (10^{-30}\, m_{Pl})^4 = 10^{-120}\, m_{Pl}^4$. The cosmological constant is also much smaller than expected from the vacuum expectation value of the Higgs field, which like the inflaton field or dark energy is an omnipresent scalar field: $\langle\Phi^4\rangle \sim m_H^4 \sim (100\ \mathrm{GeV})^4 \sim 10^{52}\,\Lambda$. As observed by Weinberg in 1987, there is an “anthropic” upper bound on the cosmological constant Λ. He argued “that in universes that do not recollapse, the only such bound on Λ is that it should not be so large as to prevent the formation of gravitationally bound states.”
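The orders of magnitude in this paragraph can be checked directly. A minimal sketch, assuming CODATA values for $\hbar$, $c$ and $G_N$ and the round scales quoted in the text (10 meV for dark energy, 100 GeV for the Higgs). Energy densities are compared as fourth powers of energy scales, as in natural units:

```python
import math

# CODATA-style SI constants (hard-coded assumptions for this sketch).
HBAR = 1.054571817e-34          # J*s
C = 299_792_458.0               # m/s
G_N = 6.674e-11                 # m^3 kg^-1 s^-2
JOULES_PER_EV = 1.602176634e-19

# Planck mass m_Pl = (hbar c / G_N)^(1/2), converted to an energy in eV.
m_pl_kg = math.sqrt(HBAR * C / G_N)
m_pl_ev = m_pl_kg * C**2 / JOULES_PER_EV

# Energy scales quoted in the text, in eV.
dark_energy_scale_ev = 10e-3    # ~10 meV
higgs_scale_ev = 100e9          # ~100 GeV

# Compare fourth powers (energy densities in natural units) via log10.
log_lambda_over_mpl4 = 4 * math.log10(dark_energy_scale_ev / m_pl_ev)
log_higgs_over_lambda = 4 * math.log10(higgs_scale_ev / dark_energy_scale_ev)

print(f"m_Pl ~ {m_pl_ev / 1e9:.2e} GeV")
print(f"Lambda / m_Pl^4 ~ 1e{log_lambda_over_mpl4:.0f}")
print(f"m_H^4 / Lambda ~ 1e{log_higgs_over_lambda:.0f}")
```

It reproduces $m_{Pl} \approx 1.2 \times 10^{19}$ GeV, a ratio near $10^{-120}$, and the factor of $10^{52}$.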

https://www.proquest.com/docview/2124812833

If the state of the hot dense matter immediately after the Big Bang had been ever so slightly different, then the Universe would either have rapidly recollapsed, or would have expanded far too quickly into a chilling, eternal void. Either way, there would have been no ‘structure’ in the Universe in the form of stars and galaxies.

https://link.springer.com/content/pdf/10.1007%2F978-3-319-26300-7.pdf

Neil A. Manson: God and Design: The Teleological Argument and Modern Science, page 180

The cosmological constant

The smallness of the cosmological constant is widely regarded as the single greatest problem confronting current physics and cosmology. The cosmological constant is a term in Einstein’s equation that, when positive, acts as a repulsive force, causing space to expand and, when negative, acts as an attractive force, causing space to contract. Apart from some sort of extraordinarily precise fine-tuning or new physical principle, today’s theories of fundamental physics and cosmology lead one to expect that the vacuum (that is, the state of space-time free of ordinary matter fields) has an extraordinarily large energy density. This energy density, in turn, acts as an effective cosmological constant, thus leading one to expect an extraordinarily large effective cosmological constant, one so large that it would, if positive, cause space to expand at such an enormous rate that almost every object in the Universe would fly apart, and would, if negative, cause the Universe to collapse almost instantaneously back in on itself. This would clearly make the evolution of intelligent life impossible. What makes it so difficult to avoid postulating some sort of highly precise fine-tuning of the cosmological constant is that almost every type of field in current physics (the electromagnetic field, the Higgs fields associated with the weak force, the inflaton field hypothesized by inflationary cosmology, the dilaton field hypothesized by superstring theory, and the fields associated with elementary particles such as electrons) contributes to the vacuum energy. Although no one knows how to calculate the energy density of the vacuum, when physicists make estimates of the contribution to the vacuum energy from these fields, they get values of the energy density anywhere from 10^53 to 10^120 times higher than its maximum life-permitting value, $\rho_{max}$. (Here, $\rho_{max}$ is expressed in terms of the energy density of empty space.)

https://3lib.net/book/733035/b853a0

Steven Weinberg Department of Physics, University of Texas

There are now two cosmological constant problems. The old cosmological constant problem is to understand in a natural way why the vacuum energy density $\rho_V$ is not very much larger. We can reliably calculate some contributions to $\rho_V$, like the energy density in fluctuations in the gravitational field at graviton energies nearly up to the Planck scale, which is larger than is observationally allowed by some 120 orders of magnitude. Such terms in $\rho_V$ can be cancelled by other contributions that we can’t calculate, but the cancellation then has to be accurate to 120 decimal places.

When one calculates, based on known principles of quantum mechanics, the "vacuum energy density" of the universe, focusing on the electromagnetic force, one obtains the incredible result that empty space "weighs" 10^93 g per cubic centimetre (cc). The actual average mass density of the universe, 10^-28 g per cc, differs by 120 orders of magnitude from theory. Physicists, who have fretted over the cosmological constant paradox for years, have noted that calculations such as the above involve only the electromagnetic force, and so perhaps when the contributions of the other known forces are included, all terms will cancel out to exactly zero, as a consequence of some unknown fundamental principle of physics. But these hopes were shattered with the 1998 discovery that the expansion of the universe is accelerating, which implied that the cosmological constant must be slightly positive. This meant that physicists were left to explain the startling fact that the positive and negative contributions to the cosmological constant cancel to 120-digit accuracy, yet fail to cancel beginning at the 121st digit.
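To make "cancel to 120-digit accuracy" concrete: ordinary double-precision floats carry only about 16 significant digits, so they cannot even represent such a cancellation. The sketch below is a toy illustration with made-up round numbers (a bare term $10^{121}$ times the observed value, in line with the roughly 120 orders of magnitude quoted above), not a physical calculation:

```python
from decimal import Decimal, getcontext

# Toy numbers (assumptions): a "bare" vacuum-energy term 10^121 times the
# observed value, cancelled by a counter-term that misses by exactly 1 unit.
BARE_TERM = 10**121
OBSERVED = 1

# Double-precision floats hold ~16 significant digits, so the leftover unit is lost.
float_residual = (float(BARE_TERM) + OBSERVED) - float(BARE_TERM)

# Decimal with 130 significant digits can carry all 122 digits of the sum.
getcontext().prec = 130
exact_residual = (Decimal(BARE_TERM) + OBSERVED) - Decimal(BARE_TERM)

print(float_residual)  # 0.0
print(exact_residual)  # 1
```

The point of the comparison is only that the surviving residual sits 121 digits below the terms being cancelled.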

Curiously, this observation is in accord with a prediction made by Nobel laureate and physicist Steven Weinberg in 1987, who argued from basic principles that the cosmological constant must be zero to within one part in roughly 10^123 (and yet be nonzero), or else the universe either would have dispersed too fast for stars and galaxies to have formed, or else would have recollapsed upon itself long ago. In short, numerous features of our universe seem fantastically fine-tuned for the existence of intelligent life. While some physicists still hold out for a "natural" explanation, many others are now coming to grips with the notion that our universe is profoundly unnatural, with no good explanation.

L. Susskind et al. Disturbing Implications of a Cosmological Constant 14 November 2002

“How far could you rotate the dark-energy knob before the “Oops!” moment? If rotating it…by a full turn would vary the density across the full range, then the actual knob setting for our Universe is about 10^-123 of a turn away from the halfway point. That means that if you want to tune the knob to allow galaxies to form, you have to get the angle by which you rotate it right to 123 decimal places!

That means that the probability that our universe contains galaxies is akin to exactly 1 possibility in 10^123. Unlikely doesn’t even begin to describe these odds. There are “only” 10^81 atoms in the observable universe, after all.”

The low entropy starting point is the ultimate reason that the universe has an arrow of time, without which the second law would not make sense. However, there is no universally accepted explanation of how the universe got into such a special state. Far from providing a solution to the problem, we will be led to a disturbing crisis. Present cosmological evidence points to an inflationary beginning and an accelerated de Sitter end. Most cosmologists accept these assumptions, but there are still major unresolved debates concerning them. For example, there is no consensus about initial conditions. Neither string theory nor quantum gravity provide a consistent starting point for a discussion of the initial singularity or why the entropy of the initial state is so low. High scale inflation postulates an initial de Sitter starting point with Hubble constant roughly 10^-5 times the Planck mass. This implies an initial holographic entropy of about 10^10, which is extremely small by comparison with today’s visible entropy. Some unknown agent initially started the inflaton high up on its potential, and the rest is history. We are forced to conclude that in a recurrent world like de Sitter space our universe would be extraordinarily unlikely. A possibility is an unknown agent intervened in the evolution, and for reasons of its own restarted the universe in the state of low entropy characterizing inflation.

https://arxiv.org/abs/hep-th/0208013v3
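As a rough check of the "about 10^10" figure, one can use the standard Gibbons-Hawking result that a de Sitter horizon with Hubble rate H carries entropy S = A/4 = π/H² in Planck units (ħ = c = G = k_B = 1). That formula is our addition, not quoted from the paper, and the sketch below simply takes "roughly 10^-5 times the Planck mass" as H = 10^-5.

```python
import math

def de_sitter_entropy(hubble_planck_units: float) -> float:
    """Gibbons-Hawking entropy S = A/4 of a de Sitter horizon,
    with H expressed in Planck units (hbar = c = G = k_B = 1)."""
    horizon_radius = 1.0 / hubble_planck_units       # R = c/H
    horizon_area = 4.0 * math.pi * horizon_radius**2  # A = 4 pi R^2
    return horizon_area / 4.0                         # S = pi / H^2

# Assumption: "roughly 10^-5 times the Planck mass" taken as H = 1e-5.
S_initial = de_sitter_entropy(1e-5)
print(f"S ~ {S_initial:.2e}")                      # about 3.14e+10
print(f"log10(S) ~ {math.log10(S_initial):.1f}")  # about 10.5
```

The result, a few times 10^10, matches the order of magnitude stated in the quote.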

5. The Amplitude of Primordial Fluctuations Q

Q, the amplitude of primordial fluctuations, is one of Martin Rees’ Just Six Numbers. In our universe, its value is Q ≈ 2 × 10^-5, meaning that in the early universe the density at any point was typically within 1 part in 100,000 of the mean density. What if Q were different? “If Q were smaller than 10^-6, gas would never condense into gravitationally bound structures at all, and such a universe would remain forever dark and featureless, even if its initial ‘mix’ of atoms, dark energy and radiation were the same as our own. On the other hand, a universe where Q were substantially larger than 10^-5, where the initial ‘ripples’ were replaced by large-amplitude waves, would be a turbulent and violent place. Regions far bigger than galaxies would condense early in its history. They wouldn’t fragment into stars but would instead collapse into vast black holes, each much heavier than an entire cluster of galaxies in our universe . . . Stars would be packed too close together and buffeted too frequently to retain stable planetary systems.” (Rees, 1999, pg. 115)

https://arxiv.org/pdf/1112.4647.pdf

Q represents the amplitude of complex irregularities or ripples in the expanding universe that seed the growth of such structures as planets and galaxies. It is a ratio equal to 1/100,000. If the ratio were smaller, the universe would be a lifeless cloud of cold gas. If it were larger, "great gobs of matter would have condensed into huge black holes," says Rees. Such a universe would be so violent that no stars or solar systems could survive.

https://www.discovermagazine.com/the-sciences/why-is-there-life

Martin Rees Just Six Numbers: The Deep Forces that Shape the Universe 1999 page 103

Why Q is about 10^-5 is still a mystery. But its value is crucial: were it much smaller, or much bigger, the 'texture' of the universe would be quite different, and less conducive to the emergence of life forms. If Q were smaller than 10^-5 but the other cosmic numbers were unchanged, aggregations in the dark matter would take longer to develop and would be smaller and looser. The resultant galaxies would be anaemic structures, in which star formation would be slow and inefficient, and 'processed' material would be blown out of the galaxy rather than being recycled into new stars that could form planetary systems. If Q were smaller than 10^-6, gas would never condense into gravitationally bound structures at all, and such a universe would remain forever dark and featureless, even if its initial 'mix' of atoms, dark matter and radiation were the same as in our own. On the other hand, a universe where Q were substantially larger than 10^-5, where the initial 'ripples' were replaced by large-amplitude waves, would be a turbulent and violent place. Regions far bigger than galaxies would condense early in its history. They wouldn't fragment into stars but would instead collapse into vast black holes, each much heavier than an entire cluster of galaxies in our universe. Any surviving gas would get so hot that it would emit intense X-rays and gamma rays. Galaxies (even if they managed to form) would be much more tightly bound than the actual galaxies in our universe. Stars would be packed too close together and buffeted too frequently to retain stable planetary systems. (For similar reasons, solar systems are not able to exist very close to the centre of our own galaxy, where the stars are in a close-packed swarm compared with our less-central locality.) The fact that Q is 1/100,000 incidentally also makes our universe much easier for cosmologists to understand than would be the case if Q were larger.
A small Q guarantees that the structures are all small compared with the horizon, and so our field of view is large enough to encompass many independent patches each big enough to be a fair sample. If Q were much bigger, superclusters would themselves be clustered into structures that stretched up to the scale of the horizon (rather than, as in our universe, being restricted to about one per cent of that scale). It would then make no sense to talk about the average 'smoothed-out' properties of our observable universe, and we wouldn't even be able to define numbers such as Ω. The smallness of Q, without which cosmologists would have made no progress, seemed until recently a gratifying contingency. Only now are we coming to realize that this isn't just a convenience for cosmologists, but that life couldn't have evolved if our universe didn't have this simplifying feature.

https://3lib.net/book/981612/c97295
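Rees's verbal argument can be summarised as a small lookup of regimes. This is a toy illustration, not a physical model: the 10^-6 and 10^-5 thresholds come from the passages above, while the upper cutoff is our assumption, a hypothetical stand-in for "substantially larger than 10^-5" (the text gives no exact value).

```python
def rees_regime(Q: float, upper_cutoff: float = 1e-4) -> str:
    """Toy summary of the regimes Rees describes in words (not a physical model).

    The 1e-6 and 1e-5 thresholds come from the quoted passages; upper_cutoff is
    a hypothetical stand-in for "substantially larger than 1e-5".
    """
    if Q < 1e-6:
        return "dark and featureless: gas never condenses into bound structures"
    if Q < 1e-5:
        return "anaemic galaxies: slow, inefficient star formation"
    if Q <= upper_cutoff:
        return "galaxies, stars and planetary systems can form"
    return "violent: vast black holes, no stable planetary systems"

# Our universe (Q of roughly 1e-5 to 2e-5) versus much smaller and much larger values.
for q in (1e-7, 5e-6, 2e-5, 1e-3):
    print(f"Q = {q:.0e}: {rees_regime(q)}")
```

Changing `upper_cutoff` shows how much of the "life-permitting" band depends on where one draws the fuzzy upper line.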

6. Matter/Antimatter Asymmetry

Elisabeth Vangioni Cosmic origin of the chemical elements rarety in nuclear astrophysics 23 November 2017

Baryogenesis: Due to the matter/antimatter asymmetry (1 + 10^9 protons compared to 10^9 antiprotons), only one proton for every 10^9 photons remained after annihilation. The theoretical prediction of antimatter made by Paul Dirac in 1931 is one of the most impressive discoveries (Dirac 1934). Antimatter is made of antiparticles that have the same (e.g. mass) or opposite (e.g. electric charge) characteristics as particles, but that annihilate with them, leaving at the end mostly photons. A symmetry between matter and antimatter led him to suggest that ‘maybe there exists a completely new Universe made of antimatter’. Now we know that antimatter exists but that there are very few antiparticles in the Universe. So antiprotons (an antiproton is a proton but with a negative electric charge) are too rare to make any macroscopic objects. In this context, the challenge is to explain why antimatter is so rare (almost absent) in the observable Universe. Baryogenesis (i.e. the generation of protons and neutrons AND the elimination of their corresponding antiparticles), implying the emergence of the hydrogen nuclei, is central to cosmology. Unfortunately, the problem is essentially unsolved, and only general conditions of baryogenesis were well posed by A. Sakharov a long time ago (Sakharov 1979). Baryogenesis requires at least a departure from thermal equilibrium and the breaking of some fundamental symmetries, leading to a strong observed matter–antimatter asymmetry at the level of 1 proton per 1 billion photons. Mechanisms for the generation of the matter–antimatter asymmetry strongly depend on the reheating temperature at the end of inflation, the maximal temperature reached in the early Universe. Forthcoming results from the Large Hadron Collider (LHC) at CERN in Geneva, the BABAR collaboration, astrophysical observations and the Planck satellite mission will significantly constrain baryogenesis and thereby provide valuable information about the very early hot Universe.

https://www.tandfonline.com/doi/pdf/10.1080/21553769.2017.1411838?needAccess=true

https://reasonandscience.catsboard.com/t1935-matter-antimatter-asymmetry
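The bookkeeping behind "only one proton survives" is simple arithmetic. A minimal sketch with exact fractions, using the (1 + 10^9) protons per 10^9 antiprotons from the Vangioni passage: every antiproton annihilates one proton, so out of roughly two billion particles a single proton remains, a matter excess of about one part in a billion. How many photons each annihilation ultimately yields is not modelled here.

```python
from fractions import Fraction

# Numbers from the passage: (1 + 10^9) protons for every 10^9 antiprotons.
protons = 10**9 + 1
antiprotons = 10**9

# Each antiproton annihilates one proton; the excess survives.
survivors = protons - antiprotons

# Fractional excess of matter over all (anti)protons, computed exactly.
asymmetry = Fraction(protons - antiprotons, protons + antiprotons)

print(survivors)                      # 1
print(Fraction(survivors, protons))   # 1/1000000001 of the protons survive
print(float(asymmetry))               # about 5e-10
```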

7. The low-entropy state of the universe

Roger Penrose The Emperor's New Mind: Concerning Computers, Minds, and the Laws of Physics page 179 1994

This figure will give us an estimate of the total phase-space volume V available to the Creator, since this entropy should represent the logarithm of the volume of the (easily) largest compartment. Since 10^123 is the logarithm of the volume, the volume must be the exponential of 10^123, i.e. V = 10^(10^123).

https://3lib.net/book/464216/95ca46
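Penrose's step from "the logarithm is 10^123" to the volume itself can be made concrete. The sketch below (our illustration) keeps everything in log space: it shows that V cannot even be held in a double-precision float, and counts the digits V would need if written out. The particle count of 10^80 is an assumption (the commonly quoted order of magnitude); the comparison holds for any count far below 10^123.

```python
import math

# Penrose's estimate: log10(V) = 10^123, so V = 10^(10^123).
log10_V = 10**123  # exact Python integer

# V itself overflows a 64-bit float.
try:
    math.pow(10.0, float(log10_V))
    overflowed = False
except OverflowError:
    overflowed = True

# Written out in decimal, 10^N has N + 1 digits.
digits_of_V = log10_V + 1

# Assumption: roughly 10^80 particles in the observable universe.
particles = 10**80

print(overflowed)                 # True
print(digits_of_V // particles)   # 10^43 digits for every particle
```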

The British mathematician Roger Penrose conducted a study of the probability of a universe capable of sustaining life occurring by chance and found the odds to be 1 in 10^10^123 (expressed as 10 to the power of 10 to the power of 123). That is a mind-boggling number. According to probability theory, odds of 1 in 10^50 represent “Zero Probability.” But Dr. Penrose’s calculations place the odds of life emerging as Darwin described it at more than a trillion trillion trillion times less than Zero.

https://pt.3lib.net/book/2708494/cd49d9

Roger Penrose BEFORE THE BIG BANG: AN OUTRAGEOUS NEW PERSPECTIVE AND ITS IMPLICATIONS FOR PARTICLE PHYSICS 2006

The Second Law of thermodynamics is one of the most fundamental principles of physics. The term “entropy” refers to an appropriate measure of disorder or lack of “specialness” of the state of the universe.

https://accelconf.web.cern.ch/e06/papers/thespa01.pdf

Feynman: “[I]t is necessary to add to the physical laws the hypothesis that in the past the universe was more ordered” (1994)

https://academiccommons.columbia.edu/doi/10.7916/D8T72R50/download

Luke A. Barnes The Fine-Tuning of the Universe for Intelligent Life June 11, 2012

The problem of the apparently low entropy of the universe is one of the oldest problems of cosmology. The fact that the entropy of the universe is not at its theoretical maximum, coupled with the fact that entropy cannot decrease, means that the universe must have started in a very special, low entropy state. The initial state of the universe must be the most special of all, so any proposal for the actual nature of this initial state must account for its extreme specialness.

https://arxiv.org/pdf/1112.4647.pdf

The Entropy of the Early Universe

The low-entropy condition of the early universe is extreme in both respects: the universe is a very big system, and it was once in a very low entropy state. The odds of that happening by chance are staggeringly small. Roger Penrose, a mathematical physicist at Oxford University, estimates the probability to be roughly 1/10^10^123. That number is so small that if it were written out in ordinary decimal form, the decimal would be followed by more zeros than there are particles in the universe! It is even smaller than the ratio of the volume of a proton (a subatomic particle) to the entire volume of the visible universe. Imagine filling the whole universe with lottery tickets the size of protons, then choosing one ticket at random. Your chance of winning that lottery is much higher than the probability of the universe beginning in a state with such low entropy! Huw Price, a philosopher of science at Cambridge, has called the low-entropy condition of the early universe “the most underrated discovery in the history of physics.”

http://www.faithfulscience.com/energy-and-entropy/entropy-of-the-early-universe.html
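The proton-lottery comparison can be checked roughly. This sketch assumes an observable-universe radius of 4.40×10^26 m (the Wikipedia figure cited further down in this post) and a proton radius of about 0.84×10^-15 m; both are treated as perfect spheres. The result, about 10^125 tickets, gives odds of 1 in 10^125, which are still astronomically better than 1 in 10^(10^123).

```python
import math

def sphere_volume(radius_m: float) -> float:
    return 4.0 / 3.0 * math.pi * radius_m**3

# Assumptions: observable-universe radius 4.40e26 m and a proton
# charge radius of about 0.84e-15 m.
universe = sphere_volume(4.40e26)
proton = sphere_volume(0.84e-15)

tickets = universe / proton   # number of proton-sized lottery tickets
print(f"{tickets:.1e}")       # about 1.4e+125
print(f"log10(tickets) = {math.log10(tickets):.1f}")
```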

There are roughly 6.51×10^22 copper atoms in one cubic centimeter.

https://math.stackexchange.com/questions/287737/number-of-copper-atoms-in-1-mboxcm3-of-copper

If we take that the distance from Earth to the edge of the observable universe is about 14.26 gigaparsecs (46.5 billion light-years or 4.40×10^26 m) in any direction, and assuming that space is roughly flat (in the sense of being a Euclidean space), this size corresponds to a volume of about 3.566×10^80 m³.

https://en.wikipedia.org/wiki/Observable_universe

That means there would be roughly 10^109 copper atoms if we filled the entire volume of the observable universe (about 3.566×10^86 cm³) with atoms without leaving any space. Getting the right initial low entropy state of our universe is vastly less likely than picking one red atom amongst all these atoms. By chance, or design?
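The arithmetic in this paragraph can be reproduced in a few lines, assuming a perfectly flat (Euclidean) sphere of radius 4.40×10^26 m and reading the 6.51×10^22 figure as atoms per cubic centimetre, as in the linked Stack Exchange question. The unit conversion matters: one cubic metre holds 10^6 cubic centimetres.

```python
import math

ATOMS_PER_CM3 = 6.51e22  # figure from the linked question, read as per cm^3
RADIUS_M = 4.40e26       # distance to the edge of the observable universe
CM3_PER_M3 = 1e6         # unit conversion: 1 m^3 = 10^6 cm^3

volume_m3 = 4.0 / 3.0 * math.pi * RADIUS_M**3   # about 3.57e80 m^3
atoms = volume_m3 * CM3_PER_M3 * ATOMS_PER_CM3

print(f"volume ~ {volume_m3:.3e} m^3")
print(f"atoms  ~ {atoms:.1e}")                   # about 2.3e+109
```

Even with 10^109 atoms, a single draw succeeds with probability 10^-109, which is why the odds of 1 in 10^(10^123) are far worse than the red-atom picture.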

JAY W. RICHARDS LIST OF FINE-TUNING PARAMETERS

Besides physical constants, there are initial or boundary conditions, which describe the conditions present at the beginning of the universe. Initial conditions are independent of the physical constants. One way of summarizing the initial conditions is to speak of the extremely low entropy (that is, highly ordered) initial state of the universe. This refers to the initial distribution of mass-energy. In The Road to Reality, physicist Roger Penrose estimates that the odds of the initial low entropy state of our universe occurring by chance alone are on the order of 1 in 10^10^123. This ratio is vastly beyond our powers of comprehension. Since we know a life-bearing universe is intrinsically interesting, this ratio should be more than enough to raise the question: Why does such a universe exist? If someone is unmoved by this ratio, then they probably won’t be persuaded by additional examples of fine-tuning. In addition to initial conditions, there are a number of other, well-known features about the universe that are apparently just brute facts. And these too exhibit a high degree of fine-tuning. Among the fine-tuned (apparently) “brute facts” of nature are the following:

The ratio of masses for protons and electrons—If it were slightly different, building blocks for life such as DNA could not be formed.

The velocity of light—If it were larger, stars would be too luminous. If it were smaller, stars would not be luminous enough.

Mass excess of neutron over proton—if it were greater, there would be too few heavy elements for life. If it were smaller, stars would quickly collapse as neutron stars or black holes.

https://www.discovery.org/m/securepdfs/2018/12/List-of-Fine-Tuning-Parameters-Jay-Richards.pdf

David H Bailey: Is the universe fine-tuned for intelligent life? April 1st, 2017

The overall entropy (disorder) of the universe is, in the words of Lewis and Barnes, “freakishly lower than life requires.” After all, life requires, at most, a galaxy of highly ordered matter to create chemistry and life on a single planet. Physicist Roger Penrose has calculated (see The Emperor's New Mind, pg. 341-344) the odds that the entire universe is as orderly as our galactic neighborhood to be one in 10^10^123, a number whose decimal representation has vastly more zeroes than the number of fundamental particles in the observable universe. Extrapolating back to the big bang only deepens this puzzle.

https://mathscholar.org/2017/04/is-the-universe-fine-tuned-for-intelligent-life/

J. Warner Wallace: Initial Conditions in a Very Low Entropy State July 21, 2014

Entropy represents the amount of disorder in a system. Thus, a high entropy state is highly disordered – think of a messy teenager’s room. Our universe began in an incredibly low entropy state. A more precise definition of entropy is that it represents the number of microscopic states that are macroscopically indistinguishable. An egg has higher entropy once broken because you’re “opening” up many more ways to arrange the molecules. There are more ways of arranging molecules that would still be deemed an omelet than there are ways to arrange the particles in an unbroken egg, where certain molecules are confined to subsets of the space in the egg – such as a membrane or the yolk. Entropy is thus closely associated with probability. If one is randomly arranging molecules, it’s much more likely to choose a high entropy state than a low entropy state. Randomly arranged molecules in an egg would much more likely look like an omelet than an unbroken egg.

It turns out that nearly all arrangements of particles in the early universe would have resulted in a lifeless universe of black holes. Tiny inconsistencies in the particle arrangements would be acted on by gravity to grow in size. A positive feedback results, since the clumps of particles have an even greater gravitational force on nearby particles. Penrose’s analysis shows that in the incredibly dense early universe, most arrangements of particles would have resulted basically in nothing but black holes. Life certainly can’t exist in such a universe because there would be no way to have self-replicating information systems. Possibly the brightest objects in the universe are quasars, which release radiation as bright as some galaxies due to matter falling into a supermassive black hole. The rotation rates near black holes and the extremely high-energy photons would make such a universe non-life-permitting.

Roger Penrose is the first scientist to quantify the fine-tuning necessary to have a low entropy universe to avoid such catastrophes. “In order to produce a universe resembling the one in which we live, the Creator would have to aim for an absurdly tiny volume of the phase space of possible universes, about 1/10^10^123.” This number is incomprehensibly small – it represents 1 chance in 10 to the power of (10 to the power of 123). Writing this number in ordinary notation would require more zeroes than the number of subatomic particles in the observable universe: 10^123 zeroes. Under the assumption of atheism, the particles in our universe would have been arranged randomly or at least not with respect to future implications for intelligent life. Nearly all such arrangements would not have been life-permitting, so this fine-tuning evidence favors theism over atheism. We have a large but finite number of possible original states and rely on well-established statistical mechanics to assess the relevant probability.

The incredibly low entropy state of the initial conditions shows fine-tuning was required to avoid excessive black holes! This fact about the initial conditions also calls into question Smolin’s proposed scenario that universes with differing physical constants might be birthed out of black holes. Smolin suggests the possibility of an almost Darwinian process in which universes that produce more black holes, and therefore more baby universes, come to outnumber those which don’t. But if our universe requires statistically miraculous initial conditions to be life-permitting by avoiding excessive black holes, universes evolving to maximize black hole production would be unlikely to lead to life (even if the evolution of universes were possible)! Furthermore, the skeptic who thinks that black holes suggest a purposeless universe should consider that black holes can, in moderation and kept at distance, be helpful for life. While a universe comprised of mostly black holes would be life-prohibiting, having a large black hole at the center of a galaxy is actually quite helpful for life. Here is a Scientific American article that documents the benefits of Black Holes for life – it summarizes: “the matter-eating beast at the center of the Milky Way may actually account for Earth’s existence and habitability.”

https://crossexamined.org/fine-tuning-initial-conditions-support-life/
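Wallace's egg-and-omelet point, that low-entropy arrangements are rare among random ones, can be illustrated with the textbook two-halves box model (our choice of illustration, not his). N labelled molecules each sit in the left or right half of a box; the number of microstates W for a macrostate is a binomial coefficient, and Boltzmann's entropy is S = k_B ln W.

```python
import math

def microstates(n_particles: int, n_left: int) -> int:
    """Ways to place n_left of n_particles (labelled) in the left half of a box."""
    return math.comb(n_particles, n_left)

N = 100
W_ordered = microstates(N, N)      # every molecule crowded into one half
W_mixed = microstates(N, N // 2)   # evenly spread: the most probable macrostate

# Boltzmann entropy in units of k_B: S = ln W
S_ordered = math.log(W_ordered)    # 0.0: only one way to do it
S_mixed = math.log(W_mixed)        # about 66.8

# Chance that a random arrangement puts every molecule in the left half
p_ordered = W_ordered / 2**N       # 1 / 2^100, about 7.9e-31
print(S_ordered, S_mixed, p_ordered)
```

With only 100 molecules the ordered state is already a 1-in-10^30 event; the same counting, scaled up to the particle content of the universe, underlies Penrose's estimate.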

8. The universe would require 3 dimensions of space, and time, to be life-permitting.

Luke A. Barnes The Fine-Tuning of the Universe for Intelligent Life June 11, 2012

If whatever exists were not such that it is accurately described on macroscopic scales by a model with three space dimensions, then life would not exist. If “whatever works” was four dimensional, then life would not exist, whether the number of dimensions is simply a human invention or an objective fact about the universe.

Anthropic constraints on the dimensionality of spacetime (from Tegmark, 1997).

UNPREDICTABLE: the behavior of your surroundings cannot be predicted using only local, finite-accuracy data, making storing and processing information impossible.

UNSTABLE: no stable atoms or planetary orbits.

TOO SIMPLE: no gravitational force in empty space and severe topological problems for life.

TACHYONS ONLY: energy is a vector, and rest mass is no barrier to particle decay. For example, an electron could decay into a neutron, an antiproton, and a neutrino. Life is perhaps possible in very cold environments.

https://arxiv.org/pdf/1112.4647.pdf
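The UNSTABLE entry has a classic Newtonian version that is easy to check. Assuming, by Gauss's law, that gravity in n space dimensions falls off as 1/r^(n-1), a circular orbit is stable only if it sits at a minimum of the effective potential. For a force k/r^p the curvature there works out to (3 - p)·k/r^(p+1), which is positive only for p < 3, i.e. n = 3. The sketch covers n ≥ 3 only; for fewer dimensions the list above gives a different objection (TOO SIMPLE).

```python
def circular_orbit_curvature(n_space_dims: int, r0: float = 1.0, k: float = 1.0) -> float:
    """Second derivative of the effective potential at a circular orbit of radius r0.

    Assumes Newtonian gravity in n space dimensions, with attractive force per
    unit mass f(r) = k / r**p, p = n - 1 (Gauss's law). With L^2 = r0^3 f(r0),
    V_eff''(r0) = 3 L^2 / r0^4 + f'(r0) = (3 - p) * k / r0**(p + 1).
    """
    p = n_space_dims - 1
    return (3 - p) * k / r0 ** (p + 1)

def has_stable_orbits(n_space_dims: int) -> bool:
    # A circular orbit is stable only at a strict minimum of V_eff.
    return circular_orbit_curvature(n_space_dims) > 0

for n in (3, 4, 5):
    print(n, has_stable_orbits(n))
```

The analytic curvature is used instead of a numerical derivative so that the marginal case n = 4 (curvature exactly zero) is not blurred by rounding.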

Lee Smolin wrote in his 2006 book The Trouble with Physics:

We physicists need to confront the crisis facing us. A scientific theory [the multiverse/ Anthropic Principle/ string theory paradigm] that makes no predictions and therefore is not subject to experiment can never fail, but such a theory can never succeed either, as long as science stands for knowledge gained from rational argument borne out by evidence.

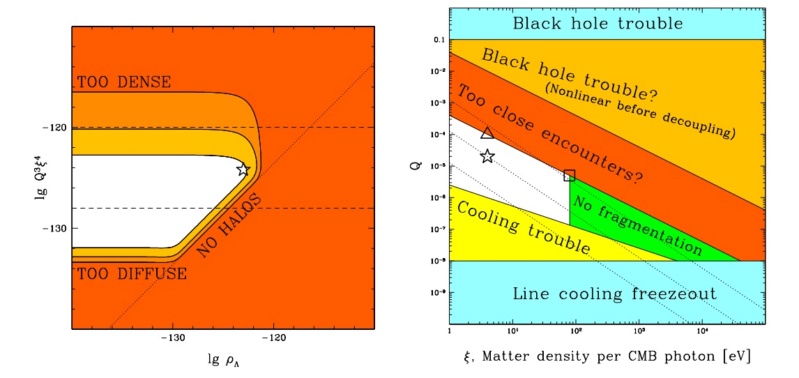

Anthropic limits on some cosmological variables: the cosmological constant Λ (expressed as an energy density ρΛ in Planck units), the amplitude of primordial fluctuations Q, and the matter to photon ratio ξ. The white region shows where life can form. The coloured regions show where various life-permitting criteria are not fulfilled,

The dramatic change is because we have changed the force exponentially.

https://3lib.net/book/5240658/bd3f0d

John M. Kinson The Big Bang: Does it point to God ? July 9, 2021

http://www.godandscience.info/gs/new/bigbang.html

Ethan Siegel Ask Ethan: What Was The Entropy Of The Universe At The Big Bang? Apr 15, 2017

https://www.forbes.com/sites/startswithabang/2017/04/15/ask-ethan-what-was-the-entropy-of-the-universe-at-the-big-bang/?sh=113b77237280

https://evo2.org/big-bang-precisely-planned/

http://aeon.co/magazine/science/why-does-the-universe-appear-fine-tuned-for-life/

https://phys.org/news/2014-04-science-philosophy-collide-fine-tuned-universe.html

Three Cosmological Parameters
