1. The speed of light

Because the speed of light in a vacuum did not change across numerous repeated experiments to measure it, it has been accepted as a constant. Why does it not change? Nobody knows. It has that intrinsic property without scientific explanation.

Michael Guillen, PhD, Believing Is Seeing: A Physicist Explains How Science Shattered His Atheism and Revealed the Necessity of Faith

Suppose a light quantum streaks across your room. Standing at the door with a souped-up radar gun, you clock it going, as expected, 299,792,458 meters per second. (Obviously I’m ignoring that your room is not a vacuum. But this simplification does not affect what I’m explaining.) Now, how about to someone driving by at 1,000,000,000 mph? Here’s the shocker: The light quantum will still seem to be traveling at 299,792,458 meters per second. Unlike the speed of a car or anything else in the universe, the speed of light doesn’t depend on one’s point of view; it’s the same for everyone, everywhere, always. It’s an absolute truth, the only speed in the universe with that supreme status, for reasons, mind you, we do not understand. It’s a mystery.
https://3lib.net/book/17260619/6aa859

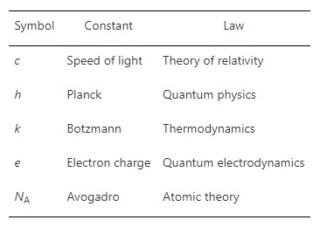

Dr. Christopher S. Baird: Is the reason that nothing can go faster than light because we have not tried hard enough? July 20, 2017

If you look at the equations which are at the core of Einstein's theories of relativity, you find that as you approach the speed of light, your spatial dimension in the forward direction shrinks down to nothing and your clock slows to a stop. A reference frame with zero width and with no progression in time is really a reference frame that does not exist. Therefore, this tells us that nothing can ever go faster than the speed of light, for the simple reason that space and time do not actually exist beyond this point. Because the concept of "speed" requires measuring a certain amount of distance traveled in space during a certain period of time, the concept of speed does not even physically exist beyond the speed of light. In fact, the phrase "faster than light" is physically meaningless. It's like saying "darker than black."
https://www.wtamu.edu/~cbaird/sq/2017/07/20/is-the-reason-that-nothing-can-go-faster-than-light-because-we-have-not-tried-hard-enough/

Professor David Wiltshire: Why is the speed of light what it is? 5 May 2017

Why is the speed of light in vacuum a universally constant finite value? The answer to this is related to the fact that the speed of light in vacuum, c, is an upper bound to all possible locally measured speeds of any object. There are only two possibilities for the laws of physics: (i) the relativity of Galileo and Newton in which any speed is possible and ideal clocks keep a universal time independent of their motion in space; or (ii) Einstein's special relativity in which there is an upper speed limit. Experiment shows that we live in a Universe obeying the second possibility and that the speed limit is the speed of light in vacuum.

Dr Alfredo Carpineti: Why Is The Speed Of Light In Vacuum A Constant Of Nature? 30 April 2021

Light in a vacuum moves at a constant speed of 299,792,458 meters per second.
So why is it this value? Why is it constant? And why does it pop up everywhere? We don’t have a conclusive answer just yet. It is one of those things we don’t know that hint at the complex machinery of the universe...
https://www.iflscience.com/physics/why-is-the-speed-of-light-in-vacuum-a-constant-of-nature/

Sidney Perkowitz: Light dawns, 18 September 2015

Light travels at around 300,000 km per second. Why not faster? Why not slower? We have now fixed the speed of light in a vacuum at exactly 299,792.458 kilometres per second. Albert Einstein showed that c, the speed of light through a vacuum, is the universal speed limit. According to his special theory of relativity, nothing can move faster. So, thanks to Maxwell and Einstein, we know that the speed of light is connected with a number of other (on the face of it, quite distinct) phenomena in surprising ways. Why this particular speed and not something else? Or, to put it another way, where does the speed of light come from?

Until quantum theory came along, electromagnetism was the complete theory of light. It remains tremendously important and useful, but it raises a question. To calculate the speed of light in a vacuum, Maxwell used empirically measured values for two constants that define the electric and magnetic properties of empty space. Call them, respectively, ε0 and μ0.

The thing is, in a vacuum, it’s not clear that these numbers should mean anything. After all, electricity and magnetism actually arise from the behaviour of charged elementary particles such as electrons. But if we’re talking about empty space, there shouldn’t be any particles in there, should there?

This is where quantum physics enters. In the advanced version called quantum field theory, a vacuum is never really empty. It is the ‘vacuum state’, the lowest energy of a quantum system. It is an arena in which quantum fluctuations produce evanescent energies and elementary particles.

What’s a quantum fluctuation?
Heisenberg’s Uncertainty Principle states that there is always some indefiniteness associated with physical measurements. According to classical physics, we can know exactly the position and momentum of, for example, a billiard ball at rest. But this is precisely what the Uncertainty Principle denies. According to Heisenberg, we can’t accurately know both at the same time. It’s as if the ball quivered or jittered slightly relative to the fixed values we think it has. These fluctuations are too small to make much difference at the human scale; but in a quantum vacuum, they produce tiny bursts of energy or (equivalently) matter, in the form of elementary particles that rapidly pop in and out of existence.

These short-lived phenomena might seem to be a ghostly form of reality. But they do have measurable effects, including electromagnetic ones. That’s because these fleeting excitations of the quantum vacuum appear as pairs of particles and antiparticles with equal and opposite electric charge, such as electrons and positrons. An electric field applied to the vacuum distorts these pairs to produce an electric response, and a magnetic field affects them to create a magnetic response. This behaviour gives us a way to calculate, not just measure, the electromagnetic properties of the quantum vacuum and, from them, to derive the value of c.

In 2010, the physicist Gerd Leuchs and colleagues at the Max Planck Institute for the Science of Light in Germany did just that. They used virtual pairs in the quantum vacuum to calculate the electric constant ε0. Their greatly simplified approach yielded a value within a factor of 10 of the correct value used by Maxwell – an encouraging sign! This inspired Marcel Urban and colleagues at the University of Paris-Sud to calculate c from the electromagnetic properties of the quantum vacuum.
In 2013, they reported that their approach gave the correct numerical value.

The speed of light is, of course, just one of several ‘fundamental’ or ‘universal’ physical constants. These are believed to apply to the entire universe and to remain fixed over time. All these quantities raise a host of unsettling questions. Are they truly constant? In what way are they ‘fundamental’? Why do they have those particular values? What do they really tell us about the physical reality around us?

So, let’s assume that these constants really are constant. Are they fundamental? Are some more fundamental than others? What do we even mean by ‘fundamental’ in this context? One way to approach the issue would be to ask what is the smallest set of constants from which the others can be derived. Sets of two to 10 constants have been proposed, but one useful choice has been just three: h, c and G, collectively representing relativity and quantum theory.

In 1899, Max Planck, who founded quantum physics, examined the relations among h, c and G and the three basic aspects or dimensions of physical reality: space, time, and mass. Every measured physical quantity is defined by its numerical value and its dimensions. We don’t quote c simply as 300,000, but as 300,000 kilometres per second, or 186,000 miles per second, or 0.984 feet per nanosecond. The numbers and units are vastly different, but the dimensions are the same: length divided by time. In the same way, G and h have, respectively, dimensions of [length³/(mass × time²)] and [mass × length²/time]. From these relations, Planck derived ‘natural’ units, combinations of h, c and G that yield a Planck length, mass and time of 1.6 × 10⁻³⁵ metres, 2.2 × 10⁻⁸ kilogrammes, and 5.4 × 10⁻⁴⁴ seconds. Among their admirable properties, these Planck units give insights into quantum gravity and the early Universe.

But some constants involve no dimensions at all.
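The Planck units mentioned here can be checked numerically. The sketch below is a minimal illustration, assuming CODATA 2018 values; one assumption to flag is that the quoted figures (1.6 × 10⁻³⁵ m, 2.2 × 10⁻⁸ kg, 5.4 × 10⁻⁴⁴ s) correspond to the modern convention that uses the reduced Planck constant ħ = h/2π rather than h itself. The function name `planck_units` is hypothetical.

```python
import math

# CODATA 2018 values (SI units)
H = 6.62607015e-34        # Planck constant h, J s (exact)
HBAR = H / (2 * math.pi)  # reduced Planck constant, J s
C = 299_792_458.0         # speed of light in vacuum, m/s (exact)
G = 6.67430e-11           # Newtonian constant of gravitation, m^3 kg^-1 s^-2


def planck_units(hbar: float = HBAR, c: float = C, g: float = G) -> dict[str, float]:
    """Return the Planck length (m), mass (kg) and time (s).

    Each is the combination of hbar, c and G with the right dimensions:
    length = sqrt(hbar G / c^3), mass = sqrt(hbar c / G), time = sqrt(hbar G / c^5).
    """
    return {
        "length_m": math.sqrt(hbar * g / c**3),
        "mass_kg": math.sqrt(hbar * c / g),
        "time_s": math.sqrt(hbar * g / c**5),
    }


if __name__ == "__main__":
    for name, value in planck_units().items():
        print(f"{name}: {value:.2e}")
```

Run as a script, it prints values that round to the 1.6 × 10⁻³⁵ m, 2.2 × 10⁻⁸ kg and 5.4 × 10⁻⁴⁴ s quoted above. Using h instead of ħ would make the length and time larger by a factor of √(2π).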
So-called dimensionless constants are pure numbers, such as the ratio of the proton mass to the electron mass. That is simply the number 1836.2 (which is thought to be a little peculiar because we do not know why it is so large). According to the physicist Michael Duff of Imperial College London, only the dimensionless constants are really ‘fundamental’, because they are independent of any system of measurement. Dimensional constants, on the other hand, ‘are merely human constructs whose number and values differ from one choice of units to the next’.

Perhaps the most intriguing of the dimensionless constants is the fine-structure constant α. It was first determined in 1916, when quantum theory was combined with relativity to account for details or ‘fine structure’ in the atomic spectrum of hydrogen. In the theory, α is the speed of the electron orbiting the hydrogen nucleus divided by c. It has the value 0.0072973525698, or almost exactly 1/137.

Whether it was the ‘hand of God’ or some truly fundamental physical process that formed the constants, it is their apparent arbitrariness that drives physicists mad. Why these numbers? Couldn’t they have been different?
https://aeon.co/essays/why-is-the-speed-of-light-the-speed-of-light

Question: What would happen if the speed of light changed?

Answer: The speed of light c pops up everywhere.

- It's the exchange rate between mass and energy. Lower it, and stars need to burn faster to counteract gravity. Low-mass stars will go out and become degenerate super-planets. High-mass stars will cool, but may also explode if they're helium-burning.
- It's the exchange rate between velocity and time (this is why we call it a speed; its role as the maximum speed possible in space is a consequence of this). Time will run slower or faster if you change c. Distances also change.
- It's the fundamental constant of electromagnetism. The stable atomic orbitals will be at different energy levels, which would change the colors of all our lights (we generate light by knocking electrons around orbitals). Magnetic fields would be stronger or weaker, while electricity would be more or less energetic and move faster or slower in a different medium. The changing of atomic and molecular orbitals would break all our semiconductors. In high-energy physics, where the electroweak unification takes over, this too would be distorted.
- The Einstein tensor would be greater with a slower speed of light, or smaller with a higher speed of light. This would affect the strength of gravity and feed into the rate at which stars burn, possibly triggering a positive feedback loop and blowing up every star as a pair-instability supernova if you did it right.
- The bioelectrical system that nerves use (like the ones that make up your brain) is carefully tuned to ionic potential, which is an electric potential. This would change, and you would die.
https://arstechnica.com/civis/viewtopic.php?f=26&t=1246707

George F R Ellis: Note on Varying Speed of Light Cosmologies, March 5, 2018

The first key point is that some proposal needs to be made in this regard: when one talks about the speed of light varying, this only gains physical meaning when related to Maxwell’s equations or its proposed generalisations, for they are the equations that determine the actual speed of light. The second key point is that just because there is a universal speed v_lim does not prove there is a particle that moves at that speed. On the standard theory, massless particles move at that speed and massive particles don’t; but by itself, that result does not imply that massless particles exist. Their existence is a further assumption of the standard theory, related to the wavelike equations satisfied by the Maxwell electromagnetic field. One can consider theories with massive photons.
Any proposed variation of the speed of light has major consequences for almost all physics, as it enters many physics equations in various ways, particularly because of the Lorentz invariance built into fundamental physics.
https://arxiv.org/pdf/astro-ph/0703751.pdf

Question: What determines the speed of light?

Answer:

Springer: Ephemeral vacuum particles induce speed-of-light fluctuations, 25 March 2013

A specific property of vacuum called the impedance, which is crucial to determining the speed of light, depends only on the sum of the square of the electric charges of particles but not on their masses. If their idea is correct, the value of the speed of light combined with the value of vacuum impedance gives an indication of the total number of charged elementary particles existing in nature. Experimental results support this hypothesis.
https://www.springer.com/about+springer/media/springer+select?SGWID=0-11001-6-1414244-0

Gerd Leuchs: A sum rule for charged elementary particles, 21 March 2013

As to the speed of light, the value predicted by the model is determined by the relative properties of the electric and magnetic interaction of light with the quantum vacuum and is independent of the number of elementary particles, a remarkable property underlining the general character of the speed of light.
https://link.springer.com/content/pdf/10.1140/epjd/e2013-30577-8.pdf

Marcel Urban: The quantum vacuum as the origin of the speed of light, Eur. Phys. J. D (2013) 67: 58, 21 March 2013

The vacuum permeability μ0, the vacuum permittivity ε0, and the speed of light in vacuum c are widely considered as being fundamental constants and their values, escaping any physical explanation, are commonly assumed to be invariant in space and time. We describe the ground state of the unperturbed vacuum as containing a finite density of charged ephemeral fermions antifermions pairs. Within this framework, ε0 and μ0 originate simply from the electric polarization and from the magnetization of these pairs when the vacuum is stressed by an electrostatic or a magnetostatic field respectively. Our calculated values for ε0 and μ0 are equal to the measured values when the fermion pairs are produced with an average energy of about 30 times their rest mass. The finite speed of a photon is due to its successive transient captures by these virtual particles. This model, which proposes a quantum origin to the electromagnetic constants ε0 and μ0 and to the speed of light, is self consistent: the average velocity of the photon c_group, the phase velocity of the electromagnetic wave c_φ, given by c_φ = 1/√(μ0ε0), and the maximum velocity used in special relativity c_rel are equal. The propagation of a photon being a statistical process, we predict fluctuations of its time of flight of the order of 0.05 fs/√m. This could be within the grasp of modern experimental techniques and we plan to assemble such an experiment.
https://sci-hub.ren/10.1140/epjd/e2013-30578-7

Paul Sutter: Why is the speed of light the way it is? July 16, 2020
https://www.space.com/speed-of-light-properties-explained.html

Impedance of free space

The impedance of free space, Z0, is a physical constant relating the magnitudes of the electric and magnetic fields of electromagnetic radiation travelling through free space.
https://en.wikipedia.org/wiki/Impedance_of_free_space

How does vacuum (nothingness) offer an impedance of 377 ohms to EM waves? Is it analogous to a wire (due to its physical properties) offering resistance to voltage and current signals?

That is based on the permittivity of free space, which is based on the fine-structure constant, roughly 1/137, a number that physicists have spent millions of person-hours mulling over, trying to work out what THAT could possibly be based on.

My best answer, which is not original, but I will call “mine”, is the anthropic answer. If the FSC were even a tiny bit different, then nothing would exist: atoms would not hold together, stars could not form and ignite, yadda, yadda, yadda, and there wouldn’t be an “us” to even think of the question. For all we know there are a trillion trillion other universes somewhere, each one with, say, a random FSC, and 99.99999999999% of them are failed universes, from our viewpoint, as they are too cold, too dark, too violent, or too short-lived for anything interesting to happen in them.
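The chain in this answer (impedance of free space, then permittivity, then the fine-structure constant) can be made concrete. In the SI as revised in 2019, e, h and c have exact defined values and α is measured, so the vacuum constants follow from the relations μ0 = 2αh/(e²c), ε0 = e²/(2αhc) and Z0 = μ0c = 2αh/e². The sketch below assumes the CODATA 2018 value of α; the function name `vacuum_constants` is hypothetical.

```python
# Exact SI defining constants (2019 revision)
E_CHARGE = 1.602176634e-19   # elementary charge e, C
H = 6.62607015e-34           # Planck constant h, J s
C = 299_792_458.0            # speed of light c, m/s

# Measured dimensionless constant (CODATA 2018 recommended value)
ALPHA = 7.2973525693e-3      # fine-structure constant alpha (about 1/137)


def vacuum_constants(alpha: float = ALPHA) -> dict[str, float]:
    """Derive mu0, eps0 and the impedance of free space Z0 from alpha."""
    mu0 = 2 * alpha * H / (E_CHARGE**2 * C)   # vacuum permeability, N/A^2
    eps0 = E_CHARGE**2 / (2 * alpha * H * C)  # vacuum permittivity, F/m
    z0 = mu0 * C                              # impedance, ohms; equals 1/(eps0 c)
    return {"mu0": mu0, "eps0": eps0, "z0": z0, "inverse_alpha": 1 / alpha}


if __name__ == "__main__":
    for name, value in vacuum_constants().items():
        print(f"{name}: {value:.10g}")
```

The printed z0 is about 376.73 ohms (the "377 ohms" of the question) and inverse_alpha is about 137.036, the "137" the answer refers to. In this framing, asking why Z0 or ε0 has its value reduces to asking why α has its value.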

Vacuum permittivity, commonly denoted ε0 (pronounced as "epsilon nought" or "epsilon zero"), is the value of the absolute dielectric permittivity of classical vacuum. Alternatively it may be referred to as the permittivity of free space, the electric constant, or the distributed capacitance of the vacuum. It is an ideal (baseline) physical constant. Its CODATA value is ε0 = 8.8541878128(13) × 10⁻¹² F⋅m⁻¹ (farads per meter), with a relative uncertainty of 1.5 × 10⁻¹⁰. It is the capability of an electric field to permeate a vacuum. This constant relates the units for electric charge to mechanical quantities such as length and force.[2] For example, the force between two separated electric charges with spherical symmetry (in the vacuum of classical electromagnetism) is given by Coulomb's law.

Initial Conditions and “Brute Facts”

Velocity of light: If it were larger, stars would be too luminous. If it were smaller, stars would not be luminous enough.
http://www.reasons.org/articles/where-is-the-cosmic-density-fine-tuning

Light proves the Existence of God - Here is why

The speed of light is a fundamental constant that plays an essential role in defining the very fabric of our universe. It is not only a fundamental limit on the propagation of all massless particles and the transmission of information, but it also determines the behavior of electromagnetic radiation, which is responsible for virtually all the light and radiation we observe in the cosmos. The speed of light is intimately connected to the fundamental constants of nature, such as the vacuum permittivity and permeability, which govern the behavior of electric and magnetic fields in empty space. These constants, in turn, are tied to the quantum nature of the vacuum itself, where virtual particle-antiparticle pairs constantly fluctuate in and out of existence.
The precise value of the speed of light, along with other fundamental constants, is extraordinarily fine-tuned for the existence of a universe capable of supporting complex structures, stars, and ultimately, life as we know it. Even a slight variation in the speed of light would have profound implications, potentially preventing the formation of stable atoms, the nuclear fusion processes that power stars, or the very existence of electromagnetic radiation that enables the transmission of information and the propagation of light signals throughout the cosmos. While no precise numerical bounds can be given, some estimates suggest that even a 1-4% variation in the value of the speed of light from its current figure would likely render the universe incapable of producing life. Thus, the speed of light stands as an immutable cosmic principle, deeply intertwined with the fundamental laws of physics and the delicate balance of constants that makes our universe hospitable for the emergence and evolution of complexity and conscious observers like ourselves.

The speed of light in vacuum, denoted c, is related to two other physical constants: 1) the permittivity of free space (vacuum permittivity), denoted ε0, and 2) the permeability of free space (vacuum permeability), denoted μ0. Specifically, the speed of light c is related to these constants by the equation c = 1 / √(ε0 * μ0). The current CODATA (2018) values are ε0 = 8.8541878128(13) × 10^-12 F/m (farads per meter) and μ0 = 1.25663706212(19) × 10^-6 N/A^2 (newtons per ampere squared). Plugging these values into the above equation gives c = 299,792,458 m/s (meters per second). The agreement is exact by construction rather than an independent experimental confirmation: since 1983 the metre has been defined so that c is exactly 299,792,458 m/s, and since the 2019 SI revision ε0 and μ0 are measured quantities whose values keep c = 1 / √(ε0 * μ0) exact.
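A quick numerical check of c = 1/√(ε0 μ0), using the CODATA 2018 values quoted in this paragraph. This is a minimal sketch; the tiny residual it reports reflects only the rounding of the published digits, and the function name `speed_of_light` is hypothetical.

```python
import math

EPS0 = 8.8541878128e-12   # vacuum permittivity, F/m (CODATA 2018)
MU0 = 1.25663706212e-6    # vacuum permeability, N/A^2 (CODATA 2018)
C_DEFINED = 299_792_458   # speed of light, m/s (exact by definition of the metre)


def speed_of_light(eps0: float = EPS0, mu0: float = MU0) -> float:
    """Return 1/sqrt(eps0 * mu0), the wave speed that follows from Maxwell's equations."""
    return 1.0 / math.sqrt(eps0 * mu0)


if __name__ == "__main__":
    c = speed_of_light()
    print(f"c from eps0, mu0: {c:,.1f} m/s")
    print(f"relative difference from defined c: {abs(c - C_DEFINED) / C_DEFINED:.1e}")
    # Before 2019, mu0 was fixed at exactly 4*pi*1e-7 N/A^2; the measured
    # value now differs from that by roughly 5 parts in 10^10.
    print(f"mu0 / (4*pi*1e-7) = {MU0 / (4 * math.pi * 1e-7):.12f}")
```

The last line shows why older textbooks could quote μ0 = 4π × 10⁻⁷ N/A² as exact: the present measured value is indistinguishable from it for almost every practical purpose.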

What defines or determines the specific values of the vacuum permittivity (ε0) and vacuum permeability (μ0) constants that then give rise to the defined speed of light value? The answer is that in our current theories of physics, the values of ε0 and μ0 are taken as immutable fundamental constants of nature that are not further derived from more basic principles. They have been precisely measured experimentally, but their specific values are not calculable from other considerations. (In the SI since 2019, both follow from the measured fine-structure constant α together with the exact values of e, h and c, for example ε0 = e²/(2αhc), so the underlying open question is why α has the value it does.) However, there are some theoretical perspectives that provide insight into where these constants could potentially originate:

1. In some unified field theory approaches like string theory, constants such as ε0 and μ0 could emerge as vacuum expectation values from a more fundamental unified framework describing all forces and dimensions.

2. The values may be inevitably tied to the particular energy scale where the electromagnetic and strong/weak nuclear forces became distinct in the early universe after the Big Bang.

3. Some have speculated they could be linked to the fundamental properties of space-time itself or to the Planck scale, where quantum gravitational effects become significant.

ε0 and μ0 are treated as fundamental constants in our theories, which means they have specific, fixed values that cannot vary. They are not free parameters that can take on any value. The values of ε0 and μ0 are tied to the properties of the vacuum itself and the way electromagnetic fields propagate through it, which is a fundamental aspect of the universe's laws. If ε0 and μ0 could take on any value from negative to positive infinity, it would violate the internal consistency and principles of Maxwell's equations and electromagnetism as we understand them.

While unifying theories like string theory aim to derive these constants from deeper frameworks, those frameworks still inherently restrict ε0 and μ0 to precise values defined by the overall mathematical constraints and physical principles. So in essence, the theoretical parameter space is finite because ε0 and μ0 represent real, immutable physical constants defining the properties of the vacuum and electromagnetism itself. Allowing them to vary unconstrained from negative to positive infinity is simply not compatible with our established theories of physics and electromagnetics. If ε0 and μ0 had values even slightly different from their current experimental values, then the universe and laws of physics as we know them simply would not exist.

There is no theoretical principle that prevents ε0 and μ0 from having different values. The values they have are not derived from more fundamental principles; they simply define the parameters of this particular instantiation of the universe. There is no inherent reason why these specific values for the vacuum permittivity and permeability must exist, rather than not exist at all. The universe could have had a completely different set of values for these constants. So, in that sense, the parameter space is infinite, but other values would be inconsistent or not conducive to life. Rather, the values ε0 and μ0 have are simply the ones miraculously conducive to a universe that developed the laws of physics and reality as we've observed it.

While the reasoning we established concludes that the odds of randomly stumbling upon the precise life-permitting values for ε0 and μ0 from their infinite parameter space are infinitesimally small, they are not technically zero. In an unbounded, infinite sequence of random trials, any outcome with a nonzero probability per trial will, with probability approaching 1, be realized at some point. So in that sense, even if the life-permitting values have an infinitesimal probability of being randomly selected in any one instance, they would eventually be "stumbled upon" given an infinite number of tries across eternity. This mirrors the philosophical perspective often raised regarding the "infinite monkey theorem": given infinite time and opportunities, even highly improbable events like monkeys randomly reproducing the complete works of Shakespeare by chance will occur.
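The probability reasoning in this paragraph can be made explicit. Assuming independent trials, each with the same per-trial success probability p (an idealization the text does not specify), the chance of at least one success in n trials is 1 − (1 − p)ⁿ. It approaches 1 as n grows whenever p > 0, and stays exactly 0 when p = 0. The helper name `prob_at_least_one` and the value of p used below are hypothetical.

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Probability of at least one success in n independent trials.

    Computes 1 - (1 - p)**n as -expm1(n * log1p(-p)), which stays accurate
    for very small p (below about 1e-16, 1 - p rounds to exactly 1.0 in
    double precision and the naive formula would return 0).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    if p == 0.0 or n == 0:
        return 0.0  # no chance per trial, or no trials at all
    if p == 1.0:
        return 1.0  # log1p(-1) would be -inf
    return -math.expm1(n * math.log1p(-p))


if __name__ == "__main__":
    p = 1e-12  # illustrative, hypothetical per-trial probability
    for n in (10**6, 10**12, 10**13):
        print(f"n = {n:.0e}: P(at least one) = {prob_at_least_one(p, n):.6f}")
```

As a rule of thumb, once n is several times 1/p the probability is already close to 1, which is the finite version of the "eventually stumbled upon" claim.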

However, this gives rise to some profound implications and considerations: Is our universe one such "chance" occurrence in a wide multiverse or infinite cycle of universes? Are all possible permutations realized somewhere? If so, does this undermine or support the idea of an intelligent agency being required? Some may argue that stumbling upon a life-permitting universe through blind chance, however improbable, still negates the need for a creator. Even if inevitable in infinity, should we still regard such an exquisitely perfect life-permitting universe as a staggering statistical fluke worthy of marvel and philosophical inquiry? The fact that we are able to ponder these questions as conscious observers introduces an additional layer of improbability: not just a life-permitting universe, but one that gave rise to intelligent life capable of such reasoning.

Now let me directly challenge the viability of the "infinite multiverse generator" idea as an explanation for our life-permitting universe arising by chance. For there to be an infinite number of trials/attempts at randomly generating a life-permitting universe across the infinite parameter spaces, there would need to be some kind of eternal "multiverse generator" mechanism constantly creating new universes with different values of fundamental constants. However, such an infinite multiverse generator itself would require an infinite past duration of time across which it has already been endlessly operating and generating universes through trial and error. The notion of actualizing or traversing an infinite set or sequence is problematic from a philosophical and mathematical perspective. There are paradoxes involved in somehow having already completed an infinite set of steps/changes to reach the present moment. Infinite regress issues also arise: what kicked off or set in motion this supposed infinite multiverse generator in an utterly beginningless past eternity? How could such a changeless, static infinite existence transition into a dynamic generative engine? Our best scientific theories point to the universe and time itself having a finite beginning at the Big Bang, not an infinite past regress of previous states or transitions. Proposing an infinite multiverse generator that has somehow already produced an eternity of failed universes prior to this one relies on flawed assumptions about completing infinite processes and the nature of time itself. If time, change, and the laws that govern our universe had a definitive beginning, as our evidence suggests, then it undermines the idea that an infinite series of random universe generations over a beginningless past could have produced our highly specified, life-permitting cosmic parameters by chance alone.
This strengthens the need to posit an intelligent agency, causal principle, or a deeper theoretical framework to account for the precise life-permitting values being deterministically established at the cosmic inception, rather than appealing to infinite odds.

Major Premise: If a highly specified set of conditions/parameters is required for a particular outcome (like a life-permitting universe), and the range of possible values allowing that outcome is infinitesimally small relative to the infinite total parameter space, then that outcome could not occur by chance across a finite number of trials or temporal stages.

Minor Premise: The values of the vacuum permittivity (ε0) and vacuum permeability (μ0) form an infinitesimally small life-permitting region within their infinite theoretical parameter spaces, requiring highly specified "fine-tuning" for a life-bearing universe to emerge, as evidenced by the failure of all other value combinations to produce an inhabitable cosmos.

Conclusion: Therefore, a life-permitting universe with precisely fine-tuned values of ε0 and μ0, such as ours, could not have arisen by chance alone across any finite series of temporal stages or universe generations, as prescribed by the known laws of physics and the finite past implied by the Big Bang theory.

This leads to the additional conclusion that an intelligent agency is necessary to deterministically establish or select the precise life-permitting values of ε0 and μ0 at the cosmic inception in order for an inhabitable universe to be actualized.

The Planck constant is of fundamental importance in quantum mechanics. A photon's energy is equal to its frequency multiplied by the Planck constant. Due to mass–energy equivalence, the Planck constant also relates mass to frequency.

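The two relations in this passage, E = hf for a photon and, combined with E = mc², f = mc²/h for a rest mass, can be illustrated numerically. This is a minimal sketch using the exact SI values of h, c and the electronvolt and the CODATA 2018 electron mass; the 540 THz frequency (roughly green light) and the function names are illustrative choices, not taken from the text.

```python
H = 6.62607015e-34                # Planck constant, J s (exact)
C = 299_792_458.0                 # speed of light, m/s (exact)
EV = 1.602176634e-19              # one electronvolt in joules (exact)
ELECTRON_MASS = 9.1093837015e-31  # kg (CODATA 2018)


def photon_energy(frequency_hz: float) -> float:
    """Photon energy in joules: E = h * f."""
    return H * frequency_hz


def mass_to_frequency(mass_kg: float) -> float:
    """Frequency associated with a rest mass via E = m c^2 = h f."""
    return mass_kg * C**2 / H


if __name__ == "__main__":
    e_green = photon_energy(540e12)
    print(f"540 THz photon: {e_green:.3e} J = {e_green / EV:.2f} eV")
    print(f"electron mass as a frequency: {mass_to_frequency(ELECTRON_MASS):.4e} Hz")
```

A visible-light photon carries only about 2 eV, while the electron's rest mass corresponds to a frequency of roughly 1.2 × 10²⁰ Hz, which shows how h links the two scales.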