https://reasonandscience.catsboard.com/t1653-the-irreducible-complex-system-of-the-eye-and-eye-brain-interdependence

The human eye consists of over two million working parts, making it second only to the brain in complexity. Evolutionists believe that the human eye is a product of millions of years of mutations and natural selection. As you read about the amazing complexity of the eye, please ask yourself: could this really be a product of evolution?

Automatic focus

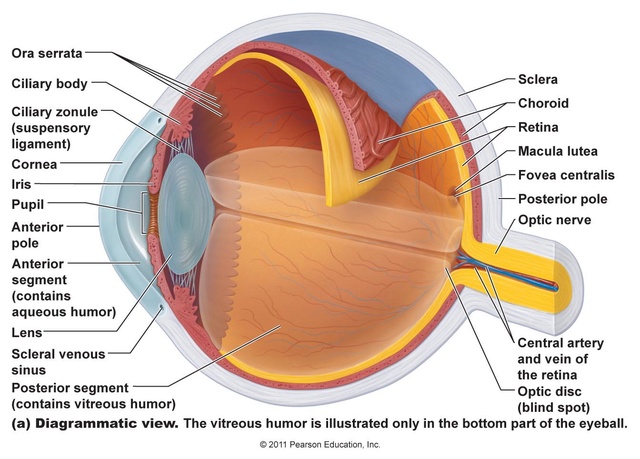

The lens of the eye is suspended in position by hundreds of string-like fibres called zonules. The ciliary muscle changes the shape of the lens: it relaxes to flatten the lens for distance vision, and for close work it contracts, rounding out the lens. This happens automatically and instantaneously without you having to think about it. How could evolution produce a system that even knows when it is in focus, let alone the mechanism to focus?

How would evolution produce a system that can control a muscle that is in the perfect place to change the shape of the lens?

A visual system

The retina is composed of photoreceptor cells. When light falls on one of these cells, it causes a complex chemical reaction that sends an electrical signal through the optic nerve to the brain. It uses a signal transduction pathway consisting of 9 irreducible steps, and the signal must pass through all of them. Now, what if this pathway did happen to evolve suddenly, and such a signal could be sent all the way through? So what?! How is the receptor cell going to know what to do with this signal? It will have to learn what this signal means. Learning and interpretation are very complicated processes involving a great many other proteins in other unique systems. Now the cell, in one lifetime, must evolve the ability to pass on this ability to interpret vision to its offspring. If it does not pass on this ability, the offspring must learn as well, or vision offers no advantage to them. All of these wonderful processes need regulation. No function is beneficial unless it can be regulated (turned off and on). If the light-sensitive cells cannot be turned off once they are turned on, vision does not occur. This regulatory ability is also very complicated, involving a great many proteins and other molecules, all of which must be in place initially for vision to be beneficial. How does evolution explain our retinas having the correct cells which create electrical impulses when light activates them?

Making sense of it all

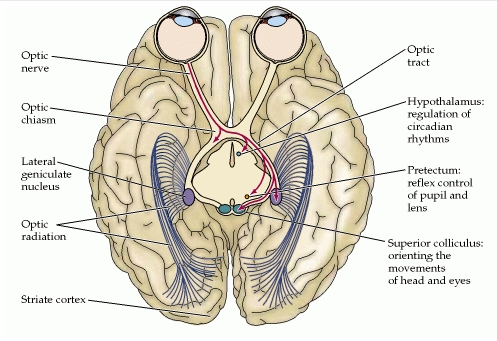

Each eye takes a slightly different picture of the world. At the optic chiasm each picture is divided in half. The outer left and right halves continue back toward the visual cortex. The inner left and right halves cross over to the other side of the brain, then continue back toward the visual cortex. Also, the image that is projected onto the retina is upside down. The brain flips the image back up the right way during processing. Somehow, the human brain makes sense of the electrical impulses received via the optic nerve. The brain also combines the images from both eyes into one image and flips it up the right way… and all this is done in real time. How could natural selection recognize the problem and evolve the mechanism of the left side of the brain receiving the information from the left side of both eyes and the right side of the brain taking the information from the right side of both eyes? How would evolution produce a system that can interpret electrical impulses and process them into images? Why would evolution produce a system that knows the image on the retina is upside down?

Constant level of light

The retina needs a fairly constant level of light intensity to form the most useful images. The iris muscles control the size of the pupil. The iris contracts and expands, opening and closing the pupil, in response to the brightness of the surrounding light. Just as the aperture in a camera protects the film from overexposure, the iris of the eye helps protect the sensitive retina. How would evolution produce a light sensor? Even if evolution could produce a light sensor, how can a purely naturalistic process like evolution produce a system that knows how to measure light intensity? How would evolution produce a system that would control a muscle which regulates the size of the pupil?

Detailed vision

Cone cells give us our detailed color daytime vision. There are 6 million of them in each human eye, and most of them are located in the central retina. There are three types of cone cells: one sensitive to red light, another to green light, and the third to blue light. Isn’t it fortunate that the cone cells are situated in the centre of the retina? Wouldn’t it be a bit awkward if your most detailed vision were at the periphery of your eyesight?

Night vision

Rod cells give us our dim-light or night vision. They are 500 times more sensitive to light, and also more sensitive to motion, than cone cells. There are 120 million rod cells in the human eye, and most of them are located in our peripheral or side vision. The eye can also modify its own light sensitivity. After about 15 seconds in lower light, our bodies increase the level of rhodopsin in our retina. Over the next half hour in low light, our eyes get more and more sensitive. In fact, studies have shown that our eyes are around 600 times more sensitive at night than during the day. Why would the eye have different types of photoreceptor cells, with one specifically to help us see in low light?

Lubrication

The lacrimal gland continually secretes tears, which moisten, lubricate, and protect the surface of the eye. Excess tears drain into the lacrimal duct, which empties into the nasal cavity. If there were no lubrication system, our eyes would dry up and cease to function within a few hours.

If the lubrication weren’t there, we would all be blind. Did this system not have to be fully set up from the beginning?

It’s fortunate that we have a lacrimal duct, isn’t it? Otherwise we would have a steady stream of tears running down our faces!

Protection

Eyelashes protect the eyes from particles that may injure them. They form a screen to keep dust and insects out. Anything touching them triggers the eyelids to blink. How is it that the eyelids blink when something touches the eyelashes?

Operational structure

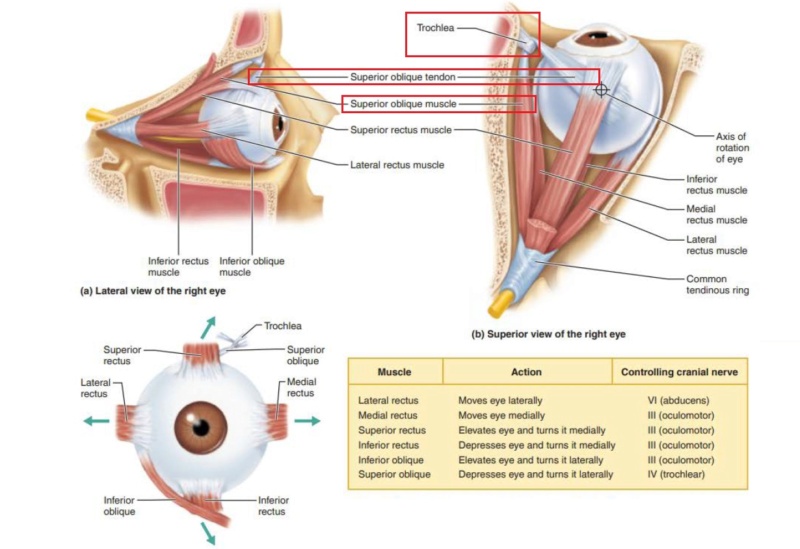

Six muscles are in charge of eye movement. Four of these move the eye up, down, left and right. The other two control the twisting motion of the eye when we tilt our head. The orbit, or eye socket, is a cone-shaped bony cavity that protects the eye. The socket is padded with fatty tissue that allows the eye to move easily. When you tilt your head to the side, your eyes stay level with the horizon. How would evolution produce this? Isn’t it amazing that you can look where you want without having to move your head all the time? If our eye sockets were not padded with fatty tissue, it would be a struggle to move our eyes. Why would evolution produce this?

Poor Design?

Some have claimed that the eye is wired back to front and therefore must be the product of evolution. They claim that a designer would not design the eye this way. Well, it turns out that the idea that the eye is wired backward stems from a lack of knowledge of eye function and anatomy.

Dr George Marshall

Dr Marshall explains that the nerves could not go behind the eye, because that space is reserved for the choroid, which provides the rich blood supply needed for the very metabolically active retinal pigment epithelium (RPE). This is necessary to regenerate the photoreceptors, and to absorb excess heat. So it is necessary for the nerves to go in front instead.

The more I study the human eye, the harder it is to believe that it evolved. Most people see the miracle of sight. I see a miracle of complexity on viewing things at 100,000 times magnification. It is the perfection of this complexity that causes me to baulk at evolutionary theory.

Dr George Marshall

Evolution of the eye?

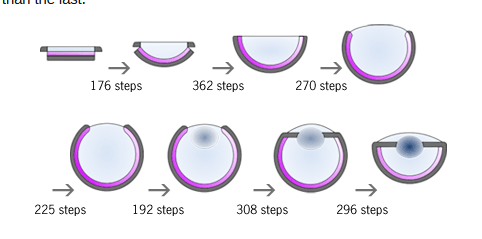

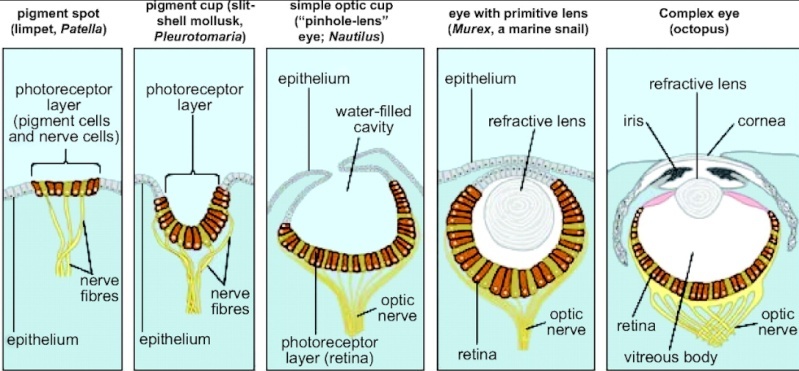

Proponents of evolutionary mechanisms have come up with how they think the eye might have gradually evolved over time, but it’s nothing more than speculation. For instance, observe how Dawkins explains the origin of the eye:

Note the words ‘suppose’, ‘probably’, ‘suspect’, ‘perhaps’ and ‘imagine’. This is not science but pseudoscientific speculation and storytelling. Sure, there are a lot of different types of eyes out there, but that doesn’t mean they evolved. Besides, based on the questions above, you can see how much of an oversimplification Dawkins’s presentation is.

Conclusion

The human eye is among the best automatic cameras in existence. Every time we change where we’re looking, our eye (and retina) changes everything else to compensate: focus and light intensity are constantly adjusting to ensure that our eyesight is as good as it can be. Man has made his own cameras… it took intelligent people to design and build them. The human eye is better than the best human-made camera. How is the emergence of eyes best explained: by evolution or by design?!

Did eyes evolve by Darwinian mechanisms? 2

The simplest eye type known is the ocellus, a multicellular eye comprising photoreceptor cells, pigment cells and nerve cells to process the information; it is step 4 in Darwin’s list.27 The most primitive eye that meets the definition of an eye belongs to the tiny (about the size of a pinhead) marine copepod crustacean Copilia. Only the females possess what Wolken and Florida call ‘remarkable eyes which make up more than half of its transparent body.’28 Claimed to be a link between an eyespot and a more complex eye, it has two exterior lenses that raster like a scanning electron microscope to gather light that is processed and then sent to its brain.29 It has retinal cells and an eye ‘analogous to a superposition-type ommatidium of compound eyes’.30 This, the most primitive true eye known, is at stage 6 of Darwin’s evolutionary hierarchy!

Dennett wrote that the eye lens is ‘exquisitely well-designed to do its job, and the engineering rationale for the details is unmistakable, but no designer ever articulated it.’44 He concludes that its design is not real but an illusion, because evolution explains the eye without the need for a designer. This review has shown that evolution does not explain the existence of the vision system, but an intelligent designer does. The leading eye evolution researchers admit they only ‘have some understanding of how eyes might have evolved’.45 These explanations do not even scratch the surface of how a vision system could have arisen by evolution, let alone ‘when’.

Much disagreement exists about the hypothetical evolution of eyes, and experts recognize that many critical problems exist. Among these problems are an explanation of the evolution of each part of the vision system, including the lens, the eyeball, the retina, the entire optical system, the occipital lobes of the brain, and the many accessory structures. Turner stressed that ‘the real miracle [of vision] lies not so much in the optical eye, but in the computational process that produces vision.’46 All of these different systems must function together as an integrated unit for vision to be achieved. As Arendt concludes, the evolution of the eye has been debated ever since Darwin and is still being debated among Darwinists.47 For non-evolutionists there is no debate.

http://reasonandscience.heavenforum.org/t1638-eye-brain-is-a-interdependent-and-irreducible-complex-system#3058

and

The Inference to Design

Thesis by Wayne Talbot

Our sense of sight is best explained by intelligent design: the system is irreducibly complex and the prerequisites for processing visual information are beyond development by undirected biological processes.

Propositions:

1. The origin of knowledge and information in the brain cannot be explained by undirected organic processes.

2. Sight is the result of intelligent data processing.

3. Data input requires a common understanding between sender and receiver of the data coding and transmission protocols.

4. Data storage and retrieval requires an understanding of the data from an informational perspective.

5. Data processing requires meta-data: conceptual and contextual data to be pre-loaded prior to the input of transaction data.

6. Light can be considered an encoded data stream which is decoded and re-encoded by the eye for transmission via the optic nerve to serve as input data.

7. All data transmissions are meaningless without the superimposition of meta-data.

8. None of the components of our visual system individually offer any advantage for natural selection.

The Concepts of Light and Sight

Imagine that some thousands of years ago, a mountain tribe suffered a disease or mutation such that all members became blind. Generation after generation were born blind and eventually even the legends of the elders of being able to see were lost. Everyone knew that they had these soft spots in their heads which hurt when poked, but nobody knew if they had some intended function. Over time, the very concepts of light and sight no longer existed within their tribal knowledge. As a doctor specialising in diseases and abnormalities of the eye, you realise that with particular treatment you can restore the sight of these people. Assuming that you are fluent in the local language, how would you describe what you can do for them? How would you convey the concept of sight to people to whom such an idea was beyond their understanding?

My point is that this is the very problem that primitive organisms would have faced if sight did in fact evolve organically in an undirected fashion. When the first light sensitive cell hypothetically evolved, the organism had no way of understanding that the sensation it experienced represented light signals conveying information about its environment: light and sight were concepts unknown to it.

The Training of Sight

Those familiar with the settlement of Australia by Europeans in the 19th century, and the even earlier settlements in the Americas and Africa would have heard of the uncanny ability of the indigenous population to track people and animals. It was not so much that their visual acuity was better, but that they had learned to understand what they were seeing. It was found that this tracking ability could be taught and learned. In military field craft, soldiers are taught to actively look for particular visual clues and features. In my school cadet days, we undertook night “lantern stalks” (creeping up on enemy headquarters) and later in life, the lessons learned regarding discrimination of objects in low light were put to good use in orienteering at night. All of this experience demonstrates that while many people simply “see” passively, it is possible to engage the intellect and actively “look”, thus seeing much more.

With the advent of the aeroplane came aerial photography and its application during wartime as a method of intelligence gathering. Photographic analysis was a difficult skill to acquire - many people could look at the same picture but offer differing opinions as to what they were seeing, or rather thought they were seeing.

The underlying lesson is that sight is as much a function of intellect as it is receiving light signals through the eyes. Put another way, it is intelligent data processing.

Understanding Data vs Information

The digital computer was invented circa 1940 and during the technical revolution that followed, we have come to learn a great deal about information processing. I was fortunate to join the profession in the very early days of business computing and through training and experience, acquired an understanding of data coding methodologies, their application and interpretation. More importantly however, I came to understand a great deal about how data becomes information, and when it does not. In the early days of sequential and index-sequential files, the most common programming error occurred in attempting to match master, application (reference), and transaction files. With the advent of direct access files and disk resident databases, new skills were required in the fields of data analysis, data structuring, and data mining.

The computing experience teaches this: data only becomes cognitive information when intelligently processed against a pre-loaded referential framework of conceptual and contextual data. Using this computer analogy, master files represented conceptual information, application files provided context, and input data was provided by transaction files.
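The classic file-matching job mentioned above can be sketched as a sequential match: the master and transaction files are both sorted on the same key and read in step, and a transaction only means something if a master record with its key exists. This is a minimal illustration under stated assumptions: the record layouts, the keys and the function name `match_transactions` are hypothetical, and the application (reference) file is left out for brevity.

```python
def match_transactions(masters: list[tuple[str, str]],
                       transactions: list[tuple[str, int]]):
    """Hypothetical sequential match of a sorted transaction file against a sorted master file.

    Both lists must be sorted by key. Returns (matched, unmatched).
    """
    matched = []
    unmatched = []
    i = 0
    for key, amount in transactions:
        # Advance the master "file" until its key catches up with the transaction key.
        while i < len(masters) and masters[i][0] < key:
            i += 1
        if i < len(masters) and masters[i][0] == key:
            matched.append((key, masters[i][1], amount))
        else:
            # No master record for this key: the transaction cannot be interpreted.
            unmatched.append((key, amount))
    return matched, unmatched


masters = [("A01", "Smith"), ("A02", "Jones"), ("A05", "Brown")]
transactions = [("A01", 100), ("A03", 50), ("A05", 20), ("A05", 30)]
matched, unmatched = match_transactions(masters, transactions)
print(matched)    # [('A01', 'Smith', 100), ('A05', 'Brown', 20), ('A05', 'Brown', 30)]
print(unmatched)  # [('A03', 50)]
```

The unsorted-input case is exactly where such programs went wrong: if either file is out of order, the single forward pass skips master records and valid transactions end up reported as unmatched.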

With apologies to Claude Shannon, Werner Gitt and other notables who have contributed so much to our understanding on this subject, I would contend that in the context of this discussion, none of these files contain information in the true sense: each contains data which only becomes usable information when intelligently correlated. I would further contend that no single transmission in any form can stand alone as information: in the absence of a preloaded conceptual and contextual framework in the recipient, it can only ever be a collection of meaningless symbols. This is easily demonstrated by simply setting down everything you have to know before you can read and understand these words written here, or what you would have to know before reading a medical journal in a foreign language in an unfamiliar script such as Hebrew or Chinese.

Can Coding Systems Evolve?

A computer processor operates via switches set to either “on” or “off”. A system with 8 switches (2^8) provides 256 options; 16 switches (2^16) 65,536; 32 switches (2^32) 4,294,967,296; and 64 switches (2^64) a massive 18,446,744,073,709,551,616, roughly 18 quintillion. This feature provides terminology such as 32-bit and 64-bit computers: it refers to the number of separate addresses that can be accessed in memory. In the early days of expensive iron-core memory, 8- or 16-bit addressing was adequate, but with the development of the silicon chip and techniques for dissipating heat, larger memory became viable, thus requiring greater addressing capability and the development of 32- then 64-bit computers. All very interesting, you may say, but why is that relevant? The relevance is found in most users’ experience: software versions that are neither forward nor backward compatible. The issue is that as coding systems change or evolve, the processing and storage systems cannot simply evolve in an undirected fashion: they must be intelligently converted. Let us look at some practical examples.
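The switch counts follow from a single rule: n independent two-state switches give 2^n distinct on/off patterns. The short sketch below just evaluates that rule; the function name `switch_combinations` is hypothetical.

```python
def switch_combinations(n_switches: int) -> int:
    """Number of distinct on/off patterns for n two-state switches: 2**n."""
    return 2 ** n_switches


for n in (8, 16, 32, 64):
    # Thousands separators make the 64-switch figure readable.
    print(f"{n:>2} switches: {switch_combinations(n):,} patterns")
```

The last line prints 18,446,744,073,709,551,616 patterns, which is about 1.8 × 10^19, so "quintillions" rather than "trillions" is the right order of magnitude.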

Computer coding systems are multi-layered. The binary code (1’s and 0’s) of computers translates to a coded language such as Octal through to ASCII, or Hexadecimal through to EBCDIC, and then to a common human language such as English or French. Computer scientists long ago established these separate coding structures for reasons not relevant here. The point to note is that in general, you cannot go from Octal to EBCDIC or from Hexadecimal to ASCII: special intermediate conversion routines are needed. The problem is that once coding systems are established, particularly multi-layered systems, any sequence of symbols which does not conform to the pattern cannot be processed without an intelligent conversion process.
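A minimal sketch of the conversion point: the same word has different byte patterns under ASCII and under EBCDIC, so data written in one scheme must pass through an explicit conversion step before a program expecting the other can use it. Octal and hexadecimal appear here only as two ways of writing down the same bits. As an assumption, Python’s built-in `cp037` codec (one EBCDIC code page) stands in for EBCDIC in general.

```python
text = "HELLO"

ascii_bytes = text.encode("ascii")
ebcdic_bytes = text.encode("cp037")  # cp037: an EBCDIC code page bundled with Python

# Octal and hexadecimal are two notations for the same underlying bit pattern.
print([oct(b) for b in ascii_bytes])
print(ascii_bytes.hex(), ebcdic_bytes.hex())

# Reading EBCDIC bytes as if they were ASCII-family text yields the wrong characters...
print(ebcdic_bytes.decode("latin-1"))

# ...so a deliberate conversion routine is needed: decode with the right table, re-encode.
converted = ebcdic_bytes.decode("cp037").encode("ascii")
assert converted == ascii_bytes
```

The conversion only works because both code tables already exist in the library; the bytes themselves carry no indication of which table was used to write them.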

Slightly off-topic, but consider the four chemicals in DNA which are referred to as A, C, G, and T. Very recently, scientists expressed surprise in finding that the DNA sequences code not just for proteins, but for the processing of these proteins. In other words, there is not just one but two or more “languages” written into our genome. What surprises me is that they were surprised: I would have thought the multi-language function to be obvious, but I will leave that for another time. If an evolving genome started with just two chemicals, say A and C, downstream processes could only recognise combinations of these two. If a third chemical G arose, there would be no system that could utilise it and more probably, its occurrence would interfere in a deleterious way. Quite simply, you cannot go from a 2-letter code to a 3-letter code without re-issuing the code book, a task quite beyond undirected biological evolution.
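To put numbers on the 2-letter versus 3-letter case, the sketch below assumes, purely for illustration, that letters are read in groups of three (as in the W, X, Y, Z example that follows). Two letters give 2^3 = 8 possible groups and three letters give 3^3 = 27, and a lookup table built for the two-letter code simply has no entry for any group containing the new letter. The names `triplets` and `reader` are hypothetical.

```python
from itertools import product


def triplets(alphabet: str) -> list[str]:
    """All possible 3-letter groups over the given alphabet (assumed grouping of three)."""
    return ["".join(p) for p in product(alphabet, repeat=3)]


two_letter = triplets("AC")
three_letter = triplets("ACG")
print(len(two_letter), len(three_letter))  # 8 27

# A hypothetical reader whose table was built only for the two-letter code.
reader = {group: number for number, group in enumerate(two_letter)}
print(reader.get("ACG"))  # None: the group is not in its table
```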

The Code Book Enigma

I will use an example similar to DNA because it is much easier to illustrate the problem using a system comprising just four symbols, in this case W, X, Y, and Z. I am avoiding ACGT simply so that you are not distracted by your knowledge of that particular science. Our coding system uses these 4 letters in groups of 3. If I sent you the message “XYW ZWZ YYX WXY” you would have no idea of what it means: it could be a structured sequence or a random arrangement, the letters are just symbols which are individually meaningless until intelligently arranged in particular groups or sequences. To be useful, we would need to formalise the coding sequences in a code book: that way the sender can encode the message and someone with the same version of the code book can decode the message and communication is achieved. Note that if the sender and receiver are using different versions of the code book, communication is compromised.
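The code-book idea can be sketched in a few lines: a message is encoded with one version of the book, decoded correctly with the same version, and decoded into something quite different with another version. Both code books and their word meanings are invented here for illustration; only the four-letter, groups-of-three scheme and the sample message come from the text.

```python
# Hypothetical code books: each maps a 3-letter group to a word.
CODE_BOOK_V1 = {"XYW": "MEET", "ZWZ": "AT", "YYX": "NORTH", "WXY": "GATE"}
CODE_BOOK_V2 = {"XYW": "AVOID", "ZWZ": "THE", "YYX": "SOUTH", "WXY": "ROAD"}


def encode(words: list[str], book: dict[str, str]) -> str:
    """Look each word up in the reversed code book and join the groups with spaces."""
    reverse = {word: group for group, word in book.items()}
    return " ".join(reverse[w] for w in words)


def decode(message: str, book: dict[str, str]) -> list[str]:
    """Translate each group; groups missing from the book are marked '?' rather than guessed."""
    return [book.get(group, "?") for group in message.split()]


msg = encode(["MEET", "AT", "NORTH", "GATE"], CODE_BOOK_V1)
print(msg)                        # XYW ZWZ YYX WXY
print(decode(msg, CODE_BOOK_V1))  # ['MEET', 'AT', 'NORTH', 'GATE']
print(decode(msg, CODE_BOOK_V2))  # ['AVOID', 'THE', 'SOUTH', 'ROAD']
```

Nothing in the string "XYW ZWZ YYX WXY" itself says which book applies; the meaning lives entirely in the table the receiver already holds.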

This brings us to a vital concept: meta-data (or data about data).

There is a foundational axiom that underpins all science and intellectual disciplines: nothing can explain itself. The explanation of any phenomenon will always lie outside itself, and this applies equally to any coding system: it cannot explain itself. You may recall the breakthrough in deciphering Egyptian hieroglyphs that was made possible by the Rosetta Stone, which carries the same decree in hieroglyphic, Demotic and Greek script.

In our example, the code book provides the meta-data about the data in the encoded message. Any language, particularly one limited to just four letters, requires a code book to both compose and decipher the meaning. Every time there is a new rearrangement of the letters, or new letters are added to (or deleted from) an existing string, the code book has to be updated. From a chronological sequence perspective, for a change to be useful, the code explanation or definition must precede, not follow, any new arrangement of letters in a message. Rearrangements that occur independently of the code book cannot be understood by downstream processes. Logically, the code book is the controlling mechanism, not the random rearrangements of coding sequences. First the pre-arranged code sequence, then its implementation. In other words, it is a top-down process, not the bottom-up process that evolutionists such as Richard Dawkins assert.

Now it matters not whether you apply this to the evolution of the genome or to the development of our senses, the same principle holds: ALL data must be preceded by meta-data to be comprehensible as information. In general, messages or other forms of communication can be considered as transactions which require a conceptual and contextual framework to provide meaning.

Understanding Input Devices

We have five physical senses: sight, hearing, smell, taste, and touch, and each requires unique processes, whether physical and/or chemical. What may not be obvious from an evolution standpoint is that for the brain to process these inputs, it must first know about them (concept) and how to differentiate amongst them (context). Early computers used card readers as input devices, printers for output, and magnetic tape for storage (input & output). When new devices such as disk drives, bar code readers, plotters, etc. were invented, new programs were needed to “teach” the central processor about these new senses. Even today, if you attach a new type of reader or printer to your computer, you will get the message “device not recognised” or similar if you do not preload the appropriate software. It is axiomatic that an unknown input device cannot autonomously teach the central processor about its presence, its function, the type of data it wishes to transmit, or the protocol to be used.

The same lessons apply to our five senses. If we were to hypothesise that touch was the first sense, how would a primitive brain come to understand that a new sensation from say light was not just a variation of touch?

Signal Processing

All communications can be studied from the perspective of signal processing, and without delving too deeply, we should consider just a few aspects of the protocols. All transmissions, be they electronic, visual, or audible, are encoded using a method appropriate to the medium. Paper transmissions are encoded in language using the symbol set appropriate for that language; sound makes use of wavelength, frequency and amplitude; and light makes use of waves and particles in a way that I cannot even begin to understand. No matter, it still seems to work. The issue is that for communication to occur, both the sender and receiver must have a common understanding of the communication protocol: the symbols and their arrangement, and both must have equipment capable of encoding and decoding the signals.

Now think of the eye. It receives light signals containing data about size, shape, colour, texture, brightness, contrast, distance, movement, etc. The eye must decode these signals and re-encode them using a protocol suitable for transmission to the brain via the optic nerve. Upon receipt, the brain must store and correlate the individual pieces of data to form a mental picture, but even then, how does it know what it is seeing? How did the brain learn of the concepts conveyed in the signal such as colour, shape, intensity, and texture? Evolutionists like to claim that some sight is better than no sight, but I would contend that this can only be true provided that the perceived image matches reality: what if objects approaching were perceived as receding? Ouch!

When the telephone was invented, the physical encode/decode mechanisms were simply the reverse of one another, allowing sound to be converted to electrical signals then reconverted back to sound. Sight has an entirely different problem because the encode mechanism in the eye has no counterpart in the brain: the conversion is to yet another format for storage and interpretation. These two encoding mechanisms must have developed independently, yet had to be coherent and comprehensible with no opportunity for prolonged systems testing. I am not a mathematician, but the odds against two coding systems developing independently yet coherently in any timespan must argue against it ever happening.

Data Storage and Retrieval

Continuing our computer analogy, our brain is the central processing unit and just as importantly, our data storage unit, reportedly with an equivalent capacity of 256 billion gigabytes (or thereabouts). In data structuring analysis, there is always a compromise to be made between storage and retrieval efficiency. The primary difference from an analysis perspective is whether to establish the correlations in the storage structure, thus optimising the retrieval process, or whether to mine the data later looking for the correlations. In other words, should the data be indexed for retrieval, or just randomly distributed across the storage device? From our experience in data analysis, data structuring, and data mining, we know that it requires intelligence to structure data and indices for retrieval, and even greater intelligence to make sense of unstructured data.
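The storage-versus-retrieval trade-off can be illustrated with a toy data set: unstructured storage forces a record-by-record scan, while an index built at storage time (here a Python dictionary keyed on record id) answers the same question in one lookup. The records, the seed and the function name `linear_search` are hypothetical; the point is only that the index had to be designed around a key chosen in advance.

```python
import random

# Hypothetical records (record_id, payload), stored in arbitrary order.
records = [(i, f"item-{i}") for i in range(10_000)]
random.seed(1)
random.shuffle(records)


def linear_search(rows, wanted_id):
    """Unstructured storage: examine rows one by one until the id matches."""
    for steps, (rid, payload) in enumerate(rows, start=1):
        if rid == wanted_id:
            return payload, steps
    return None, len(rows)


# Structured storage: pay the cost of building an index once, up front.
index = {rid: payload for rid, payload in records}

payload, steps = linear_search(records, 4321)
print(payload, "found after", steps, "comparisons")
print(index[4321], "found with a single index lookup")
```

The index costs extra space and build time, and it only helps for queries on the key it was built around; a question about some other attribute still falls back to scanning or mining.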

Either way, considerable understanding of the data is required.

Now let’s apply that to the storage, retrieval, and processing of visual data. Does the brain store the data then analyse, or analyse and then store, all in real time? Going back to the supposed beginnings of sight, on what basis did the primitive brain decide where to store and how to correlate data which was at that time, just a meaningless stream of symbols? What was the source of knowledge and intelligence that provided the logic of data processing?

Correlation and Pattern Recognition

In the papers that I have read on the subject, scientists discuss the correlation of data stored at various locations in the brain. As best as I understand it, no-one has any idea of how or why that occurs. Imagine a hard drive with terabytes of data and those little bits autonomously arranging themselves into comprehensible patterns. Impossible you would assert, but that is what evolution claims. It is possible for chemicals to self-organise based on their physical properties, but what physical properties are expressed in the brain’s neural networks such that self-organisation would be possible? I admit to very limited knowledge here but as I understand it, the brain consists of neurons, synapses, and axons, and in each class, there is no differentiation: every neuron is like every other neuron, and so forth. Now even if there are differences such as in electric potential, the differences must occur based on physical properties in a regulated manner for there to be consistency. Even then, the matter itself can have no “understanding” of what those material differences mean in terms of its external reality.

In the case of chemical self-organisation, the conditions are preloaded in the chemical properties and thus the manner of organisation is pre-specified. When it comes to data patterns and correlation however, there are no pre-specified properties of the storage material that are relevant to the data which is held, whether the medium be paper, silicon, or an organic equivalent. It can be demonstrated that data and information are independent of the medium in which they are stored or transmitted, and are thus not material in nature. Being immaterial, they cannot be autonomously manipulated in a regulated manner by the storage material itself, although changes to the material can corrupt the data.

Pattern recognition and data correlation must be learned, and that requires an intelligent agent that itself is preloaded with conceptual and contextual data.

Facial Recognition

Facial recognition has become an important tool for security and it is easy for us to think, “Wow! Aren’t computers smart!” The “intelligence” of facial recognition is actually an illusion: it is an algorithmic application of comparing data points, but what the technology cannot do is identify what type of face is being scanned. In 2012, Google fed 10 million unlabelled images taken from YouTube videos into a very powerful computer system designed specifically for one purpose: to test whether an algorithm could learn from a sufficient number of examples to identify what was being seen. The experiment was partially successful (the system learned to pick out cat faces) but struggled with variations in size, positioning, setting and complexity. Once expanded to encompass 20,000 potential categories of object, the identification process managed just 15.8% accuracy: a huge improvement on previous efforts, but nowhere near the accuracy of the human mind.
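The “comparing data points” description can be made concrete with a nearest-neighbour sketch: each enrolled face is reduced to a short list of numbers, and a new scan is matched to whichever stored list is closest, provided it is within a threshold. The names, vectors and threshold below are hypothetical; real systems use much longer feature vectors produced by a trained model, but the final comparison step is of this general kind.

```python
import math

# Hypothetical enrolled faces, each reduced to a few numeric measurements.
ENROLLED = {
    "alice": [0.42, 0.31, 0.77, 0.15],
    "bob":   [0.10, 0.65, 0.22, 0.58],
}


def identify(probe, gallery, threshold=0.2):
    """Return the name whose stored vector is nearest (Euclidean), or None if none is close enough."""
    best_name, best_d = None, float("inf")
    for name, vec in gallery.items():
        d = math.dist(probe, vec)  # straight-line distance between the two vectors
        if d < best_d:
            best_name, best_d = name, d
    return best_name if best_d <= threshold else None


print(identify([0.40, 0.33, 0.75, 0.16], ENROLLED))  # alice
print(identify([0.90, 0.90, 0.90, 0.90], ENROLLED))  # None
```

Note that the program only reports which stored vector is numerically nearest; nothing in it represents what a face is, which is the distinction the paragraph draws.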

The question raised here concerns the likelihood of evolution being able to explain how facial recognition by humans is so superior to efforts to date using the best intelligence and technology.

Irreducible Complexity

Our sense of sight has many more components than described here. The eye is a complex organ which would have taken a considerable time to evolve, the hypothesis made even more problematic by the claim that it happened numerous times in separate species (convergent evolution). Considering the eye as the input device, the system requires a reliable communications channel (the optic nerve) to convey the data to the central processing unit (the brain) via the visual cortex, itself providing a level of distributed processing. This is not the place to discuss communications protocols in detail, but very demanding criteria are required to ensure reliability and minimise data corruption. Let me offer just one fascinating insight for those not familiar with the technology. In electronic messaging, there are two basic ways of identifying a particular signal: (1) by the purpose of the input device, or (2) by tagging the signal with an identifier.
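The two identification methods can be sketched side by side: in the first, the receiver knows what a signal is from the channel it arrived on; in the second, every message carries its own tag over a shared line. In both cases the receiver’s lookup table must exist before the first signal arrives, and an unknown tag can only be reported, not understood. All channel names, tags and handlers are hypothetical.

```python
from dataclasses import dataclass

# Method 1: identify the signal by the device (channel) it arrived on.
HANDLERS_BY_CHANNEL = {
    "channel_0": lambda v: f"keyboard key {v}",
    "channel_1": lambda v: f"sensor reading {v}",
}


def handle_by_channel(channel: str, value: int) -> str:
    return HANDLERS_BY_CHANNEL[channel](value)


# Method 2: every message carries its own identifier (tag) on a shared line.
@dataclass
class TaggedMessage:
    tag: str
    value: int


HANDLERS_BY_TAG = {
    "KEY": lambda v: f"keyboard key {v}",
    "SENSOR": lambda v: f"sensor reading {v}",
}


def handle_tagged(msg: TaggedMessage) -> str:
    handler = HANDLERS_BY_TAG.get(msg.tag)
    if handler is None:
        return f"unrecognised tag {msg.tag!r}"
    return handler(msg.value)


print(handle_by_channel("channel_1", 7))           # sensor reading 7
print(handle_tagged(TaggedMessage("KEY", 65)))     # keyboard key 65
print(handle_tagged(TaggedMessage("PLOTTER", 3)))  # unrecognised tag 'PLOTTER'
```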

A certain amount of signal processing occurs in the eye itself, and particular retinal ganglion cells have been classified by function: M cells are sensitive to depth and indifferent to color; P cells are sensitive to color and shape; K cells are sensitive to color and indifferent to shape or depth. The question we must ask is how an undirected process could inform the brain about these different signal types and how they are to be identified. The data is transmitted to different parts of the brain for parallel processing, a very efficient arrangement but one that brings with it a great deal of complexity. The point to note is that not only does the brain have the problem of decoding different types of messages (from the M, P, and K cells), but it also has to recombine this data into a single image, a complex task of co-ordinated parallel processing.
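The pattern described here, splitting one input into specialised streams, processing them in parallel and then recombining the results, is a familiar design in computing. The sketch below uses Python's standard `concurrent.futures` module to illustrate that pattern only. The three stream functions are hypothetical stand-ins named after the M, P and K cells and do not model real neural processing; the `id` field is likewise an assumption introduced so that results can be matched up again.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical input: each record stands for one point in the visual field,
# with an id that every stream keeps attached to its results.
scene = [
    {"id": 0, "depth": 2.5, "color": "red", "shape": "edge"},
    {"id": 1, "depth": 7.0, "color": "blue", "shape": "corner"},
]

# Hypothetical stand-ins for three specialised streams. Each keeps only the
# properties it is sensitive to, as described above for M, P and K cells.
def m_stream(points):  # depth, indifferent to color
    return {p["id"]: {"depth": p["depth"]} for p in points}

def p_stream(points):  # color and shape
    return {p["id"]: {"color": p["color"], "shape": p["shape"]} for p in points}

def k_stream(points):  # color, indifferent to shape and depth
    return {p["id"]: {"k_color": p["color"]} for p in points}

def process_in_parallel(points):
    # Fan out: run the three streams concurrently on the same input.
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [pool.submit(stream, points) for stream in (m_stream, p_stream, k_stream)]
        partial_results = [future.result() for future in futures]
    # Recombine: merge the partial results point by point using the shared id.
    combined = {}
    for partial in partial_results:
        for point_id, features in partial.items():
            combined.setdefault(point_id, {}).update(features)
    return combined

print(process_in_parallel(scene))
```

The recombination step only works because every partial result carries the same key; without a shared reference, the separate streams could not be reassembled into one description.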

Finally, we have the processor itself, which, if the evolutionary narrative is true, progressively evolved from practically nothing to something hugely complex. If we examine each of the components of the sight system, it is difficult to identify a useful function for any one of them operating independently, except perhaps the brain. However, without any preloaded data to interpret input signals from whatever source, the brain is no more useful than a computer without an operating system. It can be argued that the brain could have evolved independently for other functions, but the same argument cannot be made for those functions pertaining to the sense of sight.

As far as I can understand, our system of sight is irreducibly complex.

Inheriting Knowledge

Let us suppose, contrary to all reason and everything that we know about how knowledge is acquired, that a primitive organism somehow began developing a sense of sight. Maybe it wandered from sunlight into shadow and, after doing that several times, came to “understand” these variations in sensation as representative of its external environment. Just what it understood is anyone’s guess, but let us assume that it happened. How is this knowledge then inherited by its offspring for further development? If the genome is the vehicle of inheritance, then sensory experience must somehow be stored therein.

I have no answer to that, but I do wonder.

Putting it all together

I could continue to introduce even greater complexities that are known to exist, but I believe that we have enough to draw some logical conclusions. Over the past sixty years, we have come to understand a great deal about the nature of information and how it is processed. Scientists have been working on artificial intelligence with limited success, but it would seem probable that intelligence and information can only be the offspring of a higher intelligence. Even where nature evidences patterns, those patterns are the result of inherent physical properties, but the patterns themselves cannot be externally recognised without intelligence. A pattern is a form of information, but without an understanding of what is regular and irregular, it is nothing more than a series of data points.

We often hear the term “emergent properties of the brain” used to account for intelligence and knowledge, but, briefly, what is really meant is emergent properties of the mind. You may believe that the mind is nothing more than a description of brain processes, but even so, emergence requires something from which to emerge, and that something must have properties which can be foundational to the properties of that which emerges. Emergence cannot explain its own origins, as we have noted before.

Our system of sight is a process by which external light signals are converted to an electro-chemical data stream which is fed to the brain for storage and processing. The data must be encoded in a regulated manner using a protocol that is comprehensible to the recipient. The brain then stores that data in a way that allows correlation and future processing. Evolutionists would have us believe that this highly complex system arose through undirected processes with continual improvement through generations of mutation and selection. However, there is nothing in these processes which can begin to explain how raw data received through a light-sensitive organ could be processed without the pre-loading of the meta-data that allows the processor to make sense of the raw data. In short, the only source of data was the very channel that the organism neither recognised nor understood.

Without the back-end storage, retrieval, and processing of the data, the input device has no useful function. Without an input device, the storage and retrieval mechanisms have no function. Just like a computer system, our sensory sight system is irreducibly complex.

Footnote:

Earlier I noted that I was surprised that scientists were surprised to find a second language in DNA, but on reflection I considered that I should justify that comment. The majority of my IT career was in the manufacturing sector. I have a comprehensive understanding of the systems and information requirements of manufacturing management, having designed, developed, and implemented integrated systems across a number of vertical markets and industries.

The cell is often described as a mini factory, and, using that analogy, it seems logical to me that if the genome holds all of the production data in DNA, then it must include not just the Bill of Material for proteins but also the complete Bill of Resources for everything that occurs in human biology and physiology. Whether that is termed another language I will leave to others, but what is obvious to anyone with experience in manufacturing management is that an autonomous factory needs more information than just a recipe.

Fred Hoyle’s “tornado through a junk yard assembling a Boeing 747” analogy understates the complexity by several orders of magnitude. A more accurate analogy would be a tornado assembling a fully automated factory capable of replicating itself and manufacturing every model of airplane Boeing has ever produced.

http://reasonandscience.heavenforum.org/t1653-the-human-eye-intelligent-designed#2563

https://www.youtube.com/watch?v=NZMY5v79zyI

http://goddidit.org/the-human-eye/

The eye lens is suspended in position by hundreds of string-like fibres called zonules.

The ciliary muscle changes the shape of the lens. It relaxes to flatten the lens for distance vision. For close work, it contracts, rounding out the lens. This is all automatic.

Question: How could evolution produce a system that can control a muscle that is in the perfect place to change the shape of the lens?

The retina is composed of photoreceptor cells. When light falls on one of these cells, it causes a complex chemical reaction that sends an electrical signal through the optic nerve to the brain.

Question: How does evolution explain our retinas having the correct cells which create electrical impulses when light activates them?

The image from the left half of the left eye’s retina is combined with the image from the left half of the right eye’s retina, and vice versa.

The image that is projected onto the retina is upside down. The brain flips the image during processing. Somehow, the brain makes sense of the electrical impulses received via the optic nerve.
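Why the retinal image is upside down follows from simple geometry: light from the top of an object passes through the eye’s optics and lands on the bottom of the retina. The Python sketch below uses an idealised pinhole-camera model, a simplification assumed here (the real eye has a thick lens and a curved retina), in which a point at height y and distance z in front of the pinhole lands at height −f·y/z on a screen a distance f behind it. The value f = 17 mm is an illustrative round figure for an eye-sized camera, not a measured value.

```python
# Idealised pinhole projection: a point at height y (metres) and distance z
# in front of the pinhole is imaged at height -f * y / z on a screen a
# distance f behind it. The minus sign is what turns the image upside down.
FOCAL_DISTANCE_M = 0.017  # illustrative round figure, roughly eye-sized

def project(y: float, z: float, f: float = FOCAL_DISTANCE_M) -> float:
    if z <= 0:
        raise ValueError("the point must be in front of the pinhole")
    return -f * y / z

# A person 1.8 m tall standing 10 m away, centred on the eye's axis:
# head at y = +0.9 m, feet at y = -0.9 m.
head_image = project(0.9, 10.0)
feet_image = project(-0.9, 10.0)
print(f"head lands at {head_image * 1000:.2f} mm, feet at {feet_image * 1000:.2f} mm")
```

The head is imaged below the axis and the feet above it, which is the inversion described above.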

Questions: How would evolution produce a system that can interpret electrical impulses and process them into images?

Why would evolution produce a system that knows that the image on the retina is upside down?

How does evolution explain the left side of the brain receiving the information from the left side of both eyes and the right side of the brain taking information from the right side of both eyes?
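The routing described in these lines can be written down as a small lookup in Python. It relies on one anatomical fact not stated above: at the optic chiasm, fibers from the inner (nasal) half of each retina cross to the opposite hemisphere, while fibers from the outer (temporal) half stay on the same side. The function names are hypothetical; the sketch simply shows that this single crossing rule sends the left half of both retinas to the left hemisphere and the right half of both retinas to the right.

```python
def retinal_half_type(eye: str, half: str) -> str:
    """Classify a retinal half as 'nasal' (nose side) or 'temporal' (temple side)."""
    if eye not in ("left", "right") or half not in ("left", "right"):
        raise ValueError("eye and half must each be 'left' or 'right'")
    # The left eye's right half faces the nose, and so does the right eye's left half.
    return "nasal" if eye != half else "temporal"

def target_hemisphere(eye: str, half: str) -> str:
    """Return the brain hemisphere that receives fibers from this retinal half."""
    opposite = {"left": "right", "right": "left"}
    if retinal_half_type(eye, half) == "nasal":
        return opposite[eye]  # nasal fibers cross at the optic chiasm
    return eye                # temporal fibers stay on the same side

for eye in ("left", "right"):
    for half in ("left", "right"):
        print(f"{eye} eye, {half} half of retina -> {target_hemisphere(eye, half)} hemisphere")
```

Because the optics invert the image, the left half of each retina views the right side of the visual field, so the left hemisphere ends up handling what we see to our right.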

The retina needs a fairly constant level of light to form the most useful images. The iris muscles control the size of the pupil. They contract and expand, opening and closing the pupil, in response to the brightness of the surrounding light. Just as the aperture in a camera protects the film from overexposure, the iris of the eye helps protect the sensitive retina.
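The pupil response is an example of negative feedback: measure a quantity, compare it with a target, and adjust to reduce the difference. The Python sketch below is a toy proportional controller that illustrates only that control principle. The target light level, the gain and the 2 to 8 mm diameter limits are hypothetical numbers chosen for the illustration, and real pupillary control is neural and considerably more complex.

```python
# Toy negative-feedback model of pupil control (hypothetical numbers throughout).
# Light reaching the retina is taken to be proportional to ambient brightness
# times pupil area, and pupil area is proportional to diameter squared.
TARGET_RETINAL_LIGHT = 16.0                  # arbitrary units
GAIN = 0.5                                   # how strongly the pupil reacts to a relative error
MIN_DIAMETER_MM, MAX_DIAMETER_MM = 2.0, 8.0  # assumed physical limits

def retinal_light(ambient: float, diameter_mm: float) -> float:
    return ambient * diameter_mm ** 2

def adjust_pupil(ambient: float, diameter_mm: float = 4.0, steps: int = 100) -> float:
    for _ in range(steps):
        # Relative error: positive means too much light is getting in.
        error = retinal_light(ambient, diameter_mm) / TARGET_RETINAL_LIGHT - 1.0
        diameter_mm -= GAIN * error  # too bright -> constrict, too dim -> dilate
        diameter_mm = max(MIN_DIAMETER_MM, min(MAX_DIAMETER_MM, diameter_mm))
    return diameter_mm

for ambient in (0.25, 1.0, 4.0, 16.0):
    print(f"ambient {ambient:>5}: pupil settles at {adjust_pupil(ambient):.2f} mm")
```

In very bright light the toy pupil simply stops at its minimum size, which is where a real pupil’s protection also runs out.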

Questions: How would evolution produce a light sensor? Even if evolution could produce a light sensor, how could a purely naturalistic process like evolution produce a system that can measure light intensity? How could evolution produce a system that would control a muscle that regulates the size of the pupil?

Cone cells give us our detailed color daytime vision. There are six million of them in each human eye, most of them located in the central retina. There are three types of cone cells: one sensitive to red light, another to green light, and the third to blue light.

Question: Isn’t it fortunate that the cone cells are concentrated at the center of the retina? It would be a bit awkward if your most detailed vision were on the periphery of your eyesight.

Rod cells give us our dim-light or night vision. They are 500 times more sensitive to light, and also more sensitive to motion, than cone cells. There are 120 million rod cells in the human eye. Most rod cells are located in the periphery of the retina and serve our peripheral, or side, vision.

The eye can modify its own light sensitivity. After about 15 seconds in lower light, our body increases the level of rhodopsin in our retina. Over the next half hour in low light, our eyes get more and more sensitive. In fact, studies have shown that our eyes are around 600 times more sensitive at night than during the day.

Question: Why would the eye have different kinds of photoreceptor cells, with one kind specifically helping us see in low light?

The lacrimal gland continually secretes tears, which moisten, lubricate, and protect the surface of the eye. Excess tears drain into the lacrimal duct, which empties into the nasal cavity.

If there was no lubrication system, our eyes would dry up and cease to function within a few hours.

Question: If the lubrication weren’t there, we would all be blind. This system had to be there right from the beginning, didn’t it?

It is fortunate that we have a lacrimal duct, isn’t it? Otherwise, we would have a steady stream of tears running down our faces.

Eyelashes protect the eyes from particles that may injure them. They form a screen to keep dust and insects out. Anything touching them triggers the eyelids to blink.

Question: How could evolution produce this?

Six muscles are in charge of eye movement. Four of these move the eye up, down, left, and right. The other two control the twisting motion of the eye when we tilt our head. The orbit, or eye socket, is a cone-shaped bony cavity that protects the eye. The socket is padded with fatty tissue that allows the eye to move easily.

Question: When you tilt your head to the side, your eye stays level with the horizon. How would evolution produce this?

Isn’t it amazing that you can look where you want without having to move your head all the time? If our eye sockets were not padded with fatty tissue, it would be a struggle to move our eyes. Why would evolution produce this?

Conclusion: The eye is the best automatic camera in existence. Every time we change where we are looking, our eye and retina change everything else to compensate. Focus and light intensity are constantly adjusting to ensure that our eyesight is as good as it can be.

Man has made his own cameras. It took intelligent people to design and build them. The human eye is better than any of them. Was it, therefore, designed or not?

Common objection:

Is Our ‘Inverted’ Retina Really ‘Bad Design’?

http://reasonandscience.heavenforum.org/t1689-is-our-inverted-retina-really-bad-design

As it turns out, the supposed problems Dawkins finds with the inverted retina become actual advantages in light of recent research published by Kristian Franze et al. in the May 2007 issue of PNAS, which reports that “Müller cells are living optical fibers in the vertebrate retina.” Consider the observations and conclusions of the authors in the following abstract of their paper:

http://www.pnas.org/content/104/20/8287.short

Although biological cells are mostly transparent, they are phase objects that differ in shape and refractive index. Any image that is projected through layers of randomly oriented cells will normally be distorted by refraction, reflection, and scattering. Counterintuitively, the retina of the vertebrate eye is inverted with respect to its optical function and light must pass through several tissue layers before reaching the light-detecting photoreceptor cells. Here we report on the specific optical properties of glial cells present in the retina, which might contribute to optimize this apparently unfavorable situation. We investigated intact retinal tissue and individual Müller cells, which are radial glial cells spanning the entire retinal thickness. Müller cells have an extended funnel shape, a higher refractive index than their surrounding tissue, and are oriented along the direction of light propagation. Transmission and reflection confocal microscopy of retinal tissue in vitro and in vivo showed that these cells provide a low-scattering passage for light from the retinal surface to the photoreceptor cells. Using a modified dual-beam laser trap we could also demonstrate that individual Müller cells act as optical fibers. Furthermore, their parallel array in the retina is reminiscent of fiberoptic plates used for low-distortion image transfer. Thus, Müller cells seem to mediate the image transfer through the vertebrate retina with minimal distortion and low loss. This finding elucidates a fundamental feature of the inverted retina as an optical system and ascribes a new function to glial cells.

Charles Darwin, On the Origin of Species (1859), Chapter VI

To suppose that the eye with all its inimitable contrivances for adjusting the focus to different distances, for admitting different amounts of light, and for the correction of spherical and chromatic aberration, could have been formed by natural selection, seems, I freely confess, absurd in the highest degree.

http://webvision.med.utah.edu/book/part-ii-anatomy-and-physiology-of-the-retina/photoreceptors/

http://www.ic.ucsc.edu/~bruceb/psyc123/Vision123.html.pdf

http://www.detectingdesign.com/humaneye.html

http://www.creationstudies.org/Education/Darwin_and_the_eye.html

http://www.harunyahya.com/en/Books/592/darwinism-refuted/chapter/51

Human sight is an irreducibly complex system of interacting parts. These include all the physical components of the eye as well as the activity of the optic nerve attached to certain receptors in the brain.

The optic nerve is attached to the sclera, or white of the eye. The optic nerve, also known as cranial nerve II, is a continuation of the axons of the ganglion cells in the retina. There are approximately 1.1 million nerve fibers in each optic nerve. The optic nerve, which acts like a cable connecting the eye with the brain, is actually more like brain tissue than nerve tissue. In addition, the brain must effectively solve complex equations to reconstruct the real-world scene from the image projected onto the curved screen of the retina in the human eyeball.

This complex system is a combination of an intelligently designed camera, lens, and brain programming, all working together to enable us to see our world in incredible clarity.

None of these components has a function of its own, even in other systems. That is an interdependent system. It cannot arise in a stepwise evolutionary fashion, because the individual parts on their own would have no function.

Imagine having to use spherically shaped film instead of the conventional flat film in your camera. The images would be distorted, just like the reflections in those funny mirrors in the fun houses at state fairs and carnivals. That is exactly how the world is projected onto our curved retinas at the back of the human eye.

We manage to correct these images and see them accurately without distortion because the Creator has installed fast-running programs in the brain that instantly correct the distortions in the image, so that the world around us appears to be flawless, like a photograph.

Not only that, the human brain turns our eyes, which are already more complex and refined than the most advanced high-definition cameras available today, into biological computers that can estimate the size and distance of the objects seen. Objects are not measured as they appear on the retina; our brain acts as an advanced evaluation program, processing the physical data received by our sensory organs: it enlarges, reduces, and adjusts them precisely, so that the information is presented in a way that makes our sense of sight far superior to any pure instrument of physics.

Comparing the eye to a camera is an interesting analogy, because our sight is really superior to an instrument of pure physics. Our eyes can see in the darkest shadows as well as in the brightest sunlight by automatically adjusting their optical range of operation. They can see colors. They perceive white paper as white even when it is illuminated by bright light of varying colors, and they perceive other colors in essentially the same way. Color and shape are perceived as the same whether the object is close or far away, even if the lighting varies radically.
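On the camera side of this comparison, digital cameras attempt a much cruder version of keeping white paper white through automatic white balance. The Python sketch below implements one simple, well-known method, the gray-world assumption; it is offered only as an example of what a camera might do, not as a description of how the eye or brain achieves color constancy. The scene colors and the warm illuminant are hypothetical values.

```python
# Gray-world white balance: assume the average colour of the whole scene
# ought to be neutral grey, then rescale each RGB channel so that all three
# channel averages become equal.
Pixel = tuple[float, float, float]

def gray_world_balance(pixels: list[Pixel]) -> list[Pixel]:
    n = len(pixels)
    channel_means = [sum(p[c] for p in pixels) / n for c in range(3)]
    if any(m == 0 for m in channel_means):
        raise ValueError("a colour channel is completely dark; cannot rescale")
    target = sum(channel_means) / 3
    scales = [target / m for m in channel_means]
    return [(p[0] * scales[0], p[1] * scales[1], p[2] * scales[2]) for p in pixels]

# Hypothetical scene: white paper (1, 1, 1) and a mid-grey card (0.5, 0.5, 0.5)
# under a warm light that multiplies red by 1.2 and blue by 0.8.
illuminant = (1.2, 1.0, 0.8)
true_colours = [(1.0, 1.0, 1.0), (0.5, 0.5, 0.5)]
observed = [tuple(c * k for c, k in zip(colour, illuminant)) for colour in true_colours]

corrected = gray_world_balance(observed)
for before, after in zip(observed, corrected):
    print([round(v, 3) for v in before], "->", [round(v, 3) for v in after])
```

The correction works here only because the hypothetical scene really does average to grey; in a scene dominated by one color, such as green foliage, the gray-world assumption produces a visibly wrong result, which is a known limitation of this simple method.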

http://iaincarstairs.wordpress.com/2013/03/25/as-smart-as-molecules/

The invention of television was certainly ingenious and changed the face of the Earth. It relied on selenium, a material which converts photon stimulation into electrical signals. The German inventor Paul Nipkow experimented with it in the 1800s but found it unworkable due to the weak signal and rapid decay. It was Baird who, in the 1920s, with the advent of electrical amplifiers, realised that the signals all decayed at the same rate and that all that was required was a consistent amplification. Refining the process was to take up the remainder of his life.

The eye uses a similar system. Retinal, a small molecule which fits into the binding site of a large protein called opsin (together making up rhodopsin), absorbs a photon and changes shape, which switches the opsin into its active form. The chain reaction of chemicals and eventual electrical signals that follows, including feedback loops, timing mechanisms, amplifiers, and interpretive mechanisms in the brain, would fill a book.

Even modern television doesn’t improve on the devices contained within the retina, which are dealt with in greater detail elsewhere on this site. The chain of events which gives rise to sight is so important that the eyes use about one fifth of the body’s energy; the eyes are also constantly in vibratory motion, without which the signals would cease to be forwarded to the brain.

The eyes include their own immune system, variable-blood-flow heat sinks behind the retinal pigment epithelium (RPE) controlled by iris contraction, built-in sunglasses, a recycling depot, and separate circuits for motion, line detection and binocular perspective. And lastly, remember that all these molecular components are smaller than the wavelength of visible light. They transmit signals of light for us to use, but in their molecular world, they all work completely in the dark.

Priceless!

The eye: due to design, or evolution?

http://reasonandscience.heavenforum.org/t1653-the-human-eye-intelligent-designed#2563

http://www.arn.org/docs/glicksman/eyw_041201.htm

Evolutionary Simplicity?

Review of this and the last two columns clearly demonstrates:

the extreme complexity and physiological interdependence of many parts of the eyeball

the absolute necessity of many specific biomolecules reacting in exactly the right order to allow for photoreceptor cells and other neurons to transmit nervous impulses to the brain

the presence of not only an eyeball whose size is of the proper order to allow for focusing by the cornea and lens, but also a region in the retina (fovea) that is outfitted with the proper concentration of photoreceptor cells that are connected to the brain in a 1:1:1 fashion to allow for clear vision

that vision is dependent on a complex array of turned around, upside down, split-up, and overlapping messages, from over two million optic nerve fibers that course their way to the visual cortex causing a neuroexcitatory spatial pattern that is interpreted as sight

that scientists are blind to how the brain accomplishes the task of vision

The foregoing is likely to give most people pause before they subscribe to the theory of macroevolution and how it may apply to the development of the human eye and vision. How can one be so certain of an origins theory when one still doesn’t fully understand how something actually works? Most of what I’ve read by its supporters about this topic contains a lot of rhetoric and assumption without much detail and logical progression. It all seems just a bit premature and somewhat presumptuous.

Quite frankly, science just doesn’t have the tools to be able to definitively make this determination, yet. Will it ever have them? Maybe yes, maybe no. Until such time, I reserve the right to look upon evolutionary biologists’ explanations for the development of the human eye and the sensation of vision, with a large amount of skepticism, and as seeming overly simplistic and in need of a heavy dose of blind faith.

Human Eye Has Nanoscale Resolution

http://crev.info/2015/07/human-eye-has-nanoscale-resolution/

1. http://web.archive.org/web/20160322111142/http://goddidit.org/the-human-eye

2. http://creation.com/did-eyes-evolve-by-darwinian-mechanisms

Last edited by Admin on Fri Apr 13, 2018 7:44 pm; edited 22 times in total